[ForecastPFN] Synthetically-Trained Zero-Shot Forecasting

https://arxiv.org/pdf/2311.01933

https://github.com/abacusai/forecastpfn

(Nov 2023)

Abstract

The vast majority of time-series forecasting approaches require a substantial training dataset. However, many real-life forecasting applications have very few initial observations, sometimes just 40 or fewer. Thus, the applicability of most forecasting methods is restricted in data-sparse commercial applications. While there is recent work in the setting of very limited initial data (so-called ‘zero-shot’ forecasting), its performance is inconsistent depending on the data used for pretraining. In this work, we take a different approach and devise ForecastPFN, the first zero-shot forecasting model trained purely on a novel synthetic data distribution. ForecastPFN is a prior-data fitted network, trained to approximate Bayesian inference, which can make predictions on a new time series dataset in a single forward pass. Through extensive experiments, we show that zero-shot predictions made by ForecastPFN are more accurate and faster than those of state-of-the-art forecasting methods, even when the other methods are allowed to train on hundreds of additional in-distribution data points.

1. Introduction

Time-series forecasting is an important and long-studied problem that has attracted significant attention for many years [10, 15, 20, 34]. Time-series forecasting has a wide variety of applications such as healthcare [11, 23], economics [33, 50], climate science [6, 45], and renewable energy [22, 62]. Many forecasting algorithms have been proposed, including traditional statistical methods [4, 13], and more recently, deep learning methods [34, 35, 61, 63].

Nearly all time-series forecasting approaches (including both traditional methods and deep learning methods) require a substantial number of initial time-series datapoints in order to learn patterns and make future predictions. However, many real-world forecasting applications have very few initial observations, sometimes just 40 or fewer [7, 9, 18, 27]. This setting is deemed ‘zero-shot forecasting’ in prior work [43], in contrast to the typical setting in which hundreds or thousands of observations from the target series are used for training. While there is recent work on zero-shot forecasting [43], its performance is inconsistent depending on the dataset used for pretraining.

In this work, we take a different approach and devise ForecastPFN, the first zero-shot method trained purely on a novel synthetic data distribution. ForecastPFN is a prior-data fitted network (PFN) [39]; PFNs are a recently-proposed paradigm, in which a model is pretrained offline on synthetic data generated from a prior to approximate Bayesian inference. PFNs have recently been used in a breakthrough result for tabular datasets [25]. However, there are significant challenges when designing a general and flexible PFN in the forecasting setting, namely, constructing a general time-series distribution, and tuning an architecture and training scheme that can learn it.

We overcome these challenges first by designing a novel synthetic time-series distribution. We carefully design the synthetic distribution to be as general and diverse as possible, with a bias toward common patterns seen in real-world series, while keeping it learnable by a machine learning model. Specifically, we design a modular synthetic model which incorporates multi-scale seasonal trends, linear and exponential global trends, and a Weibull-based noise distribution, each of which is parameterized with enough variance to capture a diverse range of time series. Furthermore, because our synthetic training data consist of a ground-truth trend and a separate noise component, we can remove the noise from the labels when calculating the train loss, which significantly improves the training speed of our model. Next, we design a flexible transformer architecture that can scale across a wide range of time-series values. While many existing transformers for forecasting train with a fixed prediction length and only predict the next N timesteps into the future, ForecastPFN is trained to perform arbitrary queries (queries to any future timestep), allowing it to achieve zero-shot prediction for arbitrary prediction lengths.

We show that after a one-time, offline training phase on the synthetic dataset, ForecastPFN achieves strong zero-shot performance across a wide variety of real-world datasets, at a fraction of the runtime of other methods. ForecastPFN’s zero-shot predictions outperform state-of-the-art forecasting methods, even when these other methods are allowed to train on hundreds of additional in-distribution datapoints. For example, given a new time series, ForecastPFN makes future predictions after seeing only the input (typically the most recent ≈ 30 points of the series) and outperforms state-of-the-art methods that were allowed to train on an additional 300 historical points in the series (see Figure 1 and Figure 3). This remarkable result is due to the structure of our synthetic prior: by encoding multi-scale seasonal trends, global trends, and noise across a variety of parameters, our model learns how to forecast general time series. Finally, ForecastPFN is fast, producing predictions on a brand-new dataset in a single forward pass that takes 0.2 seconds. This is over 100 times faster than existing state-of-the-art transformer methods, which require expensive training procedures. Our codebase and our model are available at https://github.com/abacusai/forecastpfn.

Our contributions. We summarize our main contributions below.

• We introduce ForecastPFN, a prior-data fitted network for forecasting, trained purely on a novel synthetic data distribution. ForecastPFN is zero-shot: after its initial pretraining, it can make predictions on a brand new dataset with no training data from that dataset.

• Through extensive experiments across a variety of datasets, we demonstrate that ForecastPFN, without any retraining, outperforms state-of-the-art methods in low-resource settings, even when the other methods are allowed to train on additional in-distribution datapoints. Furthermore, ForecastPFN is fast, making predictions on a new dataset in a single forward pass.

2. Related Work

Time-series forecasting [10] has a multitude of applications throughout climate science [6, 45], healthcare [11, 23], business [8, 41], finance [32, 50], and renewable energy [22, 62]. A variety of approaches have been used for time-series forecasting [27, 34], including traditional statistical methods [4, 52] and deep learning methods [59, 61, 63].

ARIMA [4], a statistical method from the 1970s that is still popular today, builds an autoregressive model based on Markov processes. Another popular statistical method in practice is Prophet [54], which incorporates non-linear trends and multi-scale seasonality. Recently, deep learning methods for time-series forecasting have become popular [34]. While early deep learning methods made use of RNNs [12, 49], transformer models have become more common recently [31, 59, 61], following the success of transformers in NLP [56]. FEDformer [63] incorporates Fourier transforms and seasonal-trend decomposition into a transformer architecture. For a survey on deep learning methods for time-series forecasting, see [34, 35], and for transfer learning for time-series forecasting, see [57].

Many real-world forecasting applications have very few initial observations, sometimes just forty or fewer [7, 9, 18, 27]. However, there is much less work in this setting (referred to as ‘zero-shot’ forecasting [43]). Oreshkin et al. [43] use a model based on N-BEATS [42], which was state of the art at the time, training on a single real-world time-series dataset and testing on a different one. However, its performance varies substantially depending on which training dataset is used. Another zero-shot forecasting method [44] uses an RNN base, yet it is only compared empirically against traditional methods, and only for a fixed prediction length.

Recently, concurrent work [21] introduces new tokenization procedures for large language models (LLMs), showing that GPT-3 [5] and other foundation models can achieve very strong zero-shot performance. Interestingly, their work achieves zero-shot predictions by repurposing LLMs, while our work uses purely synthetic pretraining data that is specific to time-series forecasting.

Another notable concurrent work [2] introduces LC-PFN, a PFN for learning curve extrapolation. They show that LC-PFN achieves significantly better extrapolation compared to existing MCMC approaches and use it to speed up AutoML algorithms by up to six times. The setting of learning curve extrapolation is significantly different from time-series forecasting, resulting in a much different prior compared to our work. For an extended discussion on related work, see Appendix A.

3. Prior-Data Fitted Networks for Forecasting

In this section, we give background on prior-data fitted networks (PFNs), introduce our synthetic data distribution, and introduce ForecastPFN.

3.1. Background on Prior-Data Fitted Networks

3.2. Defining a Synthetic Prior for Time Series

Unlike prior work in forecasting, our model is not trained on any real-world data; it is trained purely on synthetic data. As discussed above, we assume that there is a prior distribution of time series, p(φ), from which real-world time series are derived. Intuitively, this distribution includes components such as periodic (weekly, monthly, and yearly) trends, global trends, and noise. We show that when we model data from the distribution described below, we can adequately capture aspects of time-series data in order to produce a high-performance, zero-shot forecasting model.

We model our synthetic data with the simple premise that time-series data have two independent components: an underlying series (ψ) and noise (z_t). We include time series at three scales: daily, monthly, and yearly, i.e., series where an observation is taken once a day, once a month, or once a year. Data for each periodicity are generated independently according to the following procedure. Here we describe daily series; the monthly and yearly series are described in Appendix D.

We model the underlying series as the product of a seasonal component and a trend component. Together with the noise, we treat these as three independent aspects of time-series data, so that the observed series is the product of trend and seasonality, multiplied by a noise factor. The trend component consists of a linear and an exponential part, with coefficients m_lin, c_lin, m_exp, c_exp. The seasonal component has weekly, monthly, and yearly parts with coefficients p_week, p_month, p_year. Finally, the noise is drawn from a Weibull distribution and normalized so that its expected value is 1, meaning that in expectation the noise does not change the seasonality or trend of the series. Formally, the observed series is

$$y_t = \psi(t) \cdot z_t = \mathrm{trend}(t) \cdot \mathrm{seasonal}(t) \cdot z_t, \qquad \mathbb{E}[z_t] = 1.$$

We choose multiplicative noise to better balance the amount of signal relative to noise across all series. With additive noise, the impact of the noise would depend on the ratio between the scale of the base series and the scale of the noise. Since our base series have linear and exponential terms, additive noise would cause the signal-to-noise ratio to vary substantially across series depending on the trend component. Furthermore, the Weibull distribution is a simple and natural way to parameterize between Gaussian and exponential distributions, two types of distributions that frequently come up in real-world time series.
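The generative structure described above can be sketched in a few lines of NumPy. This text does not give the exact functional forms or the coefficient distributions, so everything below except the overall structure (trend times seasonality times mean-one Weibull noise) is a hypothetical illustration: the linear-times-exponential trend, the sinusoidal weekly, monthly, and yearly factors, the sampling ranges, and the helper names `weibull_noise` and `sample_daily_series` are all assumptions. To make the expectation of the noise exactly 1, the sketch centers the Weibull draw on its analytic mean, Γ(1 + 1/k).

```python
import numpy as np
from math import gamma


def weibull_noise(n, k, m_noise, rng):
    """Multiplicative noise z_t with E[z_t] = 1, built from Weibull(shape=k, scale=1)."""
    w = rng.weibull(k, size=n)
    mean_w = gamma(1.0 + 1.0 / k)            # mean of a Weibull(k, 1) variable
    return 1.0 + m_noise * (w - mean_w)      # centered, scaled, shifted to mean 1


def sample_daily_series(n=200, m_noise=0.1, seed=None):
    """Return (y, psi): a noisy daily series and its noise-free component psi.

    Hypothetical parameterization; only trend * seasonal * noise follows the text.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n, dtype=float)

    # Trend: a linear part times an exponential part (assumed form).
    m_lin, c_lin = rng.normal(0.0, 0.002), rng.uniform(0.5, 2.0)
    m_exp, c_exp = rng.normal(0.0, 0.002), rng.uniform(0.5, 2.0)
    trend = (c_lin + m_lin * t) * (c_exp * np.exp(m_exp * t))

    # Seasonality: weekly, monthly, and yearly factors multiplied together.
    seasonal = np.ones(n)
    for period in (7.0, 30.5, 365.25):
        amp = rng.uniform(0.0, 0.3)          # plays the role of p_week, p_month, p_year
        phase = rng.uniform(0.0, 2.0 * np.pi)
        seasonal *= 1.0 + amp * np.sin(2.0 * np.pi * t / period + phase)

    psi = trend * seasonal                   # underlying (noise-free) series
    k = rng.uniform(1.0, 5.0)                # assumed range for the Weibull shape
    z = weibull_noise(n, k, m_noise, rng)
    return psi * z, psi


y, psi = sample_daily_series(200, seed=0)
```

With k = 1 the Weibull draw is exponential, and for k around 3 to 4 it becomes roughly symmetric and bell-shaped, which is the Gaussian-to-exponential range the text refers to. Because the noise multiplies ψ, its relative size is the same whether the trend is flat or growing exponentially.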

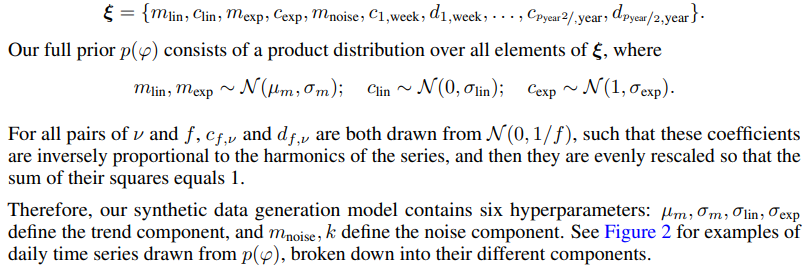

The above synthetic data generation model is extremely general and can capture a variety of time series we would encounter in real world forecasting applications. The model contains a number of parameters, which we denote as

3.3. ForecastPFN: a PFN for Zero-Shot Forecasting

Now we give the details for designing ForecastPFN, the first zero-shot forecasting method trained purely on synthetic data. We focus on the novel components inspired by the extreme challenge of training across a wide diversity of time-series scales with a single model.

Architecture Details.

As with the original PFNs [2, 25, 39], we use a transformer [56] as the base architecture. We use an encoder-based transformer in which each encoder layer consists of one multi-head attention layer and two feedforward layers (see Appendix E.2). This is in contrast to prior work on zero-shot forecasting, which used residual networks [43] or recurrent neural networks [44].

The transformer accepts data in the form of tokens (t, y_t). The timestamp t in each input token is represented by temporal features such as the year, month, day, day of the week, and day of the year. The series values are scaled as described under Robust Scaling below. A query for ForecastPFN is extremely general: the transformer takes in a set of tokens (t, y_t), along with a query consisting of a single, arbitrary future date, and then predicts the value at that date. Thus, the input to the model is a set of tokens and the output is a single prediction at a future time specified by the user. The input does not need to be a fixed size, and the input tokens do not need to be contiguous.
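The token-and-query interface can be illustrated with a small sketch. It assumes that the calendar features are passed as raw integers, which is a simplification: how ForecastPFN actually normalizes or embeds these features is not stated here, and the helper names `time_features` and `build_query` are hypothetical. The example history deliberately skips two days to show that the tokens need not be contiguous.

```python
from datetime import date

import numpy as np


def time_features(d: date) -> np.ndarray:
    """Calendar features named in the text: year, month, day, day of week, day of year."""
    return np.array(
        [d.year, d.month, d.day, d.weekday(), d.timetuple().tm_yday], dtype=float
    )


def build_query(history, query_date):
    """history: iterable of (date, value) pairs, of any length and possibly with gaps.

    Returns (tokens, query): one row [time features..., value] per observation,
    plus the time features of the single future date being asked about.
    """
    tokens = np.array([np.append(time_features(d), y) for d, y in history])
    query = time_features(query_date)
    return tokens, query


# Jan 3 and Jan 4 are missing; the query date is arbitrary.
history = [(date(2024, 1, 1), 10.0), (date(2024, 1, 2), 12.0), (date(2024, 1, 5), 11.0)]
tokens, query = build_query(history, date(2024, 2, 1))
```

Because the target date travels with the query rather than being implied by a position in an output vector, the same model can be asked about any horizon.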

The generality of ForecastPFN queries allows it to perform well at test time, on a very diverse range of datasets and settings. This is also in stark contrast to existing transformer models for forecasting, which typically are only set up to predict the next N steps in the current sequence.

Robust Scaling.

One of the most challenging technical problems when training ForecastPFN is handling the extreme range of the scales of the time series, both in terms of the absolute values and the trends. This is a challenge both at training time and when computing the prediction of a time series, and it partially stems from the inclusion of the multiplicative, exponential, and noise terms in our synthetic generation model. Conventional scaling techniques such as standardization (Z-score normalization) and min-max scaling are insufficient: not only does the absolute range differ greatly across training samples, but there is also large diversity in the number of outliers and in the scale of the pattern (i.e., periodicity and global trends) relative to the absolute range.

To handle these challenges, we apply three robust-scaling steps: (1) mask out any missing values, (2) standardize the data using only the non-outlier datapoints (where outliers are defined as points beyond 2 sigma), and (3) clip all 3-sigma outliers. This procedure puts all time series into a relatively consistent range while removing outliers that cause exploding gradients. Furthermore, when computing the loss on a time series, we scale the mean squared error (MSE) based on the maximum of the (scaled) input. The resulting loss function lies between MSE, which gives too much weight to series with a high range, and mean squared percentage error (MSPE), which gives too much weight to series with a small range. We show in Section 4 that robust scaling significantly boosts performance.
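A minimal NumPy sketch of the three steps, under stated assumptions: "standardize on the non-outliers" is read as computing the mean and standard deviation only over points within 2 sigma of the full-sample mean, "clip 3-sigma outliers" is read as clipping to ±3 in the standardized units, and masked slots are filled with 0. The exact form of the loss rescaling is not given in the text, so `scaled_mse`, which divides MSE by the squared maximum absolute scaled input, is a hypothetical choice. Both function names are illustrative.

```python
import numpy as np


def robust_scale(x):
    """Sketch of the three-step robust scaling; assumes at least one non-NaN value.

    Returns the scaled series, the mask of observed values, and the (mean, std) used.
    """
    x = np.asarray(x, dtype=float)
    observed = ~np.isnan(x)                      # (1) mask out missing values
    v = x[observed]

    # (2) standardize using only the non-outliers (points within 2 sigma).
    mu, sd = v.mean(), v.std()
    inliers = v[np.abs(v - mu) <= 2.0 * sd] if sd > 0 else v
    m, s = inliers.mean(), inliers.std()
    s = s if s > 0 else 1.0                      # guard against constant series
    z = (x - m) / s

    # (3) clip 3-sigma outliers (here: to +/-3 in standardized units).
    z = np.clip(z, -3.0, 3.0)
    z[~observed] = 0.0                           # hypothetical fill for masked slots
    return z, observed, (m, s)


def scaled_mse(pred, target, scaled_input):
    """MSE divided by the squared max |scaled input| (assumed form of the rescaling)."""
    scale = max(float(np.max(np.abs(scaled_input))), 1e-8)
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2)) / scale ** 2


z, observed, (m, s) = robust_scale([1, 2, 3, 4, 5, np.nan, 1000])
```

In the example, the value 1000 is excluded when computing the mean and standard deviation (so they reflect only 1 to 5), and it is then clipped to 3 instead of dominating the scaled series.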

Training Details.

We train ForecastPFN using the synthetic prior p(φ) defined in Section 3.2. During early-stage model development, we chose the hyperparameters µ_m, σ_m, σ_lin, σ_exp, m_noise, and k to create the most diverse data distribution possible while still allowing the model to train without diverging or stalling. For example, when the parameters defining the noise distribution become too large, the training data is too noisy and the model cannot converge. We specifically note that (1) all model development was done before evaluating on the seven real-world datasets defined in the next section, and (2) all experiments were done with a single ForecastPFN model (specifically, after developing the model, we trained it once and then used it for all experiments in the next section). Crucially, since we use synthetic training data with a ground-truth trend and a separate noise component, we can remove the noise when calculating the train loss. This significantly improves the training speed of the model, because the noise is independent of time and therefore cannot be learned (Appendix E.5).
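The noise-free labels can be sketched as follows: the model input is a window of the noisy series y, while the label is read from the underlying series ψ at the queried position. The helper `make_task` and the stand-in series are hypothetical; the stand-in uses Gaussian multiplicative noise purely for brevity, not the Weibull noise of the prior.

```python
import numpy as np


def make_task(y, psi, start, ell, horizon):
    """Build one training task from a synthetic series.

    y:   noisy observed series (model input)
    psi: noise-free underlying series (source of the training label)
    The input is y[start:start + ell]; the label is psi at the position
    `horizon` steps after the window's last point.
    """
    x = y[start:start + ell]
    target_idx = start + ell - 1 + horizon
    return x, target_idx, psi[target_idx]


rng = np.random.default_rng(0)
psi = np.linspace(1.0, 2.0, 50)                    # stand-in underlying series
y = psi * (1.0 + 0.1 * rng.standard_normal(50))   # stand-in noisy observations
x, idx, label = make_task(y, psi, start=10, ell=20, horizon=5)
```

Training against `psi[idx]` instead of `y[idx]` removes an irreducible, time-independent error term from the loss, which is the intuition behind the faster training reported above.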

We set the input length ℓ = 100 and a maximum prediction horizon of 10 steps into the future (note: in the next section, we see that ForecastPFN is able to make accurate predictions significantly beyond these settings). We generate 300 000 synthetic series as training data, each of length 200, and use a sliding window of size 100 to obtain 101 prediction tasks per generated series. Each epoch consists of 1 024 000 tasks, and we trained the transformer for 600 epochs with the Adam optimizer [30] on a single Tesla V100 16GB GPU, which took 30 hours. This training is done offline, and only once, and we open-source the trained model at https://github.com/abacusai/forecastpfn. For the full development, implementation, and training details, see Appendix E.
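The task counts follow from simple sliding-window arithmetic: a series of length 200 contains 200 − 100 + 1 = 101 windows of length 100. The sketch below checks this with NumPy's `sliding_window_view` on a stand-in series and computes the size of the resulting task pool. The per-epoch figure uses the batch size and steps per epoch given in Appendix E.3. It shows that each epoch samples roughly one thirtieth of the roughly 30 million available tasks.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

series = np.arange(200.0)                      # stand-in for one synthetic series of length 200
windows = sliding_window_view(series, 100)     # every contiguous window of length 100

tasks_per_series = windows.shape[0]            # 200 - 100 + 1
total_tasks = 300_000 * tasks_per_series       # over all generated series
tasks_per_epoch = 1024 * 1000                  # tasks per step x steps per epoch (Appendix E.3)
```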

4. Experiments

We demonstrate the power of ForecastPFN by comparing it to various high-performing forecasting models across several datasets. We compare all models across different training time budgets, and we compare the zero-shot performance of ForecastPFN to other models with different data budgets.

Algorithmic Baselines.

We compare against three simple numerical baselines: Last, Mean, and SeasonalNaive [27]; two high-performing traditional methods: ARIMA [4] and Prophet [54]; three state-of-the-art transformer-based methods: FEDformer [63], Autoformer [59], and Informer [61]; a vanilla Transformer method [56]; and one state-of-the-art zero-shot method: Meta-N-BEATS [43]. All of the methods were implemented using their default hyperparameters and official codebase, with the exception of ARIMA (which has no ‘official’ codebase), for which we use pmdarima [52].

Benchmark Datasets.

To ensure a fair comparison, we evaluate on seven popular, real-world datasets across energy systems, economics, traffic, and weather: ECL (Electricity Consuming Load) [55], ETT 1 and 2 (Electricity Transformer Temperature) [61], Exchange [33], Illness [19], Traffic [46], and Weather [1]. These datasets are standard for benchmarking the performance of forecasting methods and are the same ones used by many of our baselines [43, 59, 61, 63]. The datasets are described in Appendix E.

Experimental Setup.

The two zero-shot methods, ForecastPFN and Meta-N-BEATS, were both trained offline before the evaluations on the benchmark datasets. We train ForecastPFN once on our synthetic data, as described in Section 3, and that model is then used for all evaluations in this paper. For Meta-N-BEATS, we use the best version described in their paper [43], which is the one trained on M4 [36]. However, since its prediction length must be specified upfront, we train several Meta-N-BEATS models, one for each prediction length considered below. For each time series, the zero-shot models see only the input of length 36 and then predict future timesteps. These training regimes differ from those of the non-zero-shot models, which are trained for each configuration.

We compare the zero-shot methods to the nine other, non-zero-shot methods described above, even though this comparison is not fair to the zero-shot methods: the non-zero-shot methods are allowed to train on earlier portions of the time series used at test time, as is common in standard forecasting algorithms. We explore the performance of these algorithms under resource constraints in two distinct directions: by restricting the training time budget and by restricting the training data budget given to the non-zero-shot methods. We restrict the data budget of the non-zero-shot methods to lengths from 50 to 500, and their time budget to wall-clock budgets from 1 to 120 seconds, where timing is calculated after data loading and only during the training loop.

These two restrictions follow naturally from the ways in which forecasting datasets and applications are constrained in the real world. Many real-world forecasting applications have very few initial observations [7, 9, 18, 27]. For example, many businesses need demand forecasts or customer churn forecasts for a large number of products or customers, respectively, which may consist of only 100-point daily series or 36-point monthly series. There is also a wide range of applications that require a low time (or computational) budget in resource-constrained environments, such as on a CPU or on edge devices. For example, forecasting may be needed to predict energy demand in developing countries. Another application is a forecasting data-exploration tool, which lets users see instant forecast visualizations as they navigate their dataset.

We also test all algorithms with different prediction lengths, i.e., the number of steps into the future an algorithm must predict. Most deep-learning-based algorithms require this hyperparameter to be set before training, and the prediction length is then encoded into the algorithm’s architecture. This is true for FEDformer, Informer, Autoformer, the vanilla transformer, and Meta-N-BEATS, so we retrain these models for each experiment with a new prediction length. We choose prediction lengths from 6 to 48, as in prior work [63]. At test time, all algorithms are given series with an input length of 36 and asked to predict into the future at these variable prediction lengths. For example, suppose that we have a time series $\{(t, y_t)\}_{t=1}^{600}$, a data budget of $x$, and a prediction length of $\ell$. We allow algorithms to use 10% of their data budget for validation. All non-zero-shot methods are allowed to train on $\{(t, y_t)\}_{t=500-x}^{500}$. Then, at test time, all algorithms see the 36 input data points $\{(t, y_t)\}_{t=501}^{536}$ and predict the next $\ell$ timesteps, $t = 537$ to $t = 536 + \ell$.
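The index bookkeeping in this example can be made concrete with a short sketch using 1-indexed timesteps as in the text. Two details are assumptions rather than statements from the text. First, the training range is read as exactly x points ending at t = 500. Second, the validation set is taken to be the last 10% of that range. The helper name `split_timesteps` is hypothetical.

```python
def split_timesteps(x, ell, train_end=500, n_input=36, val_frac=0.1):
    """Return (fit, val, test_input, targets) as lists of 1-indexed timesteps.

    Assumptions: the data budget is the x points ending at t = train_end,
    and validation uses the last val_frac of that budget.
    """
    budget = list(range(train_end - x + 1, train_end + 1))
    n_val = int(round(val_frac * x))
    fit, val = budget[:x - n_val], budget[x - n_val:]
    test_input = list(range(train_end + 1, train_end + n_input + 1))        # t = 501..536
    targets = list(range(train_end + n_input + 1, train_end + n_input + ell + 1))
    return fit, val, test_input, targets


fit, val, test_input, targets = split_timesteps(x=100, ell=6)
```

Every method, zero-shot or not, sees the same 36 test inputs and is scored on the same ℓ targets. Only the non-zero-shot methods additionally get the `fit` and `val` ranges.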

We conduct training runs for every combination (Cartesian product) of prediction length and data budget, as well as every combination of prediction length and time budget. We repeat these runs on every dataset and for every algorithm. We conduct each run five times with different random seeds, varying the algorithm’s internal randomness. However, ForecastPFN, Meta-N-BEATS, ARIMA, Prophet, and the simple baselines are deterministic, so they are evaluated with only one seed per run.

We plot the MSE in this section and give additional metrics in Appendix E. To aggregate results across all datasets and random seeds, for each experiment we count the number of ‘wins’ (rank of 1) for each algorithm, as is commonly done in prior work [25, 39]. We plot the performance across data and time budgets in Figure 3, aggregated over datasets and prediction lengths, and tabulate the MSE of each model on each dataset in Table 1. Additionally, we plot the change in performance across prediction lengths for each data budget in Figure 4 and in Appendix E. Error bars show one standard deviation around the mean across training runs.
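Win counting reduces each experiment to a single rank-1 finish. A minimal sketch follows. The text does not say how ties are handled, so awarding a win to every tied algorithm is an assumption. The experiment ids, algorithm names, and MSE values in the example are made up purely to exercise the function and are not results from the paper.

```python
from collections import Counter


def count_wins(results):
    """results: {experiment_id: {algorithm: mse}} -> Counter of rank-1 finishes.

    Assumption: if several algorithms tie for the lowest MSE, each gets a win.
    """
    wins = Counter()
    for scores in results.values():
        best = min(scores.values())
        for algo, mse in scores.items():
            if mse == best:
                wins[algo] += 1
    return wins


# Toy input with placeholder names and values.
results = {
    "e1": {"A": 0.10, "B": 0.20, "C": 0.30},
    "e2": {"A": 0.40, "B": 0.25, "C": 0.30},
    "e3": {"A": 0.50, "B": 0.50, "C": 0.90},
}
wins = count_wins(results)
```

Counting wins instead of averaging MSE keeps datasets with very large error scales from dominating the aggregate.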

Results and Discussion.

We find that ForecastPFN significantly outperforms all ten other methods in the low-data-budget and low-time-budget settings (Figure 3). Specifically, ForecastPFN is the best architecture by number of MSE wins for time budgets from 1 to 30 seconds and for data budgets from 50 to 250. In the settings with higher data or time budgets, ForecastPFN is still competitive with state-of-the-art methods. This pattern also holds across prediction lengths (Figure 4): ForecastPFN is the outright winner in nearly every prediction length for data budgets 50, 100, and 150, and is either outright best or very competitive in nearly every prediction length for budgets 200, 250, and 300. Additionally, Table 1 shows that ForecastPFN achieves the lowest average MSE on more datasets than any other algorithm at a data budget of 50, and remains competitive at a data budget of 500. We also find that the simple baselines, SeasonalNaive and Last, often take second place behind ForecastPFN in the settings with the least training data and runtime. This is not unexpected and reflects the difficulty of predicting a series when given very little training data or training time.

The remarkable performance of ForecastPFN comes despite the fact that, as explained above, the zero-shot methods are at a disadvantage, because the other methods were allowed to train on data from earlier in the time series. When ForecastPFN is compared to Meta-N-BEATS, the only fair comparison, ForecastPFN strictly outperforms Meta-N-BEATS across all settings. We believe these strong results are due to our synthetic prior: by encoding multi-scale seasonal trends, global trends, and noise across a variety of parameters, ForecastPFN learns very strong prior knowledge about the common patterns in time series. While ≈ 250 datapoints of a series contain enough complexity and noise that training-based algorithms struggle to make accurate predictions when learning the series ‘from scratch’, ForecastPFN’s prior knowledge from synthetic series is significant enough to make better predictions when given access to only the 36 input points.

Furthermore, ForecastPFN is significantly faster than the other transformer-based methods such as FEDformer, Informer, and Autoformer. ForecastPFN makes predictions in a single forward pass taking just 0.2 seconds; to reach the same accuracy as ForecastPFN, the other transformer-based methods need over 100 times more runtime.

Ablation Studies.

Since ForecastPFN takes 30 GPU hours to train, we do not conduct substantial ablation studies. However, we are able to investigate two important aspects of the ForecastPFN algorithm: our custom scaler and our noise model.

First, we investigate the impact of robust scaling (described in Section 3.3) on the performance of ForecastPFN by comparing it to min-max scaling. We conduct a training run of ForecastPFN with each scaler and plot the validation MSE loss on the synthetic dataset in Figure 5. The robust scaler clearly achieves a lower validation loss throughout training. We conclude that our robust scaler mitigates the effect of extreme values: while extreme values make it harder for the model to differentiate small-scale trends in a series, our robust scaler allows for better, faster convergence.

Finally, we investigate the impact of noise in our synthetic training data generation process (described in Section 3.2). We trained ForecastPFN with three scales of our noise parameter m_noise: 1 (original ForecastPFN), 2/3 (low noise), and 1/6 (lowest noise). We do not change the scale of the noise in the validation data. In Table 2, we report the train and validation MSE on synthetic data for each model. Recall that we remove noise from the ground-truth targets of the training data, a design decision that improves performance; this is why the train and validation losses are on different scales. We also look at removing the noise entirely in Appendix E.5 and come to similar conclusions about the level of noise. We conclude from this ablation that the noise parameters chosen for our experiments yield robustly low train and validation losses, validating our approach.

5. Conclusions, Limitations, and Future Work

In this work, we introduced ForecastPFN, the first zero-shot forecasting model that is trained purely on synthetic data. Our novel synthetic data distribution incorporates multi-scale seasonal trends, linear and exponential global trends, and a noise distribution, capturing an extremely diverse range of time series. ForecastPFN is a prior-data fitted network, trained once, offline, to approximate Bayesian inference, which can make predictions on a new time series dataset in a single forward pass. Through extensive experiments, we show that zero-shot predictions made by ForecastPFN are more accurate and faster than those of state-of-the-art forecasting methods, even when the other methods are allowed to train on hundreds of additional in-distribution data points.

Limitations and Future Work.

While ForecastPFN has shown remarkable success in the zero-shot setting, there are still limitations as well as exciting directions for future work. Due to the vanilla transformer architecture, ForecastPFN is limited by its training data to time series of fewer than 1000 points. However, given the recent advances in scaling transformers [3, 14, 31, 53], this is not an inherent limitation of ForecastPFN. Currently, ForecastPFN only works for univariate time series. Once again, this is not an inherent limitation, and designing ForecastPFN for multivariate time series is a promising direction for future work. Incorporating exogenous features is another interesting idea for follow-up work.

Our synthetic prior was created to focus on ‘human-like’ or ‘earth-like’ time series (daily, weekly, and yearly periods), so ForecastPFN may not perform well on uncommon periods such as 41, 89, or 97. ForecastPFN also only approximates the posterior predictive distribution and makes pointwise predictions; outputting probabilistic predictions is an exciting area for future work. Finally, in contrast to existing forecasting methods, ForecastPFN is particularly well suited to handling missing data (including large gaps) or varying time frequencies within the same series. An exciting future direction is to explore forecasting time series whose input contains large gaps or varying frequencies.

E. Additional Experimental Details

E.1. ForecastPFN Development

We estimate that the total computational budget used in this project, from initial research to running all the final evaluations, is 500 GPU hours. Our computation consisted mainly of designing the architecture and the synthetic data distribution. In this design phase, we used the train loss on the synthetic data as a signal, because many synthetic data distributions and architectures prevent the model from training (for example, setting the noise parameter too high). We also used the synthetic held-out validation set to make decisions, such as in Figure 5 and Appendix E.5.

Training our ForecastPFN model takes 30 GPU hours on a single Tesla V100 16GB GPU. We emphasize that this training is done only once, and we have released the trained ForecastPFN model.

As an ablation, we tried to achieve non-trivial zero-shot performance with the FEDformer architecture. However, despite significant effort, we were unable to achieve non-degenerate performance with it.

E.2. Implementation Details

ForecastPFN Implementation Details.

We use an encoder-based transformer architecture, with two encoders. Each encoder consists of a multi-head attention layer with four heads, and two feedforward layers. The first feedforward layer is size 32 times the embedding dimension, and the second feedforward layer is size 8 times the embedding dimension.

Baseline Implementation Details.

We compare ForecastPFN against ARIMA [4], Autoformer [59], FEDformer [63], Informer [61], Meta-N-BEATS [43], Prophet [54], and a vanilla Transformer method [56]. All of the methods were implemented using their default hyperparameters and official codebase. For ARIMA (which was designed in the 1970s and has no ‘official’ codebase), we used pmdarima [52]. The full implementation details of all methods can be found in our open source codebase. Furthermore, we compared to three baselines: Last (prediction is the last seen value in the series), Mean (prediction is the mean value in the series), and SeasonalNaive (prediction is the mean value from all prior instances of the same day of the week).
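The three simple baselines are easy to state in code. The sketch assumes a contiguous daily series whose position index modulo 7 lines up with the day of the week, so "all prior instances of the same day of the week" becomes "all earlier positions with the same index mod 7". It also assumes the history holds at least one full week. The function names are hypothetical and are not taken from the released codebase.

```python
import numpy as np


def last_forecast(history, horizon):
    """Last: repeat the last observed value."""
    return np.full(horizon, float(history[-1]))


def mean_forecast(history, horizon):
    """Mean: repeat the mean of the observed series."""
    return np.full(horizon, float(np.mean(history)))


def seasonal_naive_forecast(history, horizon, period=7):
    """SeasonalNaive as described: mean of all earlier values on the same weekday.

    Assumes a contiguous daily series with len(history) >= period.
    """
    history = np.asarray(history, dtype=float)
    idx = np.arange(len(history))
    preds = []
    for h in range(horizon):
        target = len(history) + h                      # position being forecast
        same_day = history[idx % period == target % period]
        preds.append(same_day.mean())
    return np.array(preds)


history = np.arange(14.0)                              # two weeks of toy values 0..13
sn = seasonal_naive_forecast(history, horizon=2)
```

In the toy example, the first forecast step falls on the same weekday as positions 0 and 7, so it predicts their mean, 3.5.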

In the restricted-time-budget experiments of Section 4, we limit the non-zero-shot methods to wall-clock budgets from 1 to 120 seconds, where timing starts after data loading and covers only the training loop. After each training step, we stop training if the total budget has been exceeded. If an algorithm is unable to output any predictions within the time budget (such as ARIMA with a 1-second budget), we set its output to zeros.
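A minimal sketch of the budget rule, assuming a generic step function: the clock is checked after each step, so the final step may overrun the budget slightly. If no model is available, the forecast falls back to all zeros. Representing "no model" as `None` is a hypothetical convention. The example injects a fake clock so the run is deterministic, and the helper names are illustrative.

```python
import itertools
import time

import numpy as np


def train_with_budget(step_fn, budget_s, clock=time.perf_counter, max_steps=1_000_000):
    """Run training steps until the wall-clock budget is exceeded.

    The budget is checked after each step, as in the text, so the last
    step may overrun it. Returns the number of completed steps.
    """
    start = clock()
    steps = 0
    while steps < max_steps:
        step_fn()
        steps += 1
        if clock() - start > budget_s:
            break
    return steps


def forecast_or_zeros(model, horizon, predict):
    """Fallback: if no usable model exists within the budget, forecast zeros."""
    if model is None:
        return np.zeros(horizon)
    return np.asarray(predict(model, horizon), dtype=float)


# Deterministic example: a fake clock that advances 0.4 s per call.
ticks = itertools.count(0.0, 0.4)
steps = train_with_budget(lambda: None, budget_s=1.0, clock=lambda: next(ticks))
```

With a 1-second budget and 0.4 seconds per step, the third step ends at 1.2 seconds, and the check after that step stops the loop.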

E.3. Training Details

We generate 100 000 each of synthetic daily, weekly, and monthly series, each of length 200, and we use a sliding window of size 100 to obtain 101 prediction tasks per series. We sample 1024 tasks in a training step, and there are 1000 training steps in an epoch. We trained the transformer for 300 epochs on a V100 16GB GPU, which took 30 hours.

We use the Adam optimizer with a learning rate of 0.0001, and MSE loss. We set the maximum prediction into the future to be 10 times the frequency of the training series.