Maximum Likelihood Training of Score-Based Diffusion Models

https://arxiv.org/pdf/2101.09258

https://github.com/yang-song/score_flow

Oct 2021 (NeurIPS 2021)

Abstract

Score-based diffusion models synthesize samples by reversing a stochastic process that diffuses data to noise, and are trained by minimizing a weighted combination of score matching losses. The log-likelihood of score-based diffusion models can be tractably computed through a connection to continuous normalizing flows, but log-likelihood is not directly optimized by the weighted combination of score matching losses. We show that for a specific weighting scheme, the objective upper bounds the negative log-likelihood, thus enabling approximate maximum likelihood training of score-based diffusion models. We empirically observe that maximum likelihood training consistently improves the likelihood of score-based diffusion models across multiple datasets, stochastic processes, and model architectures. Our best models achieve negative log-likelihoods of 2.83 and 3.76 bits/dim on CIFAR-10 and ImageNet 32 × 32 without any data augmentation, on a par with state-of-the-art autoregressive models on these tasks.

1. Introduction

Score-based generative models [44, 45, 48] and diffusion probabilistic models [43, 19] have recently achieved state-of-the-art sample quality in a number of tasks, including image generation [48, 11], audio synthesis [5, 27, 37], and shape generation [3]. Both families of models perturb data with a sequence of noise distributions, and generate samples by learning to reverse this path from noise to data. Through stochastic calculus, these approaches can be unified into a single framework [48] which we refer to as score-based diffusion models in this paper.

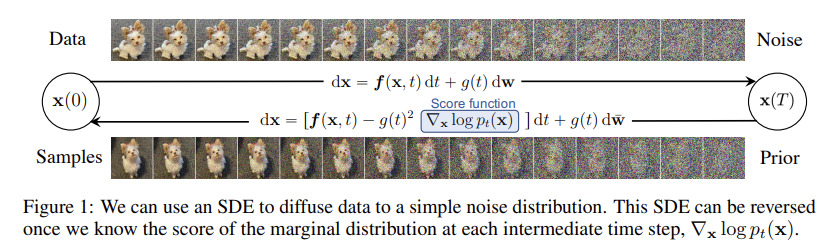

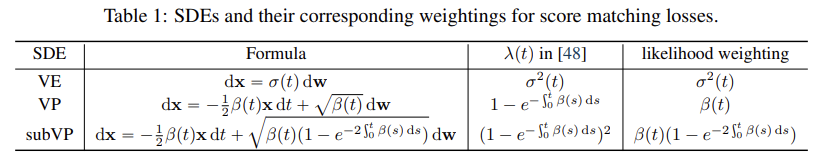

The framework of score-based diffusion models [48] involves gradually diffusing the data distribution towards a given noise distribution using a stochastic differential equation (SDE), and learning the time reversal of this SDE for sample generation. Crucially, the reverse-time SDE has a closed-form expression which depends solely on a time-dependent gradient field (a.k.a., score) of the perturbed data distribution. This gradient field can be efficiently estimated by training a neural network (called a score-based model [44, 45]) with a weighted combination of score matching losses [23, 56, 46] as the objective. A key advantage of score-based diffusion models is that they can be transformed into continuous normalizing flows (CNFs) [6, 15], thus allowing tractable likelihood computation with numerical ODE solvers.

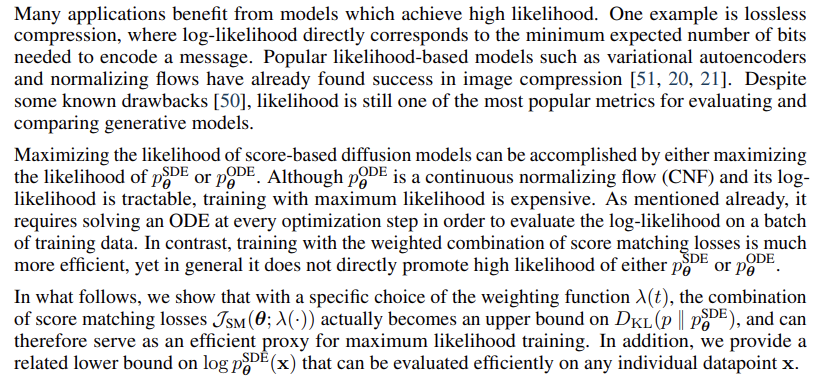

Compared to vanilla CNFs, score-based diffusion models are much more efficient to train. This is because the maximum likelihood objective for training CNFs requires running an expensive ODE solver for every optimization step, while the weighted combination of score matching losses for training score-based models does not. However, unlike maximum likelihood training, minimizing a combination of score matching losses does not necessarily lead to better likelihood values. Since better likelihoods are useful for applications including compression [21, 20, 51], semi-supervised learning [10], adversarial purification [47], and comparing against likelihood-based generative models, we seek a training objective for score-based diffusion models that is as efficient as score matching but also promotes higher likelihoods.

We show that such an objective can be readily obtained through slight modification of the weighted combination of score matching losses. Our theory reveals that with a specific choice of weighting, which we term the likelihood weighting, the combination of score matching losses actually upper bounds the negative log-likelihood. We further prove that this upper bound becomes tight when our score-based model corresponds to the true time-dependent gradient field of a certain reverse-time SDE. Using likelihood weighting increases the variance of our objective, which we counteract by introducing a variance reduction technique based on importance sampling. Our bound is analogous to the classic evidence lower bound used for training latent-variable models in the variational autoencoding framework [26, 39], and can be viewed as a continuous-time generalization of [43].

With our likelihood weighting, we can minimize the weighted combination of score matching losses for approximate maximum likelihood training of score-based diffusion models. Compared to weightings in previous work [48], we consistently improve likelihood values across multiple datasets, model architectures, and SDEs, with only slight degradation of Fréchet Inception distances [17]. Moreover, our upper bound on negative log-likelihood allows training with variational dequantization [18], with which we reach negative log-likelihood of 2.83 bits/dim on CIFAR-10 [28] and 3.76 bits/dim on ImageNet 32×32 [55] with no data augmentation. Our models present the first instances of normalizing flows which achieve comparable likelihood to cutting-edge autoregressive models.

2. Score-based diffusion models

Score-based diffusion models are deep generative models that smoothly transform data to noise with a diffusion process, and synthesize samples by learning and simulating the time reversal of this diffusion. The overall idea is illustrated in Fig. 1.

2.1. Diffusing data to noise with an SDE

2.2. Generating samples with the reverse SDE

3. Likelihood of score-based diffusion models

4. Bounding the likelihood of score-based diffusion models

4.1. Bounding the KL divergence with likelihood weighting

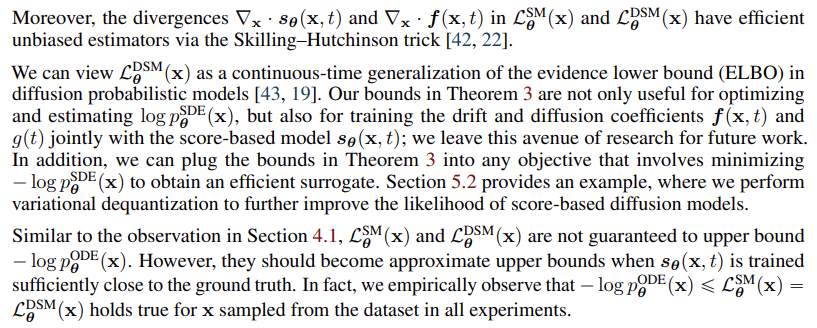

4.2. Bounding the log-likelihood on individual datapoints

4.3. Numerical stability

So far we have assumed that the SDEs are defined in the time horizon [0, T] in all theoretical analysis. In practice, however, we often face numerical instabilities when t → 0. To avoid them, we choose a small non-zero starting time ε >0, and train/evaluate score-based diffusion models in the time horizon [ ε, T] instead of [0, T]. Since ε is small, training score-based diffusion models with likelihood weighting still approximately maximizes their model likelihood. Yet at test time, the likelihood bound as computed in Theorem 3 is slightly biased, rendering the values not directly comparable to results reported in other works. We use Jensen’s inequality to correct for this bias in our experiments, for which we provide a detailed explanation in Appendix B.

4.4. Related work

Our result in Theorem 2 can be viewed as a generalization of De Bruijin’s identity ([49], Eq. 2.12) from its original differential form to an integral form. De Bruijn’s identity relates the rate of change of the Shannon entropy under an additive Gaussian noise channel to the Fisher information, a result which can be interpreted geometrically as relating the rate of change of the volume of a distribution’s typical set to its surface area. Ref. [2] (Lemma 1) builds on this result and presents an integral and relative form of de Bruijn’s identity which relates the KL divergence to the integral of the relative Fisher information for a distribution of interest and a reference standard normal. More generally, various identities and inequalities involving the (relative) Shannon entropy and (relative) Fisher information have found use in proofs of the central limit theorem [24]. Ref. [31] (Theorem 1) covers similar ground to the relative form of de Bruijn’s identity, but is perhaps the first to consider its implications for learning in probabilistic models by framing the discussion in terms of the score matching objective ([23], Eq. 2).

5. Improving the likelihood of score-based diffusion models

5.1. Variance reduction via importance sampling

5.2. Variational dequantization

5.3. Experiments

6. Conclusion

We propose an efficient training objective for approximate maximum likelihood training of score-based diffusion models. Our theoretical analysis shows that the weighted combination of score matching losses upper bounds the negative log-likelihood when using a particular weighting function which we term the likelihood weighting. By minimizing this upper bound, we consistently improve the likelihood of score-based diffusion models across multiple model architectures, SDEs, and datasets. When combined with variational dequantization, we achieve competitive likelihoods on CIFAR-10 and ImageNet 32×32, matching the performance of best-in-class autoregressive models.

Our upper bound is analogous to the evidence lower bound commonly used for training variational autoencoders. Aside from promoting higher likelihood, the bound can be combined with other objectives that depend on the negative log-likelihood, and also enables joint training of the forward and backward SDEs, which we leave as a future research direction. Our results suggest that score-based diffusion models are competitive alternatives to continuous normalizing flows which enjoy the same tractable likelihood computation but with more efficient maximum likelihood training.

Limitations and broader impact

Despite promising experimental results, we would like to emphasize that there is no theoretical guarantee that improving the SDE likelihood will improve the ODE likelihood, and this is explicitly a limitation of our work. Score-based diffusion models also suffer from slow sampling. In our experiments, the ODE solver typically need around 550 and 450 evaluations of the score-based model for generation and likelihood computation on CIFAR-10 and ImageNet respectively, which is considerably slower than alternative generative models like VAEs and GANs. In addition, the current formulation of score-based diffusion models only supports continuous data, and cannot be naturally adapted to discrete data without resorting to dequantization. Same as other deep generative models, score-based diffusion models can potentially be used to generate harmful media contents such as “deepfakes”, and might reflect and amplify undesirable social bias that could exist in the training dataset.