
https://arxiv.org/pdf/2208.11970

1. Introduction: Generative Models

Given observed samples x from a distribution of interest, the goal of a generative model is to learn to model its true data distribution p(x). Once learned, we can generate new samples from our approximate model at will. Furthermore, under some formulations, we are able to use the learned model to evaluate the likelihood of observed or sampled data as well.

There are several well-known directions in the current literature, which we introduce only briefly and at a high level. Generative Adversarial Networks (GANs) model the sampling procedure of a complex distribution, which is learned in an adversarial manner. Another class of generative models, termed "likelihood-based", seeks to learn a model that assigns a high likelihood to the observed data samples. This includes autoregressive models, normalizing flows, and Variational Autoencoders (VAEs). Another similar approach is energy-based modeling, in which a distribution is learned as an arbitrarily flexible energy function that is then normalized.

Score-based generative models are highly related; instead of learning to model the energy function itself, they learn the score of the energy-based model as a neural network. In this work we explore and review diffusion models, which as we will demonstrate, have both likelihood-based and score-based interpretations. We showcase the math behind such models in excruciating detail, with the aim that anyone can follow along and understand what diffusion models are and how they work.

2. Background: ELBO, VAE, and Hierarchical VAE

For many modalities, we can think of the data we observe as represented or generated by an associated unseen latent variable, which we can denote by random variable z. The best intuition for expressing this idea is through Plato’s Allegory of the Cave. In the allegory, a group of people are chained inside a cave for their entire lives and can only see the two-dimensional shadows projected onto a wall in front of them, which are generated by unseen three-dimensional objects passed before a fire. To such people, everything they observe is actually determined by higher-dimensional abstract concepts that they can never behold.

Analogously, the objects that we encounter in the actual world may also be generated as a function of some higher-level representations; for example, such representations may encapsulate abstract properties such as color, size, shape, and more. Then, what we observe can be interpreted as a three-dimensional projection or instantiation of such abstract concepts, just as what the cave people observe is actually a two-dimensional projection of three-dimensional objects. Whereas the cave people can never see (or even fully comprehend) the hidden objects, they can still reason and draw inferences about them; in a similar way, we can approximate latent representations that describe the data we observe.

Whereas Plato’s Allegory illustrates the idea behind latent variables as potentially unobservable representations that determine observations, a caveat of this analogy is that in generative modeling, we generally seek to learn lower-dimensional latent representations rather than higher-dimensional ones. This is because trying to learn a representation of higher dimension than the observation is a fruitless endeavor without strong priors. On the other hand, learning lower-dimensional latents can also be seen as a form of compression, and can potentially uncover semantically meaningful structure describing observations.

2.1. Evidence Lower Bound

Mathematically, we can imagine the latent variables and the data we observe as modeled by a joint distribution p(x, z). Recall that one approach to generative modeling, termed "likelihood-based", is to learn a model that maximizes the likelihood p(x) of all observed x. There are two ways we can manipulate this joint distribution to recover the likelihood of our observed data alone, p(x); we can explicitly marginalize out the latent variable z:

p(x) = ∫ p(x, z) dz   (1)

or, we could also appeal to the chain rule of probability:

p(x) = p(x, z) / p(z|x)   (2)

Directly computing and maximizing the likelihood p(x) is difficult because it either involves integrating out all latent variables z in Equation 1, which is intractable for complex models, or it involves having access to a ground truth latent encoder p(z|x) in Equation 2. However, using these two equations, we can derive a term called the Evidence Lower Bound (ELBO), which, as its name suggests, is a lower bound of the evidence. The evidence is quantified in this case as the log likelihood of the observed data. Maximizing the ELBO then becomes a proxy objective with which to optimize a latent variable model; in the best case, when the ELBO is powerfully parameterized and perfectly optimized, it becomes exactly equivalent to the evidence. Formally, the equation of the ELBO is:

E_{qφ(z|x)}[log (p(x, z) / qφ(z|x))]

To make the relationship with the evidence explicit, we can mathematically write:

log p(x) ≥ E_{qφ(z|x)}[log (p(x, z) / qφ(z|x))]

Here, qφ(z|x) is a flexible approximate variational distribution with parameters φ that we seek to optimize. Intuitively, it can be thought of as a parameterizable model that is learned to estimate the true distribution over latent variables for given observations x; in other words, it seeks to approximate the true posterior p(z|x). As we will see when exploring the Variational Autoencoder, as we increase the lower bound by tuning the parameters φ to maximize the ELBO, we gain access to components that can be used to model the true data distribution and sample from it, thus learning a generative model. For now, let us dive deeper into why the ELBO is an objective we would like to maximize.

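Let us begin by deriving the ELBO, using Equation 1:

log p(x) = log ∫ p(x, z) dz
= log ∫ p(x, z) qφ(z|x) / qφ(z|x) dz
= log E_{qφ(z|x)}[p(x, z) / qφ(z|x)]
≥ E_{qφ(z|x)}[log (p(x, z) / qφ(z|x))]   (8)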

In this derivation, we arrive at our lower bound directly by applying Jensen’s Inequality. However, this tells us little about what is actually going on under the hood; crucially, the proof gives no intuition for why the ELBO is a lower bound of the evidence, as Jensen’s Inequality hand-waves it away. Furthermore, simply knowing that the ELBO is a lower bound of the evidence does not tell us why we want to maximize it as an objective. To better understand the relationship between the evidence and the ELBO, let us perform another derivation, this time using Equation 2:

log p(x) = E_{qφ(z|x)}[log p(x)]
= E_{qφ(z|x)}[log (p(x, z) / p(z|x))]
= E_{qφ(z|x)}[log (p(x, z) qφ(z|x) / (p(z|x) qφ(z|x)))]
= E_{qφ(z|x)}[log (p(x, z) / qφ(z|x))] + DKL(qφ(z|x) ‖ p(z|x))   (15)
≥ E_{qφ(z|x)}[log (p(x, z) / qφ(z|x))]

The first step uses the fact that qφ(z|x) integrates to one, so taking the expectation of the constant log p(x) leaves it unchanged.

From this derivation, we clearly observe from Equation 15 that the evidence is equal to the ELBO plus the KL Divergence between the approximate posterior qφ(z|x) and the true posterior p(z|x). In fact, it was this KL Divergence term that was magically removed by Jensen’s Inequality in Equation 8 of the first derivation. Understanding this term is the key to understanding not only the relationship between the ELBO and the evidence, but also the reason why optimizing the ELBO is an appropriate objective at all.

Firstly, we now know why the ELBO is indeed a lower bound: the difference between the evidence and the ELBO is a KL term, which is always non-negative, thus the value of the ELBO can never exceed the evidence.

Secondly, we explore why we seek to maximize the ELBO. Having introduced latent variables z that we would like to model, our goal is to learn this underlying latent structure that describes our observed data. In other words, we want to optimize the parameters of our variational posterior qφ(z|x) to exactly match the true posterior distribution p(z|x), which is achieved by minimizing their KL Divergence (ideally to zero). Unfortunately, it is intractable to minimize this KL Divergence term directly, as we do not have access to the ground truth p(z|x) distribution. However, notice that on the left hand side of Equation 15, the likelihood of our data (and therefore our evidence term log p(x)) is always a constant with respect to φ, as it is computed by marginalizing out all latents z from the joint distribution p(x, z) and does not depend on φ whatsoever. Since the ELBO and KL Divergence terms sum up to a constant, any maximization of the ELBO term with respect to φ necessarily invokes an equal minimization of the KL Divergence term. Thus, the ELBO can be maximized as a proxy for learning how to perfectly model the true latent posterior distribution; the more we optimize the ELBO, the closer our approximate posterior gets to the true posterior. Additionally, once trained, the ELBO can be used to estimate the likelihood of observed or generated data as well, since it is learned to approximate the model evidence log p(x).
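The decomposition log p(x) = ELBO + DKL(qφ(z|x) ‖ p(z|x)) can be checked numerically on a toy model. The sketch below is a minimal illustration that assumes a hypothetical latent variable z with only three discrete values, so that p(x) and the true posterior can be computed exactly; the probabilities are arbitrary and not from the text.

```python
import numpy as np


def elbo_decomposition(p_z, p_x_given_z, q_z):
    """Return (log p(x), ELBO, KL(q || p(z|x))) for one fixed observation x.

    p_z:         prior p(z) over K discrete latent values
    p_x_given_z: likelihood p(x|z) of the fixed observation x for each z
    q_z:         approximate posterior q(z|x) over the same K values
    """
    joint = p_z * p_x_given_z               # p(x, z) for each value of z
    evidence = joint.sum()                  # p(x) = sum_z p(x, z), the discrete Equation 1
    posterior = joint / evidence            # true posterior p(z|x)
    elbo = np.sum(q_z * (np.log(joint) - np.log(q_z)))
    kl = np.sum(q_z * (np.log(q_z) - np.log(posterior)))
    return np.log(evidence), elbo, kl


p_z = np.array([0.5, 0.3, 0.2])
p_x_given_z = np.array([0.1, 0.6, 0.3])
q_z = np.array([0.2, 0.5, 0.3])

log_px, elbo, kl = elbo_decomposition(p_z, p_x_given_z, q_z)
print(np.isclose(log_px, elbo + kl), elbo <= log_px)  # True True
```

Passing the exact posterior as `q_z` drives the KL term to zero, and the ELBO then equals log p(x). Any other choice leaves a positive gap.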

2.2. Variational Autoencoders

In the default formulation of the Variational Autoencoder (VAE) [1], we directly maximize the ELBO. This approach is variational, because we optimize for the best qφ(z|x) amongst a family of potential posterior distributions parameterized by φ. It is called an autoencoder because it is reminiscent of a traditional autoencoder model, where input data is trained to predict itself after undergoing an intermediate bottlenecking representation step. To make this connection explicit, let us dissect the ELBO term further:

E_{qφ(z|x)}[log (p(x, z) / qφ(z|x))] = E_{qφ(z|x)}[log (pθ(x|z) p(z) / qφ(z|x))]
= E_{qφ(z|x)}[log pθ(x|z)] + E_{qφ(z|x)}[log (p(z) / qφ(z|x))]
= E_{qφ(z|x)}[log pθ(x|z)] − DKL(qφ(z|x) ‖ p(z))   (19)

In this case, we learn an intermediate bottlenecking distribution qφ(z|x) that can be treated as an encoder; it transforms inputs into a distribution over possible latents. Simultaneously, we learn a deterministic function pθ(x|z) to convert a given latent vector z into an observation x, which can be interpreted as a decoder.

The two terms in Equation 19 each have intuitive descriptions: the first term measures the reconstruction likelihood of the decoder from our variational distribution; this ensures that the learned distribution is modeling effective latents that the original data can be regenerated from. The second term measures how similar the learned variational distribution is to a prior belief held over latent variables. Minimizing this term encourages the encoder to actually learn a distribution rather than collapse into a Dirac delta function. Maximizing the ELBO is thus equivalent to maximizing its first term and minimizing its second term.

A defining feature of the VAE is how the ELBO is optimized jointly over parameters φ and θ. The encoder of the VAE is commonly chosen to model a multivariate Gaussian with diagonal covariance, and the prior is often selected to be a standard multivariate Gaussian:

qφ(z|x) = N(z; µφ(x), σφ²(x)I)
p(z) = N(z; 0, I)

Then, the KL divergence term of the ELBO can be computed analytically, and the reconstruction term can be approximated using a Monte Carlo estimate. Our objective can then be rewritten as:

arg max_{φ,θ} E_{qφ(z|x)}[log pθ(x|z)] − DKL(qφ(z|x) ‖ p(z)) ≈ arg max_{φ,θ} (1/L) Σ_{l=1}^{L} log pθ(x|z^(l)) − DKL(qφ(z|x) ‖ p(z))

where latents {z^(l)}_{l=1}^{L} are sampled from qφ(z|x), for every observation x in the dataset. However, a problem arises in this default setup: each z^(l) that our loss is computed on is generated by a stochastic sampling procedure, which is generally non-differentiable. Fortunately, this can be addressed via the reparameterization trick when qφ(z|x) is designed to model certain distributions, including the multivariate Gaussian.

The reparameterization trick rewrites a random variable as a deterministic function of a noise variable; this allows for the optimization of the non-stochastic terms through gradient descent. For example, samples from a normal distribution x ∼ N(x; µ, σ²) with arbitrary mean µ and variance σ² can be rewritten as:

x = µ + σε   with ε ∼ N(ε; 0, I)

In other words, arbitrary Gaussian distributions can be interpreted as standard Gaussians (of which ε is a sample) that have their mean shifted from zero to the target mean µ by addition, and their variance stretched by the target variance σ². Therefore, by the reparameterization trick, sampling from an arbitrary Gaussian distribution can be performed by sampling from a standard Gaussian, scaling the result by the target standard deviation, and shifting it by the target mean.
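A small numerical illustration of the trick, assuming a scalar Gaussian and the arbitrary test function f(x) = x² (neither comes from the text). Samples built as µ + σε have the target mean and standard deviation, and because x is now a deterministic function of µ and σ, the gradient of E[f(x)] can be estimated by differentiating through that function. Since E[x²] = µ² + σ², the exact gradients are 2µ and 2σ.

```python
import numpy as np

rng = np.random.default_rng(0)
mu, sigma = 1.5, 0.7
eps = rng.standard_normal(200_000)       # eps ~ N(0, 1)
x = mu + sigma * eps                     # x ~ N(mu, sigma^2) via reparameterization

# Pathwise gradients of f(x) = x^2: df/dmu = 2x, df/dsigma = 2x * eps
grad_mu = np.mean(2 * x)
grad_sigma = np.mean(2 * x * eps)

print(round(x.mean(), 2), round(x.std(), 2))       # approximately 1.5 and 0.7
print(round(grad_mu, 2), round(grad_sigma, 2))     # approximately 2*mu = 3.0 and 2*sigma = 1.4
```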

In a VAE, each z is thus computed as a deterministic function of input x and auxiliary noise variable ε:

z = µφ(x) + σφ(x) ⊙ ε   with ε ∼ N(ε; 0, I)

where ⊙ represents an element-wise product. Under this reparameterized version of z, gradients can then be computed with respect to φ as desired, to optimize µφ and σφ. The VAE therefore utilizes the reparameterization trick and Monte Carlo estimates to optimize the ELBO jointly over φ and θ.
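For the Gaussian encoder and standard Gaussian prior above, the KL term has the well-known closed form ½ Σ (µ² + σ² − 1 − log σ²). The sketch below assumes µ and log σ² are given as plain arrays standing in for hypothetical encoder outputs, draws reparameterized samples z = µ + σ ⊙ ε, and compares the closed form with a Monte Carlo estimate built from those samples.

```python
import numpy as np


def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I))."""
    return 0.5 * np.sum(mu**2 + np.exp(log_var) - 1.0 - log_var)


def log_normal_diag(z, mu, log_var):
    """Log density of a diagonal Gaussian, summed over the last axis."""
    return -0.5 * np.sum(
        np.log(2 * np.pi) + log_var + (z - mu) ** 2 / np.exp(log_var), axis=-1
    )


rng = np.random.default_rng(0)
mu = np.array([0.5, -1.0, 0.2])           # hypothetical encoder mean mu_phi(x)
log_var = np.array([-0.5, 0.3, -1.2])     # hypothetical encoder log sigma_phi^2(x)

eps = rng.standard_normal((100_000, 3))   # auxiliary noise eps ~ N(0, I)
z = mu + np.exp(0.5 * log_var) * eps      # reparameterized samples (element-wise product)

zeros = np.zeros(3)
kl_mc = np.mean(log_normal_diag(z, mu, log_var) - log_normal_diag(z, zeros, zeros))
kl_exact = kl_to_standard_normal(mu, log_var)
print(kl_exact, kl_mc)   # the two values should agree closely
```

In an actual VAE the closed form is typically used for the KL term, while the reparameterized samples feed the decoder for the reconstruction term.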

After training a VAE, generating new data can be performed by sampling directly from the latent space p(z) and then running it through the decoder. Variational Autoencoders are particularly interesting when the dimensionality of z is less than that of input x, as we might then be learning compact, useful representations. Furthermore, when a semantically meaningful latent space is learned, latent vectors can be edited before being passed to the decoder to more precisely control the data generated.

2.3. Hierarchical Variational Autoencoders

A Hierarchical Variational Autoencoder (HVAE) [2, 3] is a generalization of a VAE that extends to multiple hierarchies over latent variables. Under this formulation, latent variables themselves are interpreted as generated from other higher-level, more abstract latents. Intuitively, just as we treat our three-dimensional observed objects as generated from a higher-level abstract latent, the people in Plato’s cave treat three-dimensional objects as latents that generate their two-dimensional observations. Therefore, from the perspective of Plato’s cave dwellers, their observations can be treated as modeled by a latent hierarchy of depth two (or more).

Whereas in the general HVAE with T hierarchical levels, each latent is allowed to condition on all previous latents, in this work we focus on a special case which we call a Markovian HVAE (MHVAE). In an MHVAE, the generative process is a Markov chain; that is, each transition down the hierarchy is Markovian, where decoding each latent zt only conditions on the previous latent zt+1. Intuitively, and visually, this can be seen as simply stacking VAEs on top of each other, as depicted in Figure 2; another appropriate term describing this model is a Recursive VAE. Mathematically, we represent the joint distribution and the posterior of a Markovian HVAE as:

p(x, z1:T) = p(zT) pθ(x|z1) ∏_{t=2}^{T} pθ(zt−1|zt)   (23)
qφ(z1:T|x) = qφ(z1|x) ∏_{t=2}^{T} qφ(zt|zt−1)   (24)

Then, we can easily extend the ELBO to be:

log p(x) ≥ E_{qφ(z1:T|x)}[log (p(x, z1:T) / qφ(z1:T|x))]   (28)

We can then plug our joint distribution (Equation 23) and posterior (Equation 24) into Equation 28 to produce an alternate form:

E_{qφ(z1:T|x)}[log (p(x, z1:T) / qφ(z1:T|x))] = E_{qφ(z1:T|x)}[log (p(zT) pθ(x|z1) ∏_{t=2}^{T} pθ(zt−1|zt) / (qφ(z1|x) ∏_{t=2}^{T} qφ(zt|zt−1)))]

As we will show below, when we investigate Variational Diffusion Models, this objective can be further decomposed into interpretable components.

3. Variational Diffusion Models

The easiest way to think of a Variational Diffusion Model (VDM) [4, 5, 6] is simply as a Markovian Hierarchical Variational Autoencoder with three key restrictions:

- The latent dimension is exactly equal to the data dimension

- The structure of the latent encoder at each timestep is not learned; it is pre-defined as a linear Gaussian model. In other words, it is a Gaussian distribution centered around the output of the previous timestep

- The Gaussian parameters of the latent encoders vary over time in such a way that the distribution of the latent at final timestep T is a standard Gaussian

Furthermore, we explicitly maintain the Markov property between hierarchical transitions from a standard Markovian Hierarchical Variational Autoencoder.

Let us expand on the implications of these assumptions. From the first restriction, with some abuse of notation, we can now represent both true data samples and latent variables as xt, where t = 0 represents true data samples and t ∈ [1, T] represents a corresponding latent with hierarchy indexed by t. The VDM posterior is the same as the MHVAE posterior (Equation 24), but can now be rewritten as:

q(x1:T|x0) = ∏_{t=1}^{T} q(xt|xt−1)

From the second assumption, we know that the distribution of each latent variable in the encoder is a Gaussian centered around its previous hierarchical latent. Unlike a Markovian HVAE, the structure of the encoder at each timestep t is not learned; it is fixed as a linear Gaussian model, where the mean and standard deviation can be set beforehand as hyperparameters [5], or learned as parameters [6]. We parameterize the Gaussian encoder with mean µt(xt) = √αt xt−1 and variance Σt(xt) = (1 − αt)I, where the form of the coefficients is chosen such that the variance of the latent variables stays at a similar scale; in other words, the encoding process is variance-preserving (if xt−1 has unit variance, so does xt, since αt + (1 − αt) = 1). Note that alternate Gaussian parameterizations are allowed, and lead to similar derivations. The main takeaway is that αt is a (potentially learnable) coefficient that can vary with the hierarchical depth t, for flexibility. Mathematically, encoder transitions are denoted as:

q(xt|xt−1) = N(xt; √αt xt−1, (1 − αt)I)

From the third assumption, we know that αt evolves over time according to a fixed or learnable schedule structured such that the distribution of the final latent p(xT) is a standard Gaussian. We can then update the joint distribution of a Markovian HVAE (Equation 23) to write the joint distribution for a VDM as:

p(x0:T) = p(xT) ∏_{t=1}^{T} pθ(xt−1|xt),   where p(xT) = N(xT; 0, I)

Collectively, what this set of assumptions describes is a steady noisification of an image input over time; we progressively corrupt an image by adding Gaussian noise until eventually it becomes completely identical to pure Gaussian noise. Visually, this process is depicted in Figure 3.
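The steady noisification can be simulated directly. This minimal sketch assumes a hypothetical linearly spaced schedule with 1 − αt running from 1e-4 to 0.02 over T = 1000 steps (an illustrative choice, not fixed by the text) and one-dimensional toy "data" taking the values ±1, which has unit variance. Repeatedly applying q(xt|xt−1) keeps the variance near 1, while the correlation with x0 almost vanishes.

```python
import numpy as np

rng = np.random.default_rng(0)
T = 1000
alphas = 1.0 - np.linspace(1e-4, 0.02, T)      # hypothetical schedule alpha_1..alpha_T

# Toy "data": -1 or +1 with equal probability (mean 0, variance 1)
x0 = rng.choice([-1.0, 1.0], size=20_000)

x = x0.copy()
for alpha in alphas:
    # One encoder transition: x_t = sqrt(alpha_t) x_{t-1} + sqrt(1 - alpha_t) eps
    x = np.sqrt(alpha) * x + np.sqrt(1.0 - alpha) * rng.standard_normal(x.shape)

print(round(x.var(), 2))                       # stays close to 1 (variance preserving)
print(round(np.corrcoef(x0, x)[0, 1], 3))      # correlation with x0 is nearly gone
```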

Note that our encoder distributions q(xt|xt−1) are no longer parameterized by φ, as they are completely modeled as Gaussians with defined mean and variance parameters at each timestep. Therefore, in a VDM, we are only interested in learning conditionals pθ(xt−1|xt), so that we can simulate new data. After optimizing the VDM, the sampling procedure is as simple as sampling Gaussian noise from p(xT ) and iteratively running the denoising transitions pθ(xt−1|xt) for T steps to generate a novel x0.

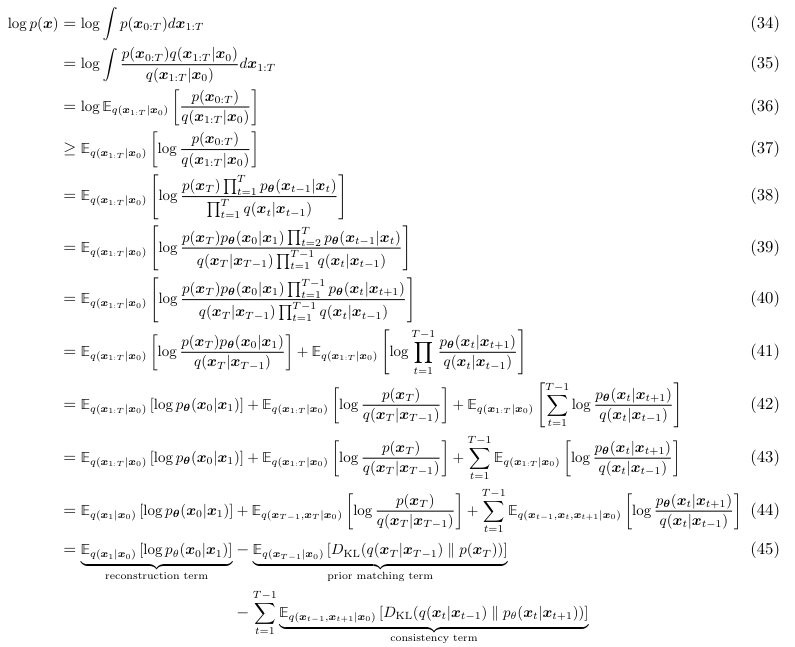

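Like any HVAE, the VDM can be optimized by maximizing the ELBO, which can be derived as:

log p(x) ≥ E_{q(x1|x0)}[log pθ(x0|x1)] − E_{q(xT−1|x0)}[DKL(q(xT|xT−1) ‖ p(xT))] − Σ_{t=1}^{T−1} E_{q(xt−1, xt+1|x0)}[DKL(q(xt|xt−1) ‖ pθ(xt|xt+1))]   (45)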

---------------------------- This part can be skipped ↓ (an alternative derivation follows below) ----------------------------

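The derived form of the ELBO can be interpreted in terms of its individual components:

- E_{q(x1|x0)}[log pθ(x0|x1)] is a reconstruction term, predicting the log probability of the original data sample given the first-step latent. This term also appears in a vanilla VAE.

- E_{q(xT−1|x0)}[DKL(q(xT|xT−1) ‖ p(xT))] is a prior matching term; it is minimized when the final latent distribution matches the Gaussian prior. It has no trainable parameters, and with a large enough T that the final distribution is Gaussian, it effectively becomes zero.

- E_{q(xt−1, xt+1|x0)}[DKL(q(xt|xt−1) ‖ pθ(xt|xt+1))] is a consistency term; it encourages the distribution at xt to be consistent from both the forward and backward processes, so that a denoising step from a noisier image matches the corresponding noising step from a cleaner image at every intermediate timestep.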

Visually, this interpretation of the ELBO is depicted in Figure 4. The cost of optimizing a VDM is primarily dominated by the third term, since we must optimize over all timesteps t.

Under this derivation, all terms of the ELBO are computed as expectations, and can therefore be approximated using Monte Carlo estimates. However, actually optimizing the ELBO using the terms we just derived might be suboptimal; because the consistency term is computed as an expectation over two random variables {xt−1, xt+1} for every timestep, the variance of its Monte Carlo estimate could potentially be higher than that of a term estimated using only one random variable per timestep. As it is computed by summing up T − 1 consistency terms, the final estimated value of the ELBO may have high variance for large T values.

---------------------------- End of skippable part ↑ ----------------------------

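Let us instead try to derive a form for our ELBO where each term is computed as an expectation over only one random variable at a time. The key insight is that we can rewrite encoder transitions as q(xt|xt−1) = q(xt|xt−1, x0), where the extra conditioning term is superfluous due to the Markov property. Then, according to Bayes rule, we can rewrite each transition as:

q(xt|xt−1, x0) = q(xt−1|xt, x0) q(xt|x0) / q(xt−1|x0)

Armed with this new equation, we can retry the derivation resuming from the ELBO in Equation 37, log p(x) ≥ E_{q(x1:T|x0)}[log (p(x0:T) / q(x1:T|x0))], and arrive at:

log p(x) ≥ E_{q(x1|x0)}[log pθ(x0|x1)] − DKL(q(xT|x0) ‖ p(xT)) − Σ_{t=2}^{T} E_{q(xt|x0)}[DKL(q(xt−1|xt, x0) ‖ pθ(xt−1|xt))]   (58)

We have therefore successfully derived an interpretation for the ELBO that can be estimated with lower variance, as each term is computed as an expectation of at most one random variable at a time. This formulation also has an elegant interpretation, which is revealed when inspecting each individual term:

- E_{q(x1|x0)}[log pθ(x0|x1)] is a reconstruction term; like its analogue in the vanilla VAE, it can be approximated and optimized using a Monte Carlo estimate.

- DKL(q(xT|x0) ‖ p(xT)) is a prior matching term, measuring how close the distribution of the final noisified input is to the standard Gaussian prior. It has no trainable parameters, and is also equal to zero under our assumptions.

- E_{q(xt|x0)}[DKL(q(xt−1|xt, x0) ‖ pθ(xt−1|xt))] is a denoising matching term. We learn the desired denoising transition step pθ(xt−1|xt) as an approximation to the tractable, ground-truth denoising transition step q(xt−1|xt, x0), which acts as a ground-truth signal because it defines how to denoise a noisy image xt with access to the completely denoised image x0. This term is minimized when the two denoising steps match as closely as possible, as measured by their KL Divergence.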

As a side note, one observes that in the process of both ELBO derivations (Equation 45 and Equation 58), only the Markov assumption is used; as a result these formulae will hold true for any arbitrary Markovian HVAE. Furthermore, when we set T = 1, both of the ELBO interpretations for a VDM exactly recreate the ELBO equation of a vanilla VAE, as written in Equation 19.

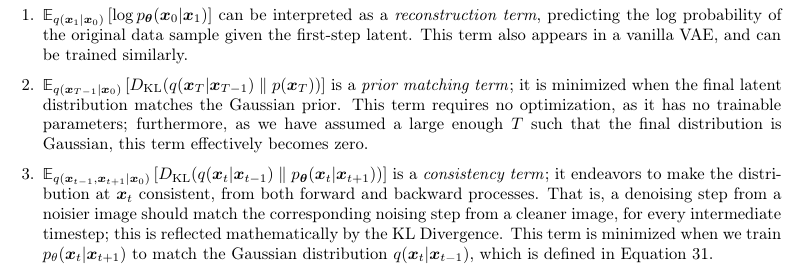

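In this derivation of the ELBO, the bulk of the optimization cost once again lies in the summation term, which dominates the reconstruction term. Whereas each KL Divergence term DKL(q(xt−1|xt, x0) ‖ pθ(xt−1|xt)) is difficult to minimize for arbitrary posteriors in arbitrarily complex Markovian HVAEs due to the added complexity of simultaneously learning the encoder, in a VDM we can leverage the Gaussian transition assumption to make optimization tractable. By Bayes rule, we have:

q(xt−1|xt, x0) = q(xt|xt−1, x0) q(xt−1|x0) / q(xt|x0)

As we already know that q(xt|xt−1, x0) = q(xt|xt−1) = N(xt; √αt xt−1, (1 − αt)I) from our assumption regarding encoder transitions, what remains is deriving the forms of q(xt|x0) and q(xt−1|x0).

Then, the form of q(xt|x0) can be recursively derived through repeated applications of the reparameterization trick. Suppose that we have access to 2T random noise variables {ε*t, εt}_{t=0}^{T}, drawn iid from N(ε; 0, I). Then, for an arbitrary sample xt ∼ q(xt|x0), we can rewrite it as:

xt = √αt xt−1 + √(1 − αt) ε*t−1
= √(αt αt−1) xt−2 + √(1 − αt αt−1) εt−2
= …
= √ᾱt x0 + √(1 − ᾱt) ε0   (69)
∼ N(xt; √ᾱt x0, (1 − ᾱt)I)

where ᾱt = ∏_{i=1}^{t} αi, and the second line uses the fact that the sum of two independent zero-mean Gaussians with variances αt(1 − αt−1) and 1 − αt is a zero-mean Gaussian with variance 1 − αt αt−1.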
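The closed form can be checked against the step-by-step process. This minimal sketch assumes a hypothetical linear schedule and a fixed scalar data point x0 = 2, computes ᾱt with a cumulative product, and compares the empirical mean and variance of xt obtained by t single-step transitions with √ᾱt x0 and 1 − ᾱt, as well as with one-shot samples drawn from q(xt|x0).

```python
import numpy as np

rng = np.random.default_rng(1)
T, t = 1000, 300
alphas = 1.0 - np.linspace(1e-4, 0.02, T)     # hypothetical schedule alpha_1..alpha_T
alpha_bars = np.cumprod(alphas)               # alpha_bar_t = prod_{i<=t} alpha_i

x0 = 2.0                                      # a fixed scalar "data point"
n = 20_000

# Route 1: apply q(x_i | x_{i-1}) t times
x = np.full(n, x0)
for alpha in alphas[:t]:
    x = np.sqrt(alpha) * x + np.sqrt(1.0 - alpha) * rng.standard_normal(n)

# Route 2: sample q(x_t | x0) in one shot
ab = alpha_bars[t - 1]                        # index t-1 holds alpha_bar_t
x_direct = np.sqrt(ab) * x0 + np.sqrt(1.0 - ab) * rng.standard_normal(n)

print(np.sqrt(ab) * x0, x.mean(), x_direct.mean())   # three means that should agree
print(1.0 - ab, x.var(), x_direct.var())             # three variances that should agree
```

The one-shot route is what makes it cheap to train on an arbitrary timestep: no intermediate latents need to be simulated.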

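We have therefore derived the Gaussian form of q(xt|x0). This derivation can be modified to also yield the Gaussian parameterization describing q(xt−1|x0). Now, knowing the forms of both q(xt|x0) and q(xt−1|x0), we can proceed to calculate the form of q(xt−1|xt, x0) by substituting into the Bayes rule expansion and completing the square in xt−1, which yields:

q(xt−1|xt, x0) ∝ N(xt−1; µq(xt, x0), Σq(t))   (84)

with µq(xt, x0) = (√αt(1 − ᾱt−1) xt + √ᾱt−1(1 − αt) x0) / (1 − ᾱt) and Σq(t) = ((1 − αt)(1 − ᾱt−1) / (1 − ᾱt)) I.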

where in Equation 75, C(xt, x0) is a constant term with respect to xt−1 computed as a combination of only xt, x0, and α values; this term is implicitly returned in Equation 84 to complete the square.

We have therefore shown that at each step, xt−1 ∼ q(xt−1|xt, x0) is normally distributed, with mean µq(xt, x0) that is a function of xt and x0, and variance Σq(t) as a function of α coefficients. These α coefficients are known and fixed at each timestep; they are either set permanently when modeled as hyperparameters, or treated as the current inference output of a network that seeks to model them. Following Equation 84, we can rewrite our variance equation as Σq(t) = σq²(t)I, where:

σq²(t) = (1 − αt)(1 − ᾱt−1) / (1 − ᾱt)   (85)

In order to match the approximate denoising transition step pθ(xt−1|xt) to the ground-truth denoising transition step q(xt−1|xt, x0) as closely as possible, we can also model it as a Gaussian. Furthermore, as all α terms are known to be frozen at each timestep, we can immediately construct the variance of the approximate denoising transition step to also be Σq(t) = σq²(t)I. We must parameterize its mean µθ(xt, t) as a function of xt, however, since pθ(xt−1|xt) does not condition on x0.
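A quick consistency check of the posterior formulas, assuming scalar values and arbitrary illustrative coefficients. As a function of xt−1, the Bayes-rule numerator is a product of two Gaussian factors, N(xt; √αt xt−1, 1 − αt) and N(xt−1; √ᾱt−1 x0, 1 − ᾱt−1), whose precisions add; the closed-form µq and σq² should match that product.

```python
import numpy as np


def posterior_params(x_t, x0, alpha_t, alpha_bar_prev):
    """Closed-form mean and variance of q(x_{t-1} | x_t, x0) (Equations 84 and 85)."""
    alpha_bar_t = alpha_t * alpha_bar_prev
    mean = (np.sqrt(alpha_t) * (1 - alpha_bar_prev) * x_t
            + np.sqrt(alpha_bar_prev) * (1 - alpha_t) * x0) / (1 - alpha_bar_t)
    var = (1 - alpha_t) * (1 - alpha_bar_prev) / (1 - alpha_bar_t)
    return mean, var


def product_of_gaussians(x_t, x0, alpha_t, alpha_bar_prev):
    """Same posterior, obtained by adding the precisions of the two factors in x_{t-1}."""
    # q(x_t | x_{t-1}) as a function of x_{t-1}: precision alpha_t / (1 - alpha_t),
    # centred at x_t / sqrt(alpha_t)
    prec_1 = alpha_t / (1 - alpha_t)
    center_1 = x_t / np.sqrt(alpha_t)
    # q(x_{t-1} | x0): precision 1 / (1 - alpha_bar_prev), centred at sqrt(alpha_bar_prev) x0
    prec_2 = 1 / (1 - alpha_bar_prev)
    center_2 = np.sqrt(alpha_bar_prev) * x0
    var = 1 / (prec_1 + prec_2)
    mean = var * (prec_1 * center_1 + prec_2 * center_2)
    return mean, var


args = dict(x_t=0.8, x0=-1.3, alpha_t=0.97, alpha_bar_prev=0.6)
print(posterior_params(**args))
print(product_of_gaussians(**args))   # the same pair, up to floating-point rounding
```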

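Recall that the KL Divergence between two Gaussian distributions in d dimensions is:

DKL(N(x; µx, Σx) ‖ N(y; µy, Σy)) = ½ [log(|Σy| / |Σx|) − d + tr(Σy⁻¹ Σx) + (µy − µx)ᵀ Σy⁻¹ (µy − µx)]

In our case, where we can set the variances of the two Gaussians to match exactly, the log-determinant and trace terms cancel against −d, so optimizing the KL Divergence term reduces to minimizing the difference between the means of the two distributions:

arg min_θ DKL(q(xt−1|xt, x0) ‖ pθ(xt−1|xt)) = arg min_θ (1 / (2σq²(t))) ‖µθ − µq‖²₂

where we have written µq as shorthand for µq(xt, x0), and µθ as shorthand for µθ(xt, t) for brevity. In other words, we want to optimize a µθ(xt, t) that matches µq(xt, x0), which from our derived Equation 84 takes the form:

µq(xt, x0) = (√αt(1 − ᾱt−1) xt + √ᾱt−1(1 − αt) x0) / (1 − ᾱt)

As µθ(xt, t) also conditions on xt, we can match µq(xt, x0) closely by setting it to the following form:

µθ(xt, t) = (√αt(1 − ᾱt−1) xt + √ᾱt−1(1 − αt) x̂θ(xt, t)) / (1 − ᾱt)

where x̂θ(xt, t) is parameterized by a neural network that seeks to predict x0 from noisy image xt and time index t. Then, the optimization problem simplifies to:

arg min_θ DKL(q(xt−1|xt, x0) ‖ pθ(xt−1|xt)) = arg min_θ (1 / (2σq²(t))) (ᾱt−1(1 − αt)² / (1 − ᾱt)²) ‖x̂θ(xt, t) − x0‖²₂   (99)

Therefore, optimizing a VDM boils down to learning a neural network to predict the original ground truth image from an arbitrarily noisified version of it [5]. Furthermore, minimizing the summation term of our derived ELBO objective (Equation 58) across all noise levels can be approximated by minimizing the expectation over all timesteps:

arg min_θ E_{t∼U{2,T}}[E_{q(xt|x0)}[DKL(q(xt−1|xt, x0) ‖ pθ(xt−1|xt))]]   (100)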

which can then be optimized using stochastic samples over timesteps.
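The reduction from a KL Divergence to a weighted squared error can be verified numerically. This sketch assumes isotropic Gaussians in d = 4 dimensions, arbitrary illustrative values for αt, ᾱt−1, x0 and xt, and a hypothetical network prediction x̂θ obtained by perturbing x0. It evaluates the general Gaussian KL formula with equal covariances σq²(t)I and compares the result with the weighted error of Equation 99.

```python
import numpy as np


def gaussian_kl_isotropic(mu_x, var_x, mu_y, var_y):
    """KL(N(mu_x, var_x I) || N(mu_y, var_y I)) in d dimensions."""
    d = mu_x.size
    return 0.5 * (d * np.log(var_y / var_x) - d + d * var_x / var_y
                  + np.sum((mu_y - mu_x) ** 2) / var_y)


rng = np.random.default_rng(0)
d = 4
alpha_t, alpha_bar_prev = 0.95, 0.5
alpha_bar_t = alpha_t * alpha_bar_prev
x0 = rng.standard_normal(d)                   # ground-truth clean input
x_t = rng.standard_normal(d)                  # some noisy input
x0_hat = x0 + 0.1 * rng.standard_normal(d)    # hypothetical network prediction of x0


def mean_from_x0(x0_value):
    """Posterior mean mu_q(x_t, x0) with x0 replaced by x0_value."""
    return (np.sqrt(alpha_t) * (1 - alpha_bar_prev) * x_t
            + np.sqrt(alpha_bar_prev) * (1 - alpha_t) * x0_value) / (1 - alpha_bar_t)


var_q = (1 - alpha_t) * (1 - alpha_bar_prev) / (1 - alpha_bar_t)
# KL(q || p_theta): q uses the true x0, p_theta uses the predicted x0_hat
kl = gaussian_kl_isotropic(mean_from_x0(x0), var_q, mean_from_x0(x0_hat), var_q)

weight = alpha_bar_prev * (1 - alpha_t) ** 2 / (2 * var_q * (1 - alpha_bar_t) ** 2)
weighted_error = weight * np.sum((x0_hat - x0) ** 2)
print(np.isclose(kl, weighted_error))   # True
```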

3.1. Learning Diffusion Noise Parameters

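Let us investigate how the noise parameters of a VDM can be jointly learned. One potential approach is to model αt using a neural network α̂η(t) with parameters η. However, this is inefficient, as inference must be performed multiple times at each timestep t to compute ᾱt. Whereas caching can mitigate this computational cost, we can also derive an alternate way to learn the diffusion noise parameters. By substituting our variance equation from Equation 85 into our derived per-timestep objective in Equation 99, and using ᾱt = αt ᾱt−1, we can reduce:

(1 / (2σq²(t))) (ᾱt−1(1 − αt)² / (1 − ᾱt)²) ‖x̂θ(xt, t) − x0‖²₂ = ½ (ᾱt−1 / (1 − ᾱt−1) − ᾱt / (1 − ᾱt)) ‖x̂θ(xt, t) − x0‖²₂

Recall that q(xt|x0) has the form N(xt; √ᾱt x0, (1 − ᾱt)I). Following the definition of the signal-to-noise ratio (SNR) as SNR = µ²/σ², we can write the SNR at each timestep t as:

SNR(t) = ᾱt / (1 − ᾱt)   (109)

Then, our per-timestep objective simplifies to:

½ (SNR(t − 1) − SNR(t)) ‖x̂θ(xt, t) − x0‖²₂   (110)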

As the name implies, the SNR represents the ratio between the original signal and the amount of noise present; a higher SNR represents more signal and a lower SNR represents more noise. In a diffusion model, we require the SNR to monotonically decrease as timestep t increases; this formalizes the notion that perturbed input xt becomes increasingly noisy over time, until it becomes identical to a standard Gaussian at t = T.

Following the simplification of the objective in Equation 110, we can directly parameterize the SNR at each timestep using a neural network, and learn it jointly along with the diffusion model. As the SNR must monotonically decrease over time, we can represent it as:

SNR(t) = exp(−ωη(t))   (111)

where ωη(t) is modeled as a monotonically increasing neural network with parameters η. Negating ωη(t) results in a monotonically decreasing function, whereas the exponential forces the resulting term to be positive. Note that the objective in Equation 100 must now optimize over η as well. By combining our parameterization of SNR in Equation 111 with our definition of SNR in Equation 109, we can also explicitly derive elegant forms for the value of ᾱt as well as for the value of 1 − ᾱt:

ᾱt / (1 − ᾱt) = exp(−ωη(t))   ⇒   ᾱt = sigmoid(−ωη(t)),   1 − ᾱt = sigmoid(ωη(t))

These terms are necessary for a variety of computations; for example, during optimization, they are used to create arbitrarily noisy xt from input x0 using the reparameterization trick, as derived in Equation 69.
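A minimal sketch of the SNR parameterization, assuming a hypothetical stand-in for the monotone network ωη(t): a cumulative sum of softplus-transformed parameters, which is increasing by construction (other monotone architectures would serve equally well). It recovers ᾱt = sigmoid(−ωη(t)) and 1 − ᾱt = sigmoid(ωη(t)), and checks that ½(SNR(t − 1) − SNR(t)) equals the weight of Equation 99 for t = 2, …, T.

```python
import numpy as np


def softplus(x):
    return np.log1p(np.exp(x))


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


rng = np.random.default_rng(0)
T = 50
eta = rng.standard_normal(T)                     # hypothetical learnable parameters
omega = -6.0 + np.cumsum(softplus(eta))          # stand-in for omega_eta(t): strictly increasing

snr = np.exp(-omega)                             # SNR(t) = exp(-omega_eta(t))
alpha_bar = sigmoid(-omega)                      # alpha_bar_t
one_minus_alpha_bar = sigmoid(omega)             # 1 - alpha_bar_t

print(np.allclose(alpha_bar / one_minus_alpha_bar, snr))   # True
print(np.all(np.diff(snr) < 0))                            # True: SNR decreases with t

# Per-timestep weight for t = 2..T: Equation 99 versus 0.5 * (SNR(t-1) - SNR(t))
alpha = alpha_bar[1:] / alpha_bar[:-1]           # alpha_t = alpha_bar_t / alpha_bar_{t-1}
var_q = (1 - alpha) * one_minus_alpha_bar[:-1] / one_minus_alpha_bar[1:]
w_eq99 = alpha_bar[:-1] * (1 - alpha) ** 2 / (2 * var_q * one_minus_alpha_bar[1:] ** 2)
w_snr = 0.5 * (snr[:-1] - snr[1:])
print(np.allclose(w_eq99, w_snr))                # True
```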

3.2. Three Equivalent Interpretations

As we previously proved, a Variational Diffusion Model can be trained by simply learning a neural network to predict the original natural image x0 from an arbitrary noised version xt and its time index t. However, x0 has two other equivalent parameterizations, which leads to two further interpretations for a VDM.

Firstly, we can utilize the reparameterization trick. In our derivation of the form of q(xt|x0), we can rearrange Equation 69 to show that:

x0 = (xt − √(1 − ᾱt) ε0) / √ᾱt

Plugging this into our previously derived true denoising transition mean µq(xt, x0), we can rederive it as:

µq(xt, x0) = (1/√αt) xt − ((1 − αt) / (√(1 − ᾱt) √αt)) ε0

Therefore, we can set our approximate denoising transition mean µθ(xt, t) as:

µθ(xt, t) = (1/√αt) xt − ((1 − αt) / (√(1 − ᾱt) √αt)) ε̂θ(xt, t)

and the corresponding optimization problem becomes:

arg min_θ DKL(q(xt−1|xt, x0) ‖ pθ(xt−1|xt)) = arg min_θ (1 / (2σq²(t))) ((1 − αt)² / ((1 − ᾱt) αt)) ‖ε0 − ε̂θ(xt, t)‖²₂

Here, ε̂θ(xt, t) is a neural network that learns to predict the source noise ε0 ∼ N(ε; 0, I) that determines xt from x0. We have therefore shown that learning a VDM by predicting the original image x0 is equivalent to learning to predict the noise; empirically, however, some works have found that predicting the noise resulted in better performance [5, 7].
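The sampling procedure for a trained VDM (start from p(xT) and repeatedly apply the denoising transitions) can be sketched with the noise-prediction parameterization: each step draws xt−1 from N(µθ(xt, t), σq²(t)I). The network ε̂θ is hypothetical here. To keep the example self-contained, the sketch assumes a degenerate "dataset" consisting of a single point c, for which the exact noise predictor is ε̂θ(xt, t) = (xt − √ᾱt c)/√(1 − ᾱt); the schedule is also an illustrative assumption.

```python
import numpy as np

rng = np.random.default_rng(0)
T = 200
alphas = 1.0 - np.linspace(1e-4, 0.05, T)     # hypothetical schedule, alphas[t-1] = alpha_t
alpha_bars = np.cumprod(alphas)
c = np.array([3.0, -2.0])                      # the single "training image"


def eps_hat(x_t, t):
    """Oracle noise predictor for a point-mass data distribution at c (stands in for a network)."""
    ab = alpha_bars[t - 1]
    return (x_t - np.sqrt(ab) * c) / np.sqrt(1.0 - ab)


x = rng.standard_normal(c.shape)               # x_T ~ p(x_T) = N(0, I)
for t in range(T, 0, -1):
    a, ab = alphas[t - 1], alpha_bars[t - 1]
    ab_prev = alpha_bars[t - 2] if t > 1 else 1.0   # alpha_bar_0 = 1
    # mu_theta(x_t, t) under the noise-prediction parameterization
    mean = (x - (1 - a) / np.sqrt(1 - ab) * eps_hat(x, t)) / np.sqrt(a)
    var = (1 - a) * (1 - ab_prev) / (1 - ab)        # sigma_q^2(t); equals 0 at t = 1
    x = mean + np.sqrt(var) * rng.standard_normal(c.shape)

print(x)   # recovers c up to floating-point error
```

With a learned ε̂θ the final sample would instead be a novel point near the data distribution; the oracle only makes the result easy to check.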

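To derive the third common interpretation of Variational Diffusion Models, we appeal to Tweedie’s Formula [8]. In words, Tweedie’s Formula states that the true mean of an exponential family distribution, given samples drawn from it, can be estimated by the maximum likelihood estimate of the samples (aka the empirical mean) plus a correction term involving the score of the estimate. In the case of just one observed sample, the empirical mean is just the sample itself. It is commonly used to mitigate sample bias; if observed samples all lie on one end of the underlying distribution, then the negative score becomes large and corrects the naive maximum likelihood estimate of the samples towards the true mean. Mathematically, for a Gaussian variable z ∼ N(z; µz, Σz), Tweedie’s Formula states:

E[µz|z] = z + Σz ∇z log p(z)

Since xt ∼ N(xt; √ᾱt x0, (1 − ᾱt)I), applying the formula gives E[µxt|xt] = xt + (1 − ᾱt)∇ log p(xt), where ∇ log p(xt) is shorthand for ∇xt log p(xt). The best estimate of the true mean is µxt = √ᾱt x0, so:

x0 = (xt + (1 − ᾱt)∇ log p(xt)) / √ᾱt

Plugging this into the true denoising transition mean gives µq(xt, x0) = (1/√αt) xt + ((1 − αt)/√αt) ∇ log p(xt). We therefore set µθ(xt, t) = (1/√αt) xt + ((1 − αt)/√αt) sθ(xt, t), and the corresponding optimization problem becomes:

arg min_θ DKL(q(xt−1|xt, x0) ‖ pθ(xt−1|xt)) = arg min_θ (1 / (2σq²(t))) ((1 − αt)² / αt) ‖sθ(xt, t) − ∇ log p(xt)‖²₂   (148)

Here, sθ(xt, t) is a neural network that learns to predict the score function ∇ log p(xt), the gradient of log p(xt) with respect to xt in data space, for any arbitrary noise level t.

Comparing the x0 obtained from Tweedie’s Formula with the one obtained from the reparameterization trick, x0 = (xt − √(1 − ᾱt) ε0) / √ᾱt, shows that ∇ log p(xt) = −ε0 / √(1 − ᾱt). As it turns out, the score and the source noise are off by a constant factor that scales with time! The score function measures how to move in data space to maximize the log probability; intuitively, since the source noise is added to a natural image to corrupt it, moving in its opposite direction "denoises" the image and would be the best update to increase the subsequent log probability. Our mathematical proof justifies this intuition; we have explicitly shown that learning to model the score function is equivalent to modeling the negative of the source noise (up to a scaling factor).

We have therefore derived three equivalent objectives to optimize a VDM: learning a neural network to predict the original image x0, the source noise ε0, or the score of the image at an arbitrary noise level ∇ log p(xt). The VDM can be scalably trained by stochastically sampling timesteps t and minimizing the norm of the difference between the prediction and the ground-truth target.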
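Because xt = √ᾱt x0 + √(1 − ᾱt) ε0, any one of the three targets determines the other two once xt and ᾱt are known. The helper functions below use hypothetical names chosen for illustration. They implement the relations derived in this section, including ∇ log p(xt) = −ε0/√(1 − ᾱt), and the final lines confirm that the conversions round-trip on random inputs.

```python
import numpy as np


def x0_from_eps(x_t, eps, alpha_bar):
    return (x_t - np.sqrt(1 - alpha_bar) * eps) / np.sqrt(alpha_bar)


def eps_from_x0(x_t, x0, alpha_bar):
    return (x_t - np.sqrt(alpha_bar) * x0) / np.sqrt(1 - alpha_bar)


def score_from_eps(eps, alpha_bar):
    return -eps / np.sqrt(1 - alpha_bar)


def x0_from_score(x_t, score, alpha_bar):
    # Tweedie: sqrt(alpha_bar) * x0 = x_t + (1 - alpha_bar) * score
    return (x_t + (1 - alpha_bar) * score) / np.sqrt(alpha_bar)


rng = np.random.default_rng(0)
alpha_bar = 0.3
x0 = rng.standard_normal(5)
eps = rng.standard_normal(5)
x_t = np.sqrt(alpha_bar) * x0 + np.sqrt(1 - alpha_bar) * eps

score = score_from_eps(eps, alpha_bar)
print(np.allclose(x0_from_eps(x_t, eps, alpha_bar), x0),
      np.allclose(eps_from_x0(x_t, x0, alpha_bar), eps),
      np.allclose(x0_from_score(x_t, score, alpha_bar), x0))   # True True True
```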

4. Score-based Generative Models

We have shown that a Variational Diffusion Model can be learned simply by optimizing a neural network sθ(xt, t) to predict the score function ∇ log p(xt). However, in our derivation, the score term arose from an application of Tweedie’s Formula; this doesn’t necessarily provide us with great intuition or insight into what exactly the score function is or why it is worth modeling. Fortunately, we can look to another class of generative models, Score-based Generative Models [9, 10, 11], for exactly this intuition. As it turns out, we can show that the VDM formulation we have previously derived has an equivalent Score-based Generative Modeling formulation, allowing us to flexibly switch between these two interpretations at will.

To begin to understand why optimizing a score function makes sense, we take a detour and revisit energy-based models [12, 13]. Arbitrarily flexible probability distributions can be written in the form:

pθ(x) = (1/Zθ) e^{−fθ(x)}

where fθ(x) is an arbitrarily flexible, parameterizable function called the energy function, often modeled by a neural network, and Zθ = ∫ e^{−fθ(x)} dx is a normalizing constant that ensures pθ(x) integrates to one. Computing Zθ is intractable for complex fθ, but the score function does not depend on it: ∇x log pθ(x) = ∇x log(1/Zθ) − ∇x fθ(x) = −∇x fθ(x) ≈ sθ(x), so a neural network sθ(x) can learn the score directly.

What does the score function represent? For every x, taking the gradient of its log likelihood with respect to x essentially describes what direction in data space to move in order to further increase its likelihood.

Intuitively, then, the score function defines a vector field over the entire space that data x inhabits, pointing towards the modes. Visually, this is depicted in the right plot of Figure 6. Then, by learning the score function of the true data distribution, we can generate samples by starting at any arbitrary point in the same space and iteratively following the score until a mode is reached. This sampling procedure is known as Langevin dynamics, and is mathematically described as:

xi+1 ← xi + c ∇ log p(xi) + √(2c) ε,   i = 0, 1, …, K

where c is the step size, x0 is randomly sampled from a prior distribution (such as uniform), and ε ∼ N(ε; 0, I) is an extra noise term to ensure that the generated samples do not always collapse onto a mode, but hover around it for diversity. Furthermore, because the learned score function is deterministic, sampling with a noise term involved adds stochasticity to the generative process, allowing us to avoid deterministic trajectories. This is particularly useful when sampling is initialized from a position that lies between multiple modes. A visual depiction of Langevin dynamics sampling and the benefits of the noise term is shown in Figure 6.
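A minimal sketch of Langevin dynamics, assuming the target is the one-dimensional Gaussian N(2, 0.5²), whose score −(x − 2)/0.25 is known in closed form (a learned sθ would replace it in practice); the step size c and number of steps K are illustrative choices. Starting from uniform noise, the chains settle around the mode and, thanks to the injected noise, spread out with approximately the target standard deviation. Dropping the noise term makes every chain collapse onto the mode.

```python
import numpy as np


def score(x, mean=2.0, std=0.5):
    """Score of N(mean, std^2): d/dx log p(x) = -(x - mean) / std^2."""
    return -(x - mean) / std**2


rng = np.random.default_rng(0)
c, K = 0.01, 2_000                             # step size and number of steps
x = rng.uniform(-5.0, 5.0, size=10_000)        # initial points from a uniform prior

for _ in range(K):
    noise = rng.standard_normal(x.shape)       # eps ~ N(0, I)
    x = x + c * score(x) + np.sqrt(2 * c) * noise

x_det = rng.uniform(-5.0, 5.0, size=10_000)
for _ in range(K):
    x_det = x_det + c * score(x_det)           # same updates without the noise term

print(round(x.mean(), 2), round(x.std(), 2))   # approximately 2.0 and 0.5
print(x_det.std())                             # essentially 0: all chains sit on the mode
```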

To learn the score function in the first place, we can optimize a score-based model sθ(x) by minimizing the Fisher Divergence with the ground-truth score function:

E_{p(x)}[‖sθ(x) − ∇ log p(x)‖²₂]   (157)

Note that the objective in Equation 157 relies on having access to the ground truth score function, which is unavailable to us for complex distributions such as the one modeling natural images. Fortunately, alternative techniques known as score matching [14, 15, 16, 17] have been derived to minimize this Fisher divergence without knowing the ground truth score, and can be optimized with stochastic gradient descent.

Collectively, learning to represent a distribution as a score function and using it to generate samples through Markov Chain Monte Carlo techniques, such as Langevin dynamics, is known as Score-based Generative Modeling [9, 10, 11].

There are three main problems with vanilla score matching, as detailed by Song and Ermon [9]. Firstly, the score function is ill-defined when x lies on a low-dimensional manifold in a high-dimensional space. This can be seen mathematically; all points not on the low-dimensional manifold would have probability zero, the log of which is undefined. This is particularly inconvenient when trying to learn a generative model over natural images, which are known to lie on a low-dimensional manifold within the ambient space.

Secondly, the estimated score function trained via vanilla score matching will not be accurate in low density regions. This is evident from the objective we minimize in Equation 157. Because it is an expectation over p(x), and explicitly trained on samples from it, the model will not receive an accurate learning signal for rarely seen or unseen examples. This is problematic, since our sampling strategy involves starting from a random location in the high-dimensional space, which is most likely random noise, and moving according to the learned score function. Since we are following a noisy or inaccurate score estimate, the final generated samples may be suboptimal as well, or require many more iterations to converge on an accurate output.

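Lastly, Langevin dynamics sampling may not mix, even if it is performed using the ground truth scores. Suppose that the true data distribution is a mixture of two disjoint distributions:

$$p(x) = c_1 p_1(x) + c_2 p_2(x)$$

where $c_1, c_2 > 0$ are mixing coefficients with $c_1 + c_2 = 1$.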

Then, when the score is computed, these mixing coefficients are lost, since the log operation splits the coefficient from the distribution and the gradient operation zeros it out. To visualize this, note that the ground truth score function shown in the right panel of Figure 6 is agnostic to the different weights of the three component distributions. Langevin dynamics sampling from the depicted initialization point has a roughly equal chance of arriving at each mode, despite the bottom right mode having a higher weight in the actual Mixture of Gaussians.
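The loss of the mixing coefficients can be checked numerically. This sketch assumes a 1-D mixture of two well-separated Gaussians with means ±5 and unit variance (hypothetical values). It evaluates the exact score near each mode under balanced and heavily skewed weights.

```python
import numpy as np

MEANS = np.array([-5.0, 5.0])  # hypothetical, well-separated component means
STD = 1.0

def mixture_score(x, weights):
    """Exact grad_x log p(x) for a 1-D Gaussian mixture with a shared std."""
    log_terms = np.log(weights) - (x[:, None] - MEANS) ** 2 / (2.0 * STD**2)
    log_terms -= log_terms.max(axis=1, keepdims=True)  # numerical stability
    resp = np.exp(log_terms)
    resp /= resp.sum(axis=1, keepdims=True)  # posterior component probabilities
    return np.sum(resp * (-(x[:, None] - MEANS) / STD**2), axis=1)

x = np.array([-6.0, -5.0, -4.5, 4.5, 5.0, 6.0])  # points near either mode
balanced = mixture_score(x, np.array([0.5, 0.5]))
skewed = mixture_score(x, np.array([0.9, 0.1]))
print(np.max(np.abs(balanced - skewed)))
```

Near the modes, the two scores agree to floating-point precision. The weights only affect the score where the components overlap, which here is the low-density region between them.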

It turns out that these three drawbacks can be simultaneously addressed by adding multiple levels of Gaussian noise to the data. Firstly, as the support of a Gaussian noise distribution is the entire space, a perturbed data sample will no longer be confined to a low-dimensional manifold. Secondly, adding large Gaussian noise will increase the area each mode covers in the data distribution, adding more training signal in low density regions. Lastly, adding multiple levels of Gaussian noise with increasing variance will result in intermediate distributions that respect the ground truth mixing coefficients.

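Concretely, Song and Ermon [9] choose a positive sequence of noise levels $\{\sigma_t\}_{t=1}^{T}$ and define a sequence of progressively perturbed data distributions:

$$p_{\sigma_t}(x_t) = \int p(x)\, \mathcal{N}(x_t; x, \sigma_t^2 I)\, dx$$

A neural network $s_\theta(x_t, t)$ is then trained with score matching to learn the score function for all noise levels simultaneously:

$$\arg\min_{\theta} \sum_{t=1}^{T} \lambda(t)\, \mathbb{E}_{p_{\sigma_t}(x_t)}\left[ \left\lVert s_\theta(x_t, t) - \nabla \log p_{\sigma_t}(x_t) \right\rVert_2^2 \right]$$

where λ(t) is a positive weighting function that conditions on noise level t. Note that this objective almost exactly matches the objective derived in Equation 148 to train a Variational Diffusion Model. Furthermore, the authors propose annealed Langevin dynamics sampling as a generative procedure. In this procedure, samples are produced by running Langevin dynamics for each t = T, T − 1, ..., 2, 1 in sequence. The initialization is chosen from some fixed prior (such as uniform), and the simulation at each subsequent noise level starts from the final samples of the previous one. Because the noise levels steadily decrease over timesteps t, and we reduce the step size over time, the samples eventually converge to a true mode. This is directly analogous to the sampling procedure performed in the Markovian HVAE interpretation of a Variational Diffusion Model, where a randomly initialized data vector is iteratively refined over decreasing noise levels.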
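A toy version of annealed Langevin dynamics can be sketched with an analytically known perturbed score. It assumes the data are a 1-D mixture with weights 0.2 and 0.8 at ±4, and that the noise levels σ_t are spaced geometrically from 10 down to 0.1. The step size is scaled proportionally to σ_t², following the schedule of Song and Ermon [9]. The constant 0.05 and the step counts are illustrative assumptions. For comparison, the same total number of steps is spent running plain Langevin dynamics at the smallest noise level only.

```python
import numpy as np

MEANS = np.array([-4.0, 4.0])   # hypothetical mixture component means
WEIGHTS = np.array([0.2, 0.8])  # hypothetical mixing coefficients
DATA_STD = 0.5

def perturbed_score(x, sigma):
    """Exact score of p_sigma (data convolved with N(0, sigma^2)) for the toy mixture."""
    var = DATA_STD**2 + sigma**2
    log_terms = np.log(WEIGHTS) - (x[:, None] - MEANS) ** 2 / (2.0 * var)
    log_terms -= log_terms.max(axis=1, keepdims=True)  # numerical stability
    resp = np.exp(log_terms)
    resp /= resp.sum(axis=1, keepdims=True)
    return np.sum(resp * (-(x[:, None] - MEANS) / var), axis=1)

def annealed_langevin(x, sigmas, eps, n_steps, rng):
    """Run Langevin dynamics at each noise level in turn, largest sigma first."""
    for sigma in sigmas:
        step = eps * sigma**2  # step size shrinks with the noise level
        for _ in range(n_steps):
            z = rng.standard_normal(x.shape)
            x = x + step * perturbed_score(x, sigma) + np.sqrt(2.0 * step) * z
    return x

rng = np.random.default_rng(0)
sigmas = np.geomspace(10.0, 0.1, num=10)
x_init = rng.uniform(-8.0, 8.0, size=5_000)  # uniform prior initialization

annealed = annealed_langevin(x_init, sigmas, eps=0.05, n_steps=200, rng=rng)
plain = annealed_langevin(x_init, sigmas[-1:], eps=0.05, n_steps=2_000, rng=rng)

print("annealed fraction near +4:", np.mean(annealed > 0))
print("plain fraction near +4:", np.mean(plain > 0))
```

In this setup, the annealed chains should end up near +4 roughly 80% of the time, matching the mixing coefficient. Plain Langevin dynamics instead splits them roughly evenly, according to which side of the mode boundary each chain started on.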

Therefore, we have established an explicit connection between Variational Diffusion Models and Score-based Generative Models, both in their training objectives and sampling procedures.

One question is how to naturally generalize diffusion models to an infinite number of timesteps. Under the Markovian HVAE view, this can be interpreted as extending the number of hierarchies to infinity T → ∞. It is clearer to represent this from the equivalent score-based generative model perspective; under an infinite number of noise scales, the perturbation of an image over continuous time can be represented as a stochastic process, and therefore described by a stochastic differential equation (SDE). Sampling is then performed by reversing the SDE, which naturally requires estimating the score function at each continuous-valued noise level [10]. Different parameterizations of the SDE essentially describe different perturbation schemes over time, enabling flexible modeling of the noising procedure [6].
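As an illustration of reverse-SDE sampling, the sketch below assumes a variance-exploding SDE dx = g(t) dw with a geometric noise schedule σ(t). This is one common choice among the parameterizations mentioned above. It also assumes 1-D Gaussian data, so that the score of every marginal p_t is known exactly. The reverse-time SDE dx = −g(t)² ∇ log p_t(x) dt + g(t) dw̄ is integrated from t = 1 to t = 0 with the Euler-Maruyama method. The values of σ_min and σ_max, the data parameters, and the step count are hypothetical. A trained network would replace the exact score. Sampling would also start from a fixed prior rather than from the exact t = 1 marginal used here.

```python
import numpy as np

SIGMA_MIN, SIGMA_MAX = 0.01, 10.0  # hypothetical noise schedule endpoints
DATA_MEAN, DATA_STD = 3.0, 2.0     # hypothetical Gaussian data distribution
LOG_RATIO = np.log(SIGMA_MAX / SIGMA_MIN)

def sigma(t):
    # Geometric schedule: sigma(t) = sigma_min * (sigma_max / sigma_min)^t
    return SIGMA_MIN * (SIGMA_MAX / SIGMA_MIN) ** t

def g_squared(t):
    # g(t)^2 = d[sigma(t)^2]/dt for the variance-exploding SDE dx = g(t) dw
    return 2.0 * LOG_RATIO * sigma(t) ** 2

def marginal_var(t):
    # x(t) = x(0) + N(0, sigma(t)^2 - sigma(0)^2), with x(0) ~ N(DATA_MEAN, DATA_STD^2)
    return DATA_STD**2 + sigma(t) ** 2 - SIGMA_MIN**2

def score(x, t):
    # Exact score of the Gaussian marginal p_t (stand-in for a learned s_theta(x, t))
    return -(x - DATA_MEAN) / marginal_var(t)

def reverse_sde_sample(n_samples, n_steps, rng):
    dt = 1.0 / n_steps
    x = DATA_MEAN + np.sqrt(marginal_var(1.0)) * rng.standard_normal(n_samples)
    for i in range(n_steps, 0, -1):
        t = i * dt
        g2 = g_squared(t)
        z = rng.standard_normal(n_samples)
        # Euler-Maruyama step of dx = -g^2 * score dt + g dw_bar, stepping from t to t - dt
        x = x + g2 * score(x, t) * dt + np.sqrt(g2 * dt) * z
    return x

rng = np.random.default_rng(0)
samples = reverse_sde_sample(20_000, 1_000, rng)
print(samples.mean(), samples.std())
```

The sample mean and standard deviation should come out close to the data's 3.0 and 2.0.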

5. Guidance

So far, we have focused on modeling just the data distribution p(x). However, we are often also interested in learning a conditional distribution p(x|y), which would enable us to explicitly control the data we generate through conditioning information y. This forms the backbone of image super-resolution models such as Cascaded Diffusion Models [18], as well as state-of-the-art image-text models such as DALL-E 2 [19] and Imagen [7].

A natural way to add conditioning information is simply alongside the timestep information, at each iteration. Recall our joint distribution from Equation 32:

$$p(x_{0:T}) = p(x_T) \prod_{t=1}^{T} p_\theta(x_{t-1} \mid x_t)$$

Then, to turn this into a conditional diffusion model, we can simply add arbitrary conditioning information y at each transition step as:

$$p(x_{0:T} \mid y) = p(x_T) \prod_{t=1}^{T} p_\theta(x_{t-1} \mid x_t, y)$$

For example, y could be a text encoding in image-text generation, or a low-resolution image to perform super-resolution on. We are thus able to learn the core neural networks of a VDM as before, by predicting $\hat{x}_\theta(x_t, t, y) \approx x_0$, $\hat{\epsilon}_\theta(x_t, t, y) \approx \epsilon_0$, or $s_\theta(x_t, t, y) \approx \nabla \log p(x_t \mid y)$ for each desired interpretation and implementation.

A caveat of this vanilla formulation is that a conditional diffusion model trained in this way may potentially learn to ignore or downplay any given conditioning information. Guidance is therefore proposed as a way to more explicitly control the amount of weight the model gives to the conditioning information, at the cost of sample diversity. The two most popular forms of guidance are known as Classifier Guidance [10, 20] and Classifier-Free Guidance [21].

5.1. Classifier Guidance

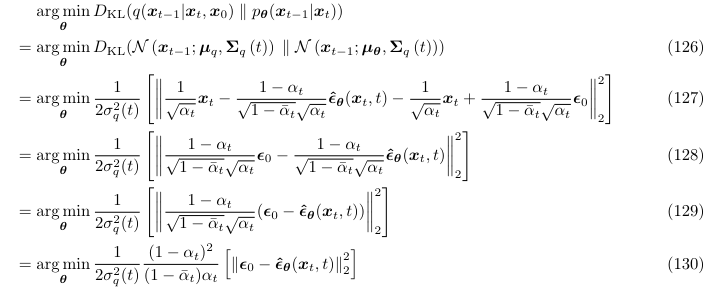

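Let us begin with the score-based formulation of a diffusion model, where our goal is to learn $\nabla \log p(x_t \mid y)$, the score of the conditional model, at arbitrary noise levels t. Recall that ∇ is shorthand for $\nabla_{x_t}$ in the interest of brevity. By Bayes' rule, we can derive the following equivalent form:

$$\begin{aligned} \nabla \log p(x_t \mid y) &= \nabla \log \left( \frac{p(x_t)\, p(y \mid x_t)}{p(y)} \right) \\ &= \nabla \log p(x_t) + \nabla \log p(y \mid x_t) - \nabla \log p(y) \\ &= \nabla \log p(x_t) + \nabla \log p(y \mid x_t) \end{aligned}$$

where we have leveraged the fact that the gradient of log p(y) with respect to $x_t$ is zero.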

Our final derived result can be interpreted as learning an unconditional score function combined with the adversarial gradient of a classifier $p(y \mid x_t)$. Therefore, in Classifier Guidance [10, 20], the score of an unconditional diffusion model is learned as previously derived, alongside a classifier that takes in arbitrary noisy $x_t$ and attempts to predict conditional information y. Then, during the sampling procedure, the overall conditional score function used for annealed Langevin dynamics is computed as the sum of the unconditional score function and the adversarial gradient of the noisy classifier.

In order to introduce fine-grained control to either encourage or discourage the model to consider the conditioning information, Classifier Guidance scales the adversarial gradient of the noisy classifier by a hyperparameter γ. The score function learned under Classifier Guidance can then be summarized as:

$$\nabla \log p(x_t \mid y) = \nabla \log p(x_t) + \gamma \nabla \log p(y \mid x_t)$$

Intuitively, when γ = 0 the conditional diffusion model learns to ignore the conditioning information entirely, and when γ is large the conditional diffusion model learns to produce samples that heavily adhere to the conditioning information. This comes at the cost of sample diversity, as the model would only produce data from which the provided conditioning information is easy to recover, even at noisy levels.
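The decomposition can be verified on a toy problem where everything is analytic. This sketch assumes two equally likely classes with Gaussian class-conditionals N(±2, 1) at a single noise level (hypothetical values). The "noisy classifier" p(y|x_t) is obtained from Bayes' rule. Its gradient is taken by central finite differences, in place of the autodiff a real implementation would use. With γ = 1, the guided score should reproduce the exact conditional score. Larger γ pushes harder toward class y.

```python
import numpy as np

MEANS = np.array([-2.0, 2.0])  # hypothetical class-conditional means of p(x_t | y)
PRIORS = np.array([0.5, 0.5])  # class priors p(y)
VAR = 1.0                      # shared variance at this noise level

def classifier_probs(x):
    """p(y | x_t) for each class via Bayes' rule, shape (n, 2)."""
    log_joint = np.log(PRIORS) - (x[:, None] - MEANS) ** 2 / (2.0 * VAR)
    log_joint -= log_joint.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(log_joint)
    return probs / probs.sum(axis=1, keepdims=True)

def unconditional_score(x):
    # grad log p(x_t) = sum_y p(y | x_t) * grad log p(x_t | y)
    return np.sum(classifier_probs(x) * (-(x[:, None] - MEANS) / VAR), axis=1)

def classifier_grad(x, y, h=1e-5):
    # Central finite difference of log p(y | x_t) with respect to x_t
    up = np.log(classifier_probs(x + h)[:, y])
    down = np.log(classifier_probs(x - h)[:, y])
    return (up - down) / (2.0 * h)

def guided_score(x, y, gamma):
    # Classifier Guidance: unconditional score + gamma * classifier gradient
    return unconditional_score(x) + gamma * classifier_grad(x, y)

x = np.linspace(-4.0, 4.0, 9)
exact_conditional = -(x - MEANS[1]) / VAR  # grad log N(x; 2, 1)
print(np.max(np.abs(guided_score(x, 1, 1.0) - exact_conditional)))
```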

One noted drawback of Classifier Guidance is its reliance on a separately learned classifier. Because the classifier must handle arbitrarily noisy inputs, which most existing pretrained classification models are not optimized to do, it must be learned ad hoc alongside the diffusion model.

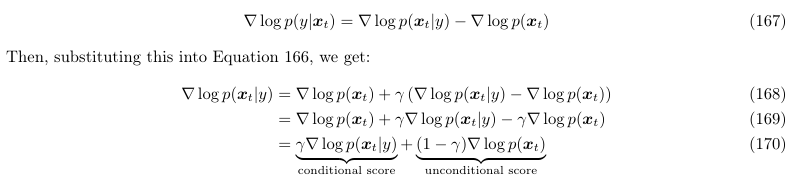

5.2. Classifier-Free Guidance

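In Classifier-Free Guidance [21], the authors ditch the training of a separate classifier model in favor of an unconditional diffusion model and a conditional diffusion model. To derive the score function under Classifier-Free Guidance, we can first rearrange Equation 165 to show that:

$$\nabla \log p(y \mid x_t) = \nabla \log p(x_t \mid y) - \nabla \log p(x_t)$$

Substituting this into the Classifier Guidance score function, we get:

$$\begin{aligned} \nabla \log p(x_t \mid y) &= \nabla \log p(x_t) + \gamma \left( \nabla \log p(x_t \mid y) - \nabla \log p(x_t) \right) \\ &= \gamma \nabla \log p(x_t \mid y) + (1 - \gamma) \nabla \log p(x_t) \end{aligned}$$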

Once again, γ is a term that controls how much our learned conditional model cares about the conditioning information. When γ = 0, the learned conditional model completely ignores the conditioner and learns an unconditional diffusion model. When γ = 1, the model explicitly learns the vanilla conditional distribution without guidance. When γ > 1, the diffusion model not only prioritizes the conditional score function, but also moves in the direction away from the unconditional score function. In other words, it reduces the probability of generating samples that do not use the conditioning information, in favor of samples that explicitly do. This comes at the cost of sample diversity, in exchange for samples that more accurately match the conditioning information.
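A minimal sketch of the guided combination follows. It assumes toy Gaussian scores in place of the two learned models: an unconditional N(0, 2²) and a conditional N(1, 1) (both hypothetical). Because both scores are linear in x, the guided score is also the score of a Gaussian, which makes the effect of γ easy to read off.

```python
import numpy as np

def cfg_score(cond_score, uncond_score, gamma):
    """Classifier-Free Guidance: gamma * s(x_t | y) + (1 - gamma) * s(x_t)."""
    return gamma * cond_score + (1.0 - gamma) * uncond_score

# Toy Gaussian scores (assumed for illustration, not learned models):
# unconditional p(x) = N(0, TAU^2), conditional p(x | y) = N(MU_Y, SIGMA_Y^2)
TAU, MU_Y, SIGMA_Y = 2.0, 1.0, 1.0

x = np.linspace(-3.0, 3.0, 7)
s_uncond = -x / TAU**2
s_cond = -(x - MU_Y) / SIGMA_Y**2

guided = cfg_score(s_cond, s_uncond, gamma=3.0)
slope, intercept = np.polyfit(x, guided, 1)
# The guided score is still linear in x: it is the score of a Gaussian with
# precision -slope and mean intercept / -slope.
print("guided variance:", 1.0 / -slope, "guided mean:", intercept / -slope)
```

With γ = 3, the guided Gaussian has variance 0.4 and mean 1.2, versus variance 1.0 and mean 1.0 for the conditional model. The samples become more concentrated and are pushed further from the unconditional mean, which is the loss of diversity described above.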

Because learning two separate diffusion models is expensive, we can learn both the conditional and unconditional diffusion models together as a single conditional model. The unconditional diffusion model can be queried by replacing the conditioning information with fixed constant values, such as zeros. During training, the conditioning information is randomly replaced with this null value, which is essentially random dropout on the conditioning information. Classifier-Free Guidance is elegant because it gives us greater control over our conditional generation procedure while requiring nothing beyond the training of a single diffusion model.
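The single-model recipe can be sketched as follows, assuming vector-valued conditioning where an all-zeros vector serves as the null input. The dropout probability p_uncond = 0.1 and the linear `toy_model` are hypothetical stand-ins for a real training setup and a trained network s_θ(x_t, t, y). The timestep argument is omitted for brevity. At sampling time, the same model is queried twice, once with y and once with zeros, and the two outputs are combined with the guidance weight γ.

```python
import numpy as np

def drop_conditioning(cond, p_uncond, rng):
    """Training time: zero out each example's conditioning vector with probability p_uncond."""
    keep = rng.random(cond.shape[0]) >= p_uncond
    return cond * keep[:, None]

W = np.array([0.5, -0.25, 1.0])  # hypothetical weights of the toy model

def toy_model(x, cond):
    # Hypothetical stand-in for s_theta(x_t, t, y); with cond = 0 it reduces to
    # the unconditional score -x of N(0, 1).
    return -(x - cond @ W)

def cfg_single_model(model, x, cond, gamma):
    s_cond = model(x, cond)                   # query with the conditioning
    s_uncond = model(x, np.zeros_like(cond))  # query with the null input
    return gamma * s_cond + (1.0 - gamma) * s_uncond

rng = np.random.default_rng(0)
cond = rng.standard_normal((10_000, 3))

# Training time: roughly 10% of examples see the null conditioning
dropped = drop_conditioning(cond, p_uncond=0.1, rng=rng)
frac_dropped = np.mean(np.all(dropped == 0.0, axis=1))

# Sampling time: guided score from the single model
x = rng.standard_normal(10_000)
guided = cfg_single_model(toy_model, x, cond, gamma=2.0)
print(frac_dropped)
```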

6. Closing

Allow us to recapitulate our findings over the course of our explorations. First, we derive Variational Diffusion Models as a special case of a Markovian Hierarchical Variational Autoencoder, where three key assumptions enable tractable computation and scalable optimization of the ELBO. We then prove that optimizing a VDM boils down to learning a neural network to predict one of three potential objectives: the original source image from any arbitrary noisification of it, the original source noise from any arbitrarily noisified image, or the score function of a noisified image at any arbitrary noise level. Then, we dive deeper into what it means to learn the score function, and connect it explicitly with the perspective of Score-based Generative Modeling. Lastly, we cover how to learn a conditional distribution using diffusion models.

In summary, diffusion models have shown incredible capabilities as generative models; indeed, they power the current state-of-the-art models on text-conditioned image generation such as Imagen and DALL-E 2. Furthermore, the mathematics that enable these models are exceedingly elegant. However, there still remain a few drawbacks to consider:

- It is unlikely that this is how we, as humans, naturally model and generate data; we do not generate samples as random noise that we iteratively denoise.

- The VDM does not produce interpretable latents. Whereas a VAE would hopefully learn a structured latent space through the optimization of its encoder, in a VDM the encoder at each timestep is already given as a linear Gaussian model and cannot be optimized flexibly. Therefore, the intermediate latents are restricted as just noisy versions of the original input.

- The latents are restricted to the same dimensionality as the original input, further frustrating efforts to learn meaningful, compressed latent structure.

- Sampling is an expensive procedure, as multiple denoising steps must be run under both formulations. Recall that one of the restrictions is that a large enough number of timesteps T is chosen to ensure the final latent is completely Gaussian noise; during sampling we must iterate over all these timesteps to generate a sample.

As a final note, the success of diffusion models highlights the power of Hierarchical VAEs as a generative model. We have shown that when we generalize to infinite latent hierarchies, even if the encoder is trivial and the latent dimension is fixed and Markovian transitions are assumed, we are still able to learn powerful models of data. This suggests that further performance gains can be achieved in the case of general, deep HVAEs, where complex encoders and semantically meaningful latent spaces can be potentially learned.
