[Flow-GAN] Combining Maximum Likelihood and Adversarial Learning in Generative Models

2024. 5. 18. 15:21

https://arxiv.org/pdf/1705.08868

Abstract

Adversarial learning of probabilistic models has recently emerged as a promising alternative to maximum likelihood. Implicit models such as generative adversarial networks (GAN) often generate better samples compared to explicit models trained by maximum likelihood. Yet, GANs sidestep the characterization of an explicit density which makes quantitative evaluations challenging. To bridge this gap, we propose Flow-GANs, a generative adversarial network for which we can perform exact likelihood evaluation, thus supporting both adversarial and maximum likelihood training. When trained adversarially, Flow-GANs generate high-quality samples but attain extremely poor log-likelihood scores, inferior even to a mixture model memorizing the training data; the opposite is true when trained by maximum likelihood. Results on MNIST and CIFAR-10 demonstrate that hybrid training can attain high held-out likelihoods while retaining visual fidelity in the generated samples.

1. Introduction

Highly expressive parametric models have enjoyed great success in supervised learning, where learning objectives and evaluation metrics are typically well-specified and easy to compute. On the other hand, the learning objective for unsupervised settings is less clear. At a fundamental level, the idea is to learn a generative model that minimizes some notion of divergence with respect to the data distribution. Minimizing the Kullback-Leibler divergence between the data distribution and the model, for instance, is equivalent to performing maximum likelihood estimation (MLE) on the observed data. Maximum likelihood estimators are asymptotically statistically efficient, and serve as natural objectives for learning prescribed generative models (Mohamed and Lakshminarayanan 2016).

In contrast, an alternate principle that has recently attracted much attention is based on adversarial learning, where the objective is to generate data indistinguishable from the training data. Adversarially learned models such as generative adversarial networks (GAN; (Goodfellow et al. 2014)) can sidestep specifying an explicit density for any data point and belong to the class of implicit generative models (Diggle and Gratton 1984).

The lack of an explicit density in GANs is, however, problematic for two reasons. First, several application areas of deep generative models rely on density estimates; for instance, count-based exploration strategies based on density estimation using generative models have recently achieved state-of-the-art performance on challenging reinforcement learning environments (Ostrovski et al. 2017). Second, it makes the quantitative evaluation of the generalization performance of such models challenging. The typical evaluation criteria based on ad-hoc sample quality metrics (Salimans et al. 2016; Che et al. 2017) do not address this issue since it is possible to generate good samples by memorizing the training data, or missing important modes of the distribution, or both (Theis, Oord, and Bethge 2016). Alternatively, density estimates based on approximate inference techniques such as annealed importance sampling (AIS; (Neal 2001; Wu et al. 2017)) and nonparametric methods such as kernel density estimation (KDE; (Parzen 1962; Goodfellow et al. 2014)) are computationally slow and crucially rely on assumptions of a Gaussian observation model for the likelihood that could lead to misleading estimates, as we shall demonstrate in this paper.

To sidestep the above issues, we propose Flow-GANs, a generative adversarial network with a normalizing flow generator. A Flow-GAN generator transforms a prior noise density into a model density through a sequence of invertible transformations. By using an invertible generator, Flow-GANs allow us to tractably evaluate exact likelihoods using the change-of-variables formula and perform exact posterior inference over the latent variables while still permitting efficient ancestral sampling, desirable properties of any probabilistic model that a typical GAN would not provide.

Using a Flow-GAN, we perform a principled quantitative comparison of maximum likelihood and adversarial learning on benchmark datasets viz. MNIST and CIFAR-10. While adversarial learning outperforms MLE on sample quality metrics as expected based on strong evidence in prior work, the log-likelihood estimates of adversarial learning are orders of magnitude worse than those of MLE. The difference is so stark that a simple Gaussian mixture model baseline outperforms adversarially learned models on both sample quality and held-out likelihoods. Our quantitative analysis reveals that the poor likelihoods of adversarial learning can be explained as a result of an ill-conditioned Jacobian matrix for the generator function suggesting a mode collapse, rather than overfitting to the training dataset.

To resolve the dichotomy of perceptually good-looking samples at the expense of held-out likelihoods in the case of adversarial learning (and vice versa in the case of MLE), we propose a hybrid objective that bridges implicit and prescribed learning by augmenting the adversarial training objective with an additional term corresponding to the log-likelihood of the observed data. While the hybrid objective achieves the intended effect of smoothly trading off the two goals in the case of CIFAR-10, it has a regularizing effect on MNIST where it outperforms MLE and adversarial learning on both held-out likelihoods and sample quality metrics. Overall, this paper makes the following contributions:

- We propose Flow-GANs, a generative adversarial network with an invertible generator that can perform efficient ancestral sampling and exact likelihood evaluation.

- We propose a hybrid learning objective for Flow-GANs that attains good log-likelihoods and generates high-quality samples on MNIST and CIFAR-10 datasets.

- We demonstrate the limitations of AIS and KDE for log-likelihood evaluation and ranking of implicit models.

- We analyze the singular value distribution for the Jacobian of the generator function to explain the low log-likelihoods observed due to adversarial learning.

2. Preliminaries

We begin with a review of maximum likelihood estimation and adversarial learning in the context of generative models. For ease of presentation, all distributions are w.r.t. any arbitrary x ∈ R^d, unless otherwise specified. We use upper-case to denote probability distributions and assume they all admit absolutely continuous densities (denoted by the corresponding lower-case notation) on a reference measure dx.

Consider the following setting for learning generative models. Given some data X = {x_i ∈ R^d}, i = 1, ..., m, sampled i.i.d. from an unknown probability density pdata, we are interested in learning a probability density pθ where θ denotes the parameters of a model. Given a parametric family of models M, the typical approach to learn θ ∈ M is to minimize a notion of divergence between Pdata and Pθ. The choice of divergence and the optimization procedure dictate learning, leading to the following two objectives.

2.1. Maximum likelihood estimation

In maximum likelihood estimation (MLE), we minimize the Kullback-Leibler (KL) divergence between the data distribution and the model distribution. Formally, the learning objective can be expressed as:
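In LaTeX, and reconstructed from the definitions above (the argument order of the KL divergence and the constraint θ ∈ M follow the text; the exact typesetting is an assumption), this objective reads:

```latex
\min_{\theta \in \mathcal{M}} \; \mathrm{KL}\left(P_{\mathrm{data}}, P_\theta\right)
  = \mathbb{E}_{x \sim P_{\mathrm{data}}}\left[ \log \frac{p_{\mathrm{data}}(x)}{p_\theta(x)} \right]
```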

Since pdata is independent of θ, the above optimization problem can be equivalently expressed as:
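Expanding the KL divergence as E[log pdata(x)] − E[log pθ(x)] under x ∼ Pdata and dropping the first term, which does not depend on θ, gives the form referred to as Eq. (1). The LaTeX below is a reconstruction from the surrounding definitions:

```latex
\max_{\theta \in \mathcal{M}} \; \mathbb{E}_{x \sim P_{\mathrm{data}}}\left[ \log p_\theta(x) \right] \tag{1}
```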

Hence, evaluating the learning objective for MLE in Eq. (1) requires the ability to evaluate the model density pθ(x). Models that provide an explicit characterization of the likelihood function are referred to as prescribed generative models (Mohamed and Lakshminarayanan 2016).

2.2. Adversarial learning

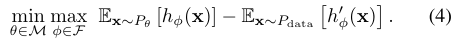

A generative model can be learned to optimize divergence notions beyond the KL divergence. A large family of divergences can be conveniently expressed as:

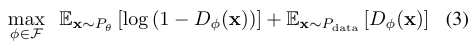

where F denotes a set of parameters, and hφ and h'φ are appropriate real-valued functions parameterized by φ. Different choices of F, hφ and h'φ can lead to a variety of f-divergences such as the Jensen-Shannon divergence and integral probability metrics such as the Wasserstein distance. For instance, the GAN objective proposed by Goodfellow et al. (2014) can also be cast in the form of Eq. (2) below:
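For reference, the general form (Eq. 2) and its GAN instance (Eq. 3) can be written in LaTeX as follows. This is a reconstruction from the definitions in this section; the sign convention of the general form is an assumption, under which the GAN case corresponds to hφ(x) = log(1 − Dφ(x)) and h'φ(x) = −log Dφ(x):

```latex
% General form, Eq. (2)
\max_{\phi \in \mathcal{F}} \;
  \mathbb{E}_{x \sim P_\theta}\left[ h_\phi(x) \right]
  - \mathbb{E}_{x \sim P_{\mathrm{data}}}\left[ h'_\phi(x) \right] \tag{2}

% GAN instance, Eq. (3)
\max_{\phi \in \mathcal{F}} \;
  \mathbb{E}_{x \sim P_\theta}\left[ \log\left(1 - D_\phi(x)\right) \right]
  + \mathbb{E}_{x \sim P_{\mathrm{data}}}\left[ \log D_\phi(x) \right] \tag{3}
```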

where φ denotes the parameters of a neural network function Dφ. We refer the reader to (Nowozin, Cseke, and Tomioka 2016; Mescheder, Nowozin, and Geiger 2017b) for further details on other possible choices of divergences. Importantly, a Monte Carlo estimate of the objective in Eq. (2) requires only samples from the model. Hence, any model that allows tractable sampling can be used to evaluate the following minimax objective:
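Wrapping the inner maximization over φ in an outer minimization over the model parameters θ gives the minimax form referred to as Eq. (4). The LaTeX below is a reconstruction that reuses hφ and h'φ as defined above, with the same assumed sign convention:

```latex
\min_{\theta} \max_{\phi \in \mathcal{F}} \;
  \mathbb{E}_{x \sim P_\theta}\left[ h_\phi(x) \right]
  - \mathbb{E}_{x \sim P_{\mathrm{data}}}\left[ h'_\phi(x) \right] \tag{4}
```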

As a result, even differentiable implicit models which do not provide a characterization of the model likelihood but allow tractable sampling can be learned adversarially by optimizing minimax objectives of the form given in Eq. (4).
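The point that only samples are needed can be illustrated with a minimal NumPy sketch. It estimates the inner expression E[h(x)] under the model minus E[h'(x)] under the data for a fixed pair of functions; it does not perform the maximization over φ. The function names, the toy "implicit model" (Gaussian noise plus a shift), and the linear choice of h and h' are all assumptions made for illustration.

```python
import numpy as np


def mc_objective_estimate(model_samples, data_samples, h, h_prime):
    """Monte Carlo estimate of E_{x~P_theta}[h(x)] - E_{x~P_data}[h'(x)].

    Only samples are used; the model density is never evaluated.
    """
    return float(np.mean(h(model_samples)) - np.mean(h_prime(data_samples)))


def sample_toy_model(rng, n, shift):
    """Hypothetical implicit model: transform noise z into a sample z + shift."""
    z = rng.standard_normal(n)
    return z + shift


rng = np.random.default_rng(0)
data = rng.standard_normal(5000)  # stand-in for training data


def identity(x):
    """Illustrative fixed choice for both h and h' (a linear test function)."""
    return x


# With h = h' = identity, the estimate reduces to a difference of sample means.
estimate_shifted = mc_objective_estimate(
    sample_toy_model(rng, 5000, shift=2.0), data, identity, identity
)
estimate_matched = mc_objective_estimate(
    sample_toy_model(rng, 5000, shift=0.0), data, identity, identity
)
print(estimate_shifted, estimate_matched)
```

With this particular test function the estimate is close to the shift between the two distributions, so it is near zero when the toy model matches the data.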

2.3. Adversarial learning of latent variable models

From a statistical perspective, maximum likelihood estimators are statistically efficient asymptotically (under some conditions) and hence minimizing the KL divergence is a natural objective for many prescribed models (Huber 1967). However, not all models allow for a well-defined, tractable, and easy-to-optimize likelihood.

For example, exact likelihood evaluation and sampling are tractable in directed, fully observed models such as Bayesian networks and autoregressive models (Larochelle and Murray 2011; Oord, Kalchbrenner, and Kavukcuoglu 2016). Hence, they are usually trained by maximum likelihood. Undirected models, on the other hand, provide only unnormalized likelihoods and are sampled from using expensive Markov chains. Hence, they are usually learned by approximating the likelihood using methods such as contrastive divergence (Carreira-Perpinan and Hinton 2005) and pseudolikelihood (Besag 1977). The likelihood is generally intractable to compute in latent variable models (even directed ones) as it requires marginalization. These models are typically learned by optimizing a stochastic lower bound to the log-likelihood using variational Bayes approaches (Kingma and Welling 2014).

Directed latent variable models allow for efficient ancestral sampling and hence these models can also be trained using other divergences, e.g., adversarially (Mescheder, Nowozin, and Geiger 2017a; Mao et al. 2017; Song, Zhao, and Ermon 2017). A popular class of latent variable models learned adversarially consists of generative adversarial networks (GAN; (Goodfellow et al. 2014)). GANs comprise a pair of generator and discriminator networks. The generator Gθ : R^k → R^d is a deterministic function differentiable with respect to the parameters θ. The function takes as input a source of randomness z ∈ R^k sampled from a tractable prior density p(z) and transforms it to a sample Gθ(z) through a forward pass. Evaluating likelihoods assigned by a GAN is challenging because the model density pθ is specified only implicitly using the prior density p(z) and the generator function Gθ. In fact, the likelihood for any data point is ill-defined (with respect to the Lebesgue measure over R^d) if the prior distribution over z is defined over a support smaller than the support of the data distribution.
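A small numerical illustration of the last point, under assumed toy settings (a linear "generator" with k = 1 and d = 2, and made-up weights): every generated sample falls on a single line in the plane, a set of zero area, so no density with respect to the Lebesgue measure on R^2 exists.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical linear generator G(z) = W z mapping R^1 -> R^2.
W = np.array([[1.0], [2.0]])
z = rng.standard_normal((1000, 1))  # latent samples, k = 1
x = z @ W.T                         # generated samples in R^2, d = 2

# The sample matrix has rank 1: all points lie on the line x2 = 2 * x1.
sample_rank = np.linalg.matrix_rank(x, tol=1e-8)
all_on_line = bool(np.allclose(x[:, 1], 2.0 * x[:, 0]))
print(sample_rank, all_on_line)
```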

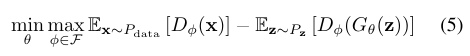

GANs are typically learned adversarially with the help of a discriminator network. The discriminator Dφ : R^d → R is another real-valued function that is differentiable with respect to a set of parameters φ. Given the discriminator function, we can express the functions h and h' in Eq. (4) as compositions of Dφ with divergence-specific functions. For instance, the Wasserstein GAN (WGAN; (Arjovsky, Chintala, and Bottou 2017)) optimizes the following objective:
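In LaTeX, the standard WGAN objective can be written as below. This is a reconstruction, with Pz used as assumed notation for the prior distribution over z:

```latex
\min_{\theta} \max_{\phi \in \mathcal{F}} \;
  \mathbb{E}_{x \sim P_{\mathrm{data}}}\left[ D_\phi(x) \right]
  - \mathbb{E}_{z \sim P_z}\left[ D_\phi\left(G_\theta(z)\right) \right] \tag{5}
```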

where F is defined such that Dφ is 1-Lipschitz. Empirically, GANs generate excellent samples of natural images (Radford, Metz, and Chintala 2015), audio signals (Pascual, Bonafonte, and Serra 2017), and of behaviors in imitation learning (Ho and Ermon 2016; Li, Song, and Ermon 2017).

3. Flow Generative Adversarial Networks

As discussed above, generative adversarial networks can tractably generate high-quality samples but have intractable or ill-defined likelihoods. Monte Carlo techniques such as AIS and nonparametric density estimation methods such as KDE get around this by assuming a Gaussian observation model pθ(x|z) for the generator. This assumption alone is not sufficient for quantitative evaluation since the marginal likelihood of the observed data, pθ(x) = ∫ pθ(x, z) dz, would in this case be intractable as it requires integrating over all the latent factors of variation. This would then require approximate inference (e.g., Monte Carlo or variational methods), which in itself is a computational challenge for high-dimensional distributions. To circumvent these issues, we propose flow generative adversarial networks (Flow-GAN).
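To make the Gaussian observation assumption concrete, the sketch below estimates log-densities with an isotropic Gaussian KDE centered at generated samples. The function name, the bandwidth `sigma`, and the stand-in "generated" samples are assumptions for illustration, not the evaluation protocol used in the paper.

```python
import numpy as np


def kde_log_likelihood(x, generated, sigma):
    """Log-density of each row of x under a Gaussian KDE over `generated`.

    Each generated sample is the mean of an isotropic Gaussian with
    standard deviation `sigma`; the estimate averages these kernels.
    """
    x = np.atleast_2d(x)                  # shape (m, d)
    generated = np.atleast_2d(generated)  # shape (n, d)
    n, d = generated.shape
    sq_dist = ((x[:, None, :] - generated[None, :, :]) ** 2).sum(axis=-1)
    log_kernel = -0.5 * sq_dist / sigma**2 - 0.5 * d * np.log(2 * np.pi * sigma**2)
    # Stable log-mean-exp over the n kernel centers.
    peak = log_kernel.max(axis=1, keepdims=True)
    return peak[:, 0] + np.log(np.exp(log_kernel - peak).sum(axis=1)) - np.log(n)


rng = np.random.default_rng(0)
generated = rng.standard_normal((500, 2))   # stand-in for generator samples
held_out = rng.standard_normal((100, 2))    # stand-in for held-out data

# The estimate depends on the assumed bandwidth, which is a free choice.
for sigma in (0.05, 0.5, 2.0):
    print(sigma, kde_log_likelihood(held_out, generated, sigma).mean())
```

The loop at the end shows that the average estimate changes with the bandwidth, which is one reason such estimates need to be read with care.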

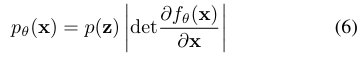

A Flow-GAN consists of a pair of generator-discriminator networks with the generator specified as a normalizing flow model (Dinh, Krueger, and Bengio 2014). A normalizing flow model specifies a parametric transformation from a prior density p(z) : R^d → R_0^+ to another density over the same space, pθ(x) : R^d → R_0^+, where R_0^+ is the set of nonnegative reals. The generator transformation Gθ : R^d → R^d is invertible, such that there exists an inverse function fθ = Gθ^{-1}. Using the change-of-variables formula and letting z = fθ(x), we have:
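Written in LaTeX with the symbols defined above, the change-of-variables formula referred to as Eq. (6) is:

```latex
p_\theta(x) = p(z) \left| \det \frac{\partial f_\theta(x)}{\partial x} \right|,
  \qquad z = f_\theta(x) \tag{6}
```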

where ∂fθ(x) / ∂x denotes the Jacobian of fθ at x. The above formula can be applied recursively over compositions of many invertible transformations to produce a complex final density. Hence, we can evaluate and optimize for the log-likelihood assigned by the model to a data point as long as the prior density is tractable and the determinant of the Jacobian of fθ evaluated at x can be efficiently computed.
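As a minimal sketch of this computation, consider a toy affine generator G(z) = A z + b with a standard normal prior. The class name, the choice of a lower-triangular A, and the parameter values are assumptions for illustration; the generators in the paper are deep networks, not a single affine map.

```python
import numpy as np


def standard_normal_logpdf(z):
    """Log-density of a standard normal prior, evaluated row-wise."""
    d = z.shape[-1]
    return -0.5 * (z**2).sum(axis=-1) - 0.5 * d * np.log(2 * np.pi)


class AffineFlow:
    """Toy invertible generator G(z) = A z + b with lower-triangular A."""

    def __init__(self, A, b):
        self.A = np.asarray(A, dtype=float)
        self.b = np.asarray(b, dtype=float)

    def generate(self, z):
        """Ancestral sampling step: map latent rows z to data rows x."""
        return z @ self.A.T + self.b

    def inverse(self, x):
        """f(x) = A^{-1} (x - b), the inverse of the generator."""
        return np.linalg.solve(self.A, (x - self.b).T).T

    def log_likelihood(self, x):
        """Exact log p(x) = log p(z) + log |det df/dx| with z = f(x)."""
        z = self.inverse(x)
        # df/dx = A^{-1}; for triangular A, log|det A^{-1}| = -sum(log|diag(A)|).
        log_abs_det = -np.log(np.abs(np.diag(self.A))).sum()
        return standard_normal_logpdf(z) + log_abs_det


flow = AffineFlow(A=[[2.0, 0.0], [0.5, 0.5]], b=[1.0, -1.0])
rng = np.random.default_rng(0)
z = rng.standard_normal((5, 2))
x = flow.generate(z)
print(flow.log_likelihood(x))
```

For this affine case the model density is the Gaussian with mean b and covariance A Aᵀ, which gives an independent way to check the result.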

Evaluating the likelihood assigned by a Flow-GAN model in Eq. (6) requires overcoming two major challenges. First, requiring the generator function Gθ to be invertible constrains the dimensionality of the latent variable z to match that of the data x. In addition, the transformations between the various layers of the generator must be invertible so that their overall composition results in an invertible Gθ. Second, the determinant of the Jacobian can be computationally expensive to evaluate for high-dimensional data. If the transformations are designed such that the Jacobian is an upper or lower triangular matrix, then the determinant can be easily evaluated as the product of its diagonal entries. We consider two such families of transformations.
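One well-known way to obtain a triangular Jacobian is an affine coupling transformation, sketched below in NumPy. The specific parameterization (a tanh-bounded log-scale and a linear shift, with weight matrices `w_s` and `w_t`) is an assumption chosen to keep the example short and is not claimed to be the architecture used in the paper.

```python
import numpy as np


def coupling_forward(x, w_s, w_t):
    """Affine coupling map x -> z and its log|det Jacobian|.

    The first half of x passes through unchanged; the second half is
    scaled and shifted by functions of the first half only.
    """
    d = x.shape[-1] // 2
    x1, x2 = x[..., :d], x[..., d:]
    log_s = np.tanh(x1 @ w_s)  # log-scale depends only on x1
    t = x1 @ w_t               # shift depends only on x1
    z2 = x2 * np.exp(log_s) + t
    # Jacobian is lower triangular with diagonal (1, ..., 1, exp(log_s)).
    log_det = log_s.sum(axis=-1)
    return np.concatenate([x1, z2], axis=-1), log_det


def coupling_inverse(z, w_s, w_t):
    """Exact inverse of coupling_forward."""
    d = z.shape[-1] // 2
    z1, z2 = z[..., :d], z[..., d:]
    log_s = np.tanh(z1 @ w_s)
    t = z1 @ w_t
    return np.concatenate([z1, (z2 - t) * np.exp(-log_s)], axis=-1)


def numerical_jacobian(fn, x, eps=1e-6):
    """Central-difference Jacobian of fn at a 1-D point x (for checking only)."""
    n = x.size
    jac = np.zeros((n, n))
    for j in range(n):
        step = np.zeros(n)
        step[j] = eps
        jac[:, j] = (fn(x + step) - fn(x - step)) / (2 * eps)
    return jac


rng = np.random.default_rng(0)
w_s = rng.standard_normal((2, 2))  # hypothetical scale weights
w_t = rng.standard_normal((2, 2))  # hypothetical shift weights
x = rng.standard_normal(4)

z, log_det = coupling_forward(x, w_s, w_t)
jac = numerical_jacobian(lambda v: coupling_forward(v, w_s, w_t)[0], x)
print(log_det, np.linalg.slogdet(jac)[1])
```

The cheap sum of log-scales agrees with the log-determinant of the full numerical Jacobian, which costs far more to compute in high dimensions.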

4. Hybrid learning of Flow-GANs

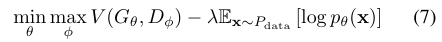

In the previous section, we observed that adversarially learned Flow-GAN models attain poor held-out log-likelihoods. This makes it challenging to use such models for applications requiring density estimation. On the other hand, Flow-GANs learned using MLE are “mode covering” but do not generate high quality samples. With a Flow-GAN, it is possible to trade off the two goals by combining the learning objectives corresponding to both these inductive principles. Without loss of generality, let V (Gθ, Dφ) denote the minimax objective of any GAN model (such as WGAN). The hybrid objective of a Flow-GAN can be expressed as:
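In LaTeX, reconstructed from the description in this section (an adversarial term plus a log-likelihood term weighted by a hyperparameter λ), the hybrid objective referred to as Eq. (7) reads:

```latex
\min_{\theta} \max_{\phi} \;
  V\left(G_\theta, D_\phi\right)
  - \lambda \, \mathbb{E}_{x \sim P_{\mathrm{data}}}\left[ \log p_\theta(x) \right] \tag{7}
```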

where λ ≥ 0 is a hyperparameter for the algorithm. By varying λ, we can interpolate between plain adversarial training (λ = 0) and MLE (very high λ).
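The role of λ can be sketched with a small helper that combines the two terms on the generator side. The function name and the input numbers are hypothetical placeholders, not values from the paper; in practice the adversarial value and the per-example log-likelihoods would come from the discriminator and the flow generator on a minibatch.

```python
import numpy as np


def hybrid_generator_objective(adversarial_value, log_likelihoods, lam):
    """Quantity the generator minimizes: V(G, D) - lam * mean log-likelihood."""
    if lam < 0:
        raise ValueError("lam must be non-negative")
    return float(adversarial_value - lam * np.mean(log_likelihoods))


# Illustrative placeholder inputs (not experimental results).
adv_value = 0.3
batch_log_liks = np.array([-2.0, -4.0])

pure_adversarial = hybrid_generator_objective(adv_value, batch_log_liks, lam=0.0)
hybrid = hybrid_generator_objective(adv_value, batch_log_liks, lam=0.5)
print(pure_adversarial, hybrid)
```

Setting `lam=0.0` recovers the plain adversarial objective, while larger values make the log-likelihood term dominate.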

We summarize the results from MLE, ADV, and Hybrid for log-likelihood and sample quality evaluation in Table 1 and Table 2 for MNIST and CIFAR-10 respectively. The tables report the test log-likelihoods corresponding to the best validated MLE and ADV models and the highest MODE/Inception scores observed during training. The samples generated by models with the best MODE/Inception scores for each objective are shown in Figure 1c.

While the results on CIFAR-10 are along expected lines, the hybrid objective interestingly outperforms MLE and ADV on both test log-likelihoods and sample quality metrics in the case of MNIST. One potential explanation for this is that the ADV objective can regularize MLE to generalize to the test set and in turn, the MLE objective can stabilize the optimization of the ADV objective. Hence, the hybrid objective in Eq. (7) can smoothly balance the two objectives using the tunable hyperparameter λ, and in some cases such as MNIST, the performance on both tasks could improve as a result of the hybrid objective.

6. Discussion

Any model which allows for efficient likelihood evaluation and sampling can be trained using maximum likelihood and adversarial learning. This line of reasoning has been explored to some extent in prior work that combines the objectives of prescribed latent variable models such as VAEs (maximizing an evidence lower bound on the data) with adversarial learning (Larsen et al. 2015; Mescheder, Nowozin, and Geiger 2017a; Srivastava et al. 2017). However, the benefits of such procedures do not come for “free” since we still need some form of approximate inference to get a handle on the log-likelihoods. This could be expensive; for instance, combining a VAE with a GAN introduces an additional inference network that increases the overall model complexity.

Our approach sidesteps the additional complexity due to approximate inference by considering a normalizing flow model. The trade-off made by a normalizing flow model is that the generator function needs to be invertible while other generative models such as VAEs have no such requirement. On the positive side, we can tractably evaluate exact log-likelihoods assigned by the model for any data point. Normalizing flow models have been previously used in the context of maximum likelihood estimation of fully observed and latent variable models (Dinh, Krueger, and Bengio 2014; Rezende and Mohamed 2015; Kingma, Salimans, and Welling 2016; Dinh, Sohl-Dickstein, and Bengio 2017).

The low dimensional support of the distributions learned by adversarial learning often manifests as lack of sample diversity and is referred to as mode collapse. In prior work, mode collapse is detected based on visual inspection or heuristic techniques (Goodfellow 2016; Arora and Zhang 2017). Techniques for avoiding mode collapse explicitly focus on stabilizing GAN training such as (Metz et al. 2016; Che et al. 2017; Mescheder, Nowozin, and Geiger 2017b) rather than quantitative methods based on likelihoods.

7. Conclusion

As an attempt to more quantitatively evaluate generative models, we introduced Flow-GAN. It is a generative adversarial network which allows for tractable likelihood evaluation, exactly like in a flow model. Since it can be trained both adversarially (like a GAN) and in terms of MLE (like a flow model), we can quantitatively evaluate the trade-offs involved. We observe that adversarial learning assigns very low likelihoods to both training and validation data while generating superior quality samples. To put this observation in perspective, we demonstrate how a naive Gaussian mixture model can outperform adversarially learned models on both log-likelihood estimates and sample quality metrics. Quantitative evaluation methods based on AIS and KDE fail to detect this behavior and can be poor approximations of the true log-likelihood (at least for the models we considered).

Analyzing the Jacobian of the generator provides insights into the contrast between maximum likelihood estimation and adversarial learning. The latter has a tendency to learn distributions of low support, which can lead to low likelihoods. To correct for this behavior, we proposed a hybrid objective function which involves loss terms corresponding to both MLE and adversarial learning. The use of such models in applications requiring both density estimation and sample generation is an exciting direction for future work.
