-

IMDIFFUSION: Imputated Diffusion Models for Multivariate Time Series Anomaly DetectionGenerative Model/Generative Model 2024. 5. 20. 06:51

https://arxiv.org/pdf/2307.00754

https://github.com/17000cyh/IMDiffusion.git

ABSTRACT

Anomaly detection in multivariate time series data is of paramount importance for ensuring the efficient operation of large-scale systems across diverse domains. However, accurately detecting anomalies in such data poses significant challenges due to the need for precise modeling of complex multivariate time series data. Existing approaches, including forecasting and reconstruction-based methods, struggle to address these challenges effectively. To overcome these limitations, we propose a novel anomaly detection framework named ImDiffusion, which combines time series imputation and diffusion models to achieve accurate and robust anomaly detection. The imputation-based approach employed by ImDiffusion leverages the information from neighboring values in the time series, enabling precise modeling of temporal and inter-correlated dependencies, reducing uncertainty in the data, thereby enhancing the robustness of the anomaly detection process. ImDiffusion further leverages diffusion models as time series imputers to accurately capture complex dependencies. We leverage the step-by-step denoised outputs generated during the inference process to serve as valuable signals for anomaly prediction, resulting in improved accuracy and robustness of the detection process.

We evaluate the performance of ImDiffusion via extensive experiments on benchmark datasets. The results demonstrate that our proposed framework significantly outperforms state-of-the-art approaches in terms of detection accuracy and timeliness. ImDiffusion is further integrated into the real production system in Microsoft and observes a remarkable 11.4% increase in detection F1 score compared to the legacy approach. To the best of our knowledge, ImDiffusion represents a pioneering approach that combines imputation-based techniques with time series anomaly detection, while introducing the novel use of diffusion models to the field.

1. INTRODUCTION

The efficient operation of large-scale systems or entities heavily relies on the generation and analysis of extensive and highdimensional time series data. These data serve as a vital source of information for continuous monitoring and ensuring the optimal functioning of these systems. However, within these systems, various abnormal events may occur, resulting in deviations from the expected downstream performance of numerous applications [4, 31, 60]. These anomalous events can encompass a broad spectrum of issues, including production faults [12, 44], delivery bottlenecks [28], system defects [74, 76], or irregular heart rhythms [37]. When different time series dimensions are combined, they form a multivariate time series (MTS). The detection of anomalies in MTS data has emerged as a critical task across diverse domains. Industries spanning manufacturing, finance, and healthcare monitoring, have recognized the importance of anomaly detection in maintaining operational efficiency and minimizing disruptions [29, 60], and the field of MTS anomaly detection has garnered significant attention from both academia and industry [2, 5, 7, 9, 43].

However, achieving accurate anomaly detection on MTS data is not straightforward, as it necessitates precise modeling of time series data [4, 47, 78]. The complexity of modern large-scale systems introduces additional challenges, as their performance is monitored by multiple sensors, generating heterogeneous time series data that encompasses multidimensional, intricate, and interrelated temporal information [38, 46]. Modeling complex correlations like these requires a high level of capability from the model. Furthermore, time series data often displays significant variability [45], leading to increased levels of uncertainty. This variability can sometimes result in erroneous identification of anomalies. This adds complexity to the anomaly detection process, as the detector must effectively differentiate between stochastic anomalies and other variations to achieve robust detection performance [66, 87].

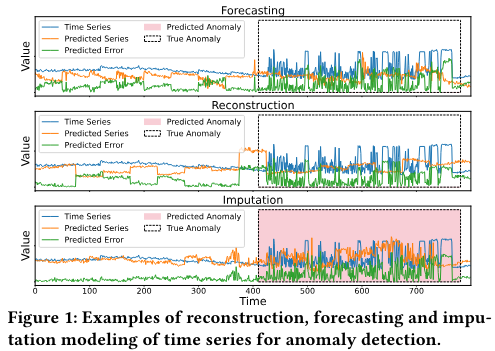

The aforementioned challenges have spurred the emergence of numerous self-supervised learning solutions aimed at automating anomaly detection. Recent methods can be classified into various categories [60], where forecasting [51, 88] and reconstruction-based [46, 70, 85] approaches have been most widely employed. The former leverages past information to predict future values in the time series and utilizes the prediction error as an indicator for anomaly detection. However, future time series values can exhibit high levels of uncertainty and variability, making them inherently challenging to accurately predict in complex real-world systems. Relying solely on forecasting-based methods may have a detrimental impact on anomaly detection performance [38, 48]. On the other hand, reconstruction-based methods encode entire time sequences into an embedding space. Anomaly labels are then inferred based on the reconstruction error. Since these approaches operate and need to reconstruct the entire time series, their performance heavily relies on the capabilities of the reconstruction model [46]. In cases where the original data exhibit heterogeneity, complexity, and interdependencies, reconstruction-based methods may encounter challenges in achieving low overall reconstruction error and variance [3, 36]. As a result, the anomaly detection performance of such approaches may be sub-optimal. Given these considerations, there is a clear need to rethink and enhance forecasting and reconstruction approaches to achieve accurate and robust anomaly detection.

To address these challenges and overcome the limitations of existing approaches, we propose a novel anomaly detector named ImDiffusion. This detector combines the use of time series imputation [18] and diffusion models [24] to achieve accurate and robust anomaly detection. ImDiffusion employs dedicated grating data masking into the time series data, creating unobserved data points. It then utilizes diffusion models to accurately model the MTS and impute the missing values caused by the data masking. The imputation error is subsequently used as an indicator to determine the anomalies. The imputation-based approach employed by ImDiffusion offers distinct advantages over forecasting and reconstruction methods. Firstly, it leverages neighboring values in the time series as additional conditional information, enabling a more accurate modeling of the temporal and inter-correlated dependencies present in MTS. Secondly, the reference information from neighboring values helps to reduce uncertainty in predictions, and thereby enhancing the robustness of the detection process.

Fig. 1 presents an example in which forecasting, reconstruction, and imputation methods are employed to predict a time series using diffusion models. The forecasting method employs a 50-step MTS for observation and predicts the subsequent 50-step MTS. The reconstruction method recovers the entire 100-step MTS. Meanwhile, the imputation method is carried out using the grating data masking. Observe that while all approaches yield comparable errors during the outlier period, the imputation approach achieves a lower error within the normal range due to its superior MTS modeling ability. This attribute enables it to establish a more distinct decision boundary for anomaly identification. As a result, only the imputation method successfully identifies the period of anomaly. We therefore employ time series imputation for accurate self-supervised modeling of time series, which forms the foundation of our proposed ImDiffusion.

To enhance the performance of anomaly detection, ImDiffusion leverages the exceptional unsupervised modeling capability of diffusion models [24] for imputation. Diffusion models have demonstrated superior performance in unsupervised image generation, surpassing traditional generative models such as GANs [21] and VAEs [33]. They have also been successfully applied to model complex temporal and inter-metric dependencies in MTS, showcasing remarkable abilities in forecasting [58] and imputation [68]. We employ a dedicated diffusion model as the time series imputer, replacing traditional forecasting and reconstruction models. This brings several advantages to anomaly detection, namely (i) it enables better modeling of complex correlations within MTS data; (ii) it allows for stochastic modeling of time series through the noise/denoising processes involved in the imputation; (iii) the step-by-step outputs generated during the imputation inference serve as additional signals for determining the anomaly labels in an ensemble manner. These unique advantages of diffusion models enable precise capturing of the complex dependencies and inherent stochasticity present in time series data, and further enhance the robustness of anomaly detection through ensembling techniques.

By integrating imputation and diffusion models, our proposed ImDiffusion achieves exceptional accuracy and timeliness in anomaly detection for both offline and online evaluation in real production. Overall, this paper presents the following contributions:

- We introduce IMDIFFUSION, a novel framework based on the imputed diffusion model, which accurately captures the inherent dependency and stochasticity of MTS data, leading to precise and robust anomaly detection.

- We develop a grating masking strategy to create missing values in the data for imputation. This strategy enhances the decision boundary between normal and abnormal data, resulting in improved anomaly detection performance.

- IMDIFFUSION leverages the step-by-step denoised outputs of the diffusion model’s unique inference process as additional signals for anomaly prediction in an ensemble voting manner. This approach further enhances inference accuracy and robustness.

- We conduct extensive experiments comparing ImDiffusion with 10 state-of-the-art anomaly detection baselines on 6 datasets. Results show that ImDiffusion significantly outperforms other approaches in terms of both detection accuracy and timeliness.

- We integrate ImDiffusion in the real production of the Microsoft email delivery microservice system. The framework exhibits an 11.4% higher detection accuracy compared to the legacy online approach, which significantly improves the system’s reliability.

To the best of our knowledge, ImDiffusion is the pioneering approach that combines imputation-based techniques with MTS anomaly detection, and it pushes the methodology boundaries by first applying diffusion models to this field.

2. RELATED WORK

2.1. Time Series Anomaly Detection

Time series anomaly detection is an important problem that has received significant attention from both the industrial and research communities [8, 52, 53, 60, 67, 69, 74, 84]. Approaches for this area can be categorized into five main classes based on the underlying detection method [60]. These categories include: (i) forecasting methods (e.g., [51, 88]), which predict future values to identify anomalies; (ii) reconstruction methods (e.g., [11, 70, 85]), which reconstruct the time series and identify anomalies based on the reconstruction error; (iii) encoding methods (e.g., [6]), which encode the time series into a different representation and detect anomalies using this encoding; (iv) distance methods (e.g., [9, 10]), which measure the dissimilarity between time series and identify anomalies based on the distance; (v) distribution methods (e.g., [20, 25]), which model the distribution of the time series data and detect anomalies based on deviations from the expected distribution; and (vi) isolation tree methods (e.g., [13, 41]), which use tree-based structures to isolate anomalies.

Among the various approaches explored in the literature, forecasting and reconstruction methods have gained significant popularity due to their reported effectiveness. For instance, Omnianomaly [66] employs a combination of GRU and VAE to learn robust representations of time series. It also utilizes the PeaksOver-Threshold (POT) method to dynamically select appropriate thresholds for anomaly detection. MTAD-GAT [88] incorporates a graph-attention network to capture both feature and temporal correlations within time series data. By combining forecasting and reconstruction models, it achieves improved anomaly detection performance. MAD-GAN [36] takes advantage of the discriminator’s loss in a GAN as an additional indicator for detecting anomalies. More recently, TranAD [70] introduces attention mechanisms in transformer models and incorporates adversarial training to jointly enhance the accuracy of anomaly detection.

The ImDiffusion introduced in this paper distinguishes from previous approaches by employing time series imputation to improve time series modeling and enhance anomaly detection performance.

2.2. Diffusion Model

Recently, diffusion models [40, 77] have garnered increasing attention in the field of AI generated content [57, 59]. While their potential in the domain of time series modeling and anomaly detection is relatively new, researchers have begun to explore their application in these areas. For instance, CSDI [68] utilizes a probabilistic diffusion model for time series imputation, outperforming deterministic baselines. TimeGrad [58] applies diffusion models in an autoregressive manner to generate future time sequences for forecasting. This approach achieves good performance in extrapolating into the future while maintaining computational tractability. Additionally, diffusion models have been employed in time series generation. In [39], diffusion models are used as score-based generative models to synthesize time-series data, resulting in superior generation quality and diversity compared to baseline approaches.

Diffusion models have also been explored for image anomaly detection. In [75], denoising diffusion implicit models [65] are combined with classifier guidance to identify anomalous regions in medical images. This produces highly detailed anomaly maps without the need for a complex training procedure. Similarly, in [56], diffusion models are used to eliminate bias and mitigate accumulated prediction errors, thereby enhancing anomaly segmentation in CT data. The DiffusionAD [86] formulates anomaly detection as a “noise-to-norm” paradigm, requiring only one diffusion reverse process step to achieve satisfactory performance in image anomaly detection. This significantly improves the inference efficiency.

To the best of our knowledge, our proposed ImDiffusion represents the first utilization of diffusion models specifically tailored for the task of MTS anomaly detection.

3. PRELIMINARY

In this section, we present the problem of MTS anomaly detection and time series imputation, and an overview of diffusion models.

3.1. Multivariate TimeSeries Anomaly Detection

We consider a collection of MTS denoted as X, which encompasses measurements recorded from timestamp 1 to 𝐿. Specifically:

where x𝑙 ∈ R 𝐾 represents an 𝐾-dimensional vector at time 𝑙, i.e., x𝑙 = {𝑥 1 𝑙 , 𝑥2 𝑙 , · · · 𝑥 𝐾 𝑙 }. The objective of MTS anomaly detection is to determine whether an observation x𝑙 is anomalous or not. By employing 𝑦𝑙 ∈ {0, 1} to indicate the presence of an anomaly (with 0 denoting no anomaly and 1 denoting an anomaly), the goal transforms into predicting a sequence of anomaly labels for each timestamp, namely 𝑌 = {𝑦1, 𝑦2, · · · , 𝑦𝐿}.

3.2. Time Series Imputation

ImDiffusion leverages the prediction error resulting from the imputation [68] of intentionally masked values within a time series to infer the anomaly labels. The mask is denoted as M = {𝑚𝑙 ∈1:𝐿,𝑘 ∈1:𝐾 } ∈ {0, 1}, where 𝑚 = 1 indicates that 𝑥 𝑘 𝑙 is observed, while 0 signifies that it is missing. The mask M possesses the same dimensionality as the time series X, i.e., M ∈ R 𝑇 ×𝐾. The application of the mask M to the original time series X yields a new partially observed time series XM, which can be expressed as:

Here, the symbol ⊙ represents the Hadamard product. Let XM0 represent the masked value where𝑚𝑙,𝑘 = 0, and XM1 represent the observed values where𝑚𝑙,𝑘 = 1, the objective of the imputation process is to estimate the missing values in XM, i.e., 𝑝(XM0 | XM1 ). Several tasks, such as interpolation [30, 35, 62] and forecasting [79, 80, 90], can be considered as instances of time series imputation.

3.3. Denoising Diffusion Model

Our ImDiffusion is based on the diffusion models [64], a wellknown generative model that draws inspiration from non-equilibrium thermodynamics. Diffusion models follow a two-step process for data generation. Firstly, it introduces noise to the input incrementally, akin to a forward process. Secondly, it learns to generate new samples by progressively removing the noise from a sample noise vector, thereby resembling a reverse process. During the forward process, Gaussian noise is incrementally added to the initial input sample X0 over 𝑇 steps. Mathematically, this can be represented as:

4. THE DESIGN OF IMDIFFUSION

ImDiffusion relies on time series imputation and utilizes the imputed error as a signal for anomaly detection. The imputation process is carried out in a self-supervised learning manner, where we intentionally introduce masks to the MTS, creating missing values that need to be imputed. We then train a diffusion model using ImTransformer designed specifically for imputation and subsequent anomaly detection tasks. During the inference phase, we leverage the intermediate output of the ImTransformer at different denoising steps 𝑡 as additional information to collectively determine the anomaly label. This ensemble approach enhances the accuracy and robustness of ImDiffusion, further improving its performance.

4.1. Imputed Diffusion Models

Time series anomaly detection often relies on the construction of prediction models that accurately capture the distribution of normal data. These models are expected to exhibit higher prediction errors when anomalies occur, thereby serving as indicators and providing a decision boundary for detecting anomalies. Two commonly used types of prediction models for anomaly detection are (i) reconstruction models, which encode the entire time series into a representation that can be reconstructed using a decoder; (ii) forecasting models, which aim to predict future values of the time series based on historical observations [27]. However, both types of prediction models have their limitations in terms of their capacity for time series modeling. When applying the diffusion model, the reconstruction method involves corrupting the entire MTS into a complete noise vector for reconstruction. However, this introduces a significant level of uncertainty, particularly when there is a lack of conditional information. Similarly, forecasting models face challenges in accurately predicting future values, especially in the presence of anomalies, further contributing to the uncertainty.

To overcome the limitations of traditional reconstruction [48, 66, 85] and forecasting models [32, 51, 88], we propose the use of time series imputation as the underlying prediction model for anomaly detection in ImDiffusion. We further enhance the imputation capacity of the model by incorporating state-of-the-art diffusion models. This approach offers several advantages. Firstly, it enables enhanced estimation of the data distribution by leveraging the availability of unmasked data values. This leads to improved understanding of the underlying data distribution. Secondly, the imputation-based prediction process stabilizes the inference of the diffusion model, resulting in reduced variance in its predictions. This increased stability enhances the reliability of the model’s predictions. Lastly, incorporating imputation-based prediction improves the overall accuracy and robustness of subsequent anomaly detection. By combining diffusion models for time series imputation, ImDiffusion achieves accurate modeling of time series data, resulting in superior performance in anomaly detection tasks.

We begin by introducing the use of score-based diffusion models for MTS data imputation [68]. There are two main categories of diffusion models employed for time series imputation, distinguished by the type of input information they utilized as follows:

- Conditioned Diffusion Models: These models estimate the masked values conditioned on the observed data, specifically 𝑝(XM0 | XM1 ). In this case, the observed values XM1 are not corrupted by noise and are directly provided as input in the reverse process.

- Unconditional Diffusion Models: For unconditional imputed diffusion models introduced in [68], both masked and unmasked values are corrupted by noise in the forward process. Instead of directly providing the observed data, it retains the ground-truth noise added to the unmasked values as reference inputs. This leads to the estimation of 𝑝(XM0 | 𝜖 M1 1:𝑇 ), where 𝜖 M1 1:𝑇 represents the noise sequence added to the unmasked values XM1 during the forward process.

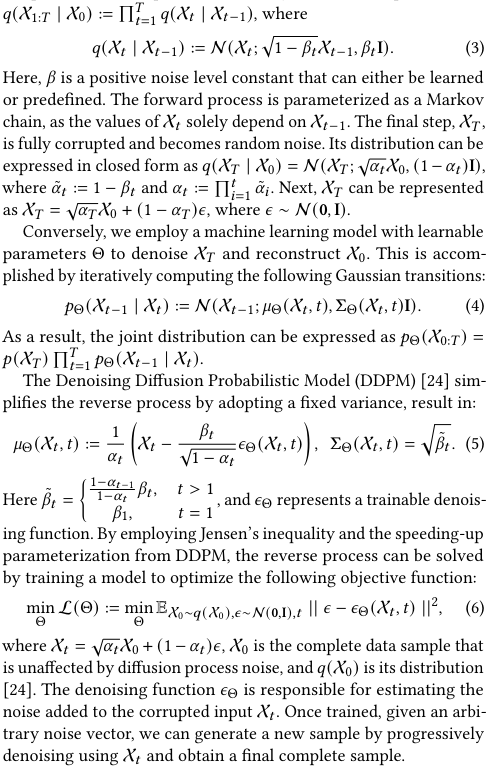

Conditional diffusion models generally outperform unconditional diffusion models in the task of imputation, resulting in lower overall prediction errors [68]. This is because conditional models benefit from the direct inclusion of ground-truth unmasked data as input, which serves as reliable references for neighboring values. However, it is important to recognize the distinction between the objectives of imputation and anomaly detection. While imputation aims to minimize the error between predictions and ground truth for all data points, anomaly detection requires a clear boundary between normal and abnormal points, achieved by minimizing imputation errors only for normal data and maximizing errors for anomaly points. During the inference phase, when anomaly points happen to be unmasked and used as inputs for the prediction model, the prediction error for neighboring anomaly points is also reduced. Consequently, the prediction error becomes indistinguishable between normal and abnormal points, compromising the effectiveness of subsequent anomaly detection. The existence of unmasked anomaly points during inference blurs the clear boundary in the prediction error that is vital for accurate anomaly detection.

To address this issue, we employ unconditional imputed diffusion models, which utilize the forward noise 𝜖 M1 1:𝑇 as a reference for unmasked data input, rather than directly feeding the data values. By using the forward noise, we avoid explicitly revealing the exact values, even when anomaly points are unmasked. However, the forward noise still provides indirect information about the unmasked data, serving as a weak hint for the model. The unmasked data can be perfectly recovered in the reverse process by subtracting the noise from the observed values step-by-step. The lower subplot in Fig. 2 demonstrates the application of an unconditional diffusion model. A notable distinction from the conditional model (upper subplot) is the substantial difference in imputed error between normal and abnormal data points. This significant gap in imputed error values provides a distinct boundary for the thresholding approach, which improves the anomaly detection performance.

We denote the noise added to the unmasked input from step 𝑡 − 1 to 𝑡 as 𝜖 M1 𝑡 . Note that 𝜖 M1 𝑡 is drawn from the same Gaussian distribution in Eq. (3), and serves as the reference for unmasked data in the reverse inference process. Similar to Eq. (4), the unconditional imputed diffusion models estimate the masked values in a reverse denoising fashion but condition on the 𝜖 M1 𝑡 as additional input. Traditional diffusion models lack the capability to incorporate conditional information 𝜖 M1 𝑡 during the denoising process. Consequently, an enhancement is required in order to extend the estimation in Eq. (5) to accommodate conditional information. This can be achieved by modifying the estimation as follows:

By utilizing the denoising function 𝜖Θ and the forward noise for unmasked value 𝜖 M1 𝑡 , we can leverage the reverse denoising process of imputed diffusion models to infer the masked values XM0 . This is accomplished by sampling from the distribution of X M0 𝑡 . In contrast to anomaly detection methods based on reconstruction and forecasting, the integration of additional information offers valuable signals that assist the diffusion model in generating more reliable predictions. This leads to a reduction in output randomness and variance, while maintaining the confidentiality of abnormal data values. Consequently, it enhances the performance and robustness of subsequent anomaly detection.

4.2. Design of Data Masking

The ImDiffusion approach leverages deliberate masking, using a mask M applied to the time series data, to create unobserved points that require imputation. The choice of the masking strategy plays a crucial role in determining the performance of anomaly detection. In this paper, we compare two masking strategies:

- Random strategy: This strategy randomly masks data values in the raw time series with a 50% probability [68]. It provides a straightforward and simple masking technique.

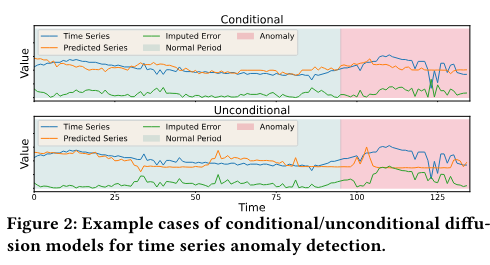

- Grating strategy: : The grating strategy masks the data at equal intervals along the time dimension, as illustrated in Fig. 3. The raw time series is divided into several windows, with masked and unmasked windows appearing in a staggered manner.

For the grating strategy depicted in Fig. 3, two different mask policies indexed by 𝑝 ∈ {0, 1} are applied to the same time series, resulting in two imputation instances. These two masks are mutually complementary, ensuring that the masked values in mask 𝑝 = 0 are unmasked in mask 𝑝 = 1, and vice versa. This guarantees that all data points are imputed by the ImDiffusion approach, enabling the generation of prediction error signals for anomaly detection. After performing imputation on each masked series individually, the imputation results are merged through simple concatenation. During training and inference, the masking index 𝑝 is provided to the model, indicating the masking policy applied to reduce ambiguity. This leads to an additional conditional term 𝑝 on Eq. (4) and (7), while the estimation of ΣΘ in Eq. (8) remains unchanged, i.e.,

The grating strategy introduces a unique characteristic to the imputation, as it can be considered a “partially” reconstruction task. This approach offers several advantages: Firstly, it provides additional information that aids in modeling the time series more effectively. By incorporating the partially reconstructed data, the model gains a better understanding of the underlying patterns and correlations. Secondly, the utilization of the grating strategy allows for a partial glimpse into the future values of the time series within the masked window, akin to forecasting techniques. This enables to improve timeliness in detecting anomalies, as it provides insights into the potential future trajectory of the time series data.

4.3. Training Process of IMDIFFUSION

4.4. Imputation with IMTRANSFORMER

Drawing inspiration from the studies conducted in [22, 34, 68], which employ hierarchical structures of transformers [71] to capture temporal correlations and interactions among variables, we introduce ImTransformer, a specialized architecture designed for MTS imputation, as illustrated in Fig. 5. It comprises a series of stacked residual blocks, with each containing dedicated components that process the feature and temporal dimensions separately.

The ImTransformer model incorporates four distinct groups of input data: (i) the input time series X𝑖𝑛 𝑡 , (ii) diffusion embedding that encodes information related to the current diffusion step 𝑡, (iii) masking embedding that encodes the masking group 𝑝 of the current data, and (iv) complementary information that embeds the dimensional information of time 𝑙 and feature 𝑘. Each of these groups of data is individually processed by convolutional and/or multilayer perceptron layers to ensure a consistent dimensionality. The embeddings of the inputs are then combined into a single tensor and further processed by a temporal and a spatial transformer layer.

The temporal transformer plays a crucial role in capturing the temporal dependencies within the time series [90]. It enables the dynamic weighting of feature values at different time steps and takes into account the masked status of features. The attention mechanism employed in the temporal transformer provides the necessary flexibility for this purpose. Additionally, a 1-layer spatial transformer is employed to capture the interdependencies between different variables at each time step. This spatial transformer allows for adaptive weighting and facilitates interaction between variables. The output of the spatial transformer is combined with the complementary information, creating a residual head for skip connection, as illustrated in Fig. 5. Both the spatial and temporal transformers play crucial roles in the imputation and anomaly detection tasks, as the feature and temporal dimensions may contribute differently to the predictions [70], which can be learned by the attention mechanism [71]. The incorporation of a residual structure [23] further enhances the model capacity by facilitating gradient propagation.

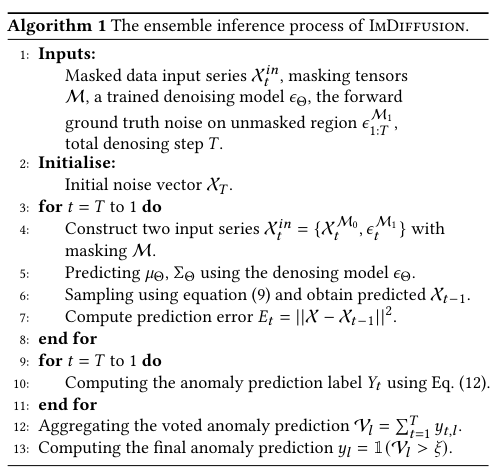

4.5. Ensemble Anomaly Inference

Traditional anomaly detection models typically rely on a single signal, i.e., the prediction error, to determine the anomaly label for testing data. However, relying solely on one signal can lead to unrobust predictions, as the prediction error can be subjective to stochasticity and affected by various random factors. The presence of anomalous data within the training set further raises concerns about the robustness requirement. To address this limitation, we leverage the unique advantage of diffusion models. Unlike traditional models that provide a single-shot prediction, imputed diffusion models progressively denoise the masked data over 𝑇 steps. This results in at least𝑇 intermediate outputs, each having the same dimension as the original time series, which is not available in traditional models. Although these intermediate outputs are not fully denoised, they converge towards the same imputation objective and offer different perspectives on the time series modeling. By appropriately utilizing these outputs, we can uncover the step-by-step reasoning of ImDiffusion and utilize them as additional signals to enhance the robustness and accuracy of anomaly detection.

ImDiffusion utilizes the prediction error at each denoising step 𝑡, denoted as E = {𝐸1, 𝐸2, · · · , 𝐸𝑇 }, as input and ensembles them using a function 𝑓 (E) to determine the final anomaly labels. 𝐸𝑡 denotes the prediction error tensor for the imputed output at denoising step 𝑡, and it has the same dimension as the original time series X. The ensemble anomaly inference algorithm is presented in Algorithm 1, and Fig. 6 provides an illustration of the process. At each denoising step, ImDiffusion generates a prediction using the denoising model 𝜖Θ and computes the prediction error 𝐸𝑡 with respect to the ground truth time series X. The set E collects the prediction errors at each denoising step, and an ensemble function 𝑓 (E) is employed to leverage the all-step errors to obtain the final voting signal V for determining the anomaly label, i.e., V = 𝑓 (E).

The design of the ensemble function. ImDiffusion utilizes a voting ensemble mechanism [16] to strengthen the overall anomaly detection process by aggregating anomaly predictions from each denoising step. At each denoising step 𝑡, the anomaly prediction label 𝑌𝑡 is determined using the following equation:

Here, 𝜏𝑇 represents the upper percentile of imputed errors at the final denoising step 𝑇 . The rationale behind this design is to utilize the imputed error at the last step as a baseline and use it as an indicator of imputation quality. The rescaling ratio Í Í 𝐸𝑇 𝐸𝑡 measures the imputation quality at each step 𝑡. If the ratio is small, it indicates poor imputation quality, and therefore the upper percentile of imputed errors for determining the anomaly label is reduced. In this case, only the label for the timestamp with the highest imputed error and high confidence is retained. Conversely, if the ratio is small, it suggests good imputation quality, and the error threshold for anomaly detection is relaxed. This dynamic adjustment of the threshold allows for adaptability based on the quality of imputation.

Using Eq. (12), we derive the step-wise anomaly predictions 𝑌𝑡 = {𝑦𝑡,1, · · · , 𝑦𝑡,𝐿}, where 𝑦𝑡,𝑙 = 1 indicates that the data at time step 𝑙 is predicted as an anomaly using the imputation at diffusion step 𝑡, and 𝑦𝑡,𝑙 = 0 otherwise. To determine the final anomaly prediction at each time step 𝑙, we employ a voting mechanism. The voting signal V𝑙 represents the total number of anomaly votes received at time step 𝑙, given by V𝑙 = Í𝑇 𝑡=1 𝑦𝑡,𝑙 . If a time step receives more than 𝜉 votes as an anomaly across all denoising steps, it is marked as a final anomaly, denoted as 𝑦𝑙 = 1(V𝑙 > 𝜉). To optimize inference efficiency and ensure correctness, we sample every 3 steps from the last 30 denoising steps for the voting process. This voting mechanism strengthens the ImDiffusion framework by utilizing the intermediate imputed outputs as additional signals. This is unique to diffusion models, as they generate predictions progressively.

5. OFFLINE EVALUATION

We conducted a comprehensive offline evaluation of the ImDiffusion for MTS anomaly detection. The evaluation aimed to address the following research questions (RQs):

- RQ1: How does IMDIFFUSION perform compare to state-of-the-art methods in MTS anomaly detection?

- RQ2: How effective are each specific design in IMDIFFUSION?

- RQ3: What insights can be gained from each mechanism employed in IMDIFFUSION?

Implementation. The ImDiffusion framework is implemented using the PyTorch framework [55] and trained on a GPU cluster comprising multiple NVIDIA RTX 1080ti, 2080ti, and 3090 accelerators. Table 1 presents the key hyperparameters used in ImDiffusion. The detection thresholds 𝜏 for the MSL dataset vary across different subsets, while a fixed value of 0.02 is employed for the other datasets. The voting threshold 𝜉 is dataset-dependent and is specified in the provided code link. As for the baseline models, their hyperparameters and detection thresholds are set based on the information provided in their respective original papers. In cases where these details were not explicitly mentioned, a grid search was conducted to determine the optimal values.

5.1. Datasets, Baseline & Evaluation Metrics

We test the performance of ImDiffusion using 6 publicly available MTS anomaly detection datasets, namely SMD [66], PSM [1], MSL [27], SMAP [27], SWaT [50] and GCP [46]. In order to ensure the completeness of the experiment, we trained and evaluated the ImDiffusion on all subsets of the aforementioned dataset, rather than selectively choosing non-trivial sequences as done in [70]. This may lead to differences in the evaluation metrics compared to the results reported in the original paper.

We evaluate the performance of ImDiffusion by comparing it with 10 state-of-the-art MTS anomaly detection models: (i) Isolation forest (IForest) [42] separates the anomaly data point with others for detection. (ii) BeatGAN [89] utilizes generative adversarial networks (GANs) [21, 82] to reconstruct time series and detect anomalies. (iii) LSTM-AD [49] employs LSTM [26, 79] to forecast future values and uses the prediction error as an indicator of anomalies. (iv) InterFusion [38] captures the interaction between temporal information and features to effectively identify inter-metric anomalies. (v) OmniAnomaly [66] combines GRU [14] and VAE [54] to learn robust representations of time series and utilizes the Peaks-Over-Threshold (POT) [63] method for threshold selection. (vi) GDN [15] introduces graph neural networks into anomaly detection and leverages meta-learning methods to combine old and new knowledge for anomaly identification. (vii) MAD-GAN [36] employs GANs [21, 82] to recognize anomalies by reconstructing testing samples from the latent space. (viii) MTAD-GAT [88] utilizes Graph Attention Network (GAT) [72] to model MTS and incorporates forecasting-based and reconstruction-based models to improve representation learning [81]. (ix) MSCRED [85] uses ConvLSTM networks [61, 80, 83] to capture correlations among MTS and operates as an anomaly detector. (x) TranAD [70] leverages transformer models to perform anomaly inference by considering the broader temporal trends in the data. We benchmark ImDiffusion against these models to evaluate its performance in MTS anomaly detection.

In line with previous studies [38, 70, 88], we evaluate the anomaly detection accuracy of both the baseline models and our proposed ImDiffusion using precision, recall, and F1 score. Note that we conducted 6 independent runs for each baseline model and ImDiffusion, and report the average performance. Additionally, we provide the standard deviation of the F1 score (F1-std) in the 6 runs to assess the stability and robustness of all methods examined in this investigation. We also utilize the R-AUC-ROC evaluation metric introduced in [52] to provide a threshold-independent accuracy assessment tailored to range-based anomalies. This metric mitigates the bias introduced by threshold selections and offers a different perspective on the performance of anomaly detection methods by using continuous buffer regions. Further, we utilize the Average Sequence Detection Delay (ADD) metric proposed in [17] to evaluate the speed and timeliness of anomaly detection provided by each approach. The ADD metric is defined as follows:

where 𝜚𝑖 represents the start time of anomalous event 𝑖, T𝑖 ≥ 𝜚𝑖 denotes the corresponding detection delay time by the anomaly detector, and 𝑆 indicates the total number of anomalous events. A smaller value of ADD indicates a more timely detection of anomalies, which is crucial in real-world detection scenarios.

5.2. Anomaly Detection Performance (RQ1)

5.2.1 Accuracy Performance. We first present the precision, recall, F1 and R-AUC-PR performance of ImDiffusion and the baseline methods in Table 2 for each of the six datasets considered in this study. Please note that all the results presented in the table are the average values obtained from 6 individual runs, which allows us to assess the robustness of each detector. Additionally, the F1-std. (standard deviation) provides an indication of the variability of the F1 scores across these runs. represents the standard deviation across the 6 runs. Additionally, Table 3 displays the average performance across all six datasets. Notably, ImDiffusion overall demonstrates exceptional performance in terms of all evaluation metrics, namely precision (92.98%), recall (93.01%), and F1 score (92.84%) and RAUC-PR (29.86%). It achieves the highest average scores across six datasets, surpassing the performance of the other baseline methods. In particular, ImDiffusion exhibits at least a 2.4% increase in precision, a 4.67% increase in recall, a 3.97% increase in F1 score and a 4.85% increase in and R-AUC-PR compared to the other baselines. These results demonstrate the effectiveness of the imputation approach and diffusion models employed in ImDiffusion.

Furthermore, despite that diffusion models require sampling at every denoising step, introducing randomness, the F1-std (0.0083) calculated from 6 independent runs remains relatively small compared to other baselines, ranking second lowest among all approaches. This indicates the remarkable robustness of ImDiffusion. It can be attributed to two key design elements in ImDiffusion: (i) the imputation methods leverage neighboring information for self-supervised modeling, reducing prediction uncertainty, and (ii) the dedicated ensemble mechanism aggregates votes for step-wise anomaly inference, further reducing prediction variance. We provide a more detailed ablation study in Sec. 5.3.1 and 5.3.2.

Upon closer examination of the dataset-specific performance in Table 2, we observe that ImDiffusion achieves the highest F1 score in 5 out of the 6 datasets. The exception is the MSL dataset, where TranAD outperforms ImDiffusion. This can be attributed to the fact that it is specifically designed to capture the internal correlations across different dimensions, which are the prominent characteristics of the MSL dataset. A plausible solution to reinforce ImDiffusion is to explicitly model these dependencies through hierarchical inter-metric embedding, as employed in InterFusion [38]. However, ImDiffusion also takes a different approach by leveraging the exceptional self-supervised learning ability of diffusion models and the spatial transformer in ImTransformer to capture correlations and provide a more general solution across various datasets. This enables ImDiffusion to achieve competitive performance in most datasets and surpass other baselines. Furthermore, we observe that ImDiffusion also achieves the highest R-AUC-PR in 4 out of the 6 datasets. This highlights the robustness of ImDiffusion to threshold selection and its consistent ability to deliver accurate predictions in detecting range anomalies.

However, in the SWaT and SMAP datasets, we observe a notable reduction in precision for ImDiffusion compared to several baselines. This can be attributed to a slight overfitting exhibited by ImDiffusion on these specific datasets, which leads to increased errors in normal data. Consequently, applying a fixed error threshold results in the identification of more false anomalies, thereby compromising precision. A potential solution could involve the implementation of dynamic thresholding approaches [27] to achieve a better balance between precision and recall. Moreover, mitigating overfitting can be achieved by reducing the complexity of the ImTransformer. These considerations are reserved for future work.

Notably, the performance improvements achieved by ImDiffusion are particularly remarkable in the SMD and PSM datasets, where it outperforms other baselines by at least 6.8% and 5.9% in terms of F1 score and 6.21% and 2.19% in terms of R-AUC-PR, respectively. These two datasets exhibit small distribution deviations between anomalous and normal data [70], and ImDiffusion’s unconditional imputation design effectively amplifies the gap in imputed error between normal and abnormal data, contributing to its superior performance. Furthermore, ImDiffusion consistently outperforms other baselines with low F1-std. in the SWaT, SMAP, and GCP datasets, which demonstrates its remarkable robustness. Interestingly, we observe that all approaches demonstrate comparatively lower performance in the SwaT dataset. This can be attributed to the intricate and diverse MTS patterns present in the SWaT dataset, underscored by the dataset’s expansive training set size and high dimensionality (51). This leads to challenges in achieving accurate modeling, consequently resulting in inferior anomaly detection performance across all approaches.

5.2.2 Timeliness Performance. In Table 4, we present the ADD (mean±std.) performance comparison on all datasets over 6 runs, along with their average values. Observe that ImDiffusion demonstrates remarkable performance in this aspect as well. Overall, ImDiffusion achieves the lowest average ADD values (104) with low variance, surpassing other baselines by at least 39.9%. Upon closer examination of dataset-specific performance, it is observed that ImDiffusion consistently outperforms other baselines in 4 out of 6 datasets. This indicates that ImDiffusion is highly sensitive to abnormal points and can capture them at the earliest detection timing. This superior performance can be attributed to the grating masking design employed by ImDiffusion, which enables to partially envision the future values of the time series in the masked regions. A more detailed ablation analysis on the masking strategy is presented in Sec. 5.3.4. The ADD metric holds significant importance in industrial practice, as early detection of anomalies allows for prompt mitigation of failures [19], potentially preventing more severe consequences. The advantage of ImDiffusion in achieving faster anomaly detection makes it a suitable choice for real-world deployment in systems with high reliability requirements.

5.3. Ablation Analysis (RQ2, RQ3)

Next, we conduct a comprehensive ablation analysis to evaluate the effectiveness of each design choice in ImDiffusion, shedding light on how these design choices contribute to enhancing the anomaly detection performance. The aggregated results specific to each dataset are presented in Table 5, while Table 6 showcases the average results across all datasets. The reported results are the average of 6 independent runs. Note that in the tables, “ImDiffusion” represents the combination of the following designs: Imputation, Ensembling, Unconditional, Grating Masking and full ImTransformer.

5.3.1 Imputation vs. Forecasting vs. Reconstruction. First, we compare the anomaly detection performance of different MTS modeling approaches, namely imputation, forecasting, and reconstruction. In the case of forecasting and reconstruction, we adopt the same configuration as ImDiffusion, with the only distinction being the forecasting method that predicts future values given historical observations, while the reconstruction method corrupts all values with noise vectors and reconstructs them. Overall, the ImDiffusion framework, which utilizes the imputation method, achieves the highest performance in terms of accuracy and timeliness on average, outperforming other MTS modeling methods by at least 3.18% on F1 score, 1.78% on R-AUC-PR and 26.2% ADD. In addition, we observe that forecasting outperforms reconstruction, indicating that incorporating historical information leads to improved performance of the self-supervised model. Moreover, as shown in Table 5, ImDiffusion achieves the highest F1 score and ADD in 5 out of 6 datasets, and the highest R-AUC-PR on 4 out of 6 datasets, highlighting the accuracy and robustness of the imputation approach.

The performance improvement can be attributed to the superior self-supervised modeling quality achieved through the imputation approach. Fig. 7 illustrates the predicted error of each modeling approach, along with their average values. A lower prediction error signifies a more accurate modeling of MTS, which in turn enhances the performance of anomaly detection. Notably, the imputation approach consistently exhibits the lowest predicted error across all datasets, significantly outperforming the forecasting and reconstruction approaches. These results indicate the imputation approach’s superior self-supervised modeling capability. They further validate that enhancing the self-supervised modeling ability contributes to the anomaly detection performance for MTS data.

5.3.2 Ensembling vs. Non-ensembling. Next, we investigate the impact of the ensembling voting mechanism on the anomaly detection performance. The non-ensembling approach solely relies on the final denoised results and applies thresholding on the imputed error for anomaly detection. In comparison, ImDiffusion on average achieves a 0.73% higher F1 score, 6.06% higher R-AUC-PR and a lower 35.8% ADD compared to the non-ensembling approaches. This suggests that the utilization of the ensembling approach enhances both the accuracy and timeliness performance of anomaly detection, particularly for ranged anomalies. Furthermore, ImDiffusion consistently outperforms its counterpart across all datasets. Upon closer examination of Table 6, we observe that ImDiffusion exhibits a greater advantage in terms of precision over recall. This indicates that the anomalies detected through ensembling are more likely to be true anomalies, thereby reducing the false positive rate.

Fig. 8 illustrates an example on the SMD dataset, demonstrating how the ensembling mechanism improves the anomaly detection performance. The first 10 subplots depict the time series, imputation prediction, predicted error, and anomaly prediction for each of the 10 denoising steps used in the ensembling voting. The final subplot showcases the aggregated voting results for anomalies. Several key insights can be derived from the figure. Firstly, the imputation results progressively improve with each denoising step, aligning with our expectations as diffusion models perform step-by-step imputation. Secondly, relying solely on the final step can lead to false positive predictions (blue shaded area). However, the ensembling voting mechanism plays a crucial role in correcting these false positives. From step 23 to 32, the false positive data receives fewer votes compared to the true positive region (red shaded area), causing them to fall below the final voting threshold (8 votes) and be eliminated from the ensemble’s predicted anomalies. This case study provides a clear illustration of how ensembling can enhance accuracy and robustness, showcasing the unique capabilities offered by diffusion models.

5.3.3 Unconditional vs. Conditional Diffusion Models. We now shift our focus to evaluating the effectiveness of the design of unconditional diffusion models described in Sec. 4.1. In Table 6, we observe that ImDiffusion, which utilizes unconditional diffusion models, achieves superior accuracy and timeliness performance compared to its conditional counterpart, with a 2.1% higher F1 score and a 21.1% lower ADD. This gain is particularly pronounced in the SMAP dataset, which comprises shorter time sequences. The R-AUC-PR values obtained from both approaches are comparable, with the conditional method exhibiting a slight advantage.

6. PRODUCTION IMPACT AND EFFICIENCY

The proposed ImDiffusion has been integrated as a critical component within a large-scale email delivery microservice system at Microsoft. This system consists of more than 600 microservices distributed across 100 datacenters worldwide, generating billions of trace data points on a daily basis [73]. ImDiffusion serves as a latency monitor for email delivery, for detecting any delay regression in each microservice, which may indicate the occurrence of an incident. The online latency data for each microservice are sampled at a frequency of every 30 seconds. In order to assess the performance of ImDiffusion, we deployed it online and operated over a period of 4 months. We compared the results obtained by ImDiffusion with a legacy deep learning-based MTS anomaly detector, which has been in operation for years.

Table 7 presents the online improvements achieved by ImDiffusion compared to the legacy detector over a period of 4 months1 . The evaluation of efficiency was conducted on containers equipped with Intel(R) Xeon(R) CPU E5-2640 v4 processors featuring 10 cores. Observe that the replacement of the legacy detector with ImDiffusion has resulted in significant enhancements in anomaly detection accuracy and timeliness, as evidenced by the substantial improvements across all evaluation metrics. Specifically, compared to the previous online solution, ImDiffusion exhibits a performance improvement of 11.4% in terms of F1 score, 14.4% improvement in terms of R-AUC-PR, and 30.2% reduction on ADD. Despite the requirement of multiple inferences to obtain the final results, the online efficiency of ImDiffusion remains well within an acceptable range. Considering that the latency data are sampled every 30 seconds, performing inference at a rate of 5.8 data points per second is more than sufficient to meet the online requirements.

The reliability assessment of a cloud system encompasses two aspects: (i) detection accuracy and (ii) detection timeliness of anomalies or incidents [19]. The notable performance improvements achieved by ImDiffusion have made a significant impact on the Microsoft email delivery system from the above perspectives, as they have led to considerable time savings in incident detection (TTD), reduced the number of false alarms triggered by the legacy approach, and ultimately enhanced the system’s reliability.

7. CONCLUSION

This paper presents ImDiffusion, a novel framework that combines time series imputation and diffusion models to achieve accurate and robust anomaly detection in MTS data. By integrating the imputation method with a grating masking strategy, the proposed approach facilitates more precise self-supervised modeling of the intricate temporal and interweaving correlations that are characteristic of MTS data, which in turn enhances the performance of anomaly detection. Moreover, ImDiffusion employs dedicated diffusion models for imputation, effectively capturing the stochastic nature of time series data. The framework also leverages multistep denoising outputs unique to diffusion models to construct an ensemble voting mechanism, further enhancing the accuracy and robustness of anomaly detection. Notably, ImDiffusion is the first to employ time series imputation for anomaly detection and to utilize diffusion models in this context. Extensive experiments on public datasets demonstrate that ImDiffusion outperforms state-ofthe-art baselines in terms of accuracy and timeliness. Importantly, ImDiffusion has been deployed in real production environments within Microsoft’s email delivery system, serving as a core latency anomaly detector and significantly improving system reliability.

'Generative Model > Generative Model' 카테고리의 다른 글