-

[Lecture 8] (1/2) Planning and ModelsResearch/RL_DeepMind 2024. 8. 3. 17:11

https://www.youtube.com/watch?v=FKl8kM4finE&list=PLqYmG7hTraZDVH599EItlEWsUOsJbAodm&index=8

If we look back at dynamic programming and model-free algorithms, we can roughly sketch the underlying principles and differences between the two in the following way.

So in dynamic programming, we assume we're given a previleged access to a complete exact specification of the environment dynamics that inclues both the state transition and the reward dynamics. We then just need to solve for this perfect model and we don't need to interact with the real environment at all.

In model-free algorithms instead, we do not rely on any given form of model. Instead we learn values and policies directly from interaction with the environment.

In between these two extremes, there is a third the prototypical family of algorithms which is typically referred to as model-based reinforcement learning.

Here we are not given a perfect model but we do attempt to learn at least an approximate model but while interacting with the environment. And then we use this learned model to derive values and policies.

A visual depiction of the Model-free algorithm would be seen as implementing this loop where we act according to our current value estimates and our policies to generate experience but then we use this experience to directly update our values and our policies.

Instead, the Model-based algorithm would add a step of indirection in this loop whereby we act according to our current value estimates and policies just like in model-free, but we use the resulting experience to learn a model of the state transition and the reward dynamics. Then we can use the learned model instead of the experience directly to update the values and policies thereby closing still the loop.

Boundaries between these two families of algorithms don't need to be extremly rigid and we can imagine to combine the two ideas. We use experience to both update values and to learn the model. We can still then use the model to perform additional updates and to refine our value estimates.

We'll discuss the popular instantiation of this idea which is the Dyna algorithm.

If we first learn a model then we use the model to plan a value function, there are two sources of errors because whatever model we learn from data is likely not to be perfect. It will incorporate certain approximation or certain errors and these will compound with additional errors that arise when planning with the model to estimate values.

In contrast if you consider model-free algorithms, they use real data that is always correct to update the values. And therefore there is only one source of approximation which is the value estimation process itself.

Despite this though, there are reasons to believe that model-based reinforcement learning could be quite effective. The first of these reasons is that, models can be learned with supervised learning methods which are very well understood and very powerful and effective.

Additionally, explicitly representing models can give use access to additional capabilities we could use them to represent uncertainty or to drive exploration.

And finally but not less importantly, in many real world settings, generating data by interacting with the environment is either expensive or slow or both of these. Instead computation is getting cheaper and cheaper by the year, therefore using a model to perform additional updates could allow us to trade-off compute for a reduction in the number of interactions that we need with the environment and this will make our algorithms more data efficient and therefore extend the range of problems we can sucessfully apply them to.

A model will be an approximate representation of an MDP. We will assume that the dynamics of the environment of this MDP will be approximated by a parametric function with some parameters. And that states and actions in the model are the same as in the real MDP.

And also we will assume that given states and actions, sampling from the model will estimate the immediate sucessor states and the immediate rewards that we would get by executing those same actions in those same states in the real environment.

And it's good to realize that this is not the most general characterization of a model. There are other types of models we could consider. They are quite related to this but different. We will discuss a few of these, for instance, non-parametric models where we use the data directly to sample transitions in the environment and also backward models that models the dynamics of an inverse MDP rather than the MDP. And also Jumping models where the actions do not directly map on the set of primitive actions in MDP.

With this premises, the problem of learning model from experience can be formulated as a supervised learning problem where our dataset contains a set of state, action pairs. And the labels of these are the true states that we add up the rewards that we will observe when executing those actions in those states.

And the parameters of the model will then have to be chosen as in standard supervised learning. So as to minimize some prediction error on this data.

Defining a procedure for learning a model will then amount to picking a suitable parametric function for the transition and rewards functions, picking a suitable loss to minimize and choosing a procedure for optimizing the parameters it does so as to minimize this loss.For instance, we could choose to parameterize transitions and rewards using some linear mapping from a suitable encoding of states and actions onto the rewards and the some encoding of the successor state. And then minimizing the parameter errors could be achieved by using least square. This would give us an expectation model and specifically will give us a linear expectation model.

Note that this is not the only kind of expectation all we could consider. So for instance using the same kind of mean squared error, we could parameterize the model itself both the reward and transitions using a deep neural network. And maybe use gradient descent instead of leat squares to optimize the parameters.

Regardless of the specific choice of the parameterization though, it's good to understand what are the pros of, cons of expectation models in general.

So some disadvantages are quite obvious. Suppose you have some macro action that randomly puts you either to the left or to the right of some wall. An expectation model will put you inside of the wall which might not be a physically meaningful thing to predict in the first place.

Interestingly though, the values could still be correct so if the values specifically are a linear function of the state or the encoding of the state, then actually the linear transformation will commute with expectation and therefore the expected value of the next state will be the same as the value of the expected next state. Even though the expected state might have some peculiar features like placing you in the middle of the wall.

And this actually applies regardless of the parametrization of the model itself. So it only requires the values to be a linear function of their state representation.

Additionally if both the value and the model are linear, then this even applies to enrolling the model multiple steps into the future which is quite powerful and interesting property.

It might not be possible to make either the value or the model linear. And in this case, maybe expected state might not be the right choice. Because the values that we can expect from expected states might not have the right semantics. We might not be able to derive expected values from expected states. And in this case, we could consider a different type of model.

So we could consider what's called a stochastic or generative model. So, generative model generates not expectations like an expectation model, but samples.

It takes as input a state, an action and a noise variable and it will return you a sample of a possible next state and a possible reward. And if we can effectively train a generative model, then we can plan in our imagination. So we could generate long sequences of hypothetical transitions in the environment and compare them to choose how to best proceed.

And this could be done even if both the model and the values are not linear.

The flip side of course is that, generative models add noise via the sampling process.

There's also a third option which is to attempt to model the complete transition dynamics. So model the full transition distribution and full reward distribution. And if we could do so, both expectation and generative models, and even values themselves could be all derived as a sub-product of our full transition and reward model.

The problem is that it might be hard to model the complete distribution and or it might be computationally intractable to apply it in practice, due to the branching factor.

So for instance, if we were to try to estimate the value of the next state with a one step look ahead, this would still require two sum overall actions and for each action, sum over all possible successor states and things get exponentially worse if we even want to look multiple steps ahead and as a result, even if we had this full distribution model, some form of sampling would probably be needed for most problem of interest. And in that case, the advantage of the complete distributions compared to just a generative model might be smaller.

In addition to the type of model, we might want to train. There is the second additional important dimension which is how we parametrize the model itself. So a very common choice is to first of all, decompose the dynamics of the rewards and the transitions into two separate parametric functions. And then for each of these, we can choose among many different parameterizations.

We'll consider Table Lookup models or Linear Expectation models and finally also Deep Neural Network models.

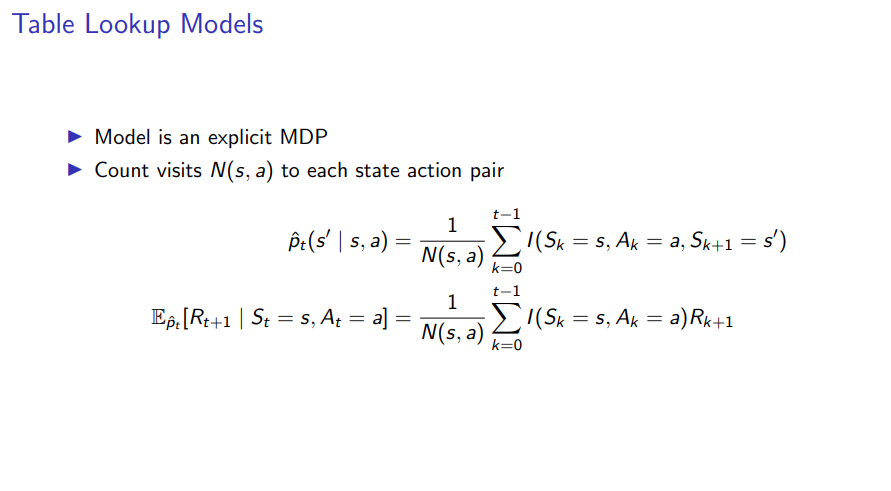

In the Table Lookup case, the model is an explicit representation of MDP wehre we may estimate the probability of observing a given successor state for a given state action pair as the empirical probability based on past experience. Similarly, we could parametrize the reward function as the empirical mean of the rewards that we did actually observe in practice when executing a given action in a given state.

In this case, we would actually get a mixed model, because our table account model would use a full distribution for the transition dynamics and an expectation model for the rewards.

It is of course possible also to model the complete distributions for the rewards themselves.

A table lookup model would model the complete distribution of the environment dynamics as in the picture.

If instead, we consider a linear expectation model, it's good to remember that first of all, we need some feature representation to be given to us. Because the linear model doesn't have the capability to build its own state representation.

But given this some feature representation, we could encode any state s as some feature set, for instance a vector. And then parametrise separately reward and transitions as two linear functions of the features of the state.

So for instance, expected next state could be parametrized by a square matrix T_a, one for each action a. In such a way then, the matrix times the features of the current state give us the expected next state.And similarly rewards could be parametrized by a vector w_a for each action a. So that the dot product between the vector and the current state representation gives us again the expected reward.

If we do so, then on each transition where we execute some action a some state s, then observe some reward r and next state s', there we can apply a gradient step to update both w_a and T_a. So as to minimize the mean squared error on both the reward and the expected state.

How to use models to plan and specifically how to do so for the purpose of improving our value function estimates and our policies.

In dynamic programming, we're given a privileged access to a perfect specification of the environment but in reinforcement learning in general, we are actually interested in planning algorithms that do not make this assumption and instead perform planning with learned models.

The simplest approach to get around this limitation of dynamic programming is maybe to just directly mirror dynamic programming algorithms but plugging in a learned model in place of the privileged specification of the environment.

If we do so then, we can just solve for the approximate MDP with whatever dynamic programming algorithm you like. So you could use value iteration or policy iteration.

And by doing so, we still require some interactions with the environment, because we will still need to learn the model. But at least hopefully we can reduce quite a bit of reliance on the unreal interactions and therefore be more data efficient.

So this approach can work and it's a reasonable things to consider.

There's another route we could take. We could combine learned models not with dynamic programming but instead with model-free algorithms. This is referred to as sample-based planning. It works by using the learned model to sample imagined transitions, imagined trajectories. And then applying your favorite model-free algorithms to the imagined data just as if it was real datas.

So for instance you could apply Monte-Carlo control, SARSA, Q-learning. And just apply the same updates on the imagined data that sampled from the approximate MDP that you have learned as if it was real data sampled from the environment.

What are the potential limitations with both of these ideas? The greatest concern is that the learned model might not be perfect. In general, we know it will incorporate some errors and some approximations. And in this case, the planning process may compute a policy that is optimal for the approximate MDP but that is sub-optimal with respect to the real environment.

And there are many ways we could go for this. One approach would be very very simply whenever the model is not good enough, it's not reliable enough, just use model-free algorithms.

Another idea would be, let's try to reason explicitly about our uncertainty over our estimates of the model. So this would lead us more towards the Bayesian approach.

And finally the third approach which is, to combine model-free and model-based methods. Integrating learning and planning in a single algorithm. How can we do this?

There are two sources of data. Two potential sources of experience. So one is real experience. This is data which is transitions, trajectories that you generate by actually interacting with the environment.

And another source is simulated experience where the data is sampled from a learned model. Therefore is sampled from some approximate MDP.

The core idea behind Dyna which is a powerful approach to integrate learning and planning is, let's just treat the two sources fo experience as if they were the same thing.

So while in model-free, we learn values from real experience, and in sample-based planning, we plan values from simulated experience. In Dyna, let's do both. So let's apply the same updates to values and policy regardless of whether data comes from real or simulated experience.

We could act according to our value estimates, policies to generate experience, and then this experience could be used both to update the values and policies directly but also used to learn a model that could then generate an additional simulated update, simulated experience.

'Research > RL_DeepMind' 카테고리의 다른 글

[Lecture 9] (1/2) Policy Gradients and Actor Critics (0) 2024.08.04 [Lecture 8] (2/2) Planning and Models (0) 2024.08.04 [Lecture 7] (2/2) Function Approximation (0) 2024.08.03 [Lecture 7] (1/2) Function Approximation (0) 2024.08.03 [Lecture 6] (2/2) Model-Free Control (0) 2024.08.02