-

[Lecture 6] (2/2) Model-Free ControlResearch/RL_DeepMind 2024. 8. 2. 23:05

https://www.youtube.com/watch?v=t9uf9cuogBo&list=PLqYmG7hTraZDVH599EItlEWsUOsJbAodm&index=6&t=1068s

So that's one way to go. Monte Carlo learning and Temporal Difference learning. And in both cases what we're doing is, we're interleaving policy evaluation and policy improvement step.

Now we're going to turn to a new topic which is Off-policy learning which is about learning about a policy difference from the one that we're following. There's a specific very popular algorithm that is an Off-policy algorithm called Q-learning.

There's different ways to find the value of the optimal policy. We've discussed how we could use policy evaluation in policy iteration if we interleave the policy evaluation with a policy improvement step. There are also algorithms in Dynamic programming which we can use to immediately estimate the value of the optimal policy in a more direct way.

And one way to think about this is that we're interleaving the greedification very tightly with the evaluation. So this is what value iteration does. And if we look at the second equation, what we see is, we immediately consider a greedy action with respect to one step look ahead according to our model. This is Dynamic programming.

We'll turn to the model-free approaches in a second, so here we're just assuming that we have a model so we're just going to consider the maximum value if we roll the model forward one step and then bootstrap on our current estimates that we have.

So you can view this as immediately doing a greedification of this estimate in one time loop.

And value iteration will also converge and it will converge to the optimal value function and hence you can infer the optimal policy.

For both these categories, policy evaluation and value iteration, there are two variants, one for state value learning and one for state action value learning.

Many of them can be turned into Model-free algorithms which is what we're going to do here.

We already talked about Temporal Difference learning and SARSA which can be viewd as sampling the policy evaluation algorithms.

There's an expectation which we can sample according to our current policy and then we can use this as a target to update slightly towards.

In doing so, we introduce a step size to only make a small step because we will have a noisy target unlike in dynamic programming and hence we want to somehow average out the noise and for this we use a small step size to make sure that we don't jump too far that the updates becomes too noisy.

And then we can see that TD corresponds to sampling this first one, whereas SARSA corresponds to sampling this third one. (previous slide equations (1), (3))

There's a third algorithm called Q-learning. It corresponds to sampling this last equation where there's also an expectation outside but there's a value iteration equation here which has a maximization over the actions inside.

So we see that Q-learning takes our state action value and updates it very analogously to SARSA where the only difference is how we bootstrap instead of considering the next action in the next state, we consider the best action that we could possibly take according to our current estimates and then use that as our estimate for the greedy value of our current value function and back that up.

Note that there's no trivial analogous sampled version of this algorithm which is the value iteration algorithm for state values.

Why would that be the case? Why are there four dynamic programming algorithms and only three model-free algorithms?

It's some sense a convenience thing where out of these dynamic programming equations, three of them have the expectation on the outside so it's very convenient to sample that, but the value iteration for state value functions has a maximization outside the expectation and that means that it's non-trivial to sample this. It's not immediately obvious what the model-free algorithm should look like if you wanted to do this. So that's why we don't have an analogous model-free algorithm here.

On-policy learning is about learning about a behavior policy from experienced samples from that policy. That's what we've been discussing so far, the Monte Carlo control algorithm and for SARSA. There was just one policy and that policy would be used to generate your behavior because you want to make predictions about the value of that policy.

We call that On-policy learning because we're learning about the policy that we're following.

We could also consider Off-policy learning which refers to everything else. So, On-policy learning is in some sense a specific case and Off-policy learning is everything else which means that we're learning about a target policy pi but the experience sample is from a different policy which we could call mu.

So keep that in mind that mu is also just a policy to select actions. It's a distribution of actions in each state. And then we want to learn about a potentially different policy, a target policy pi.

This refers to learning counterfactual about what ifs about things you could be doing.

Think about these as valid predictive questions that could be helpful for an agent in the world. These are Off-policy questions because they refer to asking questions about what if behavior that I'm not currently doing all th time.

So in general, we want to evaluate a target policy to compute the estimated value of that policy either the state value or state action value. And we're using a different policy mu to generate the actions.

So why is this important? One reason why you might want to do this is, you might want to learn from a database. Let's say you have some stored experience for instance from observed from human behaviour or from other agents from for instance data that was logged somewhere.

The behaviour there is not under your control so there is just some frequency of actions being selected in the data. And maybe you have a what-if question. What if we would have instead followed this strategy? Of course, the strategy should be supported by the data there should be some samples in the data that will support and give you data about that question. Otherwise, you can't learn. But this is a valid question that you could have where the experience might differ slightly, for instance all actions might be selected under the database but not on the same frequencies as you want to consider them. And then, learning Off-policy is kind of a natural thing to be doing.

somewhat similarly, you might want to reuse your own old experience. So far we've considered algorithms that learn online, so they consume data and update themselves or they wait until the end of the episode like Monte Carlo does and they update everything at the end of the episode, but then the data is thrown away and you move on to the new episode. And you only use the information from the new episode to update your policy from that point onwards.

This is a little bit wasteful and it's a fairly popular approach to store the data that you've seen and try to reuse that to learn more. But that means, if we're doing control, your policy may have changed in the meantime when you were collecting the data you may have had a worse policy for instance or just a different policy or you could have been exploring more at the beginning.

So that means that, in general, the behavior policy, the probabilities of selecting certain actions might be quite different in your old data than it is current. But you might still want to learn about it. You might still want to use it.

Separately, you could also want to learn about multiple things at the same time. So this is related to these what-if questions where even if we're following one policy and we're not trying to learn from past data or from other sources of data, we might still want to learn about many things, many what-if questions.

Because this is a way to accumulate knowledge about the world. So that refers to learning about multiple policies.

Therefore, all but one of these policies should be Off-policy. At most one can be exactly your behavior policy, so any other policies, you would require Off-policy learning to learn about them.

You might want to learn about the greedy policy while following an exploratory policy. This one is maybe obvious in the context of policy iteration where we're always interested in this policy improvement step which can be implemented by making your value functions or your policy greedy with respect to your value functions. So it's a valid question to ask what is the value of the greedy policy because, that's the policy that we're going to consider next. But we might not just want a sample from that greedy policy because you might not explore sufficiently often. This brings us back to this Q-learning algorithm.

Q-learning estimates the value of the greedy policy. It's conditioned on the state action pair. So we're considering a specific state and action pair. And then we're taking one-step reward and then in the next state, we consider acting greedily. So we are learning about the value if you take this action. But then in the next step you would be greedy and that's a valid target to update. And it would be learning about the greedy policy.

But you don't want to necessarily act all the time according to that policy, bacause that wouldn't explore sufficiently.

Now fortunately, it turns out if you do this Q-learning algorithm, if you do the tabular version specifically then, you will converge to the optimal action value function as long as we take each action in each state infinitely often.

This might similar to SARSA, but there's an important difference here which is that you don't need your behavior to become greedy. Q-learning can learn the optimal value function eventually even when you explore uniformly at random throughout your lifetime. Of course, to have actual convergence because this is a random process and you'll have random data. This is only true in the limit, so you do need to get infinite data in the limit. But you don't have to make your policy greedy anymore. That's a big difference between Q-learning and SARSA.

So this works for any policy that eventually selects all actions sufficiently often. The uniform policy is one example. Epsilon greedy will be a different example. But we no longer have to decay the epsilon.

You do require appropriately decaying step sizes, this is true for all of these algorithms including SARSA but also for policy evaluation with Temporal Difference learning.

Your step size is chosen to be such that if you sum over all time steps until infinity, that in total your step size will be infinitely large. This ensures that from any state action value estimates that you currently have you can always move far enough to find new estimates that are more more optimal.

And then in addition to that, we want that the sum over all time steps of the squared step size is less than infinite. It's a finite number. This ensures that eventually your step size becomes small enough that eventually you cancel out all the noise that you eventaully start averaging enough data in that you can get rid of all of your undertainties.

These two conditions together ensure convergence of the Q-learning algorithm if you also explore indefinitely.

For instance, a specific choice that you can consider is one divided by the time step to the power omega, where omega is somewhere between half and one. So the extremes here are one over t or one over square root of t. On the other hand, you would pick anywhere between those you would have these conditions so that would be a suitable step size parameter and we can see that the step size would then decay over time and eventually you make smaller and smaller updates.

Now we want to learn optimal value function and we want to have the optimal behavior but we can't explore because we are assuming that the agent doesn't know the structure of the problem. Otherwise we could use dynamic programming. So instead we're just going to learn from experience and we're not going to assume that the agent knows everything. So it has to try each action in each state.

We'll try these two different algorithms, SARSA or Q-learning to learn in this example. And we're going to use an epsilon greedy policy here.

The acual rewards per episode that these different algorithms attain throughout learning, after less than 100 episodes, maybe after even 50 episodes or so, the algorithms have both stabilized to a certain policy which gives us them a certain amount of reward per episode.

Interestingly, SARSA has stabilized around -25 which means that it takes roughly 25 steps to reach the goal whereas Q-learning has saved lives around -50 that mean Q-learning also takes roughly around 50 steps.

It means that Q-learning regularly falls into the Cliff. So why does this happen? Why does Q-learning perform worse here than SARSA? Didn't I explain that both of them are optimal in the sense that they converge to the optimal policy and in fact I didn't say that we're decaying the epsilon here which we're not.

And didn't we say that Q-learning can converge to the optimal value function when we don't decay the epsilon whereas SARAS cannot.

So why is SARSA performing better than Q-learning?

The reason is that SARSA is an On-policy algorithm and being an On-policy algorithm means that SARSA will evaluate the policy that we're actually following and will give us valid estimates, acccurate estimates for that policy.

So at first the policy is changing but at some points, it will be stabilized even though we still have an epsilon. So there will be some randomness to the policy, but the policy will be stable because the epsilon is not changing but also because the action values won't change that much anymore. And that means that even though the epsilon greedy policy depends on your action values, if your action values don't change that much anymore, the policy can become quite stable.

But this policy does explore and that means that there's some non-zero probability that the policy will fall into the Cliff and that's captured here in these average rewards per episode.

So what does SARSA do? SARSA being On-policy algorithm, accurately estimates that if you have this epsilon, if there's a probability to explore, then if you would be very close to the Cliff, there's a pretty high probability that you'll fall in. Let's say the epsilon is 0.1, that means that, 10 percent of the time you pick a random action from this state. So this could mean that you either go back or you go up or you go right or you follow to the Cliff. So if you try to walk along this optimal path, there are many opportunities for the algorithm to step into the Cliff and get this reward of -100. SARSA is estimating these values. So it starts to say, well if I have a choice, maybe I shouldn't walk so close to the Cliff because I know I will explore. I know that there will be some randomness in my policy I know what the value is all that policy and turns out maybe then if I'm very close to the Cliff, the higher value action is to actually step away from the Cliff first to this safer path which is a couple of steps away from the Cliff in which you can reliably get to the goal. Because if on the safe path, you pick a random action you could step down but then you have an opportunity to step up again on the next step of course occasionally it can happen that you explore twice in a row it could be that you step down randomly and the step down again but then if you're on the safe path at least you can recover again immediately, whereas if you're immediately next to the Cliff, just one exploratory action will toss you in.

So why then is Q-learning performing worse? What we're evaluating here is the actual rewards per episode which does include this exploration. This means that Q-learning has estimated by now accurately the values of the optimal policy and, maybe the values of the optimal policies will tell you to walk along this optimal path.

But then if you execute epsilon greedy algorithm, will try to walk along the path but occasionally will be bumped back or up but then location will also fall into the Cliff. So on average, because Q-learning is not taking into account that it's actually exploring, it will do worse.

However, if you take both these algorithms, let's say here at the end of learning and then you would look ate the action values and then execute the greedy policy according to both these algorithms, then Q-learning would actually have found a shorter path and it would actually walk very reliably next to the Cliff without any exploration towards the goal.

SARSA does better because it takes into account the exploration that we're doing but Q-learning has actually found a more optimal policy if we would only execute that policy instead of the exploratory one.

This is more meant to illustrate differences between On- and Off-policy learning than it is to say SARSA is better than Q-learning or vice versa.

Bootstrap target used in Q-learning: we're picking the policy that is greedy with respect to these action values. There's a policy that picks the highest valued action, and then we consider evaluating the value of that action. This is equivalent to just taking the maximum action value.

We can notice that we're using the same value function to select this action and then to evaluate the action.

But these action values are approximate, they could be wrong.

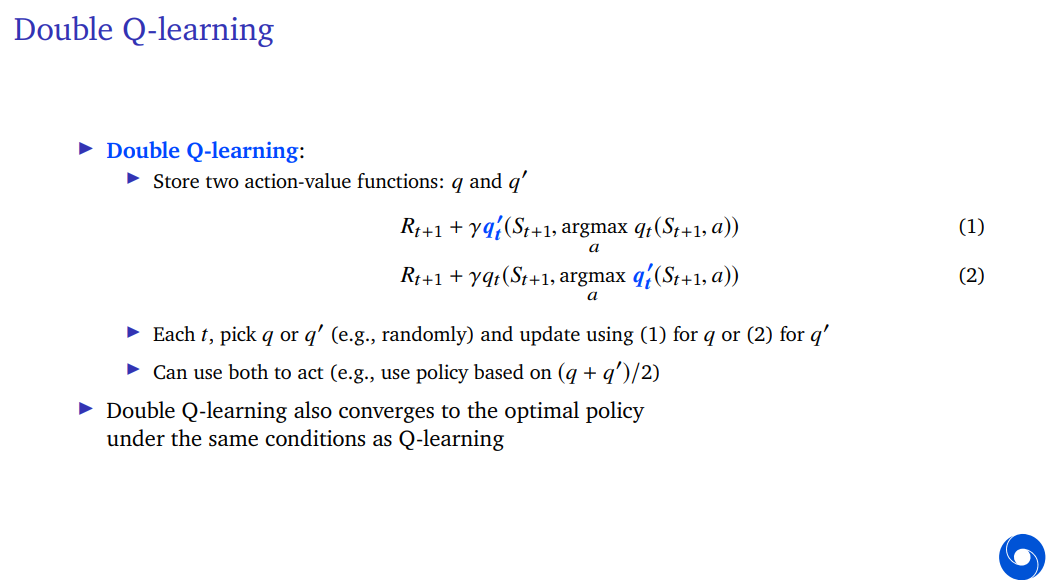

So the double here refers to the fact that we're going to maintain two different state action value functions. And we're going to introduce a new action value function q'.

So in equation (1), we see that we have our standard thing with the one step reward and then discounted value of the next state, but the value of the next state is defined by picking an action according to our normal action value function q, but then evaluation the value of that action with q'.

We could do the inverse. We could pick according to q' and then evaluate according to q.

Then we can use each of these as targets for these two different action value functions.

So on every time step what we'll do is, we'll pick one of the action value functions to be updated. So either q or q' and then, if we pick q, we use equation (1) to update it and if we pick q', we use equation (2), but we never update both at the same time, because this would correlate them. And then we would just be doing Q-learning again.

But if instead we pick either one or the other, this de-correlates the action values, they see similar data but different sub-slices of the data and this means that we can get a somewhat unbiased estimate of the greedy action according to one of them.

So we're still picking greedy. This greedificaiton step, this selection process is still likely to pick an action with a high value, but in as much as that value is purely high, because of for instance random noise. Then having a separate evalution learned on different data might help us to get an unbiased estimate for the value of that policy.

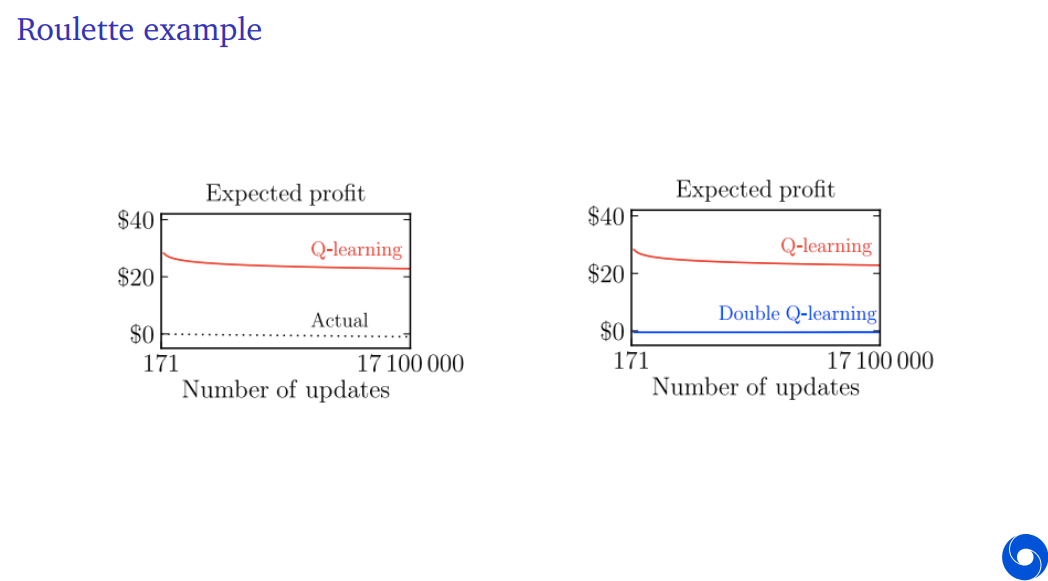

Double Q-learning might be important in practice and indeed it's quite a common component these days of many deeplearning algorithms and the reason why it's important is, because these over-estimations do actually happen. They don't just happen because of random noise as in the roulette example, they also happen because of function approximation inaccuracies. So there's various reasons why your value estimates might be inaccurate and then, double learning can be quite useful to help mitigate that.

It's more general than double Q-learning. And it can be generalized to other updates. For instance, if we're doing SARSA, you might think you're safe because there's no max in the SARSA updates, we're just looking at the actual high of the next action, but turns out that's not quite sufficient to be safe. Because we're still actually picking that action beause it has a high value if we're doing something like greedy or epsilon greedy.

So that means that SARSA can also overestimate and then therefore we can also think about using the same solution. This could lead to something we could call double SARSA where we still use two action value estimates.

Q-learning: pi is the greedy policy according to your current action value estimates and mu can be any exploratory policy.

There's one important caveat you keep in mind thoughout which is you can never expect to learn about things that you never do. You can't expect to learn about policy if this policy contains actions that you would never take according to your behavior policy. Then you simply don't have the data to support this.

The goal is to find some function f with random inputs x and distribution d', we want to estimate the expectation of f of x under a different distribution. And the solution to this is to re-weight the function.

So the idea is that, this function is going to be update to our weight. We have some sort of an update to our weights under some sort of distribution. And we want to re-weight this as if we're doing the update under a different distribution.

We want to sample f(x) under some distribution d, but we don't have d. We're generating the data under a different distribution.

What does this mean, this means if we sample this final thing, we would get an unbiased estimate of the thing that we were looking for on the LHS.

There is an assumption here, which is that the data should support. In the ratio, whenever d is non-zero, d' also needs to be non-zero. Otherwise, this is not well-defined, and you can't do this.

The intuitive explanation was, if you never do anything, if you've never sampled a certain x under d', you can't expect to learn from it.

Now we're going to apply this to reinforcement learning. And we're going to start simple. So we're first going to apply this to one-step rewards.

Let's say you are doing policy evaluation for a bandits, but you're sampling according to a different policy that you are interested in. So our behavior will be mu as before and we're going to consider the expected reward.

When following a policy mu, we can use this re-weighted sample as an unbiased sample for the expected reward under policy pi.

Now we can apply this to estimating a value function estimate. So we're going to generate some trajectory. W're going to use tau to refer to this trajectory generated with our behavior policy mu.

We should have the probability of the full trajectory under our policy pi divided by the probability of the trajectory under our policy mu. This would be the appropriate re-weighting in order to get an unbiased estimate of the return under policy pi.

For instance, pi could be our greedy policy, mu could be our behavior policy. And we want to estimate the value of the greedy policy.

We get ratios of policies on every time step. We just divide them with each other. So we divide the target policy by the behavior policy. We multiply all of those together and then we can multiply those with the return. And this will give us an unbiased estimate of the value under pi.

It can be a noisy estimate and we do need this condition that the behavior policy will have some probability of selecting all of the actions that the target policy does. Because we never want to divide by zero. But if we have that then we can use this as an estimate.

For instance, if we want to estimate the greedy policy, and we have a uniformly random policy that we're acting with, we could re-weight the samples that we get and then we could use those as unbiased estimate for our target policy, our greedy policy.

In fact, if we consider a greedy target policy, we can see that, if any of the actions selected along the way were not greedy, then this total sum would just be zero. We're just going to ignore returns that don't correspond to the greedy policy in that case.

And then anyone where all of the target policies are one, so in the case where we are considering estimating the value of greedy policy, and we have found a trajectory where we always select that action corresponding to the greedy policy, then we would upscale this return.

So on average compared to all those zeros that we also accumulate on average we get an unbiased estimate.

So this is generic technique for Off-policy learning. We can generate data under some policy and then evaluate any other policy.

Now this might be a bit unreally, for the greedy policy you would have to select all of the actions exactly right in order to learn a valid greedy policy and that might not happen that often. A different way to say that is, this is a very high variance estimate. So what do we do when we have high variance? We turn to Temporal Difference learning.

And we can use this to construct Temporal Difference targets that will also do the same thing.

So what we're going to do is, we're going to use our Temporal Difference target where v is going to be an estimate of the value of policy pi. So we're going to re-weight this target with important sampling but then we only need one step.

We only need one ratio of the probability of the target policy divided by the probability of the behavior policy. This has much lower variance on Monte Carlo important sampling.

'Research > RL_DeepMind' 카테고리의 다른 글

[Lecture 7] (2/2) Function Approximation (0) 2024.08.03 [Lecture 7] (1/2) Function Approximation (0) 2024.08.03 [Lecture 6] (1/2) Model-Free Control (0) 2024.07.31 [Lecture 5] Model-Free Prediction (0) 2024.07.30 [Lecture 4] Theoretical Fundamentals of Dynamic Programming (0) 2024.07.30