
[Lecture 4] Theoretical Fundamentals of Dynamic Programming

Research/RL_DeepMind, 2024. 7. 30. 14:47

https://www.youtube.com/watch?v=XpbLq7rIJAA&list=PLqYmG7hTraZDVH599EItlEWsUOsJbAodm&index=4

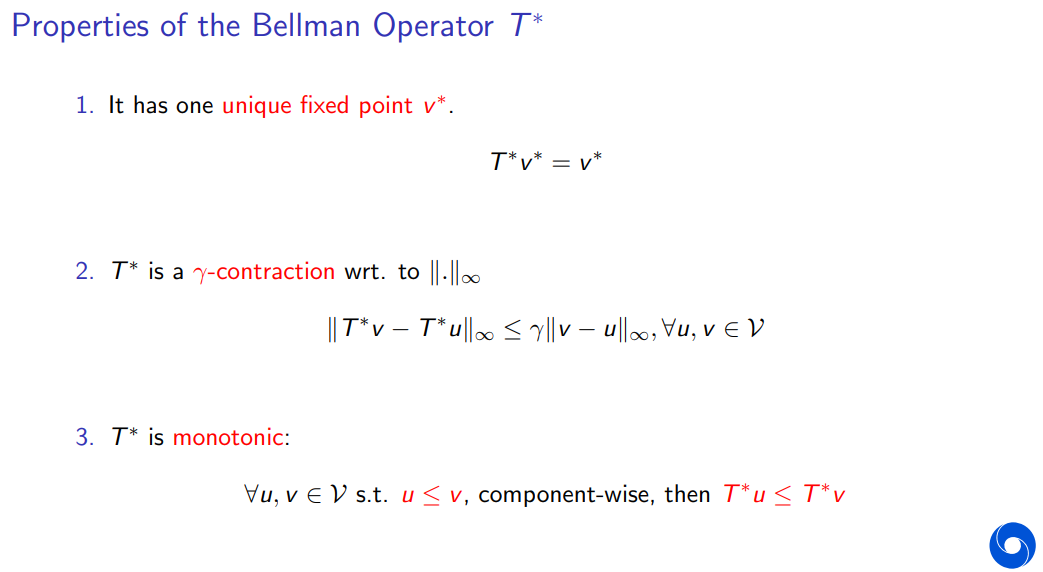

For any two points x, y in this vector space, applying the contraction mapping T shrinks the distance between them by at least a factor of α: ||Tx − Ty|| ≤ α||x − y||, with 0 ≤ α < 1.
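A quick numerical sanity check of this inequality, as a minimal sketch. Assumptions (not from the lecture): T is an affine map T(x) = r + γPx with a row-stochastic matrix P (the shape of a policy-evaluation Bellman operator), distances are measured in the max norm, and the matrices and names (`P`, `r`, `max_ratio`) are hypothetical examples. Random sampling only illustrates the bound; it does not prove it.

```python
import numpy as np

gamma = 0.9
# Hypothetical row-stochastic transition matrix (each row sums to 1) and reward vector.
P = np.array([[0.5, 0.5, 0.0],
              [0.1, 0.8, 0.1],
              [0.0, 0.3, 0.7]])
r = np.array([1.0, 0.0, -1.0])


def T(x):
    """Affine operator T(x) = r + gamma * P x (hypothetical example)."""
    return r + gamma * P @ x


def max_ratio(op, dim, trials=1000, seed=0):
    """Largest observed ||op(x) - op(y)||_inf / ||x - y||_inf over random pairs."""
    rng = np.random.default_rng(seed)
    worst = 0.0
    for _ in range(trials):
        x = rng.normal(size=dim)
        y = rng.normal(size=dim)
        num = np.max(np.abs(op(x) - op(y)))
        den = np.max(np.abs(x - y))
        worst = max(worst, num / den)
    return worst


ratio = max_ratio(T, dim=3)
# The observed ratio should never exceed gamma (up to floating-point rounding).
print(ratio, ratio <= gamma + 1e-9)
```

The reward r cancels in Tx − Ty, so only γP matters; a row-stochastic P averages the entries of x − y, which is why the max-norm ratio stays at or below γ.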

For any sequence x_n in the space that converges w.r.t. this norm to a point x, applying the mapping to each element gives a sequence that converges in the same norm to T(x), since ||Tx_n − Tx|| ≤ α||x_n − x|| → 0. In other words, a contraction is continuous.

If you apply this operator to a fixed point x*, you get the same point back: Tx* = x*. The operator doesn't change it.
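A minimal sketch of repeatedly applying a contraction until it stops moving, assuming the hypothetical 1-D map T(x) = 0.5x + 1 (contraction factor α = 0.5, fixed point x* = 2 since 0.5 · 2 + 1 = 2). The helper name `iterate_to_fixed_point` and the tolerance are illustrative choices.

```python
def T(x):
    """Hypothetical contraction on the real line with factor 0.5 and fixed point 2."""
    return 0.5 * x + 1.0


def iterate_to_fixed_point(op, x0, tol=1e-10, max_iter=1000):
    """Apply x_{n+1} = op(x_n) until successive iterates differ by less than tol."""
    x = x0
    for n in range(1, max_iter + 1):
        x_next = op(x)
        if abs(x_next - x) < tol:
            return x_next, n
        x = x_next
    return x, max_iter


for start in (100.0, -50.0):
    x_star, steps = iterate_to_fixed_point(T, start)
    print(start, round(x_star, 6), steps)  # both starts land on 2.0
```

Starting far away on either side makes no difference: each step halves the distance to 2, so the iterates settle on the one point that T leaves unchanged.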

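A thought that just popped into my head:

It's like... spreading out a map and imagining that the Earth it's lying on gradually shrinks... then some point must stay put, unchanged by the shrinking, and this is exactly that..?!?? haha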

* Complete normed space (Banach space): every Cauchy sequence in the space converges, and its limit still lies in the space.

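The distance between an element x_n of the sequence and the fixed point x* shrinks geometrically at rate γ (the contraction factor): ||x_n − x*|| = ||Tx_{n−1} − Tx*|| ≤ γ||x_{n−1} − x*||, so ||x_n − x*|| ≤ γ^n ||x_0 − x*||.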

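Letting n go to infinity, the upper bound goes to zero, so the sequence converges to the fixed point. The fixed point is also unique: if x* and y* were both fixed points, ||x* − y*|| = ||Tx* − Ty*|| ≤ γ||x* − y*||, which with γ < 1 forces ||x* − y*|| = 0.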
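The bound and the uniqueness argument can be checked numerically. This is a minimal sketch reusing a hypothetical affine contraction T(x) = r + γPx with row-stochastic P (not from the lecture); its fixed point is computed exactly by solving (I − γP)x* = r. The helper names `check_bound` and `run` are illustrative.

```python
import math
import numpy as np

gamma = 0.9
# Hypothetical 3-state example: T(x) = r + gamma * P x with a row-stochastic P.
P = np.array([[0.5, 0.5, 0.0],
              [0.1, 0.8, 0.1],
              [0.0, 0.3, 0.7]])
r = np.array([1.0, 0.0, -1.0])


def T(x):
    return r + gamma * P @ x


# The fixed point solves x* = r + gamma P x*, i.e. (I - gamma P) x* = r.
x_star = np.linalg.solve(np.eye(3) - gamma * P, r)


def check_bound(x0, n_steps=200):
    """True if ||x_n - x*||_inf <= gamma**n * ||x_0 - x*||_inf for every n checked."""
    e0 = np.max(np.abs(x0 - x_star))
    x = x0.copy()
    for n in range(1, n_steps + 1):
        x = T(x)
        if np.max(np.abs(x - x_star)) > gamma**n * e0 + 1e-9:
            return False
    return True


def run(x0, n_steps=400):
    """Apply T n_steps times starting from x0."""
    x = x0.copy()
    for _ in range(n_steps):
        x = T(x)
    return x


x0_a = np.array([10.0, -10.0, 5.0])
x0_b = np.array([-3.0, 7.0, 0.0])
print(check_bound(x0_a), check_bound(x0_b))  # True True
# Both starting points end up at the same (unique) fixed point.
print(np.allclose(run(x0_a), x_star), np.allclose(run(x0_b), x_star))

# Iterations the bound guarantees are enough to get within eps of x*, from x0_a.
eps = 1e-6
e0 = np.max(np.abs(x0_a - x_star))
n_needed = math.ceil(math.log(eps / e0) / math.log(gamma))
print(n_needed)
```

Solving γ^n · e0 ≤ ε for n gives n ≥ log(ε / e0) / log γ, which is how `n_needed` is computed; as γ approaches 1, log γ approaches 0 and the guaranteed iteration count grows.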

In particular, this proves convergence of value iteration: as the number of iterations goes to infinity, the induced sequence of value functions v_k converges to the fixed point of the Bellman optimality operator T*, which happens to be v* (T*v* = v*).
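To make T*v* = v* concrete, a minimal sketch on a hypothetical two-state, two-action MDP (not from the lecture): action 0 "stay" keeps the state and pays reward 1 in state 0 and 0 in state 1; action 1 "switch" moves to the other state with reward 0. With γ = 0.9, staying in state 0 forever is worth 1/(1 − γ) = 10, and from state 1 the best move is to switch, worth 0.9 · 10 = 9, so v* = [10, 9]. The code applies (T*v)(s) = max_a [R(s, a) + γ Σ_s' P(s' | s, a) v(s')] and checks both the fixed-point equation and the γ-contraction in the max norm.

```python
import numpy as np

gamma = 0.9
# P[a, s, s'] = probability of moving s -> s' under action a; R[a, s] = reward.
P = np.array([
    [[1.0, 0.0], [0.0, 1.0]],   # action 0: stay
    [[0.0, 1.0], [1.0, 0.0]],   # action 1: switch
])
R = np.array([
    [1.0, 0.0],                 # stay: reward 1 only in state 0
    [0.0, 0.0],                 # switch: no reward
])


def bellman_optimality(v):
    """(T* v)(s) = max_a [R(s, a) + gamma * sum_s' P(s'|s, a) v(s')]."""
    q = R + gamma * P @ v          # shape (actions, states)
    return q.max(axis=0)


# v* = [10, 9] should be left unchanged by T*.
v_star = np.array([10.0, 9.0])
print(np.allclose(bellman_optimality(v_star), v_star))  # True

# Empirical max-norm contraction ratio over random pairs of value functions.
rng = np.random.default_rng(0)
worst = 0.0
for _ in range(1000):
    u = rng.normal(size=2) * 10
    w = rng.normal(size=2) * 10
    diff_out = np.max(np.abs(bellman_optimality(u) - bellman_optimality(w)))
    diff_in = np.max(np.abs(u - w))
    worst = max(worst, diff_out / diff_in)
print(worst <= gamma + 1e-9)  # True
```

The max over actions does not break the contraction because |max_a f(a) − max_a g(a)| ≤ max_a |f(a) − g(a)|.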

The value iteration algorithm always converges as long as γ < 1, because T* is then a γ-contraction in the max norm ||·||∞.
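A minimal value-iteration loop, reusing the hypothetical two-state "stay/switch" MDP (γ = 0.9, v* = [10, 9]) so the block runs on its own. The stopping rule ||v_{k+1} − v_k||∞ < tol is a common practical choice assumed here, not something stated in the lecture; because T* is a γ-contraction, it guarantees ||v_{k+1} − v*||∞ ≤ γ/(1 − γ) · tol.

```python
import numpy as np

gamma = 0.9
# Hypothetical MDP: P[a, s, s'] transitions, R[a, s] rewards (a=0 stay, a=1 switch).
P = np.array([
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.0, 1.0], [1.0, 0.0]],
])
R = np.array([
    [1.0, 0.0],
    [0.0, 0.0],
])


def bellman_optimality(v):
    """(T* v)(s) = max_a [R(s, a) + gamma * sum_s' P(s'|s, a) v(s')]."""
    return (R + gamma * P @ v).max(axis=0)


def value_iteration(tol=1e-8, max_iter=10_000):
    """Iterate v_{k+1} = T* v_k from v_0 = 0 until ||v_{k+1} - v_k||_inf < tol."""
    v = np.zeros(P.shape[1])
    for k in range(1, max_iter + 1):
        v_next = bellman_optimality(v)
        if np.max(np.abs(v_next - v)) < tol:
            return v_next, k
        v = v_next
    return v, max_iter


v, iters = value_iteration()
# Greedy policy with respect to the converged values.
greedy = (R + gamma * P @ v).argmax(axis=0)
print(np.round(v, 4), iters)   # values close to [10, 9]
print(greedy)                  # [0 1]: stay in state 0, switch from state 1
```

Raising γ toward 1 weakens the contraction, so the same tolerance takes more iterations to reach.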
