
[Lecture 3] Markov Decision Processes and Dynamic Programming

Research/RL_DeepMind 2024. 7. 29. 18:11

https://www.youtube.com/watch?v=zSOMeug_i_M&list=PLqYmG7hTraZDVH599EItlEWsUOsJbAodm&index=3

Everything that has happened so far in the interaction process is summarized in the current state: all the information relevant to future transitions is contained in the current state (the current observation).

The principle here is that the state at the current time step contains all the information necessary for predicting the future. Anything that happened before time t is irrelevant, because all of that information is captured in S_t.
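Stated as a formula, the Markov property says that conditioning on the full history adds nothing beyond the current state and action. The history symbol H_t is notation introduced here for illustration:

```latex
p(S_{t+1} = s', R_{t+1} = r \mid S_t, A_t) = p(S_{t+1} = s', R_{t+1} = r \mid H_t, A_t),
\qquad H_t = \{S_0, A_0, R_1, \ldots, A_{t-1}, R_t, S_t\}
```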

(3) is false; (1), (2), and (4) are true.

If we know the optimal value function, and in particular the optimal action-value function q*, we can derive an optimal policy simply by acting greedily with respect to it. This policy chooses, with probability one, the action that maximizes q* and puts zero probability on all other actions. In other words, it looks at the values of q* and acts greedily with respect to them, and this gives us an optimal policy.
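As a minimal sketch, assume a small tabular problem where q* is already known and stored as a list of per-state action values. Extracting the greedy policy π*(a|s) = 1 if a = argmax_a' q*(s, a'), and 0 otherwise, then looks like this. The function name `greedy_policy` and the q* numbers are hypothetical, chosen only for illustration:

```python
from typing import List


def greedy_policy(q: List[List[float]]) -> List[List[float]]:
    """Build a deterministic policy pi[s][a] from a tabular q[s][a].

    Each state puts probability 1.0 on the action with the largest q-value
    and 0.0 on every other action. Ties go to the lowest action index,
    because max() returns the first maximal element it encounters.
    """
    policy = []
    for q_s in q:
        best = max(range(len(q_s)), key=lambda a: q_s[a])
        policy.append([1.0 if a == best else 0.0 for a in range(len(q_s))])
    return policy


# Hypothetical q* table for 2 states x 2 actions (values made up for illustration).
q_star = [[1.0, 3.0], [2.5, 0.5]]
print(greedy_policy(q_star))  # [[0.0, 1.0], [1.0, 0.0]]
```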

If we act greedily with respect to the values here, we get a pretty good policy; in fact, we get the optimal way of behaving in this environment.

Based on that evaluation, that is, on those values, taking a greedy improvement step gives a very good policy. In this particular case, it is an optimal policy.

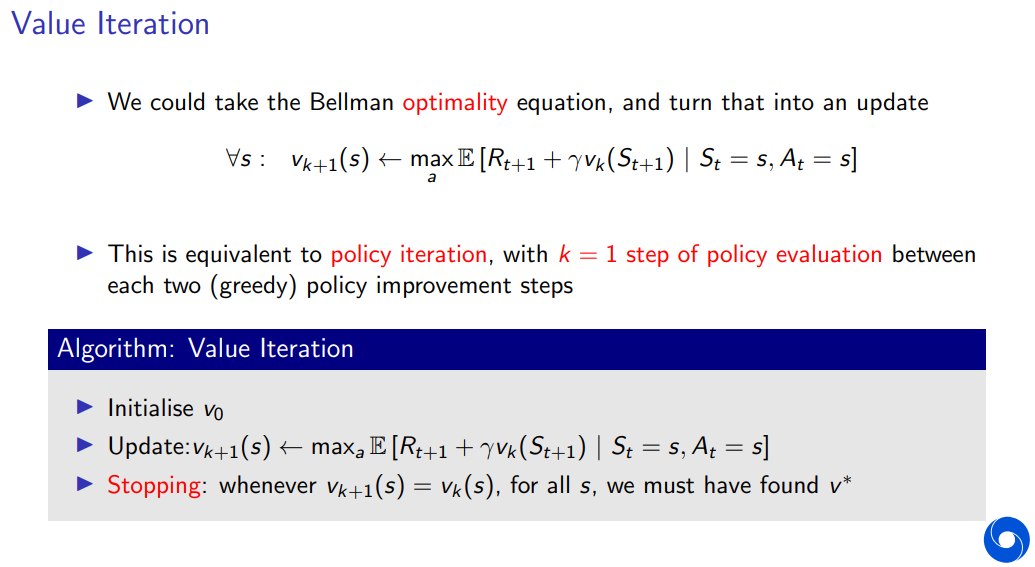

This is a very general principle in reinforcement learning and in dynamic programming: evaluate a policy, then take a greedification step. The new policy produced by the greedification step is guaranteed to be at least as good as the policy we started with.

We start with a policy π and evaluate it. We then obtain a new policy π_new by greedifying with respect to these values. Taking this step is guaranteed to give a policy whose value, in every state, is at least as high as that of the policy on which the improvement was based.
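The guarantee can be written out as follows. The first inequality holds because a maximum is at least any weighted average of the same values; the final implication is the policy improvement theorem, which follows from repeatedly expanding v_π with this inequality:

```latex
\begin{aligned}
\pi_{\text{new}}(s) &= \arg\max_{a} q_{\pi}(s, a) \\
\Longrightarrow\quad q_{\pi}\big(s, \pi_{\text{new}}(s)\big) &= \max_{a} q_{\pi}(s, a) \;\ge\; \sum_{a} \pi(a \mid s)\, q_{\pi}(s, a) = v_{\pi}(s) \\
\Longrightarrow\quad v_{\pi_{\text{new}}}(s) &\ge v_{\pi}(s) \quad \text{for all } s.
\end{aligned}
```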

The policy iteration algorithm is an iterative procedure that alternates between policy evaluation (building an estimate of v_π or q_π) and an improvement step with respect to this estimate, which produces a new policy that is at least as good as the previous one.

The greedification step is one instance of a policy improvement step. There are other ways of improving a policy, but this is one of the most widely used and best-known principles in reinforcement learning and dynamic programming.

We start with a value function and a policy. We evaluate that policy, then greedify with respect to that evaluation to get a different policy. With this new policy, we perform the evaluation step and the greedification step again. (More generally, the greedification can be any improvement step.) We repeat these steps until convergence.
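The loop described above can be sketched in Python for a tiny tabular MDP. Everything here is an illustrative assumption rather than the lecture's code: the transition format `P[s][a]` as a list of `(probability, next_state, reward)` triples, the helper names, the discount γ = 0.9, and the two-state example. Policy evaluation runs in-place sweeps until the largest update falls below `theta`, and the improvement step is the greedification described above.

```python
from typing import List, Optional, Tuple

# Assumed tabular format: P[s][a] is a list of (probability, next_state, reward).
Transition = Tuple[float, int, float]
MDP = List[List[List[Transition]]]


def q_value(P: MDP, v: List[float], s: int, a: int, gamma: float) -> float:
    """One-step lookahead: E[R + gamma * v(S') | s, a]."""
    return sum(p * (r + gamma * v[s2]) for p, s2, r in P[s][a])


def evaluate_policy(P: MDP, policy: List[int], gamma: float,
                    theta: float = 1e-10) -> List[float]:
    """Iterative policy evaluation of a deterministic policy (in-place sweeps)."""
    v = [0.0] * len(P)
    while True:
        delta = 0.0
        for s in range(len(P)):
            new_v = q_value(P, v, s, policy[s], gamma)
            delta = max(delta, abs(new_v - v[s]))
            v[s] = new_v
        if delta < theta:
            return v


def greedify(P: MDP, v: List[float], gamma: float,
             current: List[int], tol: float = 1e-9) -> List[int]:
    """Greedy improvement: argmax_a q(s, a) per state, keeping the current
    action on (near-)ties so that policy iteration cannot cycle."""
    new_policy = []
    for s in range(len(P)):
        qs = [q_value(P, v, s, a, gamma) for a in range(len(P[s]))]
        best = max(range(len(qs)), key=lambda a: qs[a])
        if qs[current[s]] >= qs[best] - tol:
            best = current[s]
        new_policy.append(best)
    return new_policy


def policy_iteration(P: MDP, gamma: float,
                     policy: Optional[List[int]] = None) -> Tuple[List[int], List[float]]:
    """Alternate evaluation and greedification until the policy stops changing."""
    policy = [0] * len(P) if policy is None else list(policy)
    v = evaluate_policy(P, policy, gamma)
    while True:
        new_policy = greedify(P, v, gamma, policy)
        if new_policy == policy:  # greedification gave the same policy back
            return policy, v
        new_v = evaluate_policy(P, new_policy, gamma)
        # Sanity check of the improvement guarantee (up to evaluation error).
        assert all(nv >= ov - 1e-6 for nv, ov in zip(new_v, v))
        policy, v = new_policy, new_v


# Hypothetical 2-state MDP: action 0 = "stay", action 1 = "switch state".
P: MDP = [
    [[(1.0, 0, 0.0)], [(1.0, 1, -1.0)]],  # state 0: stay (r=0) or switch (r=-1)
    [[(1.0, 1, 2.0)], [(1.0, 0, 0.0)]],   # state 1: stay (r=+2) or switch (r=0)
]
policy, v = policy_iteration(P, gamma=0.9)
print(policy)  # [1, 0]
# v is approximately [17.0, 20.0]
```

Ties in the greedy step keep the current action, so the loop cannot cycle between equally good policies and the "same policy back" stopping test is well defined. In this example, starting from "stay everywhere", one greedification already reaches the policy [1, 0] (switch out of state 0, stay in state 1), and the next greedification returns it unchanged.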

Once greedifying with respect to the evaluation gives back the same policy, we have converged. At that point we have the optimal value function, and acting greedily with respect to the optimal value function gives us an optimal policy.
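To see why convergence implies optimality (for a deterministic policy π and γ < 1): if greedification returns π unchanged, then π already picks a maximizing action in every state, so v_π satisfies the Bellman optimality equation. That equation has v* as its unique solution, hence v_π = v* and π is optimal:

```latex
\pi_{\text{new}} = \pi
\;\Longrightarrow\;
v_{\pi}(s) = q_{\pi}\big(s, \pi(s)\big) = \max_{a} \mathbb{E}\left[ R_{t+1} + \gamma\, v_{\pi}(S_{t+1}) \mid S_t = s, A_t = a \right]
\quad \text{for all } s.
```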
