-

[Lecture 2] Exploration and ExploitationRL/RL_DeepMind 2024. 7. 29. 17:58

https://www.youtube.com/watch?v=aQJP3Z2Ho8U&list=PLqYmG7hTraZDVH599EItlEWsUOsJbAodm&index=2

One interesting property that we're going to talk about a lot is that it's an active learning setting. This means that these actions they don't just change the reward. They don't just tell you how well you're doing but they also influence the data that you see and this is going to be important. And this is different from some other types of machine learning because normally in other types of machine learning we might assume that a data set is given to us and we want to answer some questions given that data set. We want to learn something from the data set, but the data is given and is not under our control.

In reinforcement learning in the full setting, this is different. The data is in some sense under our control but in some indirect sense. We can take actions to change the data, we can't deliberately pick out certain data points and this means that the agent has to actively seek out information that it can use to learn from.

Reward distribution per action.

We want an algorithm, a learning algorithm that trades off exploration and exploitation in such a way that if you apply this algorithm throughout your life that you get reasonable high reward.

Of course this reward can't be optimal all of the time because you don't know what the optimal action is yet but this way of writing down the goal including all the steps that you ever take allows us to reason about which learning algorithms are more effective than others.

And we will specifically optimize this cummulative reward of your lifetime by learning a policy which in this case is some distribution on the set of actions. During learning it's useful to have stochastic policies which pick actions according to some random distribution and the policy will change over time as we gather more data.

For all the other actions, this is a positive quantity. So regret enumerates how bad we're doing. The more regret, you get the worse you're doing. If your regret is zero or close to zero, you're doing quite well.

This notion of regret is quite a common one in multi-armed bandits, because it allows us to reason about how quickly does this regret grow over time and turns out this growth rate is very indicative of how good an algorithm is. So we go to algorithms that for which the regret doesn't grow too quickly over the full lifetime of learning.

Greedy, e-greedy, UCB: these approaches use action value estimates.

So we are going to keep track of some big q of t (Q_t), I use big q (Q) there, because it's a random quantity because it depends on your past observations and this big q of a (Q_t(a)) at some time step t is supposed to approximate the actual true value of that action a. So little q(a) is a true expected reward given a and big q (Q) is an approximation thereof.

We take our action value at time step t and we define this to be the action value of the previous time step t-1 plus some learning rate alpha or step size parameter alpha times an error term.

And the error term is the reward that you've received given that you've taken this action minus the average for that action so far.

And all of the other actions, we simply don't update them because we haven't taken those actions at this time step, so we don't update the mean.

And then we also increment the count for the action that we've taken.

And we define the step size parameter to be one over the count.

Then this is just a different way to write down the same average we had on the previous.

A notation of pi to the policy and, pi of action a is the probability of selecting that action which in this case is just an indicator wich checks that the action is the one that maximizes the value.

Greedy algorithm: the greedy policy doesn't explore enough. It keeps on exploiting the knowledge that it thinks it has. But it doesn't realize or doesn't reason about uncertainty about the value estimate S. There was this one action which we only selected a single time so we should have some very high uncertainty about having a valid accurate estimate of its value.

So an alternative is to add some noise that keeps on exploring and this is what epsilon greedy does.

The way a softmax policy works is that you exponentiate these preferences and then you just normalize. So we see an exponentiated preference for action a divided by the sum of all of the exponentiated preferences for the other actions.

By exponentiating we get a positive number so we know that all of these probabilities must be positive and we know because we're normalizing with the sum that they must sum to one so this is a well-defined policy on these actions.

And then we just select an action according to this policy. Note that the preferences themselves are not supposed to be action values per se. We could plug in action values into a softmax policy for sure but now we're considering them just to be learnable policy parameters and we're going to ask the question how could we update these preferences in such a way that the policy gets better over time.

So the goal is to optimize the preferences so that the policy becomes very good.

What we're going to do is we're going to consider the policy parameters, these preferences to be something that we can just learn and we're going to try to learn them with gradients.

We want to do gradient ascent on the value of the policy. The value of the policy is the expected reward given that you're following this policy. Note that the softmax policy is a stochastic policy. So this means that it might select different actions under different probabilities.

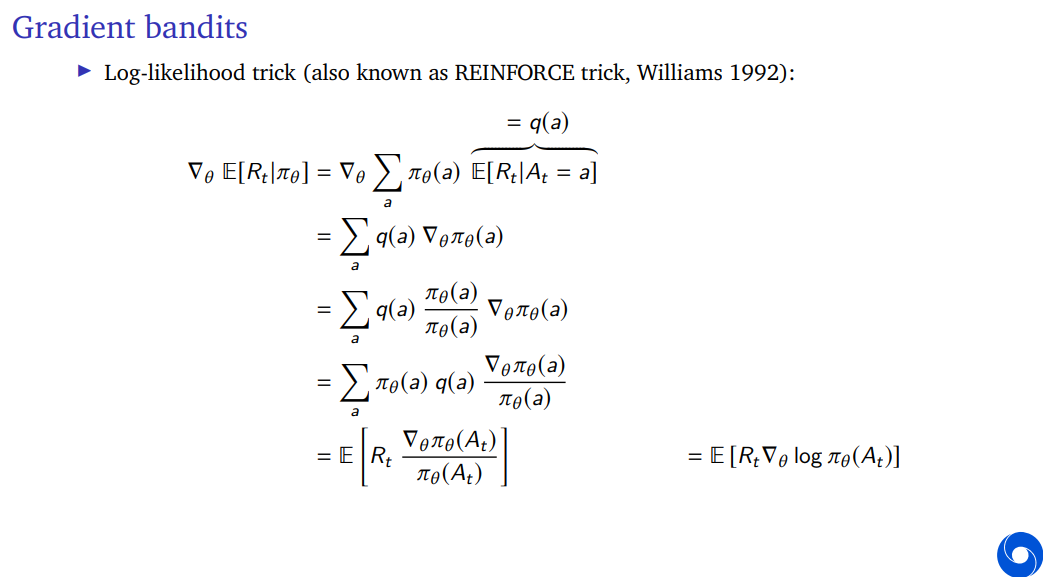

The gradient of the expectation of the reward given that policy -> expectation of reward times the gradient of the logarithm of your policy.

(policy: probability of selecting action At).

Now we have something that we can sample. We can get rid of the expectation in some sense in our updates by just sampling the thing inside. And then we would be following the stochastic gradient rather than the true gradients. But we know from practice that stochastic gradient descent and stochastic gradient ascent are quite useful algorithms and they work quite well.

We are now able to rewrite the gradient of the expectation of the reward under the policy parameters as the expectation of the reward times the gradient of the logarithm of the policy of taking that action At.

So we can sample this and turn this into a stochastic gradient ascent algorithm which means we're going to update our policy parameters theta, again this can be thought of as a vector containing your action preferences.

And we're going to add a learning rate times the reward times the gradient of the logarithm of the probability of selecting an action.

We can just execute this over and over again and then we would be performing stochastic gradient ascent on our values which means the policy should be getting better and better over time.

We can use the sampled rewards. We don't need to make any value estimates.

====> 이거 수식 도출 못 풀었다 ㅋㅋ

The preference for action At, the one that you selected gets updates by adding learning rate times reward times 1 - probability of selecting the action.

Whereas the preferences for all the other actions get updated by subtracting a learning rate times the reward times the probability of selecting that action.

Let's plug in a specific value. Let's say we saw a reward of +1. What now happens to these preferences? The action that we selected will get updated to be higher because the step size is a positive quantity, the reward is plus one and this 1 - probability of selecting the action is also the positive quantity. So the preference for selecting action At will increase if the reward is positive.

If the reward is +1, at the same time, the preference for all the other actions will go down a little bit and note also that how much they go down depends on how likely they were to be selected. Actions that were very unlikely to be selected don't go down that much, actions that were very likely to be selected go down more because we saw a different action that was quite good at this point.

How much do they go up or down that depends on your step size parameter.

So what then happens is that the preference increased for the action that had +1 and if you get a reward of -1, the preference for the action that you've selected will go down and the preference for all the other actions will go up.

If you have only positive rewards, then always whenever you take an action, the preference will increase but the actions with the higher average reward will increase faster which means that over the long run we're still following a valid gradient the policy will converges to the right policy.

The exploration here is not very explicit though. The exploration is purely due to the fact that the policy happens to be stochastic. This does work often if your policy is stochastic enough for a long enough amount of time. You'll learn enough about your policy and it's a valid gradient algorithm which means that it will continue to go up.

The only problem is that the gradient itself. Because it's a gradient algorithm, it can get stuck in a local optimum. So there's no guarantee that this algorithm will converge to the right policy. And there's no guarantee that it won't also suffer from linearly increasing regret over time because it could get stuck at a sub-optimal policy similar to the greedy policy. But it turns out to be a lot better in practice often.

And it's quite a common approach also because we can extend this algorithm quite easily to deep neural networks but it's good to keep in mind that this doesn't solve the full exploration problem just by itself.

We can modify the Policy gradient algorithms slightly by changing the mean of the reward in some sense. And the way that works is, we first know that the probabilities sum to ones. This is a valid probability. So they must sum to one and what this means is that for any number b, which is just some number, we can multiply the gradient of the policy with this b and what we see is that, we have b times the gradient of one, but the gradient of one is simply zero.

So this whole quantity on the LHS is always zero and it doesn't matter what b is as long as b does not depend on your actions.

Now why is this important turns out, we can use that any b and subtract it from the reward and this can change the weight of the algorithm works in practice.

For instance, instead of having a reward of +1, +2, we can now effectively get rewards of 1/2, -1/2. So this is not important turns out for the expected value because of this derivation above. It does not change the expected direction of the gradients, but it can change the variance of the update and this is a specifically useful in full case.

Later on we will have different states and then this value b is not allowed to depend on your actions but it is allowed to depend on state that means it can covariate a little bit with the rewards that you might get and might therefore reduce variance quite a lot.

The definition of delta is the maximum action value of v* minus the action value for action a.

(1) total regret at time t = summation over time where we count in n is one all the way up to the current time step t. And we just count the regret that we get at every time step. Out regret is going to be equal to the summation of all the instantaneous regret that you get at every time step.

(2) Instead of over time, we're going to sum over all of the actions and then simpley look at the count for each of these actions up to time t. So we can just for each action, consider how often did we take that action and how bad was it. We want that to be low.

Our goal is to have the total regret to be small. So a good algorithm will ensure small counts whenever the regret is large. And the regret is small, the count can be a bit bigger.

The regret for the optimal action is always zero no matter what the count is. But for all the other actions we do care.

The algorithm that will be able to do well in this regime can in some sense all be considered to implement this strategy which is called optimism in the face of undertainty.

Whenever we're uncertain about the value of an action, we want to be optimistic about how good it could be and then we want to pick it more often if we're more uncertain about its value. So we want to be optimistic that it could be good.

If we have more uncertainty about its value, maybe it's more important to explore that action because the mean could actually be high we're not sure where the mean is and it could be that it's higher than we think it currently is.

We're going to have some notion of uncertainty and we're going to estimate the upper confidence for each action value such that we're pretty sure that the actual action value q(a) will be smaller or equal to our current estimate of the action value plus this upper confidence bound.

We want to pick this large enough that if we're very uncertain that we're still certain that the mean is lower than this number but we want to also pick it small enough that this eventually converges to the true mean.

And then we're going to select our actions greedily but not really greedy with respect to just the action value estimate Q, but with respect to Q plus this bound U.

So we're going to define some sort of a time dependent and action dependent bound that depends on the uncertainty of the action. And we're going to define it in such a way that if we're very uncertain about an action then we'll pick it. If we're quite certain about the action, the only other reason why we might pick the action is because the estimate itself is high.

We're going to use something called the concentration inequality. It gives us a bound on how wrong our estimate will be.

What does this capture? If we don't have a lot of samples, if N is pretty small, then this bound will be pretty large. If N grows larger, this bound will go down.

In addition to that, the bound grows over time. What does this mean? This means that indefinitely we're going to continue to select each action but maybe less and less so, if it's really not a great action.

So the fact that this grows with the square root of log of t ensures that we keep exploring every action but not that much.

Because if the action is really not that great, at some point the uncertainty starts growing growing because of this log t term. So whenever we don't select an action slowly the bound is creeping up but quite slowly because log t uses slow growing function and the square root of log t is even slower but then when we do select the action, the bound goes down. So then the only reason to keep on selecting that action is if the estimate the mean is large enough.

We can prove that in total, the products of this gap times the count will be bounded by the logarithm of t for all actions.

That means, we have now established an algorithm which has logarithmic regret as an upper bound.

Because we know that the regret is also logarithmic as a lower bound, this means that we now know that the algorithm will have logarithmic regret.

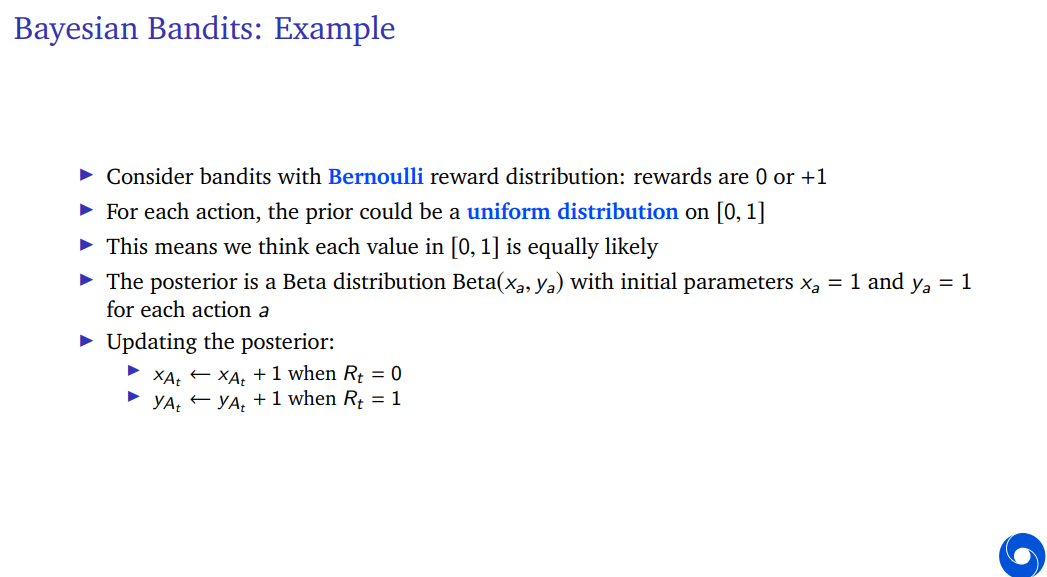

In the Bayesian approach, we keep track of a distribution and in particular, we're going to keep track of a distribution over the expected value for each action.

We're not trying to model the distribution of the rewards, instead we're going to quantify our uncertainty about where the expected reward is and then update that over time as we go.

We could again go back to principle of optimism in the face of uncertainty and one simple thing we could do is we could look at the standard deviation of each of these beliefs and pick kind of like an upper confidence bound.

It is optimistic in the face of uncertainty, because if you have a large uncertainty about where your action will be, then the probability of it being maximal also goes up.

'RL > RL_DeepMind' 카테고리의 다른 글

[Lecture 6] (1/2) Model-Free Control (0) 2024.07.31 [Lecture 5] Model-Free Prediction (0) 2024.07.30 [Lecture 4] Theoretical Fundamentals of Dynamic Programming (0) 2024.07.30 [Lecture 3] Markov Decision Processes and Dynamic Programming (0) 2024.07.29 [Lecture 1] Introduction - Reinforcement Learning (0) 2024.07.29