-

[Lecture 6] (1/2) Model-Free ControlResearch/RL_DeepMind 2024. 7. 31. 00:02

https://www.youtube.com/watch?v=t9uf9cuogBo&list=PLqYmG7hTraZDVH599EItlEWsUOsJbAodm&index=6

Policy iteration refers to interleaving two separate steps which are called policy evaluation and policy improvement.

We start with some arbitrary initial value function for instance they could all be zeros and some arbitrary policy for instance uniformly random and then the first step could be to evaluate this policy.

Then the value function is now fully accurate for the value of that policy. That would be the policy evaluation phase.

Then we've reached this value function, we can do a policy improvement step. For instance we can make the policy greedy and this policy improvements that will change the policy in such a way that we get a new policy pi' such that the value of that policy in every state is at least as high as the value of the policy that we were just evaluating and picking a greedy policy is one way to achieve this.

But because we're changing the policy, that means that our value function will become inaccurate because it's an evaluation of the previous policy but we've now changed our policy which means we can do the evaluation phase again for this new policy and again find the true values for that policy.

And then because the value function has changed, greedifying the policy with respect to that new value might also change the policy and so on.

When this ceases to change anything, that means that by definition, we must have found the optimal value function because it means that the true value of the policy is equal to when you then consider the greedy value and that by definition will be the optimal value function.

We're going to use this to build model-free algorithms because before with dynamic programming, the policy evaluation phase, we consider dynamic programming algorithms which can draw upon knowledge of the precise Markov Decision Process but we are not wanting to make that assumption anymore, so instead we'll come up with approximate methods that sample and then do this evaluations phase approximately and turns out this will still be okay. This can still lead to converging algorithms.

We see an update which could be taken to be a Monte Carlo value update where we're in some episode depicted n and, there was a time step t inside that episode in which we saw a state St and from that state on what we saw a return Gt and then we just incrementally update the value of that state towards that.

Monte Carlo would use a full return. So the reward plus the discounted next reward plus the doubly discounted reward after that and so on which we can write on recursively as well where the return is the first reward plus the discounted rest of the return. That would be our Monte Carlo target.

This is a fine target to use although it might be quite high variance in some cases.

Alternatives might have lower variance like TD0. TD0 is exactly the same algorithm which we could also call one step TD. This refers to the fact that we're taking one step reward. We see one reward and then we use bootstrapping which refers to the usage of the value function estimate itself to take the place of the random return.

So if we compare it on Monte Carlo, we saw Monte Carlo we were using the random rest of the return whereas in Temporal Difference learning, we replaced that with our current estimate of the expected return.

This tends to be much lower variance. It can suffer from bias in some cases.

So we also discuss intermediate approaches that one can use for instance, n-step approaches where we just take a fixed number of steps in this case n different steps which means we look at n different rewards and then we bootstrap the state n steps in the future which can be written down semi-recursively as well where the n step return takes one step and then looks at the n-1 step return.

There's different mechanisms you could use to do model-free policy evaluation and in each of these cases, the goal was to estimate the value of the current policy. So there's some policy pi, this is used to generate these transitions, to generate the behavior and we're just estimating the value of that policy.

We want to improve the policy and one way to do that is to make it greedy. However greedy policy improvement over state value functions does require a model of the Markov Decision Process. Because the greedy policy would then be something like this where we take the argmax over this expectation of the transition. That's a bit cumbersome because we are not model free anymore.

So now the policy improvement step seems to require a model but fortunately there's a way around that. We can just instead of estimating state values, we could also choose to estimate state action values.

And then we can much more easily find the greedy policy by picking the highest valued action in every state. This would give us a new policy. So q here cound be an estimate of q_pi of the true value of the policy. And the pi' cound be a greedy policy with respect to these estimates. Then having a new policy, we could try to estimate that one instead.

The whole idea is to plug in these model-free estimates into the policy evaluation phase of policy iteration.

We're going to estimate the value of the policy and then go greedy. The greedy step is facilitated by using these action values. It's very easy to then if you have these action values, construct a greedy policy and this can be done model-free.

Now in order to make the full algorithm model-free, we also want this evaluation step to be model-free and one way to do that is, to use Monte Carlo policy evalution.

So we could just consider following a certain policy and do that indefinitely long, many many samples and we all the way convert this true action values.

There is a problem if we go fully model-free which is that if we really pick the greedy policy then we wouldn't explore which means we wouldn't actually sample all state action pairs when learning by interacting.

If you have a fully greedy policy and you're trying to evaluate that with a Monte Carlo algorithm, that means that you should be following that greedy policy but if you're following the greedy policy it might simply not select certain actions in certain states. And that means that we don't have a reliable estimate of the state action value from those actions. Which means that then if we consider the evaluation phase this will be maybe severely hampered and then the improvement step could be wrong.

So instead of doing full greedification, we could also consider an epsilon greedy policy where we still allow with some probability epsilon to pick any action turns out this is okay this is why this procedure is called generalized policy iteration.

Recall that for the policy improvement step, we just needed that the new policy was better but we don't need this to be fully greedy. So it turns out epsilon greedy can be plugged in here as well.

Now we have a full model-free algorithm that can be use for policy iteration and you might question, well sure but you're not doing the full evaluation anymore, you're not doing full greedification, is this algorithm still sound? Does this still converge to the actual optimal value function and the optimal policy?

What we're doing in terms of the algorithm, we're going to sample episodes according to our current policy then we're going to do policy evaluation, so we're going to model-free learn the value of that policy.For instance we could pick a step size that decays over time because otherwise we might have irreducible variance which might make our action values to be quite inaccurate.

And then in addition to that it might also be useful to decay the uniformness of the policy in the epsilon greedy policy. This will make it make sure that eventually you're selecting the greedy policy. This is a small change the epsilon greedy policy where we're allowing the epsilon to be dependent on the number of episodes you've seen so far or the time steps.

So we see that the evaluation and the exploration are both time dependent here but on every time step we're doing this approximate version where we have some policy right now we're evaluating that with some step size and some samples and then we're going to go partly greedy.

The reason to pick this epsilon is that, it actually has a certain property which is desirable. We call that property "Greedy in the limit with infinite exploration".

What does tha mean, greedy in the limit means that eventually you will become fully greedy and this is important to converge to the optimal policy eventually.

You should pick the right action and you shouldn't have this epsilon probability of picking a wrong action indefinitely. So what we want is eventually should become fully greedy but you should also explore infinitely often.

So what does the second thing mean, we can formalize that by saying the count for all state action pairs should go infinity as time goes to infinity. This ensures that we are going to evaluate everything properly. So if you continue to select every state action pair indefinitely often, that means you eventually have enough data to have a good evaluation of the value of the current policy and then this means that the greedification step can be trusted.

"Greedy in the limit" can be formalized as this where we say our stochastic policy, epsilon greedy, as time goes to infinity, should eventually become fully greedy with respect to whatever the current action values are.

These two conditions, they put in opposite directions. "greedy in the limit" can be achieved by being immediately greedy from the beginning but then you wouldn't explore infinitely often. Likewise you could "explore infinitely often" if you just kept the fixed epsilon but you wouldn't be greedy in the limit.

So are there exploration policies that do both of these at the same time? The answer to that is yes. For instance if you have an epsilon greedy policy that decays the epsilon with one over the number of episodes or one over the number of time steps in total, this would actually have both these properties if you're careful about decaying the epsilon properly.

If you have a "greedy in the limit exploration policy" which also explores infinitely often, so a GLIE policy, then Model-free control with Monte Carlo estimates converges to the optimal action-value function in the limit.

That's reassuring we've kept the optimality that we had from our dynamic programming algorithms but we can do this now in a fully model-free approach.

Temporal-Difference learning can learn from incomplete sequences which also means that for instance if the episodes are really long or if you only have exactly one episode in your whole lifetime, Temporal Difference learning can still learn whereas Monte Carlo would not be able to learn during the episode.

So an idea would be to use Temporal Difference methods instead of Monte Carlo methods to do control. So we wanted to apply Temporal Difference learning to the action value functions to have the same convenience of being able to easily pick a greedy or an epsilon greedy policy. And then we could use epsilon greedy policy improvement as before.

But we could also continue to update every time step because Temporal Difference learnig can learn from individual transitions. It doesn't need complete episodes.

One way to do that is the SARSA algorithm. SARSA is Temporal Difference learning for state action values.

One way to depict this is to look at this diagram where we started an action which corresponds to some action a taken from some state s and then we see some reward r and we reach a new state s' and then we consider already what the next action will be under our policy a' and then we use this to construct an update.

So our target here will be the immediate reward plus a discounted next state action value of S_t+1, A_t+1.

And we're going to compare this target to our current estimate, have a small step size to get rid of the variance or the noise in this update. And then we update our action value functions incrementally using this update.

We're going to do generalized policy iteration again but now we're going to consider instead of taking full episodes or even multiple episodes, we're just going to do this time step by time step.

So each evaluation phase will be one time step long and then we immediately consider the epsilon greedy policy with respect to our new action values.

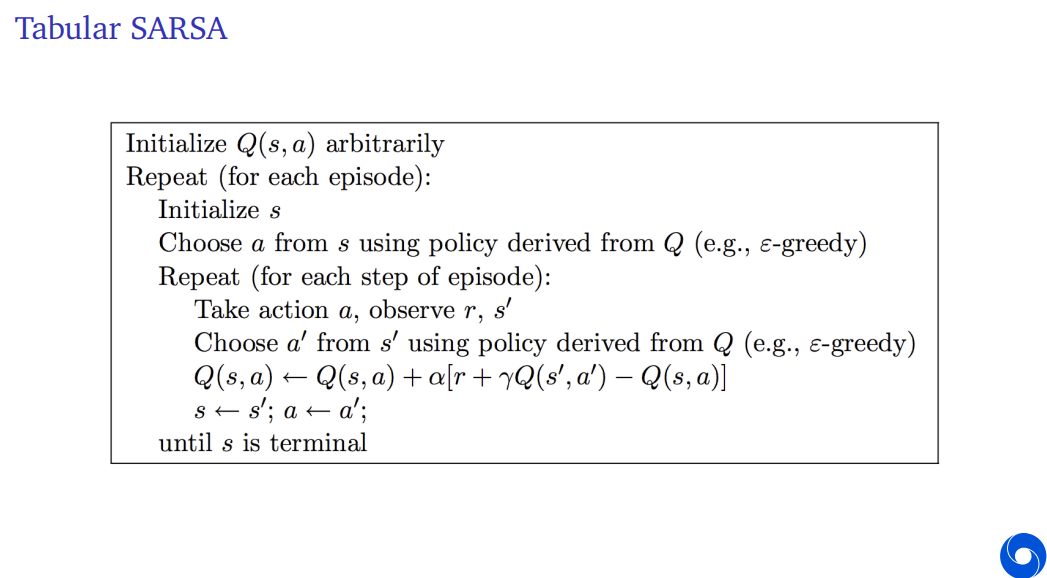

This is for the tabular algorithm. So we're going to use a capital Q to refer to specific cell in the table corresponding to some state and action and now for each state action pair, we're just going to arbitrarily initialize this for instance at zero.

And then we have this double loop where the outer loop is on episodes and the inner loop is on steps within the episode.

So each episode starts by initializing the state here. Think of state as the inital state of your episode which is given to you by the environments for instance these states could simply be the observation and in the simplest case the observation could be the full environment state if you're in a fully observable problem that would be the case and then as agent state, you could use that observation more generally when say states this should be the agent states. So the inital agent state could be due from the first observation in the episode somehow inferred from that.

Then we immediately pick an action according to our policy and the policy is derived from the action value typically although it doesn't have to be for this algorithm we could also be using SARSA for pure prediction but in this case if we're wanting to do a control, the policy could derive from our action values and could be epsilon greedy.

And then in the episode, we repeat the following steps where we execute that action in the environment which means we can then observe a reward in the next state or next observation that helps us construct the next state.

And then we immediately pick the action that we're going to execute in that next state and then again this is from a policy derived from the action values and then we use that action to update because we're going to update the state action value by adding this Temporal Difference error which is the reward plus the discounted state action value at the next time step minus our current estimate.

And then we replace s with s' and a with a'.

And we start to loop again. So on the next step we'll execute this a' that we've just chosen. So we picked the action here and then on the next loop we'll execute it in the world and then this just continues until the state is terminal.

So refresh the final step of the episode and then we repeat the next episode. We start again in a new state. So this would be the SARSA algorithm.

There is a theorem that this algorithm converges. And we need the same condition that we needed for Monte Carlo control which is that the policy should be "greedy in the limit with infinite exploration".

The "greedy in the limit" ensure that the action at the next time step will eventually become the greedy action so that we're actually evaluating the greedy policy instead of evaluating whatever policy we currently happen to follow that would be a different thing

And the "infinite exploration" is just to make sure that we actually update all state action values indefinitely often because otherwise we might have irreducible uncertainty about some of these action values which might lead us to draw the wrong conclusions about which policies are preferred.

'Research > RL_DeepMind' 카테고리의 다른 글

[Lecture 7] (1/2) Function Approximation (0) 2024.08.03 [Lecture 6] (2/2) Model-Free Control (0) 2024.08.02 [Lecture 5] Model-Free Prediction (0) 2024.07.30 [Lecture 4] Theoretical Fundamentals of Dynamic Programming (0) 2024.07.30 [Lecture 3] Markov Decision Processes and Dynamic Programming (0) 2024.07.29