-

PretrainingResearch/NLP_Stanford 2024. 6. 23. 13:16

※ Writing while taking a course 「Stanford CS224N NLP with Deep Learning」

※ https://www.youtube.com/watch?v=DGfCRXuNA2w&list=PLoROMvodv4rMFqRtEuo6SGjY4XbRIVRd4&index=9

Lecture 9 - Pretraining

Why should this pretraining, fine-tuning, two-part paradigm help?

Pretraining provides some starting parameters, L(theta). So this is all the parameters in your network from trying to do this minimum over all possible settings of your parameters of the pretraining loss.

And then, the fine-tuning process takes your data for fine-tuning.

You've got some labels. And it tries to approximate the minimum through gradient descent of the loss of the fine-tuning task of theta. But you start at theta hat. So you start gradient descent at theta hat, which your pretraining process gave you. And then, if you could actually solve this min and wanted to.

The starting point really does matter! => need check!!

The process of gradient descent, it stick relatively close to the theta hat during fine-tuning. So you start at theta hat, and then you walk downhill with gradient descent until you hit a valley. And that valley ends up being really good because it's close to the pretraining parameters,which were really good for a lot of things.

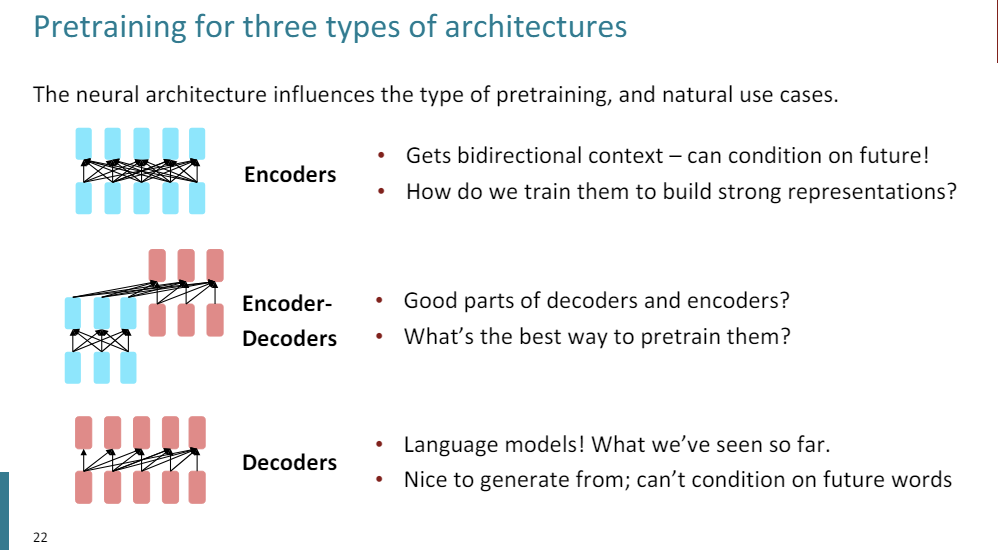

Encoders get bidirectional context. You have a single sequence, and you're able to see the whole thing, like an encoder in machine translation.

Encoder-Decoders have one portion of the network that gets bidirectional context. So that's like the source sentence of my machine translation system.

And then they're paired with a decoder that gets unidirectional context so that I have this informational masking where I can't see the future so that I can do things like language modeling, I can generate the next token of my translation, whatever. So you could think of it as, I've got my source sentence here and my partial translation here, and I'm decoding out the translation.

And then decoders only are things like language models.

And there's pretraining for all three large classes of models.

How you pre-train them, and then how you use them depends on the properties and the proclivities of the specific architecture?

Fine-tuning all parameters of the network versus just a couple of them.

What we've talked about so far is you pre-train all the parameters, and then you fine-tune all of them as well. So all the parameter values change.

An alternative, which you call parameter efficient or lightweight fine-tuning, you choose little bits of parameters, or you choose some very smart way of keeping most of the parameters fixed and only fine-tuning others.

The intuition is that these pretrained parameters were really good. And you want to make the minimal change from the pretrained model to the model that does what you want so that you keep some of the generality, some of the goodness of the pretraining.

One way that this is done is called prefix tuning. Prompt tuning is very similar - where you freeze all the parameters of the network. So I've pretrained my network here. And I never change any of the parameter values.

Instead, I make a bunch of fake pseudo-word vectors that I prepend to the very beginning of the sequence.

And I train just them. It's like these would have been like inputs to the network, but I'm specifying them as parameters, and I'm training everything to do my sentiment analysis task just by changing the values of these fake words.

This is nice because I get to keep all the good pretrained parameters and then just specify diff that ends up generalizing better. This is a very open field of research.

This is also cheaper because I don't have to compute the gradients, or I don't have to store the gradients and all the optimizer states with respect to all these parameters. I'm only training a very small number of parameters.

Another thing that works well is taking each weight matrix. So I have a bunch of weight matrices in my transformer, and I freeze the weight matrix and learn a very low-rank little diff.

And I set the weight matrix's value to be the original value plus my very low-rank diff from the original one. And this ends up being a very similarly useful technique.

The overall idea here is that, I'm learning way fewer parameters than I did via pretraining and freezing most of the pretraining parameters.

For encoder-decoders, we could do something like language modeling. I've got my input sequence here, encoder output sequence here.

And I could say this part is my prefix for having bidirectional context. And I could then predict all the words that are in the latter half of the sequence, just like a language model, and that would work fine.

I practice, what works much better is "span corruption". Idea is a lot like BERT, buit in a generative sense where I'm going to mask out a bunch of words in the input. And then, at the output, I generate mask token, and then what was supposed to be there, but the mask token replaced it.

What this does is that it allows you to have bidirectional context. I get to see the whole sequence, except I can generate the parts that were missing. So this feels a little bit like BERT. You mask out parts of the input, but you generate the output as a sequence like you would in language modeling.

This might be good for machine translation, where I have an input that I want bidirectional context in, but then I want to generate an output and I want to pre-train the whole thing.

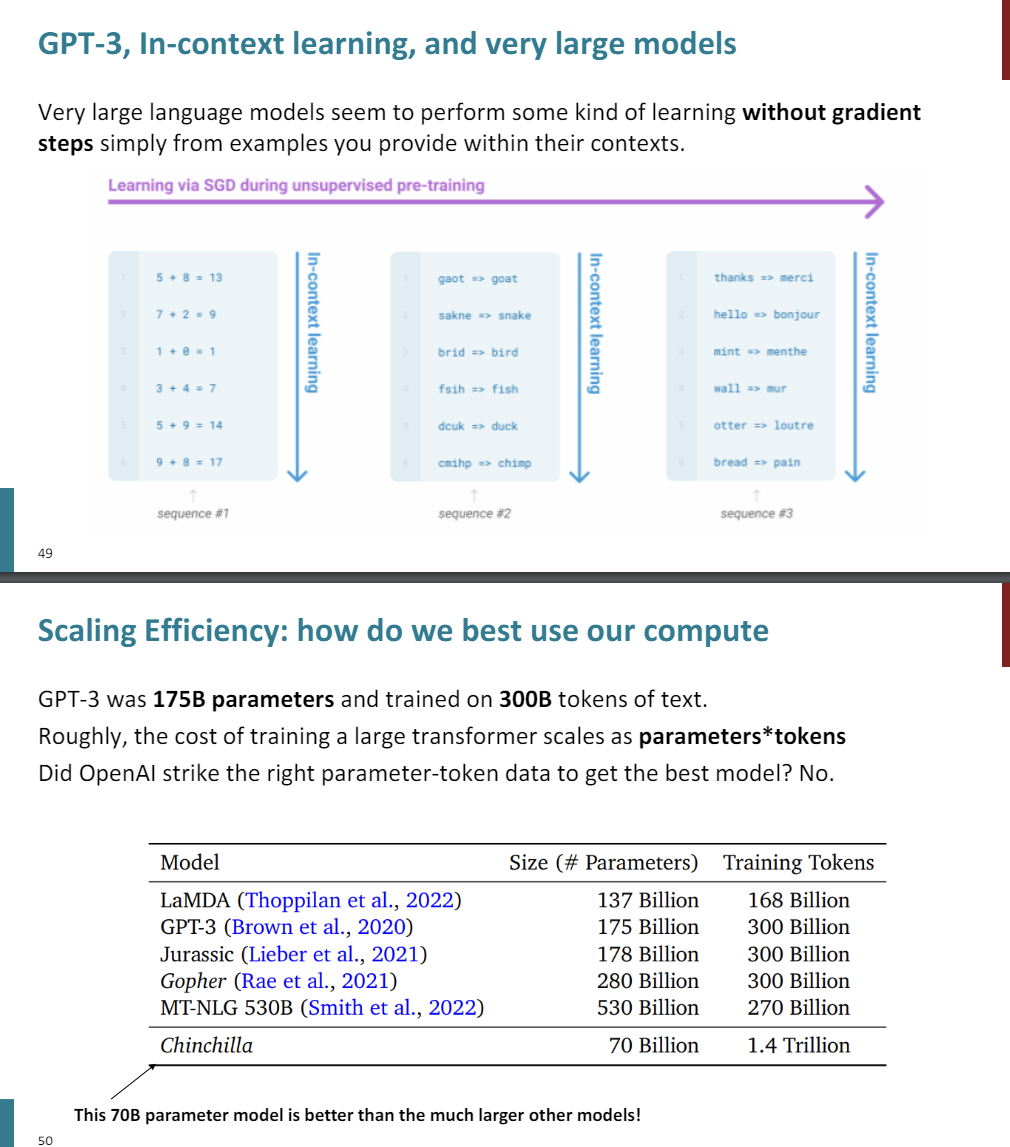

There were these emergent properties that showed up in much larger models. And it wasn't clear when looking at the smaller models that you'd get this new, this qualitatively new bebavior out of them.

It's not obvious from just the language modeling signal. GPT-3 is just trained on that decoder only, just predict the next word, that it would, as a result of that training, learn to perform seemingly quite complex things as a function of its context.

Amazing...!

This should be quite surprising... So far, we said -- we've talked about good representations, contextual representations, meanings of words, and context.

This is some very, very high-level pattern matching. It's coming up with patterns in just the input data and that one sequence of text that you've passed it so far and it's able to identify how to complete the pattern.

And you should think, what kinds of things can this solve? What are its capabilities? What are its limitations?

This ends up being an open area of research.

What are the kinds of problems that you maybe saw in the training data log? Maybe GPT-3 saw a ton of pairs of words. It saw a bunch of dictionaries, bilingual dictionaries, in its training data. So it learned to do something like this. Or is it doing something much more general, where it's really learning the task in context?

The actual story, w're not totally sure. It's something in the middle. It seems like it has to be tied to your training data in ways that we don't quite understand. But there's also a non-trivial ability to learn new, types of patterns just from the context. So this is a very interesting thing to work on.

Another way of interacting with these networks that has come out recently is called Chain of Thought.

We saw in the "in-context learning", that the prefix can help specify what task you're trying to solve right now. And it can do even more. So here's standard prompting.

We have a prefix of examples of questions and answers. So you have a question and then an example answer. So that's your prompt that's specifying the task. And then you have a new question, and you're having the model generate an answer, and it generates it wrong.

Chain-of-Thought Prompting says, well, how about in the example? In the demonstration we give the question, and then we give decomposition of steps towards how to get an answer. So I'm actually writing this out as part of the input.

I'm giving annotations as a human to say to solve this word problem. And then I give it a nwe question. And the model says, I know what I'm supposed to do. I'm supposed to first generate a sequence of steps, of intermediate steps, and then next say the answer is -- and then say what the answer is.

And it turns out, this hsould again be very surprising, that the model can tend to generate plausible sequencse of steps and then much more frequently generates the correct answer after doing so relative to trying to generate the answer by itself.

So you can think of this as a scratchpad. you can think of this as increasing the amount of computation that you're putting into trying to solve the problem.

You writing out your thoughts. As I generate each word of this continuation here, I'm able to condition on all the past words so far. And so maybe it just allows the network to decompose the problem into smaller, simpler problems, which is more able to solve each.

No one's really sure why this works exactly, either. At this point, with networks that are this large, their emergent properties are both very powerful and exceptionally hard to understand and very hard you should think to trust because it's unclear what its capabilities are, and what its limitations are, where it will fail.

특이점이 온 것 같다....!

'Research > NLP_Stanford' 카테고리의 다른 글

Reinforcement Learning from Human Feedback (RLHF) (0) 2024.06.24 Prompting (0) 2024.06.24 Self-Attention & Transformers (0) 2024.06.22 Neural Machine Translation (0) 2024.06.22 Sequence-to-Sequence model (0) 2024.06.22