-

[Lecture 11] (2/2) Off-Policy and Multi-Step Learning*RL/RL_DeepMind 2024. 8. 9. 01:04

https://www.youtube.com/watch?v=u84MFu1nG4g&list=PLqYmG7hTraZDVH599EItlEWsUOsJbAodm&index=11

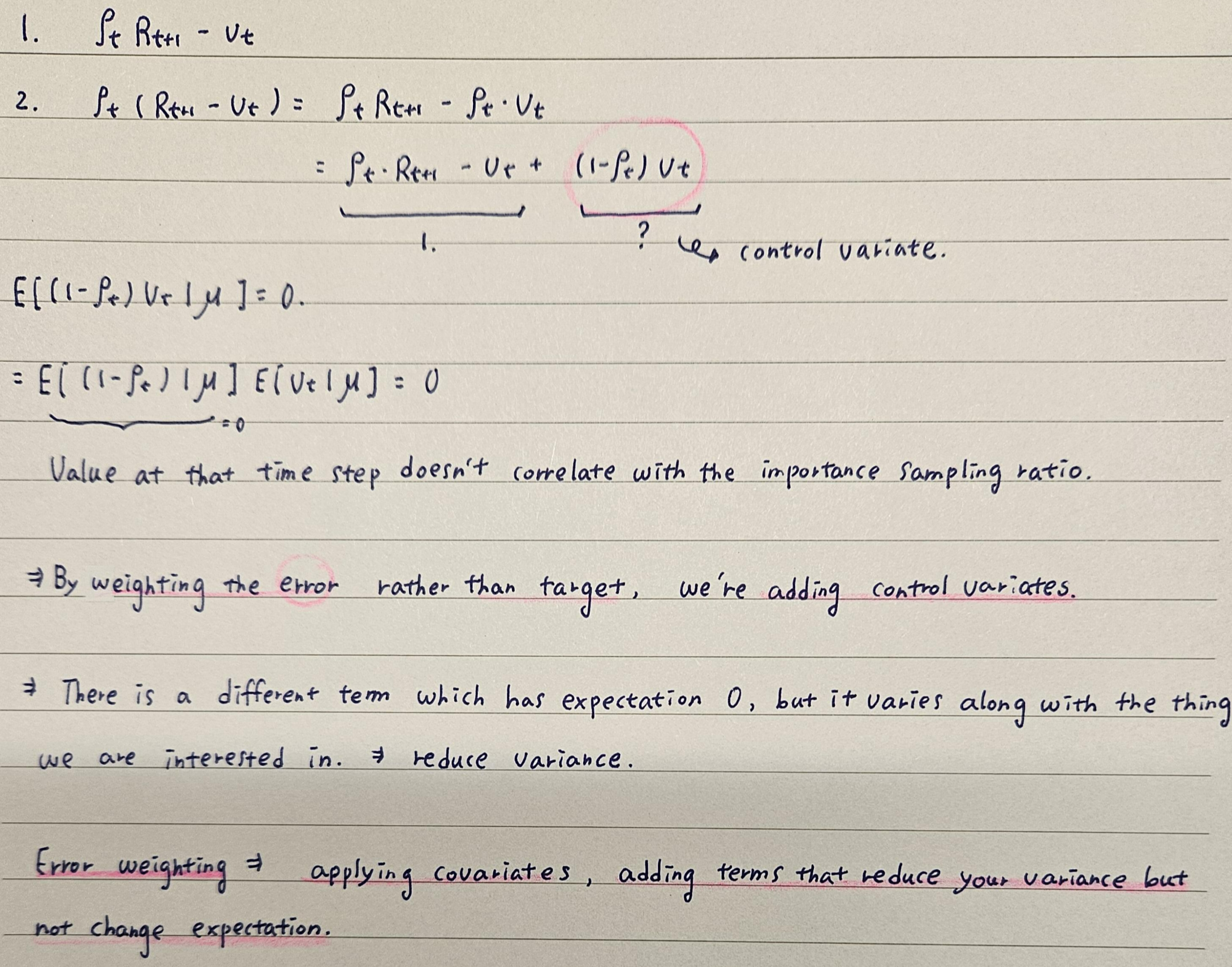

We can extend this idea of using control variates in a multi-step case.

In order to do that, we're going to consider a generic multi-step update. Here we're going to consider the lambda update. So instead of assuming Monte Carlo, for simplicity, we're going to allow bootstrapping now.

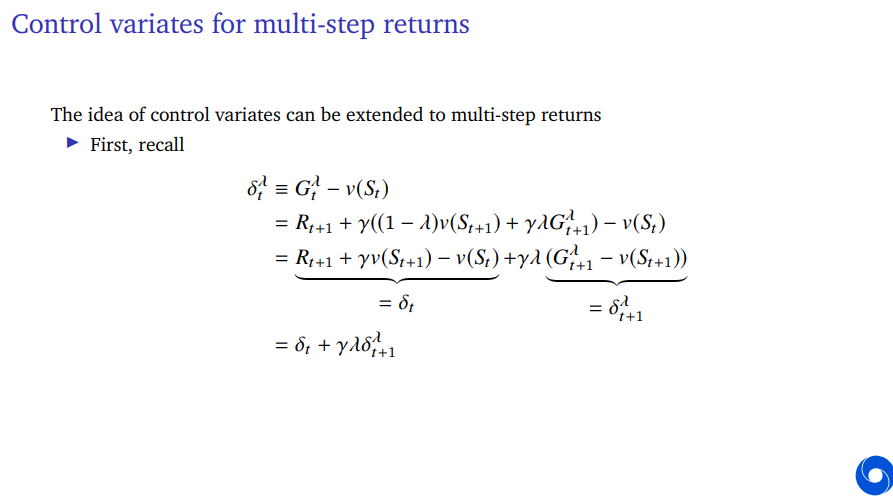

Recall that, this lambda return on every step is defined to take the first reward and then discount(gamma) and then on the next step it bootstraps with some weight (1-lambda) and the rest of the return with lambda.

We can rewrite this, as the one-step Temporal Difference error plus the discounted and additionally down weighted with this lambda parameter recursive lambda weighted Temporal Difference error.

So the lambda Temporal Difference error which is your lambda return minus current state estimates can be written recursively as the immediate Temporal Difference error plus the discounted with additional lambda recursive itself.

So if lambda is 0, we get one-step TD(0), if lambda is 1, this will turn out to be equivalent to the Monte Carlo error.

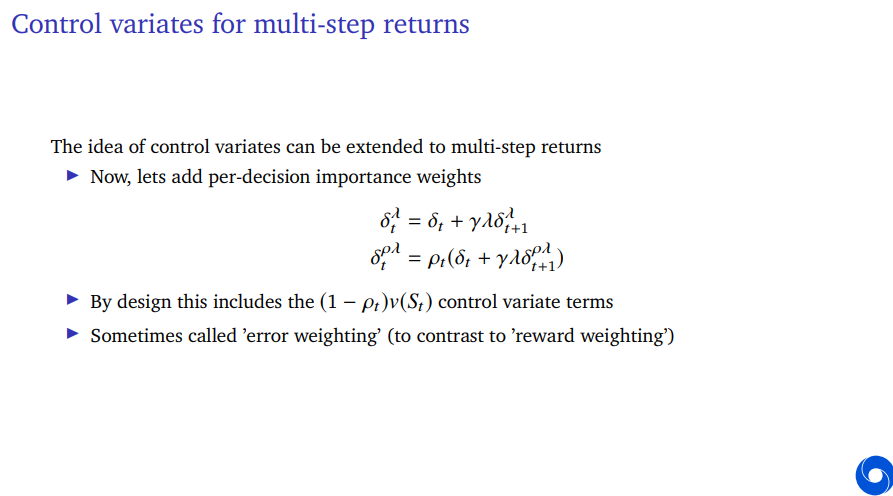

We can apply this in the per-decision case as well.

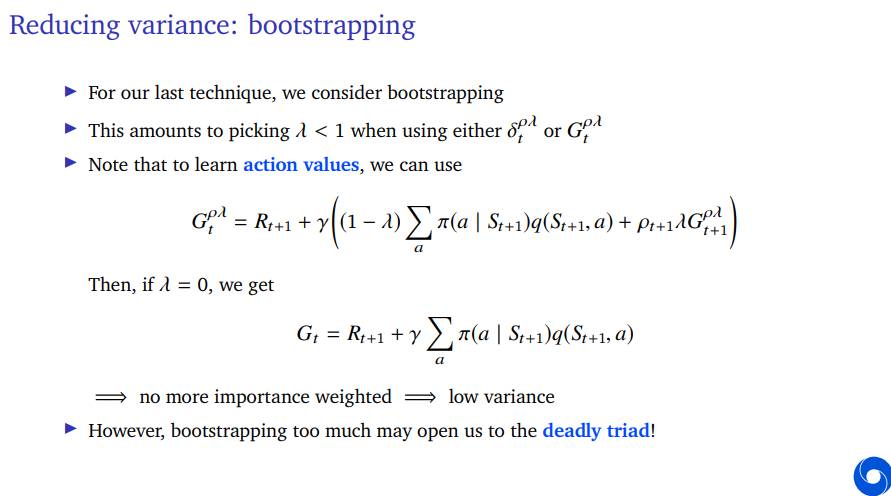

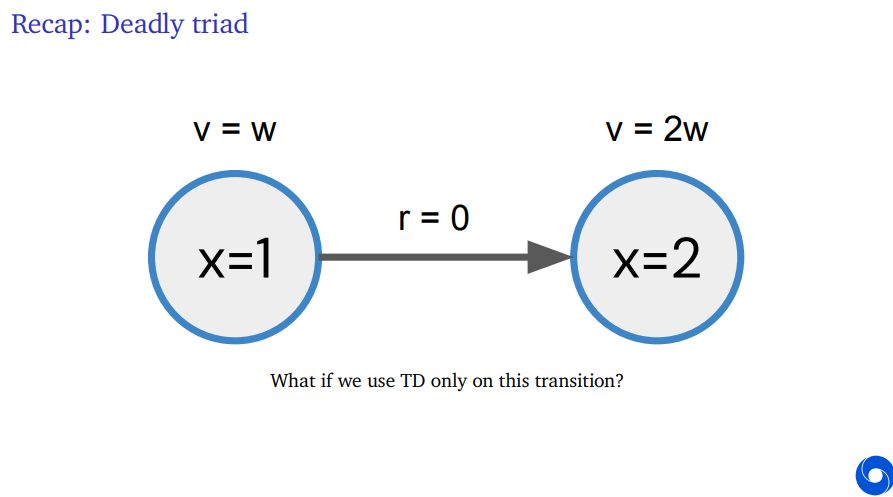

Bootstrapping is typically a good way to reduce your variance a little bit. We already had these lambda weighted errors or returns where lambda that could be lower than 1. And if lambda is lower than 1, then we're going to reduce the variance, but we might incur some bias, because we might bootstrap.

The bias is not because we are estimating for the wrong distribution necessarily. The bias is there because we're using estimates. Our value function won't be completely accurate and therefore there might be some bias due to our function approximation error or just our estimation error because we have seen finite data so far.

So for action values, we can use this importance sample to return where you can see the normal thing showing up here. This is the expected SARSA return. So we're doing the one-step case here. If lambda is equal to 0, but lambda can be different than 0 and if lambda different than 0, then we recurse and go into the next time step. So this quantity is defined recursively and we can see if lambda is 0, we can see the expected SARSA thing reappearing that we had previously.

'*RL > RL_DeepMind' 카테고리의 다른 글

[Lecture 12] (2/2) Deep Reinforcement Learning (0) 2024.08.09 [Lecture 12] (1/2) Deep Reinforcement Learning (0) 2024.08.09 [Lecture 11] (1/2) Off-Policy and Multi-Step Learning (0) 2024.08.08 [Lecture 9] (2/2) Policy Gradients and Actor Critics (0) 2024.08.05 [Lecture 9] (1/2) Policy Gradients and Actor Critics (0) 2024.08.04