-

[TEMPO] Prompt-based Generative Pre-trained Transformer for Time Series ForecastingPaper Writing 1/Related_Work 2024. 10. 13. 01:51

https://arxiv.org/pdf/2310.04948

https://github.com/DC-research/TEMPO

(Oct 2023)

Abstract

The past decade has witnessed significant advances in time series modeling with deep learning. While achieving state-of-the-art results, the best-performing architectures vary highly across applications and domains. Meanwhile, for natural language processing, the Generative Pre-trained Transformer (GPT) has demonstrated impressive performance via training one general-purpose model across various textual datasets. It is intriguing to explore whether GPT-type architectures can be effective for time series, capturing the intrinsic dynamic attributes and leading to significant accuracy improvements. In this paper, we propose a novel framework, TEMPO, that can effectively learn time series representations. We focus on utilizing two essential inductive biases of the time series task for pre-trained models: (i) decomposition of the complex interaction between trend, seasonal and residual components; and (ii) introducing the design of prompts to facilitate distribution adaptation in different types of time series. TEMPO expands the capability for dynamically modeling real-world temporal phenomena from data within diverse domains. Our experiments demonstrate the superior performance of TEMPO over state-of-the-art methods on zero shot setting for a number of time series benchmark datasets. This performance gain is observed not only in scenarios involving previously unseen datasets but also in scenarios with multi-modal inputs. This compelling finding highlights TEMPO’s potential to constitute a foundational model-building framework.

1. Introduction

Time series forecasting, i.e., predicting future data based on historical observations, has broad real-world applications, such as health, transportation, finance and so on. In the past decade, numerous deep neural network architectures have been applied to time series modeling, including convolutional neural networks (CNN) (Bai et al., 2018), recurrent neural networks (RNN) (Siami-Namini et al., 2018), graph neural networks (GNN) (Li et al., 2018; Cao et al., 2021), and Transformers (Liu et al., 2021; Zhou et al., 2021; Wu et al., 2023; Zhou et al., 2022; Woo et al., 2022; Kitaev et al., 2020; Nie et al., 2023), leading to state-of-the-arts results. While achieving strong prediction performance, some of the previous works on time series mostly benefit from the advance in sequence modeling (from RNN and GNN, to transformers) that captures temporal dependencies but have not fully capitalized on the benefits of intricate patterns within time series data, such as seasonality, trend, and residual. These components are the key differentiating factors of time series from classical sequence data (Fildes et al., 1991). As a result, recent studies suggest that deep learning-based architectures might not be as robust as previously thought and might even be outperformed by shallow neural networks or even linear models on some benchmarks (Zeng et al., 2023; Zhang et al., 2022b; Wu et al., 2023; Ekambaram et al., 2023; Fan et al., 2022). Despite the notable success of deep learning forecasters, the vast majority of them still follow a conventional training mechanism, training and predicting using the same datasets.

Meanwhile, the rise of foundation models in natural language processing (NLP) and computer vision (CV), such as LLaMA (Touvron et al., 2023), CLIP (Radford et al., 2021) and ChatGPT, marks major milestones on effective representation learning. It is extremely intriguing to explore a pretrained path for foundation time series models with vast amounts of data, facilitating performance improvement in downstream tasks. Some recent works shed light into the possibility of building general transformers for time series (Zhou et al., 2023; Sun et al., 2023; Goswami et al., 2024; Das et al., 2023b; Rasul et al., 2023). However, the theoretical and practical understanding of such models has not reached the consensus observed in other domains where generative models have been widely acknowledged (Garza & Mergenthaler-Canseco, 2023). In addition, prompting techniques in LLM (such as InstructGPT (Ouyang et al., 2022)) provide a way to leverage the model’s existing representations during pre-training instead of requiring learning from scratch. However, existing backbone structures and prompt techniques in language models do not fully capture the evolution of temporal patterns as in N-BEATS (Oreshkin et al., 2019) and AutoFormer (Wu et al., 2021), which are fundamental for time series modeling.

In this paper, we make an attempt to address the timely challenges of adapting large pre-trained models for time series forecasting tasks and developing a prompt-based generative pre-training transformer for time series, namely TEMPO. TEMPO consists of two key analytical components for effective time series representation learning: one focuses on modeling specific time series patterns, such as trends and seasonality, and the other concentrates on obtaining more universal and transferrable insights from the inherent properties of data through a prompt-based approach. Specifically, TEMPO firstly decomposes time series input into three additive components, i.e., trend, seasonality, and residuals via locally weighted scatterplot smoothing (Cleveland et al., 1990). Each of these temporal inputs is subsequently mapped to its corresponding hidden space to construct the time series input embedding of the generative pre-trained transformer (GPT). We conduct a formal analysis, bridging the time series domain with the frequency domain, to highlight the necessity of decomposing such components for time series analysis. In addition, we theoretically reveal that the attention mechanism is hard to achieve the decomposition automatically. Second, TEMPO utilizes a soft prompt to efficiently tune the GPT (Radford et al., 2019) for forecasting tasks by guiding the reuse of a collection of learnable continuous vector representations that encode temporal knowledge of trend and seasonality. In addition, we leverage the three key additive components of time series data—trend, seasonality, and residuals— to provide an interpretable framework for comprehending the interactions among input components (Hastie, 2017). Experiment results on zero shot setting and multimodal setting of TEMPO pave the path to foundational models for time series. Besides, we demonstrate the stable predictive power of our model on unseen samples with textual information on two multimodal datasets including TETS (Text for Time Series) dataset, which is first introduced in this work to foster further research topics of pre-trained time series models.

In summary, the main contributions of our paper include: (1) We introduce an interpretable prompt-tuning-based generative transformer, TEMPO, for time series representation learning. It further drives a paradigm shift in time series forecasting - from conventional deep learning methods to pre-trained foundational models. (2) We adapt pre-trained models for time series by focusing on two fundamental inductive biases: First, we utilize decomposed trend, seasonality, and residual information. Second, we explore the soft prompt strategies to accommodate time series data’s dynamic nature. (3) Through extensive experimentation on benchmark datasets and two multimodal datasets, our model demonstrates superior performance. Notably, our robust results towards highlights the potential of foundational models in the realm of time series forecasting.

2. Related Works

Pre-trained Large Language Models for Time Series.

The recent development of Large Language Models (LLMs) has opened up new possibilities for time-series modeling. LLMs, such as T5 (Raffel et al., 2020), GPT (Radford et al., 2018), GPT-2 (Radford et al., 2019), GPT-3 (Brown et al., 2020), GPT-4 (OpenAI, 2023), LLaMA (Touvron et al., 2023), have demonstrated a strong ability to understand complex dependencies of heterogeneous textual data and provide reasonable generations. Recently, there is growing interest in applying language models to time series tasks (Jin et al., 2024a; Gruver et al., 2024). For example, Xue & Salim naively convert time series data to text sequence inputs and achieves encouraging results. Sun et al. propose text prototype-aligned embedding to enable LLMs to handle time series data. In addition, Yu et al. present an innovative approach towards leveraging LLMs for explainable financial time series forecasting. The works in (Zhou et al., 2023) and (Chang et al., 2023) are the most relevant ones to our work, as they both introduce approaches for time-series analysis by strategically leveraging and fine-tuning LLMs. However, these studies directly employ time series data to construct embeddings, without adequately capturing the inherent and unqiue characteristics of time series data which is challenging to decouple such information within the LLMs (Shin et al., 2020). In addition, there is still very limited work on LLM for multimodal data with time series. METS (Li et al., 2023) is one of the early works pursuing this direction. While the experiment results are encouraging, it is difficult to extend METS to other modalities since the embedding alignment between time series and texts are specific. Please refer to the suvery papers (Jin et al., 2023; 2024b) for further references of time series meeting LLMs.

Prompt tuning.

Prompt tuning is an efficient, low-cost way of adapting a pre-trained foundation model to new downstream tasks which has been adapted to downstream tasks across various domains. In NLP domain, soft prompts with trainable representation are used through prompt-tuning (Lester et al., 2021) or prefix-tuning (Li & Liang, 2021). Prompting techniques have also been extended to CV tasks like object detection(Li et al., 2022) and image captioning (Zhang et al., 2022a), etc and other domains such as misinformation (Zhang et al., 2024). Multimodal works, such as CLIP (Radford et al., 2021), use textual prompts to perform image classification and achieve SOTA performance. In addition, L2P (Wang et al., 2022b) demonstrates the potential of learnable prompts stored in a shared pool to enable continual learning without rehearsal buffer, and Dualprompt (Wang et al., 2022a) introduces a dual-space prompt architecture, maintaining separate prompt encodings for general knowledge and expert information, etc. Our research builds upon these concepts by exploring the use of prompt design from indicative bias specifically for temporal reasoning and knowledge sharing across time series forecasting problems.

3. Methodology

In our work, we adopt a hybrid approach that incorporates the robustness of statistical time series analysis with the adaptability of data-driven methods. As shown in Figure 1, we propose a novel integration of seasonal and trend decomposition from STL (Cleveland et al., 1990) into the pre-trained transformers. This strategy allows us to exploit the unique strengths of both statistical and machine learning methods, enhancing our model’s capacity to handle time series data efficiently. Moreover, a semi-soft prompting approach is introduced to enhance the adaptability of pre-trained models for handling time series data. This innovative approach enables the models to merge their extensive learned knowledge with the unique requirements intrinsic to time series analysis.

3.1. Problem Definition

Given observed values of previous K timestamps, the task of multivariate time-series forecasting aims to predict the values for the next H timestamps. That is,

3.2. Time Series Input Representation

For time series data, representing the complex input by decomposing it into meaningful components, such as trend and season components, can help extract information optimally. In this paper, given the input X ∈ R n×L, where n is the feature (channel) size and L it the length of the time series, the additive STL decomposition (Cleveland et al., 1990) can be represented as:

Here, i is the channel index (corresponding to a certain covariate) for multivariate time series input, and the trend

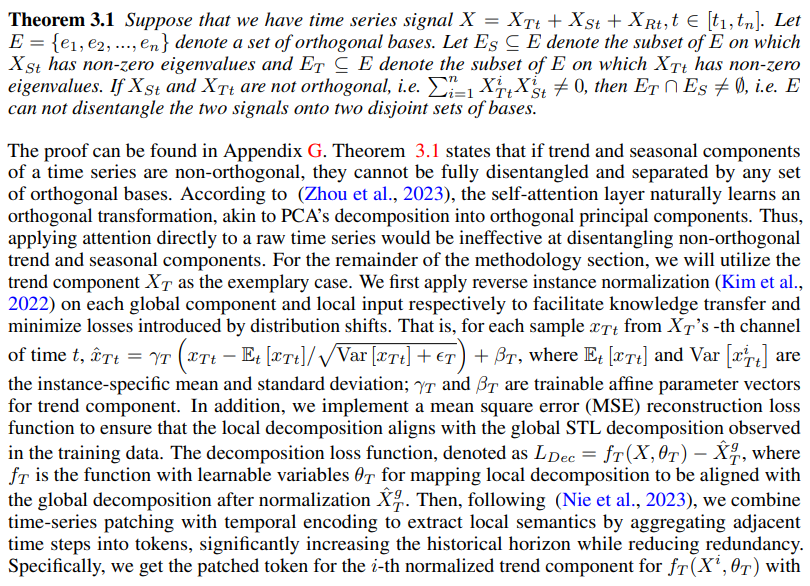

captures the underlying long-term pattern in the data, where m = 2k + 1 and k is the averaging step size. The seasonal component XS ∈ R n×L encapsulates the repeating short-term cycles, which can be estimated after removing the trend component. The residual component XR ∈ R n×L represents the remainder of the data after the trend and seasonality have been extracted. Note that, in practice, it is suggested to leverage as much information as possible to achieve a more precise decomposition. However, in consideration of computational efficiency, we opt not to use the STL decomposition on the largest possible data window on each instance. Instead, we perform local decomposition within each instance using a fixed window size. Inspired by N-BEATs (Oreshkin et al., 2019), we introduce learnable parameters for estimating the various local decomposition components. Same for the others. This principle applies to other components of the model as well. In Appendix G, we establish a connection between time series forecasting and frequency domain prediction, where our findings indicate that decomposition significantly simplifies the prediction process. Note that such decomposition is of more importance in current transformer-based methods as the attention mechanism, in theory, may not disentangle the disorthogonal trend and season signals automatically:

3.3. Prompt Design

Prompting techniques have demonstrated remarkable effectiveness across a wide range of applications by leveraging the power of task-specific knowledge encoded within carefully crafted prompts. This success can be attributed to the prompts’ ability to provide a structured framework that aligns the model’s outputs with the desired objectives, resulting in enhanced accuracy, coherence, and overall quality of the generated content. Previous works mostly focus on utilizing a fixed prompt to boost the pre-trained models’ performance through fine-tuning (Brown et al., 2020). In pursuit of leveraging the rich semantic information encapsulated within various time series components, our research introduces a semi-soft prompting strategy. This approach involves the generation of distinct prompts corresponding to each primary time series component: trend, seasonality, and residuals. ‘Predict the future time step given the [trend, season, residual]’ serves as the template from which we derive our component-specific prompts. These are subsequently concatenated with the relevant component data, thereby enabling a more refined modeling approach that acknowledges the multifaceted nature of time series data. Specifically, commence by translating the trend-specific prompts into the word embedding space, followed by a linear transformation to derive the learnable trend prompt vector Vt. This so-called ‘semi-soft’ prompt design thus strikes a balance between the interpretability and initial guidance of a ‘hard’ prompt and the adaptability of a ‘soft’ prompt. The combined embedding of this prompt with the time series representation is encapsulated by:

Here, xT denotes the aggregation of embeddings along the temporal axis. This concatenation procedure is mirrored for the seasonality and residual components, yielding xS and xR, respectively. This framework allows for an instance to be associated with specific prompts as the inductive bias, jointly encoding critical information relevant to the forecasting task, such as recurring patterns, overarching trends, and inherent seasonality effects. It is of note that our prompt design maintains a high degree of adaptability, ensuring compatibility with a broad spectrum of time series analyses. In particular, similar with (Wang et al., 2022a), we introduce prompt pool as an extension of our design of soft prompt in Appendix D, aimed at accommodating the characteristically non-stationary nature of real-world time series data and the associated distributional shifts (Huang et al., 2020; Fan et al., 2023). This adaptability underscores the potential of our prompting strategy to evolve in congruence with the complexities presented by diverse time series datasets.

3.4. Generative Pre-trained Transformer Architecture

We use the decoder-based generative pre-trained transformer (GPT) as the backbone to build the basis for the time-series representations. To utilize the decomposed semantic information in a data-efficient way, we choose to concatenate the prompt and different components together and put them into the GPT block. Specifically, the input of our time series embedding can be formulated as: x = xT ⊕ xS ⊕ xR, where ⊕ corresponds to concatenate operation and x∗ can be treated as different sentences. Note that, another alternative way is to build separate GPT blocks to handle different types of time series components. Inside the GPT block, we adopt the strategy used in (Zhou et al., 2023) and opt to update the gradients of the position embedding layer and layer normalization layers. In addition, we employ LORA (Low-Rank Adaptation) (Hu et al., 2021) to adapt to varying time series distributions efficiently as it performs adaptation with significantly fewer parameters.

The overall forecasting result should be an additive combination of the individual component predictions. Finally, the outputs Z of n features from the GPT block can be split into ZT , ZS, ZR ∈ R n×P ×L_E (output corresponding to trend, seasonality, and residual) based on their positions in the input order. Each Z component is then fed into fully connected layers to generate predictions Y∗ ∈ R n×L_H , where L_H is the prediction length. The forecast results can be formulated as follows: Yˆ = Yˆ T + Yˆ S + YˆR. After that, we de-normalize Y according to the corresponding statistics used in

4. Experiments

Our experiments are conducted using widely-recognized time series benchmark datasets, such as those detailed in (Zhou et al., 2021), alongside the GDELT dataset (Jia et al., 2024) and our proposed TETS dataset. These comprehensive datasets encompass a diverse array of domains, including, but not limited to, electricity (ETTh1, ETTh2, ETTm1, ETTm2, Electricity), traffic (Traffic), climate (Weather), news (GDELT), and finance (TETS), with data sampling frequencies ranging from minutes, hours to days and quarters. The inclusion of such varied datasets ensures a thorough evaluation of our experimental setups across multiple dimensions of time series data. Due to the absence of a standard test split for zero-shot comparison, we adopt a uniform training methodology to ensure fair performance assessment across datasets unseen during model training. Specifically, to advance the paradigm of foundation models within the domain of transfer learning, we investigate a zero-shot setting for our experiments, which is the ‘many-to-one’ scenario: training on multiple source datasets followed by zero-shot forecasting on a distinct, unseen target dataset. For instance, when evaluating performance on a ‘weather’ dataset, our model is pre-trained on diverse datasets including ‘ETTm1, ETTm2, ETTh1, ETTh2, Electricity, and Traffic’ without exposure to the target weather data. This ’many-to-one’ approach differs fundamentally from ‘one-to-one’ or ‘one-to-many’ configurations (Zhang et al., 2022c) by using diverse pre-training datasets from varied domains, like traffic and weather data. This diversity, while rich, introduces complexity, as the model must identify patterns across potentially misaligned samples, complicating learning compared to models trained and tested on in distribution datasets.

We use GPT-2 (Radford et al., 2019) as our backbone to build TEMPO1 as shown in Figure 1. To comprehensively demonstrate the performance of our model, we compare TEMPO with the following baselines over long-term forecasting and short-term forecasting: (1) The pre-trained LLM-based models, including Bert (Devlin et al., 2019), GPT2 (Radford et al., 2019; Zhou et al., 2023), T5 (Raffel et al., 2020), and LLaMA (Touvron et al., 2023). (2) The Transformer-based models, including the PatchTST (Nie et al., 2023), FEDformer (Zhou et al., 2022), ETSformer (Woo et al., 2022) and Informer (Zhou et al., 2021). (3) The variant of Linear-based models, DLinear (Zeng et al., 2023) model. (4) General 2D-variation model, TimesNet (Wu et al., 2023). Following traditional forecasting works, we report the Mean Squared Error(MSE) and Mean Absolute Error (MAE) results in this section. Please refer to the Appendix B and F for the detailed experiment setting and baselines.

4.1. Zero Shot Long-Term Forecasting Results

Table 1 presents the performance of multiple time series forecasting models on MSE and MAE metrics across different prediction lengths under the ‘many-to-one’ setting, with lower scores indicating more accurate forecasts. Our proposed model, TEMPO, surpassed existing baselines on average over all prediction horizons across all datasets, highlighting the broad applicability of TEMPO. Our model achieves the highest average performance scores. Specifically, it improves the weather and ETTm1 datasets by around 6.5% and 19.1%, respectively in MAE compared to the previous state-of-the-art model, PatchTST. It also secures the lowest error rates across numerous individual dataset-prediction length configurations. Compared to other pre-trained models for forecasting, TEMPO consistently delivers the best results across different time series datasets. These results suggest that incorporating LLM with the well-designed prompt and implementing time series decomposition can contribute significantly to enhancing the accuracy and stability of zero-shot time series forecasting.

4.2. Short-Term Forecasting with Contextual Information

Dataset and metrics.

In this section, we introduce TETS, a new benchmark dataset built upon S&P 500 dataset combining contextual information and time series, to the community. Following (Cao et al., 2023), we choose the symmetric mean absolute percentage error (SMAPE) as our metric in this section. Moreover, the GDELT is also used to verify the effectiveness the our proposed method. Please refer to Appendix B.2 and Appendix B.3 for the detailed dataset setting of TETS and GDELT; Appendix H for the proposed pipeline of collecting TETS dataset with both time series and textual information.

Contextual Information.

In order to incorporate the contextual information into our proposed TEMPO, we leverage the built-in tokenization capabilities of the generative pre-trained transformer to derive embeddings of input text. Then, we utilize these text embeddings corresponding to each time series instance, T_ext, to construct soft prompts with learnable parameters and concatenate them at the beginning of the input embedding, that is, x = T ext ⊕ xT ⊕ xS ⊕ xR. Where the x∗ for EBITDA is conducted with semi-soft prompt. This method is not strictly confined to our proposed model but can be feasibly applied in similar works to enhance their capability of handling and benefiting from contextual information. Comparisons with other design strategies of contextual information are provided in the Appendix D.4 for further reference.

Results.

From the transfer learning perspective, we choose to report the setting of ‘many-to-many’, which means we train a model using in-domain sectors data and directly do the zero-shot test on all cross-domain sectors. The SMAPE results of using different baseline models and our model on the TETS dataset and GDELT dataset are listed in Table 2 which is also zero-shot setting as data samples from those sectors are not seen during the training stage. Examining the results across all sectors, our proposed model, which combines time series data with supplementary summary (contextual) data, outperforms all the baseline methods in cross-domain sectors. Besides, we observe that transformer-based architectures training from scratch, specifically tailored for time series analysis—such as PatchTST, Informer, and Reformer (Kitaev et al., 2020)—tend to underperform in comparison to transformers pre-trained on linguistic datasets. This performance discrepancy indicates that the parameter initialization derived from pre-trained language models confers a superior starting point for model optimization. Consequently, these pre-trained models exhibit enhanced capabilities and adaptability within zero-shot learning contexts. Furthermore, in instances where the time series data exhibits a strong correlation to other modalities, such as textual information, devising an effective strategy to amalgamate these distinct modalities could lead to enhanced performance gains.

5. Analysis

5.1. Ablation Study

The provided ablation study, Table 3, offers critical insights into the impact of the prompt and decomposition components on the performance of our model. In this table, the MSE and MAE on various datasets are reported for four scenarios: the original model configuration (‘TEMPO’); the model without the prompt design and without decomposition, which is the setting of ‘w/o Dec’; the model without prompt design (‘w/o Pro’) and the model without the decomposition loss alignment (’w/o Dec Loss’). Averagely, the exclusion of the prompt component leads to a deterioration in the model’s predictive accuracy, indicating the prompt can be an important factor in enhancing the model’s overall performance. The omission of decomposition loss typically results in a decline in model performance. Decomposition loss facilitates the use of a richer historical dataset, which enhances the quality of individual decomposition components. This improvement in component quality is important for the model’s forecasting accuracy. Note that employing the prompt design in isolation, without the support of decomposition, can detrimentally impact the backbone model’s performance in most cases. This can be due to the difficulties in effectively prompting time series data from its raw form with limited semantic information. These findings underscore the essential nature of both prompt and decomposition elements in achieving robust forecasting capabilities under the zero-shot setting.

5.2. Interpreting Model Predictions

SHAP (SHapley Additive exPlanations) values serve as a comprehensive measure of feature importance, quantifying the average contribution of each feature to the prediction output across all possible feature combinations. As shown in Figure 2, when applied to our seasonal and trend decomposition, the SHAP values from the generalized additive model (GAM) suggest a dominant influence of the seasonal component on the model’s predictions, implying a significant dependency of the model on the overall recurring patterns within the data. While the directional shifts of ETTm1 dataset’s contribution is relatively stable. The escalating values in the ’Error’ column, which denote the discrepancy between the model’s predictions and the ground truth, indicate a potential decline in the model’s accuracy as the prediction length increases which is indeed observed in most experiments run. In this context, the STL decomposition proves invaluable as it enables us to identify and quantify the individual contributions of each component to the overall predictions, as demonstrated by the SHAP values. This detailed understanding can yield critical insights in how the pre-trained transformer is interpreting and leveraging the decomposing pre-processing step, thereby providing a robust foundation for model optimization and enhancement. SHAP values for weather dataset can be found at Figure 14.

6. Conclusion

This paper proposes a soft prompt based generative transformer, TEMPO, which achieves state-of-the-art performance in zero-shot time series forecasting. We introduce the novel integration of prompts and seasonal trend decomposition together within a pre-trained Transformer-based backbone to allow the model to focus on appropriately utilizing knowledge from different temporal semantics components. Moreover, we demonstrate the effectiveness of TEMPO with multimodel input, effectively leveraging contextual information in time series forecasting. Lastly, with extensive experiments, we highlight the superiority of TEMPO in accuracy, and generalizability. One potential limitation worth further investigation is that superior LLMs with better numerical reasoning capabilities might yield better results. In addition, the encouraging results of TEMPO on the zero-shot experiments shed light into effective foundational models for time series.

A. Showcases

A.1. Compare with GPT4TS

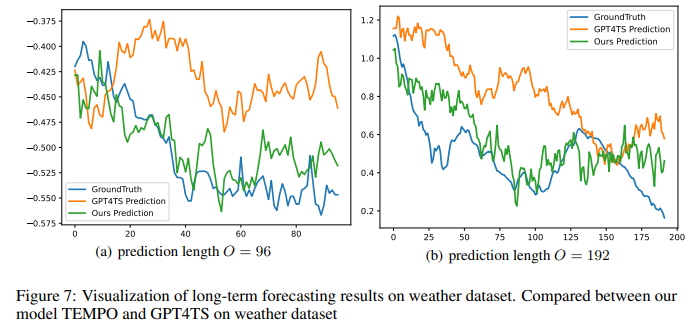

In Figure 3, 4, 5, 6, 7, we plot the comparison of the predicted value from our model and GPT4TS model given a look-back window. As shown in the datasets, we are able to predict close to the ground truth, which is also shown through our superior performance over other models in table 1. We select time series with different characteristics under different prediction lengths O ∈ {96, 192}: time series with high variability (Figure 5 a), periodic (Figure 3 a, Figure 3 b, 4 a, 4 b), non-periodic with a change in trend (Figure 6 a, Figure 6 b)

A.2. Compare with TimeGPT

We also compare our results with TimeGPT (Garza & Mergenthaler-Canseco, 2023), which is capable of generating accurate predictions for a diverse range of datasets not seen during training, demonstrating superior performance in zero-shot inference compared to traditional statistical, machine learning, and deep learning methods. Access to TimeGPT-1 (Beta) is provided through a Python SDK and a REST API. This accessibility allows us to explore TimeGPT’s forecasting capabilities on our datasets. As shown in Figure 8 and Figure 9, despite its design for various downstream tasks, it is important to note that TimeGPT may not perform as well in long-term forecasting scenarios. In contrast, our proposed model excels in zero-shot settings, including long-term forecasting, illustrating the need for foundation models that can adapt to both the breadth of time series applications and the depth of forecasting horizons.

B. Experiment Setting

B.1. Towards Foundation Model Experiments Details

It has been well-established that channel-independence works well for time series datasets, so we treat each multivariate time series as multiple independent univariate time series. We use popular time series benchmark datasets (Zhou et al., 2021): ETTm1, ETTm2, ETTh1, ETTh2, Weather, Electricity, Traffic, ILI and exchnge. 1) ETTm1, ETTm2, ETTh1, ETTh2 contain electricity load from two electricity stations at 15 minutes level and hourly level. 2) Weather dataset contains 21 meteorological indicators of Germany within 1 year; 3) Electricity dataset contains electricity consumption; 4) Traffic dataset contains the occupation rate of the freeway system across the State of California. The lookback window L is following (Zhou et al., 2023), and the prediction length O is set to {96, 192, 336, 720}. In this experiment part, our experiments were conducted using single NVIDIA A100 GPU, with a batch size set to 256, and focused on long-term forecasting by employing a Mean Squared Error (MSE) loss function. To ensure the reliability of our results, we performed three iterative loops and calculated the average of the outcomes. Our exploration covered [3, 6] GPT layers and tested various weights, [0.001, 0.01, and 1], for the MSE loss function applied to the reconstructed components of the time series. We have documented the optimal results obtained from this search. A comprehensive analysis of the impact that the number of GPT layers has on the performance will be addressed in future research.

Towards Foundation Model’s Zero Shot Setting

For each prediction length, we train a model on a mixture of training data from different domains and test the model on the target unseen domain’s data. We construct the combined training dataset by pooling the training data and fully shuffling them. To prevent undue bias and ensure fair representation of data from each domain in the combined training data, we select an equal number of training examples from each domain’s training data. We noted that the number of training samples that ETTh1 and ETTh2 has is on a much smaller magnitude compared to the other three training datasets (ETTm1, Weather, Electricity), so selecting the minimum number of training samples among all other training datasets would result in too much data loss from ETTm1, Weather, and Electricity, etc. Therefore, we included all training examples from ETTh1 and ETTh2 in the combined training dataset. Similar to traditional experimental settings, each time series (ETTh1, ETTh2, ETTm1, Weather, Electricity, ETTm2, Traffic) is split into three parts: training data, validation data, and test data following in 7:1:2 ratio in (Zhou et al., 2022), and we only merge the training and validation data. For ETTm1, ETTm2, Weather and Electricity data, the number of examples sampled to be pooled into the combined training dataset is chosen to be the minimum number of training examples among these training datasets.

B.2. Proposed TETS Dataset Setting

Data Collection

Our time series data for financial analysis and forecasting are derived primarily from the financial statements of companies including balance sheets, income statements, and cash flow statements. Specifically, we utilize data from the 500 largest U.S. companies across 11 sectors as listed in the Standard & Poor’s 500 Index (S&P 500), which we divide into two parts: the first seven sectors for training and evaluation, and the remaining four for zero-shot forecasting tasks to test the model’s ability to predict in unseen domains. While collecting corresponding contextual information from the abundance of digital news sources is challenging, OpenAI’s ChatGPT API offers a solution to gather and condense relevant news efficiently. By inputting key details into the API and limiting the response to 110 tokens, as shown in Figure 10, we can swiftly extract pertinent contextual data to improve our analysis. Please refer to Section H for further details of creating TETS dataset.

Prediction objective

The primary objective of our experiment is to forecast the Earnings Before Interest, Taxes, Depreciation and Amortization(EBITDA) for companies listed in S&P500, and our data range from 2000 to 2022. Following the multivariate time series framework presented in (Papadimitriou et al., 2020), we select foundational financial metrics from the income statements as input features: cost of goods sold (COGS), selling, general and administrative expenses (SG&A), RD expenses (RD EXP), EBITDA, and Revenue. Comparing with other metrics, the selected metrics contain information more relevant to our prediction objective. For Large Language based models, including our model TEMPO, GPT4TS, and T5, we apply channel-independence strategy to perform univariate time series forecasting tasks. All five features are used for training (predicting its future value based on its past value), while only EBITDA is accessible during the testing stage. Other models follow the multivariate time series forecasting setting, treating the five features as multivariate input and predicting the target, EBITDA, both in the training and testing stages.

We predict quarterly EBITDA based on the past 20 quarters’ data. This predicted value is then used to forecast the next quarter’s EBITDA, iteratively four times, leading to a yearly prediction. In order to measure the accuracy of these predictions based on the cumulative yearly value (sum of 4 quarters), we employ the symmetric mean absolute percentage error (SMAPE) as the evaluation metric as well as the forecasting loss function in this experimental part.

Data Split

For companies under each sector, we employ the windowing method to generate cohesive training and testing instances. Under the channel-independence setting where we separate each feature to obtain univariate time series, we get 80,600 samples from the seven in-domain sectors, and 9,199 samples from the four zero-shot sectors(also known as cross-domain sectors), five as much as we get in the channel dependent setting. The sectors splitting is elaborated in H. In our experiments shown in table 2, We use 70% of in-domain data for training, 10% of in-domain data for evaluation, and all zero-shot data for unseen testing.

Symmetric Mean Absolute Percentage Error

In reality, the magnitude of financial metrics can vary significantly among different companies. So, we choose the symmetric mean absolute percentage error (SMAPE), a percentage-based accuracy measure, as our evaluation metric. For EBITDA, there are many negative results that may influence the final SMAPE. We use the form of SMAPE-Abs SMAPE:

Here, Ft represents the true value, At represents the predicted value in our system, and n represents the total time steps we need to forecast.

SMAPE can be particularly sensitive to outliers. Specifically, when the true data and prediction have opposite signs, the resulting error may be up to 200%, seriously distorting the final results. Following the approach in (Papadimitriou et al., 2020), we filter out data points at the 80% and 90% thresholds and find most of the outliers are related to significant financial shifts due to mergers & acquisitions (M&A).

B.3. GDELT Dataset Setting

We utilized the GDELT dataset (Jia et al., 2024), which focuses on predicting the respective mentions and mentions in the news media. We utilized the data collected from the 55 regions under the US and the national data for the US and divided the 10 event root types in the dataset into unseen and seen sets, as demonstrated in Table 5. We focused on predicting the three key variables NumMentions, NumArticles, NumSources related to the particular event type within a given timeframe and geographical region. We apply channel-independence strategy to perform univariate time series forecasting tsks for all baseline models and our model. All three features are used for training and evaluation (predicting its future value based on its past value).

We predict the future 7 days based on the past 15 days’ data directly. In order to measure the accuracy of the predicitions, we use mean square error (MSE) and mean absolute error (MAE). For each region, we employ the windowing method to generate cohesive training and testing instances for each event root type. Under our channel-independence setting, we get 122,008 samples from the seven in-domain sectors (seen sectors) for training, and 76,048 samples for evaluating under the three zero-shot sectors (unseen sectors). In our experiments, we use 70% of in-domain data for training, 10% for evaluation and all zero-shot data for unseen testing.

C. Further Results

C.1. Self-Supervised Representation Learning

Our proposed model architecture can be designed to support self-supervised learning and thus further embrace foundation models for time series. Following (Nie et al., 2023), we mask a random subset of patches by replacing them with zeros, where the patches are divided into non-overlapping patches for simplicity and to avoid masked patches influencing predictions. The prediction head is removed and replaced with a linear layer to reconstruct the masked patches. The model is trained to minimize the MSE between the predicted and true masked patches. To handle multivariate time series with varying numbers of features, we apply channel independence (Zeng et al., 2023) to model each time series independently.

With the strong performance TEMPO showed under the experiment ’many-to-one’ zero-shot setting, from the perspective of a self-supervised cross-domain foundational model, we further investigate if using a TEMPO model trained on datasets across domains can still achieve comparable performance on unseen domains. Here, we still use the ’many-to-one’ setting but the model is trained in a self-supervised manner. Specifically, we first use all other domain’s data to train a representation model then only use 5% data of the training data to fine tune the total model with the prediction layer as a forecasting downstream task. Table 6 provides a comprehensive comparison of our model against other baseline models on three multivariate time series datasets that are unseen by the models during training, namely electricity and traffic and weather. All these selected 3 datasets are entirely dissimilar to any data the model has encountered before. TEMPO outperforms baseline models, achieving the lowest MSE and MAE in most cases. Note that TEMPO’s average MSE and MAE is 7.3% and 4.6% less than the best-performing baseline model (GPT2) for the weather dataset, respectively. This finding shed light on the strong generalizability of TEMPO and indicated its potential of serving as a foundational time series forecasting model, maintaining robust performance for unseen domains.

C.2. Comparing with Full-shot State-of-the-arts Baselines

Towards foundation model training differs significantly from the one-to-one/many scenarios, where pre-training involves a homogenous dataset, often with consistent season patterns, sampling rates, and temporal scales. This homogeneity facilitates pattern learning transferable to fine-tuned datasets. In contrast, towards foundation model training involves pre-training on highly diverse datasets, such as merging traffic and weather data, which may hinder the model’s ability to discern underlying patterns. In Table 7, we provide further results on ETTh1 and ETTh2 datasets, demonstrating that the performance of TEMPO (zero-shot setting) surpasses that of state-of-the-art models specifically designed for these target datasets with full-shot settings. The results in Table 7 are obtained from (Liu et al., 2023), including but not limited to iTransformer(Liu et al., 2023), Crossformer (Zhang & Yan, 2022), TiDE (Das et al., 2023a) and SCINet (Liu et al., 2022), which are also reported in our contemporaneous work, MOIRAI (Woo et al., 2024).

C.3. Comparing with ARIMA

As a pioneering foundation model, TEMPO is engineered to forecast future values directly, eliminating the necessity for retraining with each new data instance. Its underlying framework captures intricate temporal patterns, granting it the versatility to generalize across various time series. In this study, we compare TEMPO’s forecasting prowess with that of the ARIMA model (Hyndman & Khandakar, 2008), which is renowned for its capacity to make accurate predictions within a specific time series once the initial model parameters have been set. While ARIMA models excel in continuing predictions within the series they are configured for, they do not inherently possess the faculty to forecast across disparate time series without recalibration. We obtain the ARIMA’s forecasting results from (Challu et al., 2023). As shown in Table 8, the results highlight the superior adaptability of our ‘towards foundation model’ – TEMPO – which retains its predictive accuracy even when applied to time series beyond its training scope, thereby illustrating the feasibility of more universal and resilient forecasting methodologies.

D. Further Analysis

D.1. Design of Prompt Pool

In this section, we propose another potential prompt design for addressing non-stationary nature of real-world time series data with distributional shifts (Huang et al., 2020). Specifically, we introduce a shared pool of prompts stored as distinct key-value pairs. Ideally, we want the model to leverage related past experiences, where similar input time series tend to retrieve the same group of prompts from the pool (Wang et al., 2022b). This would allow the model to selectively recall the most representative prompts at the level of individual time series instance input. In addition, this approach can enhance the modeling efficiency and predictive performance, as the model would be better equipped to recognize and apply learned patterns across diverse datasets via a shared representation pool. Prompts in the pool could encode temporal dependencies, trends, or seasonality effects relevant to different time periods. Specifically, the pool of prompt key-value pairs is defined as:

D.2. Results on Different Prompt Design

In this section, we examine the impact of various prompt designs on model performance. We utilize the ‘semi-soft’ prompt as outlined in Section 3.3, where the prompt vectors are initialized semi-softly; the soft prompt, which entails the random initialization of vectors of identical dimensions to the ‘semi-soft’ prompt; and the hard prompt, which is semantically meaningful and remains fixed post-tokenization. Additionally, we explore the prompt pool, as described in Section D.1, and employ a similar leave-one-out approach to mask all prompts within the pool to investigate its effectiveness.

The findings, presented in Table 9, reveal that, in the ETTm2 dataset, the prompt pool outperforms the ‘semi-soft’ prompt in three out of four scenarios, underscoring the potential of prompts to enhance model capacity and adaptability to shifts in data distribution. Furthermore, we observe that prompts with explicit semantic content (Semi-soft and Hard) surpass the performance of simple soft prompts. This suggests that incorporating semantic information as discrete indicators within a pre-trained model can more effectively orchestrate domain knowledge. This understanding informs the design of prompts for efficient interaction with language models, especially in applications where precision and relevance of the output are crucial.

D.3. Analysis of Prompt Pool

Here is a summary of how the prompts are initialized and trained in our work:

• Initialization: The prompt embeddings in the pool are randomly initialized from a normal distribution, as is standard practice for trainable parameters in neural networks.

• Training: The prompts’ value and all other model parameters are trained in an end-to-end manner to optimize the forecasting objective. This allows the prompts to be continuously updated to encode relevant temporal knowledge.

The number of prompts and embedding dimensions are treated as hyperparameters and tuned for good performance. Different pool settings, including pool size, top k number, and prompt length, will lead to different results. To explore this, we conduct a total of 27 experiments, setting 3 distinct values for each of the 3 settings: (1) pool size of 10, 20, and 30. (2) top k numbers of 1, 2, and 3. (3) prompt lengths of 1, 2, and 3. We choose the combination with the best results for TEMPO settings. For the long-term and short-term forecasting experiments, we choose a pool size with M = 30 and K=3 and prompt length is 3. Detailed design analysis provides insights into prompt similarity and selection. Note that, the prompt pool’s key in (Wang et al., 2022b) is trainable which allows us to maintain consistent and distinct characteristics of time series data for analysis. Our work offers an initial exploration into prompt-based tuning for time series forecasting, but substantial room remains for advancing prompt pool design.

D.3.1. Prompt Selection Distribution

To elucidate the mechanics behind prompt selection, we have visualized the distribution histograms for chosen prompts corresponding to the trend, seasonal, and residual elements of the ETTm2 dataset in Figure 11. In our experimental framework, each data point is permitted to select multiple prompts—with three prompts being chosen per component. Consequently, the frequency is determined by the number of times a particular prompt is selected across the dataset. The histograms reveal pronounced discrepancies in prompt preferences between periodic and seasonal components.

For instance, within the ETTm2 dataset, prompts 11, 20, and 24 are predominantly selected for capturing trends, whereas prompts 8, 10, and 29 are primarily chosen for seasonal fluctuations. This observation substantiates the premise that the prompt pool is adept at furnishing specialized prompts tailored to discrete characteristics of time series data.

D.4. Analysis on Designs of Injecting Contextual Information

In this section, we investigate the influence of various configurations of textual injection and original prompt design from multi-modality perspective. As depicted in Table 10, eight distinct prompt designs were formulated. ’Sum’ denotes the utilization of a direct summary of textual data as a prompt, while ’SumP’ signifies the use of textual information as a query within the prompt pool. The symbols ’+’ and ’⊕’ represent summation and concatenation operations, respectively. ’TP’ stands for ’time series prompt pool,’ and ’Semi’ indicates a ’semi-soft prompt’ where we manually design the prompt, with trainable parameters, referred to as ”Predict the future time step given the {time series data type}” for 3 different time series (Trend, Season, Residual) after decomposition. Each design choice exerts a distinct impact on the performance metrics. The direct incorporation of textual information along with the prompt pool yields the most optimal and near-optimal outcomes. In future work, we aim to delve deeper into the analysis of multimodal solution design strategies for time series forecasting.

D.5. Hidden Representation

Figure 12 demonstrates the difference between the representation of the output hidden space from the pre-trained langauge model. While the representation of time series learned from GPT4TS is centered as a whole, the representation of the decomposed component from TEMPO implies a certain soft boundary between the three components. This is a demonstration of how TEMPO is able to learn the representation of trend, seasonality, residual parts respectively, which contributes to the superior performance of our model TEMPO.

F. Baseline Model Explanations

We demonstrate the baseline models we compared with in our experiments in the following:

• DLinear (Zeng et al., 2023): DLinear combines a decomposition scheme from Autoformer and FEDformer with linear layers to predict time series data by modeling trend and seasonal components separately and summing their features for enhanced performance in trend-rich datasets.

• PatchTST (Nie et al., 2023): PatchTST is a Transformer-based model for multivariate time series forecasting that segments data into subseries patches and uses a channel-independent design to efficiently reduce computational costs while enhancing long-term prediction accuracy.

• FEDformer (Zhou et al., 2022): FEDformer combines seasonal-trend decomposition with Transformers for time series forecasting, leveraging frequency insights for efficiency and accuracy, outperforming state-of-the-art methods.

• Informer (Zhou et al., 2021): Informer is a transformer-based model optimized for long sequence time-series forecasting, leveraging ProbSparse self-attention for efficiency, selfattention distilling for handling long inputs, and a generative decoder for rapid predictions.

• ETSformer (Woo et al., 2022): ETSformer is a novel Transformer architecture for timeseries forecasting that integrates exponential smoothing principles, replacing traditional self-attention with exponential smoothing attention and frequency attention, to enhance accuracy, efficiency, and interpretability.

• TimesNet (Wu et al., 2023): TimesNet transforms 1D time series into 2D tensors capturing intra- and inter-period variations and uses TimesBlock with an inception block to extract complex temporal patterns, excelling in multiple time series tasks.

• GPT-2 (Radford et al., 2019): GPT-2 is a decoder-based language model developed by OpenAI, designed to generate coherent and diverse textual content from a given prompt. In our work, we use the GPT-2 with 6 layers as the backbone, which is adapted from GPT4TS (Zhou et al., 2023).

• BERT (Devlin et al., 2019): BERT (Bidirectional Encoder Representations from Transformers) is an encoder-based deep learning model utilizing the Transformer architecture designed by Google to understand the context of words in a sentence by analyzing text bi-directionally.

• T5 (Raffel et al., 2020): T5 (Text-to-Text Transfer Transformer) is a state-of-the-art neural network model with encoder-decoder based architecture designed by Google that converts every language problem into a text-to-text format.

• LLaMA (Touvron et al., 2023): LLaMA (Large Langauge Model Meta AI) is a collection of state-of-the-art foundation language models ranging from 7B to 65B parameters delivering exceptional performance, while significantly reducing the needed computational power and resources. In our work, we use the first 6 layers of 7B LLaMA.

H. Details of the TETS Dataset

Time series data

Analyzing and forecasting a company’s future profitability and viability are essential for its development and investment strategies. Financial assessment and prediction are data-driven, mostly relying on the combination of diverse data types including company reports, etc. In this project, our primary sources are the company’s financial statements: balanced sheet, income statements, and cash flow statements.

The Standard & Poor’s 500 Index (S&P 500) represents a stock market index that measures the stock performance of the 500 largest companies in the U.S.11 sectors in the S&P500 are included in our dataset: Basic Materials (21 companies), Communication Services (26 companies), Energy (22 companies), Financial Services (69 companies), Healthcare (65 companies), Technology (71 companies), Utilities (30 companies), Consumer Cyclical (58 companies), Consumer Defensive (36 companies), Industrials (73 companies), Real Estate (32 companies). In terms of dataset division, we separate the sectors in our dataset to achieve both in-domain task setting and zero-shot task setting. The first seven sectors are treated as training and evaluation sectors, while the last four sectors are reserved as unseen sectors for zero-shot forecasting task.

To address missing numerical information for companies in the S&P 500 that lack data prior to 2010, we apply linear interpolation after experimenting with various methods. Linear interpolation is a technique that estimates a value within a range using two known end-point values. For missing values in research and development expenses, we adopted a zero-filling strategy. This is because null entries in these statements typically indicate that the company did not make any investment in that area.

Contextual data collection

The rise of Large-scale pre-trained models (LLMs) in the field of Natural Langauge Processing has provided new possibilities for their application in time seris analysis. LLMs have proven useful for analyzing and learning complicated relationships and making inferences across different time series sequences. However, most existing approaches primarily convert time series data to direct input into LLMs, overlooking the fact that the LLMs are pre-trained specifically for natural language and thus neglecting the incorporation of contextual data.

Further, the information contained in time series data is limited, especially in the financial field. Time series data in the financial field, such as company statements, primarily reflect the financial numeric changes based on the company’s historical strategy and broader macroeconomic shifts. These data contain the company’s internal historical information. However, the broader market environment, referred to as external information, also plays an important role in the company’s future development. For example, medicine and healthcare companies experienced steady growth before the outbreak of COVID-19. But between 2019 and 2020, after the outbreak of the pandemic, the financial statements of such companies were impacted significantly. As a result, we recognize the value of integrating news and reports as external data sources to complement internal information contained in time series data. The information contained in the external data mainly includes 3 parts: (i). Policy shifts across regions (ii). Significant events occurring globally (iii). Public reaction to companies’ products. Together, these elements provide supplementary information missing in time series data (internal data), therefore enhancing our forecasting capabilities.

Extracting contextual data, such as news and reports, from varied sources presents a significant challenge. In today’s digital age, numerous news websites and apps deliver a wide range of world news, spanning from influential news affecting entire industries to trivial, minor reports. Thus, it is crucial to filter and summarize the information, distinguishing between pivotal and less significant news. Fortunately, the recently released ChatGPT API2 by Open AI offers the capability of collecting and summarizing news and reports for a specified duration.

Through consolidating all relevant details – query, quarter, yearly context, company information, and specific requirements – into user message and setting a cap at 110 tokens for response, we can efficiently obtain the desired contextual information from ChatGPT API. For illustration, Figure 10 displays an example from company A, showcasing designed prompts and corresponding responses from ChatGPT 3.5. If the contextual information can not be generated, the API often returns messages with keywords such as ’unfortunately’ and ’sorry’. We detect and replace them with the term ’None’, representing neutral contextual information. Additionally, Figure 15 and 17 provide a illustration of our dataset, encompassing both time series data and the corresponding contextual texts. A detailed view of the contextual texts can be seen in Figure 16 and 18.

'Paper Writing 1 > Related_Work' 카테고리의 다른 글