-

Are Language Models Actually Useful for Time Series Forecasting?*Paper Writing 1/Related_Work 2024. 10. 10. 23:56

https://arxiv.org/pdf/2406.16964

https://github.com/BennyTMT/TS_models

(Jun 2024)

Abstract

Large language models (LLMs) are being applied to time series tasks, particularly time series forecasting. However, are language models actually useful for time series? After a series of ablation studies on three recent and popular LLM-based time series forecasting methods, we find that removing the LLM component or replacing it with a basic attention layer does not degrade the forecasting results—in most cases the results even improved. We also find that despite their significant computational cost, pretrained LLMs do no better than models trained from scratch, do not represent the sequential dependencies in time series, and do not assist in few-shot settings. Additionally, we explore time series encoders and reveal that patching and attention structures perform similarly to state-of-the-art LLM-based forecasters.1

1. Introduction

Time series analysis is a critical problem across many domains, including disease propagation forecasting [7], retail sales analysis [3], healthcare [23, 15] and finance [28]. A great deal of recent work in time series analysis (constituting repositories with more than 1200 total stars on GitHub) has focused on adapting pretrained large language models (LLMs) to classify, forecast, and detect anomalies in time series [13, 42, 19, 4, 5, 29, 12, 37, 14]. These papers posit that language models, being advanced models for sequential dependencies in text, may generalize to the sequential dependencies in time series data. This hypothesis is unsurprising given the popularity of language models in machine learning research writ large. So to what extent are language models really beneficial for traditional time series tasks?

Our main claim is simple but profound: popular methods for adapting language models for time series forecasting perform the same or worse than basic ablations, yet require orders of magnitude more compute. Derived from extensive ablations, these findings reveal a worrying trend in contemporary time series forecasting literature. Our goal is not to imply that language models will never be useful for time series. In fact, recent works point to many exciting and promising ways that language and time series interact, like time series reasoning [22] and social understanding [6]. Rather, we aim to highlight surprising findings that existing methods do very little to use the innate reasoning power of pretrained language models on established time series tasks.

We substantiate our claim by performing three ablations of three popular and recent LLM-based forecasting methods [42, 13, 19] using eight standard benchmark datasets from reference methods and another five datasets from MONASH [11]. First, we successfully reproduce results from the original publications. Then, we show that replacing language models with simple attention layers, basic transformer blocks, randomly-initialized language models, and even removing the language model entirely, yields comparable or better performance. The same performance was observed on another five datasets that were not studied by the reference methods.

Next, we compare the training and inference speed of these methods against their ablations, showing that these simpler methods reduce training and inference time by up to three orders of magnitude while maintaining comparable performance. Then, to investigate the source of LLM forecaster‘s performance, we further explore time series encoders. We find that a simple linear model with an encoder composed of patching and attention can achieve forecasting performance similar to that of LLMs. Next, we test whether the sequence modeling capabilities of LLMs transfer to time series by shuffling input time series and find no appreciable change in performance. Finally, we show that LLMs do not even help forecasting in few-shot settings with 10% of the training data. We discuss the implications of our findings and suggest that time series methods that use large language models are better left to multimodal applications [4, 10, 33] that require textual reasoning.

The key contributions we make in this paper are as follows:

• We propose three straightforward ablation methods for methods that pass time series into LLMs for forecasting. We then ablate three top-tier methods on thirteen standard datasets and find that LLMs fail to convincingly improve time series forecasting. However, they significantly increase computational costs in both training and inference.

• We study the impact of an LLM’s pretraining by re-initializing their weights prior to forecasting. We find that this has no impact on forecasting performance. Additionally, in shuffling input time series, we find no evidence the LLMs successfully transfer sequence modeling abilities from text to time series and no indication that they help in few-shot settings.

• We find a very simple model, with patching and attention as encoder, can achieve performance similar to LLMs. This suggests a massive gap between the benefits LLMs pose and the time series forecasting problem, despite a rapid rush to adopt LLMs.

2. Related Work

Here, we summarize the key related works for our paper. They can be broadly classified into three sections: (i) time series forecasting using LLMs; (ii) encoders in LLM time series models; and (iii) smaller and efficient neural models for time-series.

Time Series Forecasting Using LLMs.

Recently, with the development of Large Language Models (LLMs) [9, 26, 31] and their demonstrated multi-modal capabilities, more researchers have successfully applied LLMs to time series forecasting tasks [12, 14, 5, 4]. Chang et al., [5] used finetuning the transformer module and positional encoding in GPT-2 to align pre-trained LLMs with time series data for forecasting tasks. Zhou et al. [42] proposed a similar finetuning method, named “OneFitAll”, for time series forecasting with GPT-2. Additionally, Jin et al. [13] introduced a reprogramming method to align LLM’s Word Embedding with time series embeddings, showing good representation of time series data on LLaMA [31]. Similarly, LLATA [19] and TEST [29] adapted word embeddings to enable LLMs to forecast time series data effectively. In addition to time-series forecasting models, Liu et al. [20] show that these models can be extended to classifying health-time series, such as heart-rate and daily-footsteps. These models have also been shown to outperform supervised neural models in few-shot settings.

Encoders in LLM Time Series Models.

In order for an LLM to learn from text it must first be discretized and encoded as word tokens which are 1 × d vectors [9, 26, 31]. Similarly, LLM-based methods for time series learn discrete time series tokens. One method this is to segment the time series into overlapping patches, which effectively shortens the time series while retaining its features [13, 42, 5, 4, 25, 24]. Another method involves decomposing a method based on its trend, seasonal components, and residual components [4, 25]. Lastly, Liu et al. [19] feed the multivariate time series using a Transformer to enable different channels to learn the dynamics of other channels. These embedding procedures are followed by a linear neural network layer that projects the time series encoding to the same dimensions used by the pre-trained LLM.

Smaller and Efficient Neural Models.

In addition to LLMs, there has been a large body of research on smaller yet efficient frameworks that outperform their bulky counterparts in time series forecasting [17, 39, 30, 21, 2]. For example, Zeng et al. [38] present DLinear, an incredibly simple model that combines decomposition techniques and achieves better forecasting performance than state-of-the-art transformer-based time series architectures at the time, such as Informer [40], FEDformer [41], and Autoformer [35]. Furthermore, Xu et al. [36] introduces a lightweight model with only 10k parameters, which captures both amplitude and phase information in the time-series to outperform transformer-based models.

3. Experimental Setup

We use three state-of-the-art methods for time series forecasting and propose three ablation methods for LLMs: (i) “w/o LLM”; (ii) “LLM2Attn”; (iii) and “LLM2Trsf”. To evaluate the effectiveness of LLMs in time series forecasting, we test these methods on eight standard datasets.

3.1. Reference Methods for Language Models and Time Series

We experiment with three recent methods for time series forecasting using LLMs. All models were published between December 2023 and May 2024 and are popular, with their GitHub repositories collectively amassing 1,245 stars. These methods are summarized in Table 2, and use either GPT2 [26] or LLaMA [31] as base models, with different alignment and fine-tuning strategies.

• OneFitsAll [42]: OneFitsAll, sometimes called GPT4TS, applies instance norm and patching to the input time series and then feeds it into a linear layer to obtain a input representation for the language model. The multi-head attention and feed forward layers of the language model are frozen while the positional embeddings and layer norm are optimized during training. A final linear layer is used to transform the language model’s final hidden states into a prediction.

• Time-LLM [13]: In Time-LLM the input time series is tokenized via patching and aligned with a low-dimensional representation of word embeddings using multi-head attention. The outputs of this alignment, combined with the embeddings of descriptive statistical features, are passed to a frozen pre-trained language model. The output representations of the language model are then flattened and passed through a linear layer to obtain a forecast.

• LLaTA [19]: LLaTA embeds the input time series by treating each channel as a token. One half of the architecture is a “textual branch” which uses cross attention to align the time series representation with a low dimensional representation of the language model’s word embeddings. This representation is then passed through a pretrained, frozen language model to obtain a “textual prediction”. Simultaneously, a “temporal” branch learns a low-rank adapter for a pretrained language model based on the input time series to produce a “temporal prediction” which is used for inference. The model includes additional loss terms that enforce similarity between these representations.

Reproducibility Note.

While experimenting with each model, we tried to replicate the conditions of their original papers. We used the original hyper-parameters, runtime environments, and code, including model architectures, training loops, and data-loaders. To ensure a fair comparison, we have included error metrics from the original papers alongside our results wherever possible.

3.2. Proposed Ablations

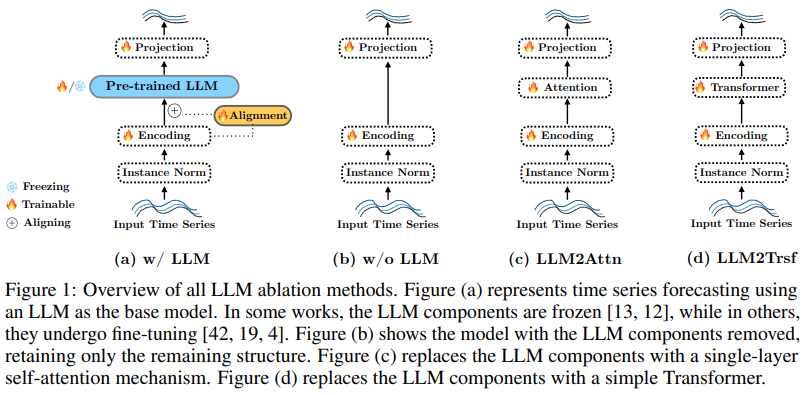

To isolate the influence of the LLM in an LLM-based forecaster, we propose three ablations: removing the LLM component or replacing it with a simple block. Specifically, for each of the three methods we make the following three modifications:

• w/o LLM (Figure 1 (b)). We remove the language model entirely, instead passing the input tokens directly to the reference method’s final layer.

• LLM2Attn (Figure 1 (c)). We replace the language model with a single randomly-initialized multi-head attention layer.

• LLM2Trsf (Figure 1 (d)). We replace the language model with a single randomly-initialized transformer block.

In the above ablations, we keep left parts of the forecasters unchanged (trainable). For example, as shown in Figure 1 (a), after removing the LLM, the input encodings are passed directly to the output projection. Alternatively, as shown in Figure 1 (b) or (c), after replacing the LLM with attention or a transformer, they are trained along with the remaining structure of the original method.

3.3. Datasets and Evaluation Metrics

Benchmark Datasets.

We evaluate on the following real-world datasets: (1) ETT [18]: encompasses seven factors related to electricity transformers across four subsets: ETTh1 and ETTh2, which have hourly recordings, and ETTm1 and ETTm2, which have recordings every 15 minutes; (2) Illness [35]: includes the weekly recorded influenza illness among patients from the Centers for Disease Control, which describes the ratio of patients seen with influenza-like illness to the total number of patients; (3) Weather [35]: local climate data from 1,600 U.S. locations, between 2010 and 2013, and each data point consists of 11 climate features; (4) Traffic [35]: is an hourly dataset from California transportation department, and consists of road occupancy rates measured on San Francisco Bay area freeways; (5) Electricity [32]: contains the hourly electricity consumption of 321 customers from 2012 to 2014. The train-val-test split for ETT datasets is 60%-20%-20%, and for Illness, Weather, and Electricity datasets is 70%-10%-20% respectively. The statistics for all datasets is given in Table 1. We highlight that these datasets, with the same splits and size, have been extensively used to evaluate time-series forecasting ability of LLM-based and other neural models for time-series data [40, 42, 4, 13, 5, 38, 35, 41]. (6) Exchange Rate [16]: collected between 1990 and 2016, it contains daily exchange rates for the currencies of eight countries (Australia, British, Canada, Switzerland, China, Japan, New Zealand and Singapore). (7) Covid Deaths [11]: contains daily statistics of COVID-19 deaths in 266 countries and states between January and August 2020. (8) Taxi (30 min) [1]: contains taxi rides from 1,214 locations in New York City between January 2015 and January 2016. The data is collected every 30 minutes, with an average of 1,478 samples. (9) NN5 (Daily) [11]: contains daily cash withdrawal data from 111 ATMs in the UK, with each ATM having 791 data points. (10) FRED-MD [11]: contains 107 monthly macroeconomic indices released by the Federal Reserve Bank since 01/01/1959. It was extracted from the FRED-MD database.

Evaluation Metrics and Setup.

We report the results in terms of mean absolute error (MAE) and mean squared error (MSE) between predicted and true values of the time-series. Mathematically, given

4. Results

In this section, we provide the details of our comprehensive evaluation of all baseline LLM models for time-series forecasting. Specifically, we ask the following research questions. (RQ1) Do pretrained language models contribute to forecasting performance? (RQ2) Are LLM-based methods worth the computational cost? (RQ3) Does language model pretraining help performance on forecasting tasks? (RQ4) Do LLMs represent sequential dependencies in time series? (RQ5) Do LLMs help with few-shot learning? (RQ6) Where does the performance come from?

4.1. Do pretrained language models contribute to forecasting performance? (RQ1)

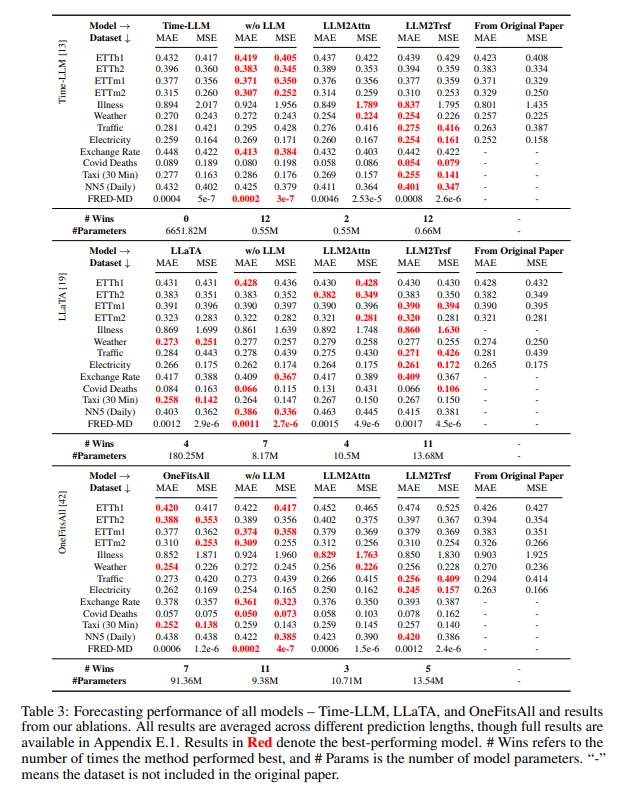

Our results show that pretrained LLMs are not useful for time series forecasting tasks yet. Overall, as shown in Table 3, across 8 datasets and two metrics, ablations out perform Time-LLM methods in 26/26 cases, LLaTA in 22/26 cases, and OneFitsAll in 19/26 cases. We averaged results over different predicting lengths, as in [42, 13, 19]. Across all prediction lengths (eight datasets and four prediction lengths) ablations outperformed Time-LLM, LLaTA, and OneFitsAll in 35/40, 31/40, and 29/40 cases as measured by MAE, respectively. To ensure a fair comparison, we also report results from each method’s original paper alongside our replication. For specific results refer to Appendix E.1. To better evaluate the effectiveness of LLMs and ablation methods, we include 95% bootstrapped confidence intervals for each task. In tasks where LLMs performed better, such as OneFitsAll with ETTh1, shown in Figure 2, there is still substantial overlap in the confidence intervals with the ablation method “w/o LLM” in MAE. Other datasets results and MSE metrics are shown in Figure 5 and Figure 6 in the Appendix. To summarize, our results on the evaluation above, it is hard to conclude that LLMs are effective in time series forecasting.

4.2. Are LLM-based methods worth the computational cost? (RQ2)

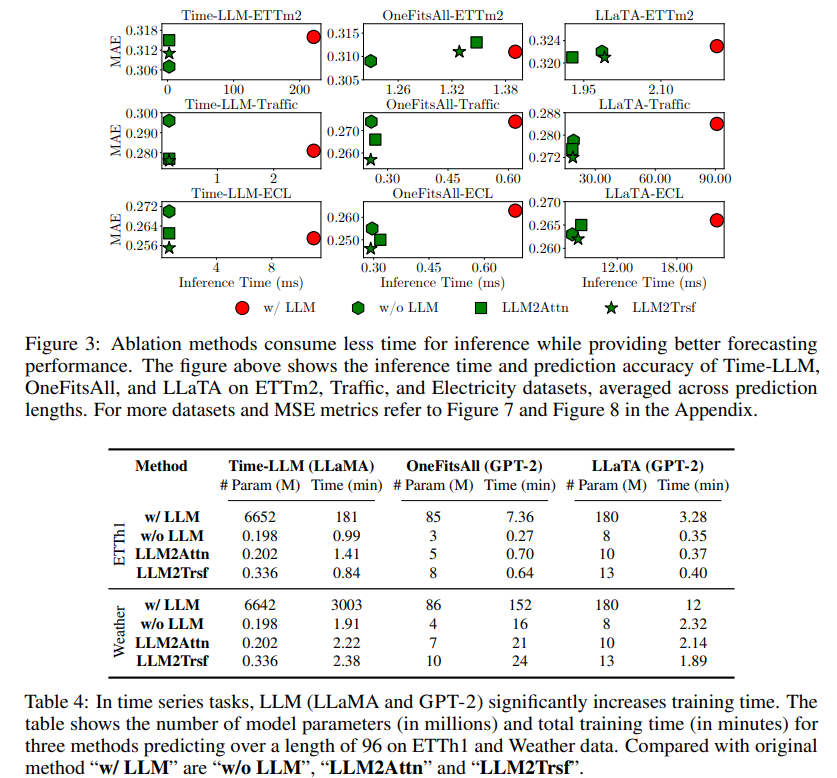

In the previous section, we showed that LLMs do not meaningfully improve performance on time series forecasting tasks. Here, we evaluate the computational intensity of these methods with their nominal performance in mind. The language models in our reference methods use hundreds of millions and sometimes billions of parameters to perform time series forecasting. Even when the parameters of the language models are frozen they still contribute to substantial overhead during training and inference. For instance, Time-LLM has 6642 M parameters and takes 3003 minutes to train on the Weather dataset whereas ablation methods have only 0.245 M parameters and take 2.17 minutes on average. Information about training other methods on ETTh1 and Weather datasets are shown in Table 4. In the case of inference time, we divide by the maximum batch size to give an estimate of inference time per example. Time-LLM, OneFitsAll, and LLaTA take, on average, 28.2, 2.3, and 1.2 times longer than the modified models. Examples can be seen in Figure 3, where the green marks (ablation methods) are typically below the red one (LLM) and are positioned towards the left of the axis, indicating a lower computational costs and better forecasting performance. Other datasets and MSE metric refer to Figure 7 and Figure 8 in Appendix. In conclusion, the computational intensity of LLMs in time series forecasting tasks does not result in a corresponding performance improvement.

4.3. Does language model pretraining help performance on forecasting tasks? (RQ3)

Our evaluation in this section indicates that pretraining with language datasets is unnecessary for time series forecasting. To test whether the knowledge learned during pretraining meaningfully improves forecasting performance we experimented with different combinations of pretraining and finetuning LLaTA’s [19] language model on time series.

• Pretrain + Finetune (Pre+FT). This is the original method, wherein a pretrained language model is finetuned on time series data. In the case of LLaTA, the base language model is frozen and low rank adapters (LoRA) are learned.

• Random Initialization + Finetune (woPre+FT). Does the textual knowledge from pretraining aid time series forecasting? In this method we randomly initialize the weights of the language model (thereby erasing any effect of pretraining) and train the LLM from scratch.

• Pretrain + No Finetuning (Pre+woFT). How much does finetuning on time series improve prediction performance? For this baseline we again leave the language model frozen and forgo learning LoRAs. Results from this model are therefore indicative of the base language model’s performance without additional guidance on processing time series.

• Random Initialization + No Finetuning (woPre+woFT). This baseline is effectively a random projection from the input time series to a forecasting prediction and serves as a baseline comparison with the other methods.

Overall, as shown in Table 5, across 8 datasets using MAE and MSE metrics, the "Pretraining + Finetune" method performed the best 3 times, while "Random Initialization + Finetune" achieved this 8 times. This indicates that language knowledge offers very limited help for forecasting. However, "Pretrain + No Finetuning" and the baseline "Random Initialization + No Finetuning" performed the best 5 times and 0 times, respectively, suggesting that Language knowledge does not contribute meaningfully during the finetuning process. Detailed results refer to Table 18 in Appendix.

In summary, textual knowledge from pretraining provides very limited aids for time series forecasting.

4.4. Do LLMs represent sequential dependencies in time series? (RQ4)

Most time series forecasting methods that use LLMs finetune the positional encoding to help understand the position of time steps in the sequence [4, 42, 19, 5, 29]. We would expect a time series model with good positional representations to show a significant drop in predictive performance when the input is shuffled [38]. We applied three types of shuffling to the time series: shuffling the entire sequence randomly ("sf-all"), shuffling only the first half of the sequence ("sf-half"), and swapping the first and second halves of the sequence ("ex-half"). As shown in Table 6, LLM-based methods were no more vulnerable to input shuffling than their ablations. This implies that LLMs do not have unique capabilities for representing sequential dependencies in time series.

4.5. Do LLMs help with few-shot learning in forecasting? (RQ5)

In this section, our evaluation demonstrates that LLMs are still not meaningfully useful in few-shot learning scenarios.

While our results indicate that LLMs are not useful for time series forecasting, it is nonetheless possible that knowledge encoded in pretrained weights could help performance in few-shot settings where data are scarce. To evaluate whether this is the case we trained models and their ablations on 10% of each dataset. Specifically, we evaluated LLaMA in Time-LLM methods. The results for LLaMA, shown in Table 7, compared LLaMA with completely removing the LLM (w/o LLM). There was no difference, with each performing better in 8 cases. We conducted similar experiments with LLaTA, a GPT-2-based method. Our results in Table 8 indicate that our ablations can perform better than LLMs in few-shot scenarios.

4.6. Where does the performance come from? (RQ6)

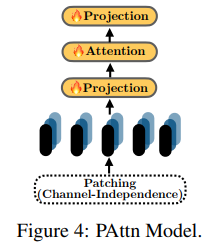

In this section, we evaluate common encoding techniques used in LLM time series models. We find that combining patching with one-layer attention is a simple and effective choice.

In subsection 3.2, we found that simple ablations of LLM-based methods did not decrease performance. To understand why such simple methods work so well we selected some popular techniques used for encoding in LLM time series tasks, such as patching [42, 13, 5, 29, 19], decomposition [4, 25]. A basic transformer block also can be used to aid in encoding [19].

The specific results, shown in Table 19 in the Appendix, indicate that a structure combining patching and attention, named “PAttn”, performs better than most other encoding methods on small datasets (with time stamps less than 1 million) and is even comparable to LLM methods. Its detailed structure, as shown in Figure 4, involves applying "instance norm" to the time series, followed by patching and projection. Then, one-layer attention enables feature learning between patches. For larger datasets, such as Traffic (~15 million) and Electricity (~8 million), a single-layer linear model with a basic transformer, named "LTrsf," performs better in encoding. In those methods, finally, time series embedding will be projected with a single linear layer to forecast. Details of other encoders is in Appendix subsection D.3.

Overall, patching plays a crucial role in encoding. Additionally, basic Attention and Transformer block also effectively aid in encoding.

5. Conclusion

In this paper we showed that despite the recent popularity of LLMs in time series forecasting they do not appear to meaningfully improve performance. We experimented with simple ablations, showing that they maintain or improve the performance of the LLM-based counterparts while requiring considerably less compute. Once more, our goal is not to suggest that LLMs have no place in time series analysis. To do so would likely prove to be a shortsighted claim. Rather, we suggest that the community should dedicate more focus to the exciting tasks could be unlocked by LLMs at the interface of time series and language such as time series reasoning [22], or social understanding [6].

A. Limitations

Here, we discuss the limitations of our paper.

1. We evaluate the ability of LLMs using time-series forecasting. However, to get a better picture of how LLMs can work with time-series, this ability should be evaluated across other downstream tasks as well, such as time-series classification and question-answering.

2. Our evaluation is limited to only time-series datasets, i.e., sequences with even time-intervals. However, there also exists a large fraction of data in the form of non-uniform series, such as payment records, online purchases, etc. Understanding the ability of LLMs in forecasting non-uniform sequences is also necessary to verify our claim on the usefulness of LLMs for time-series data.

B. Broader Societal Impact

One of the major impacts our study will have is on the influx of models that use LLMs for modeling time-series. Our results will help researchers to not simply follow the trend of using LLMs in all applications, but to check their usability in detail. Specifically, these findings will help them determine if the LLM component is necessary and if the computational costs are reasonable for the specific setting. In addition to the research community, our findings on the better performance of smaller and simpler models will help develop scalable models that are easy to understand, interpret, and can be deployed cheaply in real-world applications. While we agree that a majority of our results are experimental and limited to selected datasets, we feel that these results will also help researchers narrow down their search space for better models in time-series forecasting, and not simply neglect the simpler models.

D. Additional Experimental Details

D.1. System Configuration

We train and evaluate each reference method and each architecture modification using the same device. For Time-LLM [13], applying LlaMA-7B [31], we use NVIDIA A100 GPU with 80GB memory. For other methods [42, 19], applying GPT-2 [26], we use NVIDIA RTX A6000 GPU with 48GB memory. Though an analysis of memory footprint is beyond scope of this research, we note that training the baselines in the absence of LLM can be done within smaller GPUs as well.

D.2. Baseline Hyper-Parameter Details

When reproducing the reference methods, we used the original repository’s hyper-parameters and model structures. In the ablation study, due to the smaller model parameters, we adjusted the learning rate or increased the batch size in some cases. All other training details remained identical with the reference methods. The specific training details and hyper-parameters are provided in the code and run scripts of the repository2 . Note that the training process and hyper-parameters for the simple methods can also be accessed via this link.

https://anonymous.4open.science/r/TS_Models-D1BC/

D.3. Details of Simple Methods

To investigate the source of LLM method performance, we conducted further research on encoders. We used various encoders to encode time series data, followed by a linear layer to project the time series embeddings to the forecast. The encoder structure combining patching and attention is shown in Figure 4. In addition, we propose three different neural models (i) “LTrsf”: which performs better on larger datasets, directly projects the time series into a transformer for encoding; (ii) `‘D-LTrsf”; and (iii) “D-PAttn”: both of them decompose the time series into three sub-sequences and forecast each using the above two methods respectively, and then linearly combine the results for the final forecast. Across our results in Table 19, we note that even simpler models significantly outperform LLM-based time-series models. In detail, the LLM-based models, all combined we able to appear 33 times as the best and the second-best performer. However, ‘PAttn’ was outperforming them by appearing 34 times as the best and the second-best performer.

E. Additional Experiments

Here we present the results of additional experiments that also highlight the ability of LLMs in modeling time-series data.

E.1. Confidence Intervals for Forecasting

Since LLMs and deep learning models in general are probabilistic in nature, their predictions can vary across different runs and different random initializations. Thus, we report the confidence intervals (CIs) for all MAE and MSE predictions made by our baseline models. We report the CIs for MAE prediction by Time-LLM, LLaTA, OneFitsAll in Tables 9, 11, and 13, for MSE predictions in Tables 10, 12, and 14, respectively. Across all results, we note that the range of variation is quite small, and these intervals do not affect our observations. To illustrate the subtle differences more clearly, we present the visualized results in Figure 5 and Figure 6.

Confidence Intervals for other Datasets.

To evaluate the generality of the ablations in the paper, we introduce five additional datasets that have not been studied by the reference methods [42, 13, 19]. The above datasets are used in many time series forecasting studies [27, 8, 34, 1, 38]. The prediction lengths for the “Exchange Rate” are "96, 192, 336, 720", as in [38, 16]. The prediction lengths for the other four datasets are 30, 48, 56, and 12, respectively, following the settings in Chronos [1]. As shown in Tables 15, 16, and 17, the forecasting performance on the five new datasets, using the three methods [42, 13, 19] we referenced, still demonstrates that language models are unnecessary for forecasting tasks.

E.2. Complete Results

Here we provide the results for all methods and datasets that we were unable to add to the main paper.

Inference Times.

All results regarding “inference time” and forecast performance are shown in Figure 7 and Figure 8 respectively.

Randomized Parameters and Encoder Exploration.

The results of the randomized parameters are shown in Table 18. The results of the encoders’ exploration are shown in Table 19.

Random-Shuffling of Inputs.

The remaining results for shuffled input are shown in Table 20.

References

[1] A. F. Ansari, L. Stella, C. Turkmen, X. Zhang, P. Mercado, H. Shen, O. Shchur, S. S. Rangapuram, S. P. Arango, S. Kapoor, J. Zschiegner, D. C. Maddix, H. Wang, M. W. Mahoney, K. Torkkola, A. G. Wilson, M. Bohlke-Schneider, and Y. Wang. Chronos: Learning the language of time series, 2024.

[4] D. Cao, F. Jia, S. O. Arik, T. Pfister, Y. Zheng, W. Ye, and Y. Liu. Tempo: Prompt-based generative pre-trained transformer for time series forecasting. In ICLR, 2024.

[5] C. Chang, W.-C. Peng, and T.-F. Chen. Llm4ts: Two-stage fine-tuning for time-series forecasting with pre-trained llms. arXiv preprint arXiv:2308.08469, 2023.

[6] J. Cheng and P. Chin. Sociodojo: Building lifelong analytical agents with real-world text and time series. In ICLR, 2024.

[10] R. Girdhar, A. El-Nouby, Z. Liu, M. Singh, K. V. Alwala, A. Joulin, and I. Misra. Imagebind: One embedding space to bind them all. In CVPR, 2023.

[12] N. Gruver, M. Finzi, S. Qiu, and A. G. Wilson. Large language models are zero-shot time series forecasters. In NeurIPS, 2023.

[13] M. Jin, S. Wang, L. Ma, Z. Chu, J. Y. Zhang, X. Shi, P.-Y. Chen, Y. Liang, Y.-F. Li, S. Pan, et al. Time-llm: Time series forecasting by reprogramming large language models. In ICLR, 2024.

[14] M. Jin, Y. Zhang, W. Chen, K. Zhang, Y. Liang, B. Yang, J. Wang, S. Pan, and Q. Wen. Position paper: What can large language models tell us about time series analysis. In ICML, 2024.

[17] S. Lee, T. Park, and K. Lee. Learning to embed time series patches independently. In ICLR, 2024.

[19] P. Liu, H. Guo, T. Dai, N. Li, J. Bao, X. Ren, Y. Jiang, and S.-T. Xia. Taming pre-trained llms for generalised time series forecasting via cross-modal knowledge distillation. arXiv preprint arXiv:2403.07300, 2024.

[20] X. Liu, D. McDuff, G. Kovacs, I. Galatzer-Levy, J. Sunshine, J. Zhan, M.-Z. Poh, S. Liao, P. Di Achille, and S. Patel. Large language models are few-shot health learners. arXiv preprint arXiv:2305.15525, 2023.

[22] M. A. Merrill, M. Tan, V. Gupta, T. Hartvigsen, and T. Althoff. Language models still struggle to zero-shot reason about time series. arXiv preprint arXiv:2404.11757, 2024.

[23] M. A. Morid, O. R. L. Sheng, and J. Dunbar. Time series prediction using deep learning methods in healthcare. ACM Trans. Manage. Inf. Syst., 14(1), 2023.

[24] Y. Nie, N. H. Nguyen, P. Sinthong, and J. Kalagnanam. A time series is worth 64 words: Long-term forecasting with transformers. In ICLR, 2023.

[25] Z. Pan, Y. Jiang, S. Garg, A. Schneider, Y. Nevmyvaka, and D. Song. Ip-llm: Semantic space informed prompt learning with llm for time series forecasting. arXiv preprint arXiv:2403.05798, 2024.

[27] K. Rasul, A. Ashok, A. R. Williams, A. Khorasani, G. Adamopoulos, R. Bhagwatkar, M. Biloš, H. Ghonia, N. V. Hassen, A. Schneider, et al. Lag-llama: Towards foundation models for time series forecasting. arXiv preprint arXiv:2310.08278, 2023.

[29] C. Sun, Y. Li, H. Li, and S. Hong. Test: Text prototype aligned embedding to activate llm’s ability for time series. In ICLR, 2024.

[30] S. J. Talukder and G. Gkioxari. Time series modeling at scale: A universal representation across tasks and domains. 2023.

[33] C. Wimmer and N. Rekabsaz. Leveraging vision-language models for granular market change prediction. arXiv preprint arXiv:2301.10166, 2023.

[34] G. Woo, C. Liu, A. Kumar, C. Xiong, S. Savarese, and D. Sahoo. Unified training of universal time series forecasting transformers. 2024.

[37] H. Xue and F. D. Salim. Promptcast: A new prompt-based learning paradigm for time series forecasting. IEEE Transactions on Knowledge and Data Engineering, 2023.

[38] A. Zeng, M. Chen, L. Zhang, and Q. Xu. Are transformers effective for time series forecasting? In AAAI, 2023.

[39] L. Zhao and Y. Shen. Rethinking channel dependence for multivariate time series forecasting: Learning from leading indicators. In ICLR, 2024.

[42] T. Zhou, P. Niu, L. Sun, R. Jin, et al. One fits all: Power general time series analysis by pretrained lm. In NeurIPS, 2023.

'*Paper Writing 1 > Related_Work' 카테고리의 다른 글