
[ORPO] Monolithic Preference Optimization without Reference Model

*RL/RL 2024. 12. 14. 12:10

https://arxiv.org/pdf/2403.07691

https://github.com/xfactlab/orpo

Abstract

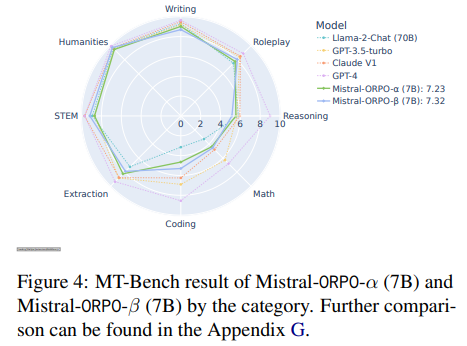

While recent preference alignment algorithms for language models have demonstrated promising results, supervised fine-tuning (SFT) remains imperative for achieving successful convergence. In this paper, we study the crucial role of SFT within the context of preference alignment, emphasizing that a minor penalty for the disfavored generation style is sufficient for preference-aligned SFT. Building on this foundation, we introduce a straightforward and innovative reference model-free monolithic odds ratio preference optimization algorithm, ORPO, eliminating the necessity for an additional preference alignment phase. We demonstrate, both empirically and theoretically, that the odds ratio is a sensible choice for contrasting favored and disfavored styles during SFT across diverse model sizes from 125M to 7B. Specifically, fine-tuning Phi-2 (2.7B), Llama-2 (7B), and Mistral (7B) with ORPO on UltraFeedback alone surpasses the performance of state-of-the-art language models with more than 7B and 13B parameters: achieving up to 12.20% on AlpacaEval2.0 (Figure 1), 66.19% on IFEval (instruction-level loose, Table 6), and 7.32 in MT-Bench (Figure 12). We release code and model checkpoints for Mistral-ORPO-α (7B) and Mistral-ORPO-β (7B).

1. Introduction

Pre-trained language models (PLMs) with vast training corpora such as web texts (Gokaslan and Cohen, 2019; Penedo et al., 2023) or textbooks (Li et al., 2023c) have shown remarkable abilities in diverse natural language processing (NLP) tasks (Brown et al., 2020; Zhang et al., 2022; Touvron et al., 2023; Jiang et al., 2023; Almazrouei et al., 2023). However, the models must undergo further tuning to be usable in general-domain applications, typically through processes such as instruction tuning and preference alignment.

Instruction-tuning (Wei et al., 2022; Taori et al., 2023; Wang et al., 2023; Zhou et al., 2023a) trains models to follow task descriptions given in natural language, which enables models to generalize well to previously unseen tasks. However, despite the ability to follow instructions, models may generate harmful or unethical outputs (Carlini et al., 2021; Gehman et al., 2020; Pryzant et al., 2023). To further align these models with human values, additional training is required with pairwise preference data using techniques such as reinforcement learning with human feedback (Ziegler et al., 2020; Stiennon et al., 2022, RLHF) and direct preference optimization (Rafailov et al., 2023, DPO).

Preference alignment methods have demonstrated success in several downstream tasks beyond reducing harm, for example, improving factuality (Tian et al., 2023; Cheng et al., 2024; Chen and Li, 2024), code-based question answering (Gorbatovski and Kovalchuk, 2024), and machine translation (Ramos et al., 2023). The versatility of alignment algorithms over a wide range of downstream tasks highlights the necessity of understanding the alignment procedure and further improving the algorithms in terms of efficiency and performance. However, existing preference alignment methods normally consist of a multi-stage process, as shown in Figure 2, typically requiring a second reference model and a separate warm-up phase with supervised fine-tuning (SFT) (Ziegler et al., 2020; Rafailov et al., 2023; Wu et al., 2023).

In this paper, we study the role and impact of SFT in pairwise preference datasets for model alignment in Section 3 and propose a simple and novel monolithic alignment method, odds ratio preference optimization (ORPO), which efficiently penalizes the model for learning undesired generation styles during SFT in Section 4. In contrast to previous works, our approach requires neither an SFT warm-up stage nor a reference model, enabling resource-efficient development of preference-based aligned models.

We demonstrate the effectiveness of our method with the evaluation of model alignment tasks and popular leaderboards in Sections 6.1 and 6.2 by fine-tuning Phi-2 (2.7B), Llama-2 (7B), and Mistral (7B) with ORPO. Then, we conduct controlled experiments comparing ORPO against established methods for model alignment, RLHF and DPO, for different datasets and model sizes in Section 6.3. Along with the post-hoc analysis of generation diversity in Section 6.4, we expound on the theoretical, empirical, and computational justification of utilizing the odds ratio in monolithic preference alignment in Section 7.3. We release the training code and the checkpoints for Mistral-ORPO-α (7B) and Mistral-ORPO-β (7B). These models achieve 7.23 and 7.32 in MT-Bench, 11.33% and 12.20% on AlpacaEval2.0, and 61.63% and 66.19% in IFEval instruction-level loose accuracy, respectively.

2. Related Works

Alignment with Reinforcement Learning

Reinforcement learning with human feedback (RLHF) commonly applies the Bradley-Terry model (Bradley and Terry, 1952) to estimate the probability of a pairwise competition between two independently evaluated instances. An additional reward model is trained to score instances. Reinforcement learning algorithms such as proximal policy optimization (PPO) (Schulman et al., 2017) are employed to train the model to maximize the score of the reward model for the chosen response, resulting in language models that are trained with human preferences (Ziegler et al., 2020; Stiennon et al., 2022). Notably, Ouyang et al. (2022) demonstrated the scalability and versatility of RLHF for instruction-following language models. Extensions such as language model feedback (RLAIF) could be a viable alternative to human feedback (Bai et al., 2022b; Lee et al., 2023; Pang et al., 2023). However, RLHF faces challenges of extensive hyperparameter searching due to the instability of PPO (Rafailov et al., 2023; Wu et al., 2023) and the sensitivity of the reward models (Gao et al., 2022; Wang et al., 2024). Therefore, there is a crucial need for stable preference alignment algorithms.

Alignment without Reward Model

Several techniques for preference alignment mitigate the need for reinforcement learning (Rafailov et al., 2023; Song et al., 2023; Azar et al., 2023; Ethayarajh et al., 2024). Rafailov et al. (2023) introduce direct preference optimization (DPO), which combines the reward modeling stage into the preference learning stage. Azar et al. (2023) prevented potential overfitting problems in DPO through identity preference optimization (IPO). Ethayarajh et al. (2024) and Cai et al. (2023) proposed Kahneman-Tversky Optimisation (KTO) and Unified Language Model Alignment (ULMA), which do not require the pairwise preference dataset, unlike RLHF and DPO. Song et al. (2023) further suggest incorporating the softmax value of the reference response set in the negative log-likelihood loss to merge the supervised fine-tuning and preference alignment.

Alignment with Supervised Fine-tuning

Preference alignment methods in reinforcement learning (RL) often leverage supervised fine-tuning (SFT) to ensure the stable update of the active policy in relation to the old policy (Schulman et al., 2017). This is because the SFT model is the old policy in the context of RLHF (Ziegler et al., 2020). Furthermore, empirical findings indicate that, even in non-RL alignment methods, the SFT model is crucial for achieving convergence to desired results (Rafailov et al., 2023; Tunstall et al., 2023).

In contrast, there have been approaches to build human-aligned language models by conducting SFT only with filtered datasets (Zhou et al., 2023a; Li et al., 2023a; Haggerty and Chandra, 2024; Zhou et al., 2023b). Zhou et al. (2023a) demonstrated that SFT with a small amount of data with fine-grained filtering and curation could be sufficient for building helpful language model assistants. Furthermore, Li et al. (2023a) and Haggerty and Chandra (2024) proposed an iterative process of fine-tuning the supervised fine-tuned language models with their own generations after fine-grained selection of aligned generations, and Zhou et al. (2023b) suggested that a curated subset of a preference dataset is sufficient for alignment. While these works highlight the impact and significance of SFT in the context of alignment, the actual role of SFT and the theoretical background for incorporating preference alignment in SFT remain understudied.

3. The Role of Supervised Fine-tuning

We study the behavior of supervised fine-tuning (SFT) as an initial stage of preference alignment methods (Ziegler et al., 2020; Rafailov et al., 2023) through analysis of the loss function in SFT and empirical demonstration of the preference comprehension ability of the trained SFT model. SFT plays a significant role in tailoring the pre-trained language models to the desired domain (Zhou et al., 2023a; Dong et al., 2024) by increasing the log probabilities of pertinent tokens. Nevertheless, this inadvertently increases the likelihood of generating tokens in undesirable styles, as illustrated in Figure 3. Therefore, it is necessary to develop methods capable of preserving the domain adaptation role of SFT while concurrently discerning and mitigating unwanted generation styles.

Absence of Penalty in Cross-Entropy Loss

The goal of cross-entropy loss model fine-tuning is to penalize the model if the predicted logits for the reference answers are low, as shown in Equation 2.

where y_i is a boolean value that indicates if ith token in the vocabulary set V is a label token, p_i refers to the probability of ith token, and m is the length of sequence. Using cross-entropy alone gives no direct penalty or compensation for the logits of non-answer tokens (Lin et al., 2017) as y_i will be set to 0. While cross-entropy is generally effective for domain adaptation (Mao et al., 2023), there are no mechanisms to penalize rejected responses when compensating for the chosen responses. Therefore, the log probabilities of the tokens in the rejected responses increase along with the chosen responses, which is not desired from the viewpoint of preference alignment.
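The point about non-answer tokens can be checked with a small sketch. It assumes the loss has the usual form described above (the mean over the m positions of the negative log-probability of the label token, with y_i acting as a one-hot mask); the function name and the toy probability vectors are illustrative only.

```python
import math

def cross_entropy(probs_per_step, label_ids):
    """Mean negative log-probability of the label token at each position.

    probs_per_step: list of m probability vectors over the vocabulary V.
    label_ids: list of m label-token indices.
    """
    m = len(label_ids)
    total = 0.0
    for probs, label in zip(probs_per_step, label_ids):
        # The one-hot y_i zeroes out every term except the label token's.
        total += sum((1.0 if i == label else 0.0) * math.log(p)
                     for i, p in enumerate(probs))
    return -total / m

# Two hypothetical models that agree on the label token (index 0) but
# distribute the remaining mass differently over the non-label tokens.
model_a = [[0.6, 0.3, 0.1]]
model_b = [[0.6, 0.1, 0.3]]
loss_a = cross_entropy(model_a, [0])
loss_b = cross_entropy(model_b, [0])
print(loss_a, loss_b)
```

Both losses are identical, because only the label token's probability enters the sum: how the model ranks the other tokens, including tokens that belong to a rejected response, is not directly penalized.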

Generalization over Both Response Styles

We conduct a pilot study to empirically demonstrate the miscalibration of chosen and rejected responses with supervised fine-tuning alone. We fine-tune OPT-350M (Zhang et al., 2022) on the chosen responses only from the HH-RLHF dataset (Bai et al., 2022b). Throughout the training, we monitor the log probability of rejected responses for each batch and report this in Figure 3. Both the log probability of chosen and rejected responses exhibited a simultaneous increase. This can be interpreted from two different perspectives. First, the cross-entropy loss effectively guides the model toward the intended domain (e.g., dialogue). Second, the absence of a penalty for unwanted generations results in rejected responses sometimes having even higher log probabilities than the chosen ones.

Penalizing Undesired Generations

Appending an unlikelihood penalty to the loss has demonstrated success in reducing unwanted degenerative traits in models (Welleck et al., 2019; Li et al., 2020). For example, to prevent repetitions, an unwanted token set of previous contexts, k ∈ C_recent, is disfavored by adding a term based on (1 − p^(k)_i) to the loss (such as Equation 2), which penalizes the model for assigning high probabilities to recent tokens. Motivated by SFT ascribing high probabilities to rejected tokens (Figure 3) and the effectiveness of appending penalties for unwanted traits, we design a monolithic preference alignment method that dynamically penalizes the disfavored response for each query without the need for crafting sets of rejected tokens.
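As a rough illustration of such a penalty, the sketch below uses the common unlikelihood form, −log(1 − p_k) summed over the unwanted tokens at one decoding step. The exact term used in the cited works may be weighted or averaged differently; the function name and the toy distributions are assumptions for illustration.

```python
import math

def unlikelihood_penalty(probs, unwanted_ids):
    """Sum of -log(1 - p_k) over the unwanted token ids at one step."""
    return -sum(math.log(1.0 - probs[k]) for k in unwanted_ids)

# Token 1 plays the role of a recently generated (unwanted) token.
probs_low = [0.90, 0.05, 0.05]    # model barely considers repeating it
probs_high = [0.10, 0.80, 0.10]   # model strongly prefers repeating it
penalty_low = unlikelihood_penalty(probs_low, [1])
penalty_high = unlikelihood_penalty(probs_high, [1])
print(penalty_low, penalty_high)
```

The penalty grows as the model puts more mass on the unwanted token, which is the behavior that a hand-crafted set such as C_recent is meant to discourage.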

4. Odds Ratio Preference Optimization

We introduce a novel preference alignment algorithm, Odds Ratio Preference Optimization (ORPO), which incorporates an odds ratio-based penalty to the conventional negative log-likelihood (NLL) loss for differentiating the generation styles between favored and disfavored responses.

4.1. Preliminaries

Given an input sequence x, the average log-likelihood of generating the output sequence y, of length m tokens, is computed as Equation 3. The odds of generating the output sequence y given an input sequence x, odds_θ(y|x) = P_θ(y|x) / (1 − P_θ(y|x)), is defined in Equation 4.

Intuitively, odds_θ(y|x) = k implies that it is k times more likely for the model θ to generate the output sequence y than not generating it. Thus, the odds ratio of the chosen response y_w over the rejected response y_l, OR_θ(y_w, y_l) = odds_θ(y_w|x) / odds_θ(y_l|x), indicates how much more likely it is for the model θ to generate y_w than y_l given input x, defined in Equation 5.
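A minimal sketch of these quantities, assuming that P(y|x) in the odds is the exponential of the average per-token log-likelihood (our reading of Equations 3 and 4). The helper names and the toy per-token log-probabilities are hypothetical.

```python
import math

def avg_log_likelihood(token_log_probs):
    """Average per-token log-probability of a response (length m)."""
    return sum(token_log_probs) / len(token_log_probs)

def log_odds(avg_logp):
    """log(P / (1 - P)) with P = exp(avg_logp), computed in log space."""
    return avg_logp - math.log1p(-math.exp(avg_logp))

def log_odds_ratio(logp_w, logp_l):
    """log OR(y_w, y_l) = log odds(y_w|x) - log odds(y_l|x)."""
    return log_odds(logp_w) - log_odds(logp_l)

# Toy per-token log-probabilities for a chosen and a rejected response.
logp_w = avg_log_likelihood([-0.2, -0.4, -0.3])
logp_l = avg_log_likelihood([-1.0, -1.4, -1.2])
print(math.exp(log_odds(logp_w)), math.exp(log_odds(logp_l)))
print(log_odds_ratio(logp_w, logp_l))
```

Working with log odds rather than raw odds avoids overflow when P(y|x) is close to 1, and a positive log odds ratio means the model currently favors the chosen response.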

4.2. Objective Function of ORPO

The objective function of ORPO in Equation 6 consists of two components: 1) supervised fine-tuning (SFT) loss (L_SFT); 2) relative ratio loss (L_OR).

L_SFT follows the conventional causal language modeling negative log-likelihood (NLL) loss function to maximize the likelihood of generating the reference tokens as previously discussed in Section 3. L_OR in Equation 7 maximizes the odds ratio between the likelihood of generating the favored response y_w and the disfavored response y_l. We wrap the log odds ratio with the log sigmoid function so that L_OR could be minimized by increasing the log odds ratio between y_w and y_l.

Together, L_SFT and L_OR weighted with λ tailor the pre-trained language model to adapt to the specific subset of the desired domain and disfavor generations in the rejected response sets.
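The objective can be sketched for a single preference pair as follows. This is a simplified scalar version under stated assumptions: L_SFT is taken as the average per-token NLL of the chosen response, L_OR as −log σ(log OR), and the two are combined as L_SFT + λ · L_OR. The function names and the default λ = 0.1 (the value reported later for Mistral) are illustrative, and a real implementation would operate on batched tensors.

```python
import math

def log_odds(avg_logp):
    """log(P / (1 - P)) with P = exp(avg_logp)."""
    return avg_logp - math.log1p(-math.exp(avg_logp))

def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def orpo_loss(logp_w, logp_l, lam=0.1):
    """Return (total, L_SFT, L_OR) for one (x, y_w, y_l) triple.

    logp_w, logp_l: average per-token log-likelihoods of the chosen and
    rejected responses under the model being trained.
    """
    l_sft = -logp_w
    l_or = -log_sigmoid(log_odds(logp_w) - log_odds(logp_l))
    return l_sft + lam * l_or, l_sft, l_or

aligned = orpo_loss(-0.3, -1.2)      # chosen is more likely than rejected
misaligned = orpo_loss(-1.2, -0.3)   # rejected is more likely than chosen
print(aligned, misaligned)
```

L_OR equals log 2 when both responses are equally likely, drops below it when the chosen response has the higher odds, and rises above it otherwise, so the penalty is largest exactly when the model prefers the rejected response.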

4.3. Gradient of ORPO

The gradient of L_OR further justifies using the odds ratio loss. It comprises two terms: one that penalizes the wrong predictions and one that contrasts between chosen and rejected responses, denoted in Equation 8 for d = (x, y_l, y_w) ∼ D.

When the odds of the favored responses are relatively higher than the disfavored responses, δ(d) in Equation 9 will converge to 0. This indicates that the δ(d) will play the role of a penalty term, accelerating the parameter updates if the model is more likely to generate the rejected responses.

Meanwhile, h(d) in Equation 10 implies a weighted contrast of the two gradients from the chosen and rejected responses. Specifically, 1−P(y|x) in the denominators amplifies the gradients when 1−P(y|x) is small, that is, when the corresponding likelihood P(y|x) is high. For the chosen responses, this accelerates the model’s adaptation toward the distribution of chosen responses as the likelihood increases.
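The behavior of the two gradient factors can be tabulated with a short sketch. It assumes δ(d) = 1 / (1 + OR), with OR the odds ratio of the chosen over the rejected response, and that each side of h(d) is scaled by 1 / (1 − P(y|x)); both forms follow the description above but are written here from our reading of Equations 9 and 10.

```python
import math

def delta(log_odds_ratio):
    """delta(d) = 1 / (1 + OR), with OR = odds(y_w|x) / odds(y_l|x)."""
    return 1.0 / (1.0 + math.exp(log_odds_ratio))

def gradient_weight(p):
    """The 1 / (1 - P(y|x)) factor that scales each side of h(d)."""
    return 1.0 / (1.0 - p)

# From "model prefers the rejected response" to "model prefers the chosen one".
deltas = [delta(z) for z in (-4.0, 0.0, 4.0)]
weights = [gradient_weight(p) for p in (0.1, 0.5, 0.9)]
print(deltas)
print(weights)
```

δ(d) is close to 1 when the model favors the rejected response and shrinks toward 0 once the chosen response has much higher odds, so it acts as the penalty term. The 1 / (1 − P) factor grows as P approaches 1, which is consistent with the adaptation toward chosen responses accelerating as their likelihood increases.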

5. Experimental Settings

5.1. Training Configurations

Models

We train a series of OPT models (Zhang et al., 2022) scaling from 125M to 1.3B parameters, comparing supervised fine-tuning (SFT), proximal policy optimization (PPO), and direct preference optimization (DPO) against our ORPO. PPO and DPO models were fine-tuned with the TRL library (von Werra et al., 2020) on top of SFT models trained for a single epoch on the chosen responses following Rafailov et al. (2023) and Tunstall et al. (2023). We notate this by prepending "+" to each algorithm (e.g., +DPO). Additionally, we train Phi-2 (2.7B) (Javaheripi and Bubeck, 2023), a pre-trained language model with promising downstream performance (Beeching et al., 2023), as well as Llama-2 (7B) (Touvron et al., 2023) and Mistral (7B) (Jiang et al., 2023). Further training details for each method are in Appendix C.

Datasets

We test each training configuration and model on two datasets: 1) Anthropic’s HH-RLHF (Bai et al., 2022a), 2) Binarized UltraFeedback (Tunstall et al., 2023). We filtered out instances where y_w = y_l or where y_w = ∅ or where y_l = ∅.
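The filtering rule is simple enough to sketch directly. The record layout below (`prompt`, `chosen`, `rejected` keys) is a hypothetical schema chosen for illustration, not necessarily the field names of either dataset.

```python
def keep_pair(chosen, rejected):
    """Keep a pair only if both responses are non-empty and they differ."""
    return bool(chosen) and bool(rejected) and chosen != rejected

# Toy preference pairs covering each filtered case.
pairs = [
    {"prompt": "Hi", "chosen": "Hello!", "rejected": "Go away."},
    {"prompt": "Hi", "chosen": "Same", "rejected": "Same"},    # y_w = y_l
    {"prompt": "Hi", "chosen": "", "rejected": "Hello!"},      # y_w is empty
    {"prompt": "Hi", "chosen": "Hello!", "rejected": ""},      # y_l is empty
]
filtered = [p for p in pairs if keep_pair(p["chosen"], p["rejected"])]
print(len(filtered))
```

Pairs with identical responses carry no preference signal, and an empty response would make the average log-likelihood undefined, so both cases are dropped before training.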

Reward Models

We train OPT-350M and OPT-1.3B on each dataset for a single epoch for reward modeling with the objective function in Equation 11 (Ziegler et al., 2020). The OPT-350M reward model was used for PPO, and the OPT-1.3B reward model was used to assess the generations of fine-tuned models. We refer to these reward models as RM-350M and RM-1.3B in Section 6.
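For orientation, a pairwise reward-modeling objective of this kind is usually the Bradley-Terry loss, −log σ(r(x, y_w) − r(x, y_l)), averaged over pairs. The sketch below assumes that form for Equation 11; the function names and the toy reward values are illustrative.

```python
import math

def log_sigmoid(z):
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))

def pairwise_reward_loss(rewards_chosen, rewards_rejected):
    """Mean of -log sigmoid(r_w - r_l) over a batch of preference pairs."""
    losses = [-log_sigmoid(rw - rl)
              for rw, rl in zip(rewards_chosen, rewards_rejected)]
    return sum(losses) / len(losses)

# Scalar rewards a hypothetical reward model assigns to two pairs.
good = pairwise_reward_loss([2.0, 1.5], [-1.0, 0.0])   # ranks pairs correctly
bad = pairwise_reward_loss([-1.0, 0.0], [2.0, 1.5])    # ranks pairs incorrectly
print(good, bad)
```

The loss falls below log 2 when the chosen responses receive higher rewards and rises above it when the ranking is inverted.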

5.2. Leaderboard Evaluation

In Section 6.1, we evaluate the models using the AlpacaEval1.0 and AlpacaEval2.0 (Li et al., 2023b) benchmarks, comparing ORPO to other instruction-tuned models reported in the official leaderboard, including Llama-2 Chat (7B) and (13B) (Touvron et al., 2023), and Zephyr α and β (Almazrouei et al., 2023). Similarly, in Section 6.2, we evaluate the models with MT-Bench (Zheng et al., 2023) and report the results and the scores of the same models reported in the official leaderboard. Using GPT-4 (Achiam et al., 2023) as an evaluator in AlpacaEval1.0, we assess if the trained model can be preferred over the responses generated from text-davinci-003. For AlpacaEval2.0, we used GPT-4-turbo as an evaluator following the default setting. We assess if the generated responses are favored over those generated from GPT-4. Finally, using GPT-4 as an evaluator in MT-Bench, we check if the models can follow the instructions with hard answers in a multi-turn conversation.

6. Results and Analysis

First, we assess the general instruction-following abilities of the models by comparing the preference alignment algorithms in Sections 6.1 and 6.2. Second, we measure the win rate of OPT models trained with ORPO against other alignment methods, using OPT-1.3B trained as a reward model, in Section 6.3. Then, we measure the lexical diversity of the models trained with ORPO and DPO in Section 6.4.

6.1. Single-turn Instruction Following

Phi-2 (2.7B)

ORPO improved pre-trained Phi-2 to exceed the performance of the Llama-2 Chat instruction-following language model by only using UltraFeedback as the instruction-tuning dataset, as shown in Table 1. λ of 0.25 was applied for Phi-2, resulting in 71.80% and 6.35% on AlpacaEval1.0 and AlpacaEval2.0, respectively.

Llama-2 (7B)

Notably, UltraFeedback and ORPO with λ of 0.2 on Llama-2 (7B) resulted in higher AlpacaEval scores than the chat versions of both 7B and 13B scale trained with RLHF, eventually showing 81.26% and 9.44% on AlpacaEval1.0 and AlpacaEval2.0, respectively. In contrast, in our controlled experimental setting of conducting one epoch of SFT and three epochs of DPO following Tunstall et al. (2023) and Rafailov et al. (2023), Llama-2 + SFT and Llama-2 + SFT + DPO yielded models with outputs that could not be evaluated. This supports the efficacy of ORPO, in which the model can rapidly learn the desired domain and the preference with limited data. This aligns with the h(d) examination in the gradient of our method studied in Section 4.3.

Mistral-ORPO-α (7B)

Furthermore, fine-tuning Mistral (7B) with the single-turn conversation dataset UltraFeedback and ORPO with λ of 0.1 outperforms the Zephyr series, which are the Mistral (7B) models fine-tuned with SFT on 20K UltraChat (Ding et al., 2023) and DPO on the full UltraFeedback. As shown in Table 1, Mistral-ORPO-α (7B) achieves 87.92% and 11.33%, which exceeds Zephyr α by 1.98% and Zephyr β by 0.34% in AlpacaEval2.0. The sample responses and corresponding references from GPT-4 can be found in Appendix I.

Mistral-ORPO-β (7B)

Using the same configuration of Mistral-ORPO-α (7B), we additionally compare fine-tuning Mistral on the cleaned version of UltraFeedback to demonstrate the effect of the data quality (Bartolome et al., 2023). While the actual sizes of datasets are similar, ORPO gains further advantages from the dataset quality by scoring over 91% and 12% on AlpacaEval, as shown in Table 1. Further instruction-following evaluation on two Mistral-based models with IFEval (Zhou et al., 2023c) is reported in Appendix D.

6.2. Multi-turn Instruction Following

With our best models, Mistral-ORPO-α (7B) and Mistral-ORPO-β (7B), we also assess the multi-turn instruction-following skills with deterministic answers (e.g., math) through MT-Bench.

As shown in Figure 4, the Mistral-ORPO (7B) series achieves comparable results to either larger or proprietary models, including Llama-2-Chat (70B) and Claude. Eventually, Mistral-ORPO-α (7B) and Mistral-ORPO-β (7B) scored 7.23 and 7.32 in MT-Bench without being exposed to the multi-turn conversation dataset during training.

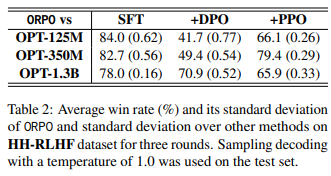

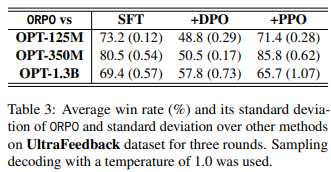

6.3. Reward Model Win Rate

We assess the win rate of ORPO over other preference alignment methods, including supervised fine-tuning (SFT), PPO, and DPO, using RM-1.3B to understand the effectiveness and scalability of ORPO in Tables 2 and 3. Additionally, we visually verify that ORPO can effectively enhance the expected reward compared to SFT in Figure 5.
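A win rate of this kind can be sketched as the fraction of test prompts on which the reward model scores one method's response higher than the other's. Counting ties as non-wins is an assumption here, and the scores below are made-up numbers used only to exercise the function.

```python
def win_rate(scores_a, scores_b):
    """Fraction of prompts where method A's reward exceeds method B's.

    scores_a[i] and scores_b[i] are reward-model scores for the two
    methods' responses to the same prompt. Ties count as non-wins.
    """
    wins = sum(1 for a, b in zip(scores_a, scores_b) if a > b)
    return wins / len(scores_a)

# Hypothetical reward scores for four prompts.
orpo_scores = [0.9, 0.2, 0.7, 0.5]
sft_scores = [0.1, 0.4, 0.3, 0.5]
rate = win_rate(orpo_scores, sft_scores)
print(rate)
```

Because the comparison is per prompt, the win rate is insensitive to the scale of the reward model's outputs, which makes it a convenient complement to the reward distributions shown in Figure 5.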

HH-RLHF

In Table 2, ORPO outperforms SFT and PPO across all model scales. The highest win rates against SFT and PPO across model sizes were 78.0% and 79.4%, respectively. Meanwhile, the win rate over DPO was correlated with the model’s size, with the largest model having the highest win rate: 70.9%.

(That said, DPO seems to do better at the smaller sizes..? OPT-125M, OPT-350M)

UltraFeedback

The win rate in UltraFeedback followed similar trends to what was reported in HH-RLHF, as shown in Table 3. ORPO was preferred over SFT and PPO in up to 80.5% and 85.8% of cases, respectively. While ORPO was consistently preferred over SFT and PPO, the win rate over DPO gradually increases as the size of the model increases. The scale-wise trend exceeding DPO will be further shown through 2.7B models in Section 6.1.

Overall Reward Distribution

In addition to the win rate, we compare the reward distribution of the responses generated with respect to the test set of the UltraFeedback dataset in Figure 5 and HH-RLHF dataset in Appendix F. Regarding the SFT reward distribution as a default, PPO, DPO, and ORPO shift it in both datasets. However, the magnitude of reward shifts for each algorithm differs.

In Figure 5, RLHF (i.e., SFT + PPO) has some abnormal properties of the distribution with a low expected reward. We attribute this to empirical evidence of the instability and reward mismatch problem of RLHF (Rafailov et al., 2023; Gao et al., 2022; Shen et al., 2023) as the RLHF models were trained with RM-350M and assessed with RM-1.3B. Meanwhile, it is notable that the ORPO distribution (red) is mainly located on the very right side of each subplot, indicating higher expected rewards. Recalling the intent of preference alignment methods, the distributions in Figure 5 indicate that ORPO tends to fulfill the aim of preference alignment for all model sizes.

6.4. Lexical Diversity

The lexical diversity of the preference-aligned language models was studied in previous works (Kirk et al., 2024). We expand the concept of per-input and across-input diversity introduced in Kirk et al. (2024) by using Gemini-Pro (Gemini Team et al., 2023) as an embedding model, which is suitable for assessing the diversity of instruction-following language models by encoding a maximum of 2048 tokens. The diversity metric with the given set of sampled responses is defined as Equation 13.

where cos(h_i, h_j) refers to the cosine similarity between the embeddings h_i and h_j. Five different responses are sampled with a temperature of 1.0 for each of 160 queries in AlpacaEval (i.e., K = 5, N = 160) using Phi-2 and Llama-2 trained with ORPO and DPO. We report the results in Table 4.
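The core of such a metric is an average of pairwise cosine similarities over a set of embeddings. The sketch below takes the plain mean over unordered pairs, which may differ from Equation 13 by a constant normalization factor; the function names and the two-dimensional toy embeddings are assumptions for illustration.

```python
import math
from itertools import combinations

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def mean_pairwise_cosine(embeddings):
    """Mean cosine similarity over all unordered pairs of embeddings."""
    pairs = list(combinations(embeddings, 2))
    return sum(cosine(u, v) for u, v in pairs) / len(pairs)

# Toy embeddings: one tightly clustered set and one spread-out set.
similar = [[1.0, 0.0], [0.9, 0.1], [1.0, 0.05]]
spread = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
sim_similar = mean_pairwise_cosine(similar)
sim_spread = mean_pairwise_cosine(spread)
print(sim_similar, sim_spread)
```

A higher mean similarity corresponds to lower diversity. Applying the function to the K responses of a single query gives a per-input value, while applying it to one response per query across the N queries gives an across-input value.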

Per Input Diversity (PID)

We average the inputwise average cosine similarity between the generated samples with Equation 14 to assess the per-input diversity. In Table 4, ORPO models have the highest average cosine similarity in the first column for both models, which implies the lowest diversity per input. This indicates that ORPO generally assigns high probabilities to the desired tokens, while DPO has a relatively smoother logit distribution.

Across Input Diversity (AID)

Using 8 samples generated per input, we sample the first item for each input and examine their inter cosine similarity with Equation 15 for across-input diversity. Unlike per-input diversity, it is noteworthy that Phi-2 (ORPO) has lower average cosine similarity in the second row of Table 4. We can infer that ORPO triggers the model to generate more instruction-specific responses than DPO.

7. Discussion

In this section, we expound on the theoretical and computational details of ORPO. The theoretical analysis of ORPO is studied in Section 7.1, which will be supported with the empirical analysis in Section 7.2. Then, we compare the computational load of DPO and ORPO in Section 7.3.

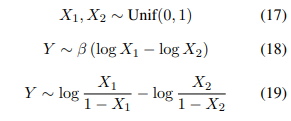

7.1. Comparison to Probability Ratio

The rationale for selecting the odds ratio instead of the probability ratio lies in its stability. The probability ratio for generating the favored response y_w over the disfavored response y_l given an input sequence x, PR_θ(y_w, y_l) = P_θ(y_w|x) / P_θ(y_l|x), can be defined as Equation 16.

While this formulation has been used in previous preference alignment methods that are preceded by SFT (Rafailov et al., 2023; Azar et al., 2023), the odds ratio is a better choice in the setting where the preference alignment is incorporated in SFT as the odds ratio is more sensitive to the model’s preference understanding. In other words, the probability ratio leads to more extreme discrimination of the disfavored responses than the odds ratio.

We visualize this through the sample distributions of the log probability ratio log PR(X2|X1) and log odds ratio log OR(X2|X1). We sample 50,000 samples each with Equation 17 and plot the log probability ratio and log odds ratio in Figure 6. We multiply β for the probability ratio as it is practiced in the probability ratio-based methods and report the cases where β = 0.2 and β = 1.0.
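The sampling experiment can be approximated with the standard library alone. This sketch assumes X1 and X2 are drawn independently from Unif(0, 1), that the scaled log probability ratio is β(log X1 − log X2), and that the log odds ratio is the difference of the two logits, which is our reading of Equation 17. The function name, seed, and clipping constant are illustrative choices.

```python
import math
import random
import statistics

def sample_log_ratios(n, beta, seed=0):
    """Sample beta-scaled log probability ratios and log odds ratios."""
    rng = random.Random(seed)
    log_pr, log_or = [], []
    for _ in range(n):
        # Stay strictly inside (0, 1) so that log and logit are finite.
        x1 = rng.uniform(1e-12, 1.0 - 1e-12)
        x2 = rng.uniform(1e-12, 1.0 - 1e-12)
        log_pr.append(beta * (math.log(x1) - math.log(x2)))
        log_or.append(math.log(x1 / (1.0 - x1)) - math.log(x2 / (1.0 - x2)))
    return log_pr, log_or

log_pr_02, log_or = sample_log_ratios(50_000, beta=0.2)
log_pr_10, _ = sample_log_ratios(50_000, beta=1.0)
std_pr_02 = statistics.pstdev(log_pr_02)
std_pr_10 = statistics.pstdev(log_pr_10)
std_or = statistics.pstdev(log_or)
print(std_pr_02, std_pr_10, std_or)
```

Under these assumptions the log odds ratio is the most spread out of the three, and the β-scaled log probability ratio is more sharply concentrated around zero, most of all for β = 0.2. This matches the qualitative picture described for Figure 6.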

Recalling that the log sigmoid function is applied to the log probability ratio and log odds ratio, each ratio’s scale determines the expected margin between the likelihood of the favored and disfavored styles when the loss is minimized. In that sense, the contrast should be relatively extreme to minimize the log sigmoid loss when PR(X2|X1) is inputted instead of OR(X2|X1) to the log sigmoid function, regarding the sharp distribution of log PR(X2|X1) in Figure 6. This results in overly suppressing the logits for the tokens in the disfavored responses in the setting where SFT and preference alignment are incorporated, as the model is not adapted to the domain. We empirically support this analysis through the ablation study in Appendix B. Therefore, the odds ratio is a better choice when the preference alignment is done with SFT due to the mild discrimination of disfavored responses and the prioritizing of the favored responses to be generated.

Throughout fine-tuning, minimizing the log sigmoid loss leads to either PR(X2|X1) or OR(X2|X1) becoming larger, which generally means that the token-wise likelihood of the rejected responses gets smaller. In this context, it is essential to avoid an overly extreme contrast. This precaution is especially important given the sharp distribution of log PR(X2|X1) depicted in Figure 6. The excessive margin could lead to the unwarranted suppression of logits for tokens in disfavored responses within the incorporated setting, potentially resulting in issues of degeneration.

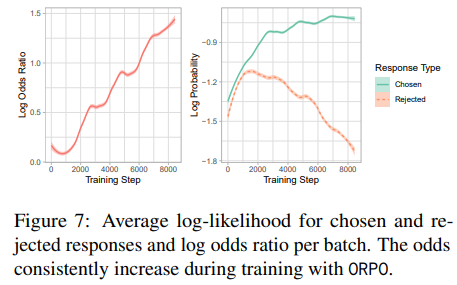

7.2. Minimizing L_OR

We demonstrate that models trained with ORPO learned to reflect the preference throughout the training process. We monitored the log probabilities of the chosen and rejected responses and the log odds ratio with λ = 1.0. With the same dataset and model as Figure 3, Figure 7 shows that the log probability of rejected responses is diminishing while that of chosen responses is on par with Figure 3 as the log odds ratio increases. This indicates that ORPO is successfully preserving the domain adaptation role of SFT while the penalty term L_OR induces the model to lower the likelihood of unwanted generations. We discuss the effect of λ in Equation 6 in Appendix E, studying the proclivity of the log probability margin between the favored and disfavored responses with respect to λ.

7.3. Computational Efficiency

As depicted in Figure 2, ORPO does not require a reference model, unlike RLHF and DPO. In that sense, ORPO is computationally more efficient than RLHF and DPO from two perspectives: 1) memory allocation and 2) fewer FLOPs per batch.

The reference model (π_SFT) in the context of RLHF and DPO denotes the model trained with supervised fine-tuning (SFT), which will be the baseline model for updating the parameters with RLHF or DPO (Ziegler et al., 2020; Rafailov et al., 2023). Thus, two π_SFTs, a frozen reference model and the model undergoing tuning, are required during training. Furthermore, in theory, two forward passes should be calculated for each model to acquire the logits for the chosen and rejected responses. In other words, four forward passes happen in total for a single batch. On the other hand, a reference model is not required in ORPO as π_SFT is directly updated. This leads to half the number of forward passes required for each batch during training.

8. Conclusion

In this paper, we introduced a reference-free monolithic preference alignment method, odds ratio preference optimization (ORPO), by revisiting and understanding the value of the supervised fine-tuning (SFT) phase in the context of preference alignment. ORPO was consistently preferred by the fine-tuned reward model against SFT and RLHF across the scale, and the win rate against DPO increased as the size of the model increased. Furthermore, we validate the scalability of ORPO with 2.7B and 7B pre-trained language models by exceeding the larger state-of-the-art instruction-following language models in AlpacaEval. Specifically, Mistral-ORPO-α and Mistral-ORPO-β achieved 11.33% and 12.20% in AlpacaEval2.0, and 7.23 and 7.32 in MT-Bench, thereby underscoring the efficiency and effectiveness of ORPO. We release fine-tuning code and model checkpoints for Mistral-ORPO-α and Mistral-ORPO-β to aid reproducibility.

Limitations

While conducting a comprehensive analysis of the diverse preference alignment methods, including DPO and RLHF, we did not incorporate a more comprehensive range of preference alignment algorithms. We leave the broader range of comparison against other methods as future work, along with scaling our method to over 7B models. In addition, we will expand the fine-tuning datasets into diverse domains and qualities, thereby verifying the generalizability of our method in various NLP downstream tasks. Finally, we would like to study the internal impact of our method on the pre-trained language model, expanding the understanding of preference alignment procedure to not only the supervised fine-tuning stage but also consecutive preference alignment algorithms.

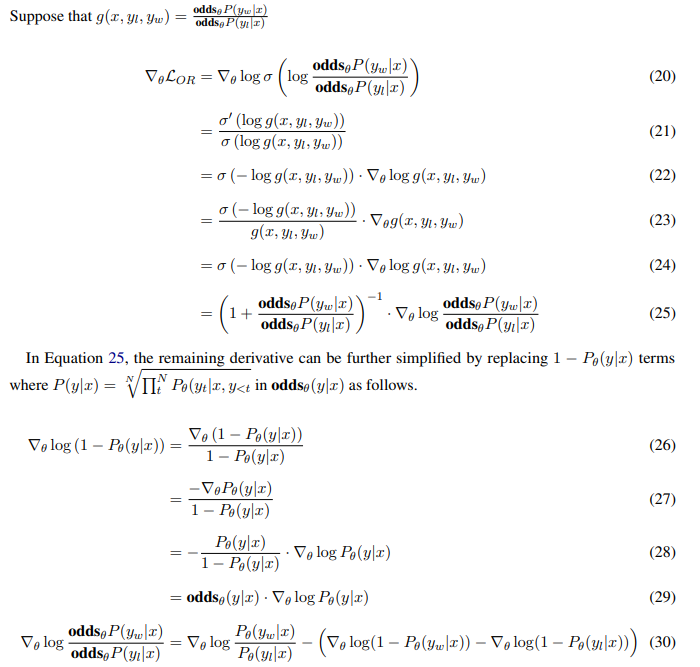

A. Derivation of ∇θL_OR with Odds Ratio

B. Ablation on Probability Ratio and Odds Ratio

In this section, we continue the discussion in Section 7.1 through empirical results comparing the log probabilities of chosen and rejected responses in UltraFeedback when trained with probability ratio and odds ratio. Recalling the sensitivity of each ratio discussed in Section 7.1, it is expected for the probability ratio to lower the log probabilities of the rejected responses with a larger scale than the odds ratio. This is shown in Figure 8: the log probabilities of each batch rapidly fall below -4 when fine-tuning with the probability ratio (left), while the same phenomenon happens after over-fitting occurs in the case of the odds ratio (right).

C. Experimental Details

Flash-Attention 2 (Dao, 2023) is applied for all the pre-trained models for computational efficiency. In particular, the OPT series and Phi-2 (2.7B) were trained with DeepSpeed ZeRO 2 (Rasley et al., 2020), while Llama-2 (7B) and Mistral (7B) were trained with Fully Sharded Data Parallel (FSDP) (Zhao et al., 2023). The 7B and 2.7B models were trained with four and two NVIDIA A100 GPUs, respectively, and the rest were trained on four NVIDIA A6000 GPUs. For the optimizer, AdamW (Loshchilov and Hutter, 2019) and paged AdamW (Dettmers et al., 2023) were used, and linear warmup with cosine decay was applied for the learning rate. For input length, every instance was truncated and padded to 1,024 tokens and 2,048 tokens for HH-RLHF and UltraFeedback, respectively. To guarantee that the models can sufficiently learn to generate the proper response to the conversation history or the complex instruction, we filtered instances with prompts with more than 1,024 tokens.
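A learning-rate schedule with linear warmup and cosine decay can be sketched as below. The warmup length, total step count, and decay-to-zero floor are hypothetical values chosen for illustration, since they are not stated here; the peak of 8e-6 is the maximum learning rate reported for ORPO in this appendix.

```python
import math

def lr_at(step, max_lr, warmup_steps, total_steps):
    """Linear warmup to max_lr, then cosine decay to zero."""
    if step < warmup_steps:
        return max_lr * step / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return max_lr * 0.5 * (1.0 + math.cos(math.pi * progress))

# Hypothetical run: 100 warmup steps out of 1,000 total steps.
schedule = [lr_at(s, 8e-6, 100, 1000) for s in range(0, 1001)]
print(schedule[0], schedule[50], schedule[100], schedule[1000])
```

The rate rises linearly during warmup, peaks at the configured maximum, and then follows half a cosine period down to zero at the final step.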

Supervised Fine-tuning (SFT)

For SFT, the maximum learning rate was set to 1e-5. Following Ziegler et al. (2020) and Rafailov et al. (2023), the number of training epochs was set to 1.

Reinforcement Learning with Human Feedback (RLHF)

For RLHF, the hyperparameters were set as in Table 5 for UltraFeedback. For the HH-RLHF dataset, the output_min_length and output_max_length were set to 64 and 256, respectively.

Direct Preference Optimization (DPO)

For DPO, β was set to 0.1 for every case. The learning rate was set to 5e-6, and the model was trained for three epochs to select the best model by evaluation loss in each epoch. However, in most cases, the first or the second checkpoint was selected as the best model as the evaluation loss increased from the third epoch.
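
For reference, the role of β can be seen in a minimal sketch of the per-pair DPO objective as it is commonly stated (Rafailov et al., 2023). The function names and the example log probabilities are hypothetical, and this is not the training code used in the experiments.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(policy_logp_w, policy_logp_l, ref_logp_w, ref_logp_l, beta=0.1):
    """Per-pair DPO loss from sequence log probabilities.

    The *_w arguments belong to the chosen response, the *_l arguments
    to the rejected one; `ref_*` come from the frozen reference model.
    """
    # Difference of the policy-vs-reference log ratios of the two responses.
    margin = (policy_logp_w - ref_logp_w) - (policy_logp_l - ref_logp_l)
    return -log_sigmoid(beta * margin)


if __name__ == "__main__":
    # Policy identical to the reference: the loss equals log(2).
    print(dpo_loss(-1.0, -2.0, -1.0, -2.0))
    # Policy favors the chosen response more than the reference does.
    print(dpo_loss(-1.0, -3.0, -2.0, -2.0))
```

Note that this objective needs log probabilities from a reference model, which is the component ORPO does without.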

Odds Ratio Preference Optimization (ORPO)

As ORPO does not require any special hyperparameters, the learning rate and the number of epochs were the only hyperparameters to set. For ORPO, the maximum learning rate was set to 8e-6 and the model was trained for 10 epochs. The best model was selected based on the lowest evaluation loss for the OPT series, Phi-2 (2.7B), and Llama-2 (7B).

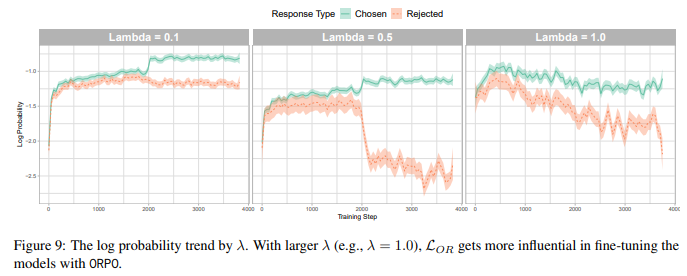

E. Ablation on the Weighting Value (λ)

For the weighting value λ in Equation 6, we conduct an ablation study with {0.1, 0.5, 1.0}. Mistral (7B) and UltraFeedback were used for the base model and dataset. In Section E.1, we compare the log probability trends by the value of λ, and we assess the downstream effect of λ in Section E.2.
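
To show where λ enters, here is a minimal sketch of the per-pair objective, assuming Equation 6 combines the two terms as L_SFT + λ · L_OR, with L_SFT the length-averaged negative log-likelihood of the chosen response and L_OR = −log σ(log odds ratio). The function names and inputs are hypothetical.

```python
import math


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def log_odds(avg_logp):
    """log(p / (1 - p)) for p = exp(avg_logp); requires avg_logp < 0."""
    return avg_logp - math.log1p(-math.exp(avg_logp))


def orpo_loss(avg_logp_w, avg_logp_l, lam=0.1):
    """Return (total, l_sft, l_or) for one chosen/rejected pair.

    `avg_logp_w` and `avg_logp_l` are assumed to be token-averaged log
    probabilities of the chosen and rejected responses.
    """
    l_sft = -avg_logp_w
    l_or = -log_sigmoid(log_odds(avg_logp_w) - log_odds(avg_logp_l))
    return l_sft + lam * l_or, l_sft, l_or


if __name__ == "__main__":
    # Hypothetical likelihoods: 0.6 for the chosen, 0.2 for the rejected response.
    for lam in (0.1, 0.5, 1.0):
        total, l_sft, l_or = orpo_loss(math.log(0.6), math.log(0.2), lam=lam)
        print(f"lambda={lam}: total={total:.4f}, L_SFT={l_sft:.4f}, L_OR={l_or:.4f}")
```

In this sketch λ only rescales the odds ratio term relative to the SFT term, so a larger λ puts more weight on separating the chosen response from the rejected one.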

E.1. Log Probability

In Figure 9, we find that a larger λ generally leads to stronger discrimination of the rejected responses. With λ = 0.1, the average log probabilities of the chosen and the rejected responses stay close as the fine-tuning proceeds. Also, unlike the other settings, the log probabilities of the rejected responses do not decrease; rather, the log probabilities of the chosen responses increase to minimize the L_OR term.

Moreover, with λ = 0.5, there is a similar trend of further increasing the log probabilities of the chosen responses, but the log probabilities of the rejected responses diminish simultaneously. Lastly, with λ = 1.0, the log probabilities of the chosen responses diminish along with those of the rejected responses while the margin between them widens. However, this does not mean that a smaller λ is always better; the appropriate value depends on the specific need and model.

E.2. MT-Bench

The downstream impact of λ stands out in the MT-Bench results. Compared to λ = 0.1, Mistral+ORPO (7B) with λ = 1.0 performs worse in extraction, math, and reasoning, which are the categories that generally require deterministic answers. On the other hand, it performs better in STEM, humanities, and roleplay, which call for generations without hard answers. Together with the discrepancy between the trends in the logits of chosen and rejected responses, we can infer that creating a larger margin between the chosen and the rejected responses through a higher λ in ORPO leads to over-adapting to the set of chosen responses in the training dataset. This proclivity results in open-ended generations that are generally preferred by the annotator, while showing weaker performance on questions with hard answers.

F. Test Set Reward Distribution on HH-RLHF

Along with Figure 11, which depicts the reward distribution of OPT-125M, OPT-350M, and OPT-1.3B on the UltraFeedback dataset, we report the reward distribution of each pre-trained checkpoint trained on the HH-RLHF dataset. As discussed in Section 6.3, ORPO consistently pushes the reward distribution of SFT to the right side.

I. Generation Samples from Mistral-ORPO-α (7B)

In this section, we present two sample generations from Mistral-ORPO-α (7B) on AlpacaEval: one that was preferred over the response of GPT-4 and one for which GPT-4 was preferred. For readability, instances with short responses were selected for both cases. Additionally, the responses for Mistral-ORPO-α (7B) were sampled with a temperature of 0.7. The checkpoints for Mistral-ORPO-α (7B) and Mistral-ORPO-β (7B) can be found at https://huggingface.co/kaist-ai/mistral-orpo-alpha and https://huggingface.co/kaist-ai/mistral-orpo-beta.
