
Why is the induction head in a Transformer important for meta-learning?

LLMs/Interpretability 2026. 1. 6. 15:14

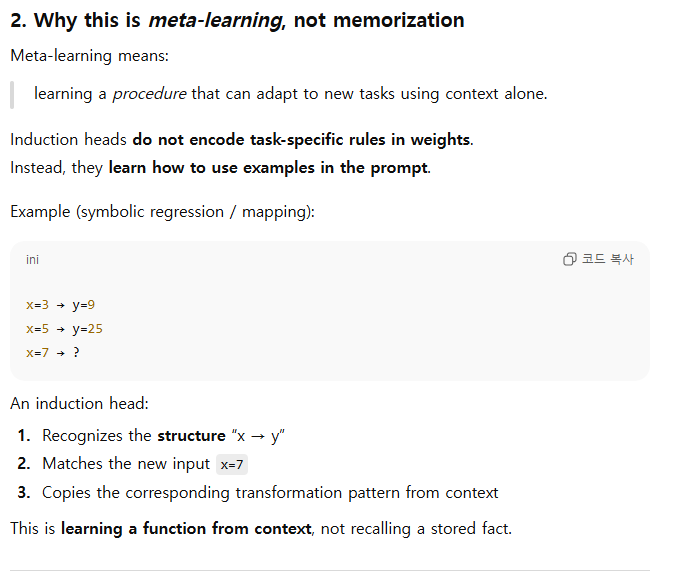

Asking GPT-5.2...
