-

Model Analysis & ExplanationNLP/NLP_Stanford 2024. 6. 29. 08:04

※ Writing while taking a course 「Stanford CS224N NLP with Deep Learning」

※ https://www.youtube.com/watch?v=f_qmSSBWV_E&list=PLoROMvodv4rMFqRtEuo6SGjY4XbRIVRd4&index=17&t=1s

Lecture 17 - Model Analysis and Explanation

If you get high accuracy on the in-domain test set, you are not guaranteed high accuracy on even what you might consider to be reasonable out-of-domain evaluaitons. And life is always out of domain, and if you're building a system that will be given to users, it's immediately out of domain, at the very least because it's trained on text that's now older thatn the things that the users are now saying.

So it's a really important take away that your benchmark accuracy is a single number that does not guarantee good performance on a wide variety of things. And from a what are our neural networks doing perspective, one way to think about it is that models seem to be learning the data set, fitting the fine-grained heuristic and statistics that help it fit this one data set, as opposed to learning the task.

So humans can perform natural language inference. If you give them examples from whatever data set, once you've told them how to do the task, they'll be very generally strong at it.

But you take you MNLI model and you test it on HANS, and it got whatever that was below chance accuracy. That's not the thing that you want to see.

So it definitely learns the data set well because the accuracy in domain is high. But our models are seemingly not frequently learning the mechanisms that we would like them to be learning.

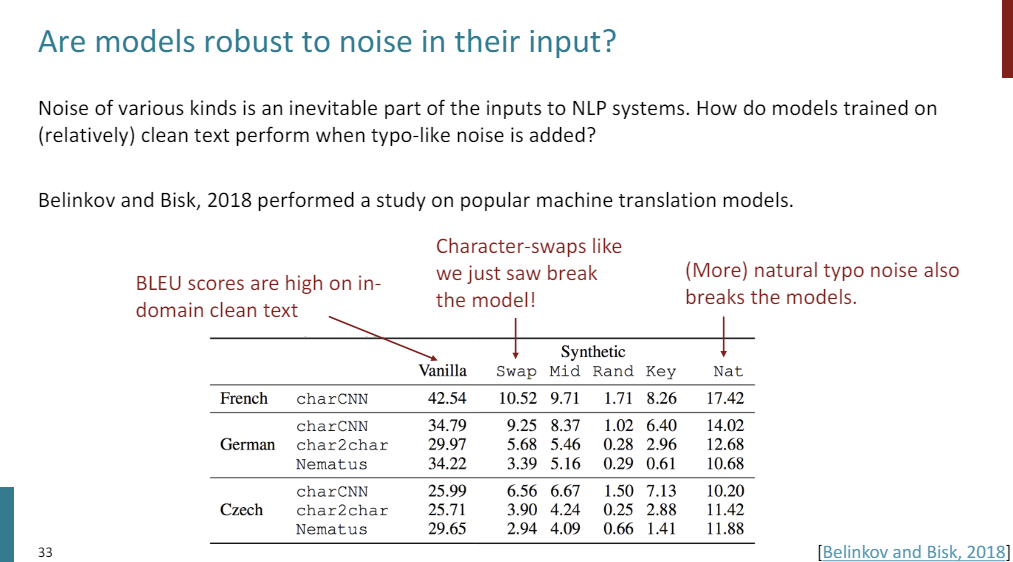

Are there changes to the inputs that look fine to humans but actually make the models do a bad job?

Motivation of using LSTM networks instead of simple recurrent neural networks was that they could use long context. But how long is your long- and short-term memory? And the idea of Khandelwal et al 2018 was shuffle or remove contexts that are farther than some k words away, changing k. And if your accuracy, if the predictive ability of your language model, the perplexity doesn't change once you do that, it means the model wasn't actually using that context.

So we've got how far away from the word that you're trying to predict are you actually sorrupting, shuffling, or removing from the sequence. And then on the y-axis is the increase in loss. So if the increas in loss is 0, it means that the model was not using the thing that you just removed because if it was using it, it would now do worse without it.

So if you shuffle the history farther away from 50 words, the model does not even notice. One, it says everything past 50 words of this LSTM language model, you could have given it in random order and it wouldn't have noticed. And then, two, it says that if you're closer than that, it actually is making use of the word order. That's a pretty long memory.

And then if you actually remove the words entirely, you can notice that the words are missing up to 200 words away. So you don't know the order-- you don't care about the order they're in, but you care whether they're there or not. And so this is an evaluation of, do LSTMs have long-term memory?

This one at least has effectively no longer than 200 words of memory, but also no less. So that's a general study for a single model.

Let's talk about individual predictions on individual inputs. One way of interpreting, why did my model make this decision for a single example, what parts of the input led to the decision, is where we come in with saliency map.

Saliency map provides a score for each word indicating its importance to the model's prediction.

So you've got BERT here. BERT is making a prediction for this mask. And then the saliency map is visualized. According to this method of saliency called simple gradients, "emergency" and "her" are the important words, appraently. So these two together are, according to this method, what's important for the model to make this prediction to mask. You can see some statistics, biases, et cetera that it's picked up in the predictions and then have it mapped out onto the sentence. And it seems like it's really helping interpretability.

How do you define importance? What does it mean to be important to the models' prediction? Here's one way of thinking about it. It's called the simple gradient method.

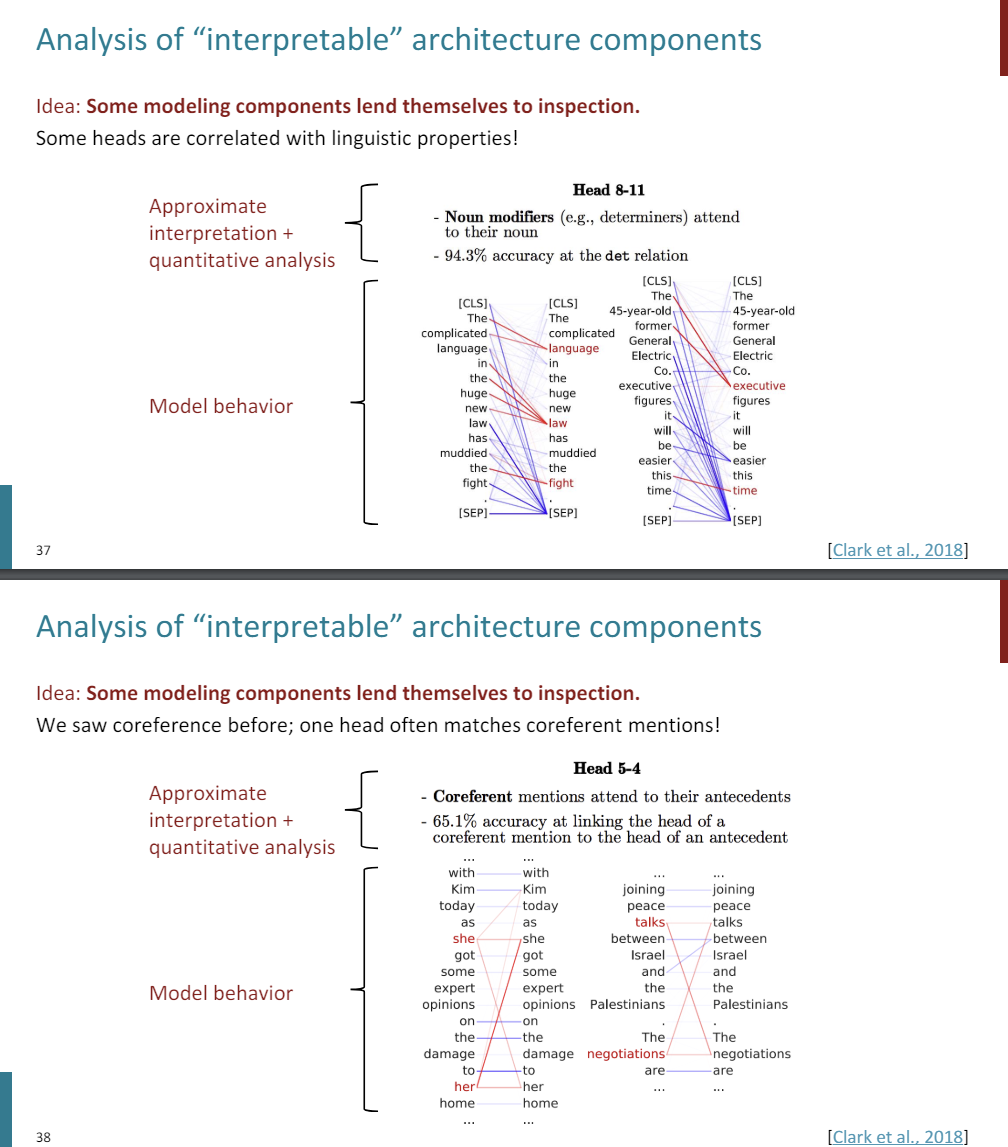

Now we're going to look at the representations of our neural networks. We've talked about their behavior and then whether we could change or observe reasons behind their behavior. Now we'll go into less abstraction, loo more at the actual vector representations that are being built by models, and we can answer a different kind of question, at the very least, than with the other studies. The first thing is that some modeling components lend themselves to inspection.

you can visualize them well and you can correlate them easily with various properties. So let's say you have attention heads in BERT. This attention head seems to do this global aggregation pretty consistently. So it's the first layer, which means that this word, "found" is uncontextuallized. But in deeper layers, the problem is that once you do some rounds of attention, you've had information mixing and flowing between words. And How do you know exatly what information you're combining, what you're attending to, even? It's hard to tell. And saliency methods more directly evaluate the imporatnce of models. But it's still interesting to see at a local mechanistic point of view what kinds of things are being attended to.

Some attention heads seemed to perform simple operations. So you have the global aggregation here that we saw already. Others seem to attend pretty robustly to the next token, cool. Next token is a great signal. Some heads attend to the SEP token, so here you have it attending to SEP. And then maybe some attended periods.

What we have in all of these studies is we've got an approximate interpretation and quantitative analysis relating, allowing us to reason about very complicated model behavior. They're all approximations, but they're definitely interesting.

It's connecting very complex model behavior to these interpretable summaries of correlating properties.

Other cases, you can have individual hidden units that lend themselves to interpretation. Here you've got a character level LSTM language model. Each row here is a sentence. The interpretation that you should take is that, as we walk along the sentence, this single unit is going from very negative to very positive or very positive to very negative. It's tracking the position in the line. So it's just a linear positioning unit, and pretty robustly doing so across all of these sentences. This is from a nice visalization study way back in 2016.

Here's another cell from that same LSTM language model that seems to turn on inside quotes. So here's a quote, and then it turns on. So that's positive in the blue. End quote here, and then it's negative. Seems, again, very interpretable, also potentially a very useful feature to keep in mind. This is just an individual unit in the LSTM that you can just look at and see that it does this.

Even further this, this is a study by some AI and neuroscience researchers, is we saw that LSTMs were good at subject-verb number agreement. Can we figure out the mechanisms by which the LSTM is solving the task? Can we actually get some insight into that?

So we have a word-level language model. This cell that's being tracked here, it's an individual hidden unit, one dimension, is, after it sees "boys", it starts to go higher. And then it goes down to something very small once it sees "greets". This cell seems to correlate with the scope of a subject-verb number agreement instance effectively.

What allows it to do that? Probably some complex other dynamics in the network, but it's still a fascinating. So those are all observatinal studies that you could do by picking out individual components of the model, that you can just take each one of and correlating them with some behavior.

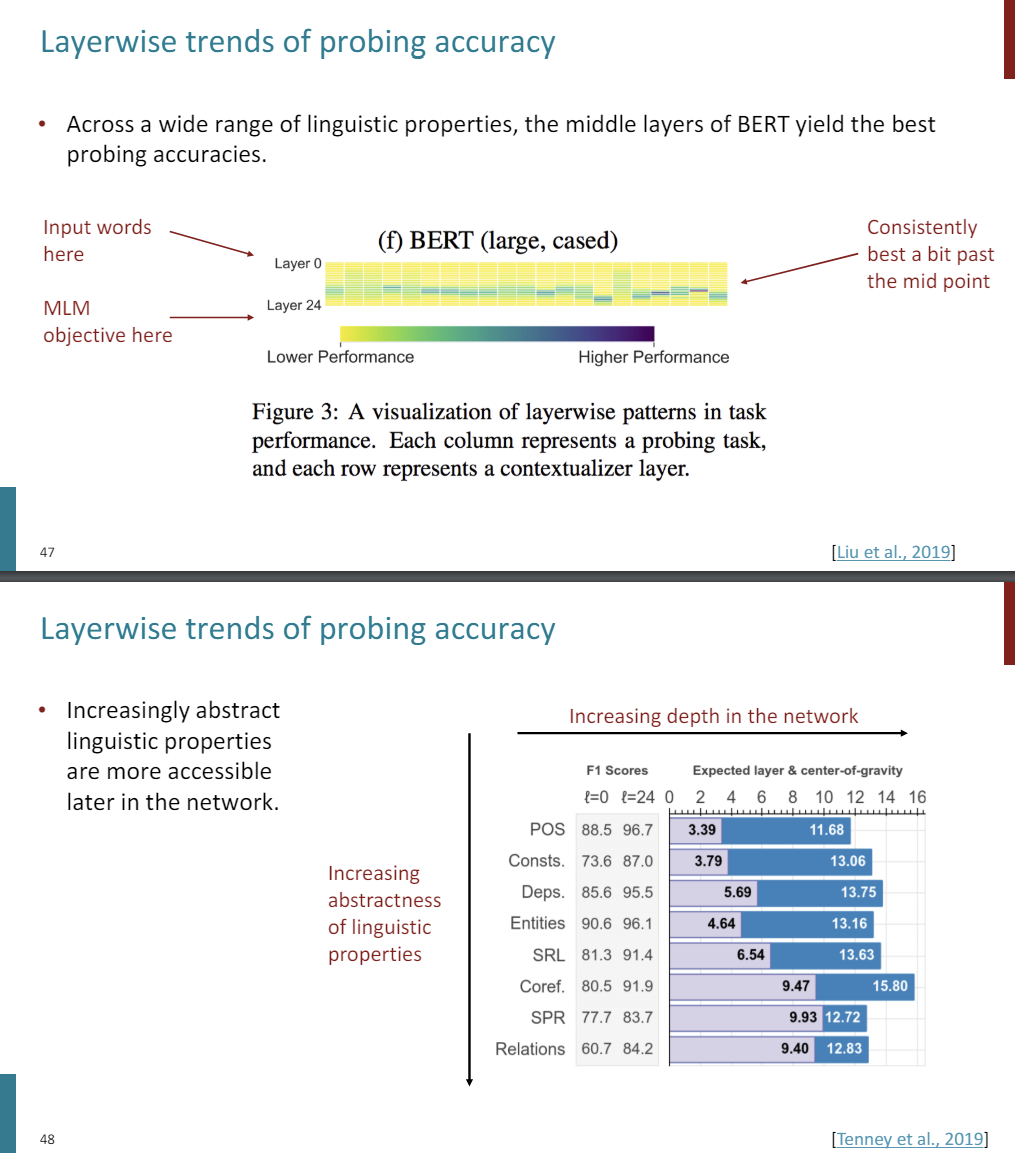

Now we'll look at a general class of methods called probing by which we still use supervised knowledgem like knowledge of the type of coreference that we're looking for. But instead of seeing if it correlates with something that's immediately interpretable, like a attention head, we're going to look into the vectore representations of the modela and see if these properties can be read out by some simple function to say, maybe this property was made very easily accessible by my neural network.

So the general paradigm is that you've got language data that goes into big pretrained transformer with fine tuning, and you get state of the art results. And the question for the probing methodology is, if it's providing these general purpose language representations, what does it actually encode about language? Can we quantify this? Can we figure out what kinds of things it's learning about language that we seemingly now don't have to tell it?

And so you might have something like a sentence, "I record the record" And you put it into your transformer model with its word embeddings at the beginning, maybe some layers of self attention and stuff, and you make some predictions.

And now our objects of study are going to be these intermediate layers. So it's a vectore per word or subword for every layer. And the question is, can we use these linguistic properties, like the dependency parsing to understand correlations between properties and the vectors and these things that we can interpret? We can interpret dependency parses.

So there are a couple of things that we might want to look for here. We might want to look for semantics. So here in the sentence, "I record the record," I am an agent. That's a semantic thing. Record is a patient. It's the thing I'm recoring. You might have syntax, so you might have the syntax tree that you're interested in, that's the dependency parse tree. Maybe you're interested in part of speech, because you have "record" and "record," and the first one's a verb, the second one is a noun, they're identical strings. Does the model encode that one is one and the other is the other?

So how do we this kind of study? So we're going to decide on a layer that we want to analyze and we're going to freeze BERT. So we're not going to fine tune BERT. All the parameters are frozen. So we decide on layer 2 of BERT. We're going to pass in some sentences. We decide on what's called a probe family.

The question I'm asking is, can I use a model from my family, say linear, to decode a property that I'm interested in really well from this layer? So it's indicating that this property is easily accessible to linear models, effectively.

So maybe I train the model, I train a linear classifier on top of BERT, and I get a really high accuracy. But then you can also take a baseline. So I want to compare two layers now. So I've got layer 1 here, I want to compare it to layer 2. I train a probe on it as well. Maybe the accuracy isn't as good, and now I can say, By layer 2, part of speech is more easily accessible to linear functions that it was at layer 1.

So what did that? The self-attention and feed forward stuff made it more easily accessible. That's interesting because it's a statement about the information processing of the model.

So we're going to analyze these layers. Let's take a second more to think about it.

What does each dimension of Word2vec mean? And the answer was not really anything. But we could build intuitions about it and think about properties of it through these connections between simple mathematical properties of Word2vec and linguistic properties that we could understand.

So we had this approximation that says, cosine similarity is effectively correlated with semantic similarity. Think about even if all we're going to do at the end of the day is fine tune these word embeddings anyway, likewise we had this sort of idea about the analogies being encoded by linear offsets.

So some relationships are linear in space, and they didn't have to be. It's emergent property that we've now been able to study since we discovered this. Why is that the case in Word2Vec? In general, even though you can't interpret the individual dimensions of Word2vec, these emergent, interpretable connections between approximate linguistic ideas and simple math on these objects is fascinating.

So one piece of work that extends this idea comes back to dependency parse trees. They describe the syntax of sentences.

In a paper that I did with Chris, we showed that, BERT and models like it make the dependency parse tree structure emergent, more easily accessible than one might imagine in its vector space.

'NLP > NLP_Stanford' 카테고리의 다른 글

Multimodal Deep Learning - 2. Features and fusion / 3. Contrastive models (0) 2024.06.29 Multimodal Deep Learning - 1. Early models (0) 2024.06.29 Reinforcement Learning from Human Feedback (RLHF) (0) 2024.06.24 Prompting (0) 2024.06.24 Pretraining (0) 2024.06.23