-

Multimodal Deep Learning - 2. Features and fusion / 3. Contrastive modelsNLP/NLP_Stanford 2024. 6. 29. 15:21

※ Writing while taking a course 「Stanford CS224N NLP with Deep Learning」

※ https://www.youtube.com/watch?v=5vfIT5LOkR0&list=PLoROMvodv4rMFqRtEuo6SGjY4XbRIVRd4&index=22

Lecture 16 - Multimodal Deep Learning

If all of this multimodal stuff is cool and useful and doesn't look that difficult, why aren't we all doing multimodal things? So why do we focus on specific modalities?

There are a couple of problems just to be aware of. So one is modalities can sometimes dominate, especially text is much more dominant than vision or audio in many use cases. So you can already just have a model that picks up on the text signal and learn to ignore the image completely, which actually happened embarrassingly for visual question answering. So visual question answering you could do that without actually looking at the picture.

The additional modalities can add a lot of noise, so it makes your machine-learning problem more difficult.

You don't always have full coverage. So as I said if you look at Facebook posts, sometimes you have text, sometimes you have pictures, sometimes you have both, but you don't have a guarantee that you always have both. So how do you deal with that?

In may cases, we just really weren't ready, it was too complicated to implement stuff. And also just in general how to design your model to combine all the information is quite complicated.

Featurizing text. We all know how to do that by now, especially in the age of transformers and before in LSTMs where we just have your batch by your sequence. So batch size by sequence length by emedding size. So it's always like a 3D tensor, and that's how you encode your textual information when you pump it through your neural net.

And so with images, it's slightly trickier because you can just look at the patches. But then if you do convolutions, you're shifting over the image and then you're aggregating. And in many cases, you don't really want to be this uniform.

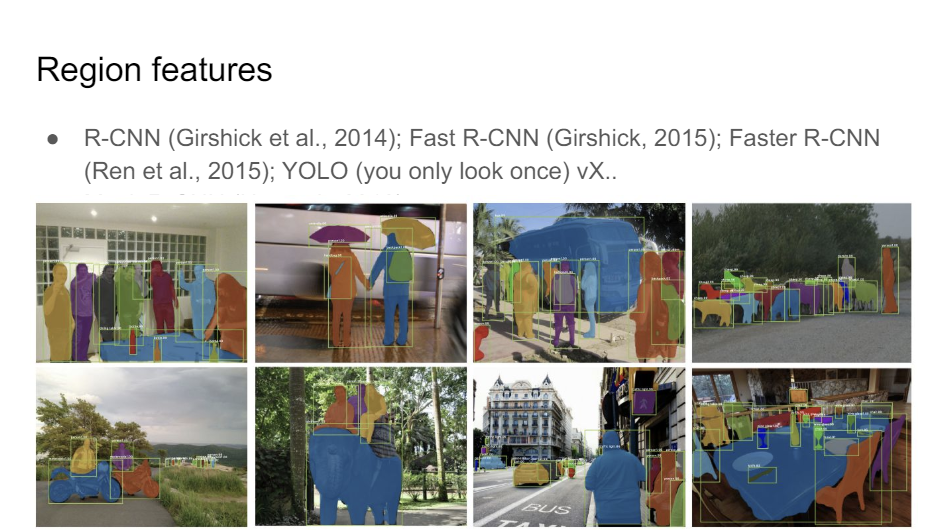

You want to have something that actually looks at the things in the picture. So this is called region features where you would use an object detector as a first step for processing your image, and then you would have a ConvNet backbone that encodes the features for that particular sub-image like these guys, like skateboard or something, it has its own vector representation.

And then in terms of dense features, we now also have Vision Transformers.

There are all these models, like YOLO is a really good one. So we're at YOLOv7 now, or 8. So there's a new one coming out every other year or something.

The basic idea is that we get these bounding boxes for things in the images or actually segmentations with the bounding boxes is what people tend to use and they have labels. So this is labeled like backpack or something.

So you can do this as a pre-processing step on your image to get a much richer representation of what is really in that image, which you can then pump into your system.

How you encode the information that is in these little bounding boxes or actually in the image itself in general? We just use a standard ConvNet for that.

This probably feels super obvious now, but in 2014 when people were starting to discover this, it was really very surprising that you could just use off-the-shelf ConvNet features to really replace the entire computer vision pipeline. So people used to do all of this very fancy sophisticated stuff and people spent decades on trying to refine this and then it was all thrown away and replaced by a ConvNet that does all of that stuff for free.

The cool thing you get there is that you can transfer very easily across different tasks. So you can have a very generic ConvNet and then use it to all kinds of very specialized things like spotting buildings in Paris, for example, or flowers, or other stuff.

Vision Transformers are what we would use these days to encode the images where you have these flattened patches and then you would do kind of the standard BERT architecture, and then you do classification. So this is all a starndard transformer. Everything is standard, except now your input is not words or tokens, it's patches of an image. And then you classify that.

We have a bunch of features and now how do we combine the information?

Let's say we have two vectors u and v. It sounds easy how we could combine them. It turns out that there are very many ways to combine them. You can do very simple things. Obviously, inner product or similarity is what you would use if you want to do cross-modal things. So if you want to embed things in the same vector space.

But you can do fancier projections on top or different combinations that are linear, or you can do multiplicative things where you multiply the components element-wise or you do some sort of gating over the different features. you can do attention. You can do fancier bilinear things. You can do very fancy compact bilinear things.

So there's really a wealth of literature on all the different ways you can combine two vectors. This is called multimodal fusion. Most of the literature on multimodality is essentially about this question-- what is the best way to do fusion? And that's it.

It's maybe useful to distinguish between different levels of fusion. So you can do it very early where you have the different features and then you just in the modern sense of attention you would attend to everything in all the features from the beginning.

You can first treat them separately and then combine them, or you can treat them as completely separate and then you only combine the final scores. So that's what we would call early fusion. And then my invention for calling the middle part would be sort of middle fusion, and then you have late fusion where you really just combine the scores or the logits, but you don't really have any interaction between the information from the different modalities.

This is a paper, FiLM, where you have this very special feature map F and it gets modulated by a multiplicative factor gamma and an additive bias vector beta, and you have a different one for every layer of a ResNet that is conditioned on some encoding of the thing you're after. So in this case, "are there more cubes than yellow things" We have some vector representation for that. And we use that vector representation to modulate the ResNet blocks at every layer of the ConvNet.

So you can modulate one network with the other one and try to have them learn as much as possible from that.

Let's talk about late fusion. Late fusion is what we would now call contrastive models.

The basic idea is that we have this similarity score. So we process the modalities completely independently and then at the very end, we do some combination.

The most famous instance of that these days is CLIP. So CLIP from OpenAI. So it's again, exactly the same contrastive loss that we've seen, all these early approaches. It does negative sampling but then in batch. So you just have a batch. You have two things that are aligned. So like this is the first piece of text and the first image, they are aligned. So this is the right answer. And I just want to make sure that I rank this thing higher than all the alternatives. So it's a very simple idea.

Really nothing special about this architecture that was invented here, but what made this thing so cool was first of all, it was transformers and it was transformers all the way. So your text encoder would be a transformer and your image encoder would be a ViT image encoder, so also a transformer.

And it was trained on lots of web data. So Alec Radford is really a genius at creating very high-quality data sets. And he created 300 million image text pairs for this data set, trained a bigger model on it than people used to do, and then we got this amazing model out of it.

And so moving away from the words there to the sort of text that you would see on the internet. So the caption for an image on the web is not going to say dog or cat, it's going to say a photo of a cat doing something. So that means that you can do zero-shot label predictions where you have "a photo of the--" and then you need to figure out what the right label is for a given image using this kind of prompt.

So you can prompt vision and language models in very much the same way and do zero-shot generalization.

When it came out, acually, on ImageNet itself, it didn't really outperform ResNet. So you might think it's not all that special. But what really made it special was that it generalized much better to these other data sets. So this ResNet thing here is pretty terrible at some of these kind of adversarial versions of ImageNet, and CLIP is super robust to that. So it's just a way better image encoder in general.

So very quickly after CLIP, there was this paper from Google using ALIGN. It's the same idea, but then you just keep throwing more data and more compute at it and that often works much better. So that's what they found here, too.

1.8 billion image text pairs instead of 300 million gives you a better model. Surprise. So still very cool.

And what is really cool, I think, is that there's this organization called LAION, where they've started this open-source collective to create really high-quality datasets. And so the LAION, the initial dataset, was-- how many examples in the initial LAION? 400 million. So now there's a much bigger version of LAION that's even multilingual and it has 5 billion examples.

So Stable Diffusion was trained on sort of the English subset of this thing. And that's one of the reasons that it's so awesome is because it's just seen a ton of data and that really makes your system a lot better.

So if you're looking for the ultimate dataset to play around with our own ideas, if you have enough compute, obviously, then you should really look at this dataset.

'NLP > NLP_Stanford' 카테고리의 다른 글

Multimodal Deep Learning - 5. Evaluation / 6. Where to next? (0) 2024.06.30 Multimodal Deep Learning - 4. Multimodal foundation models (0) 2024.06.29 Multimodal Deep Learning - 1. Early models (0) 2024.06.29 Model Analysis & Explanation (0) 2024.06.29 Reinforcement Learning from Human Feedback (RLHF) (0) 2024.06.24