-

Reinforcement Learning from Human FeedbackResearch/NLP_CMU 2024. 7. 8. 07:29

※ Summaries after taking 「Advanced NLP - Carnegie Mellon University」 course

https://www.youtube.com/watch?v=s9yyH3RPhdM&list=PL8PYTP1V4I8DZprnWryM4nR8IZl1ZXDjg&index=11

https://phontron.com/class/anlp2024/assets/slides/anlp-11-distillation.pdf

reinforcement learning은 묘하게 Bayesian inference랑 닮았다..!

그나저나 이 엄청난 강의 퀄리티에 어울리지 않는 마이크로폰 퀄리티 어쩔 ㅠㅠㅠㅠ

What we want to do is we want to maximize the likelihood of predicting the next word in the reference given the previous words, which gives us the loss of the output given the input, where the input can be the prompt, the output can be the answer. But there's lots of problems with learning from maximum likelihood. I'm going to give three examples here, all of these are actually real problems that we need to be worried about.

So first one is that some mistakes are worse than others. In the end, we want good outputs and some mistaken predictions can be a bigger problem for the output being good.

From the point of view of maximum likelihood, all of these are just tokens and messing up one token is the same as messing up another token, so that's you know an issue.

Another problem is that the "gold standard" in maximum likelihood estimation can be bad. It can be not what you want and corpora are full of outputs that we wouldn't want a language model reproducing. For example, toxic comments on Reddit, disinformation. Another thing that a lot of people don't think about quite as much is a lot of the data online is from automatically generated nowadays. For example, from machine translation, a lot of the translations online are from 2016 Google translate when Google translate was a lot less good than it is now. So you have poor quality translations that were automatically generated.

A final problem is something called "exposure bias." Exposure bias means, MLE training doesn't consider the necessity for generation and it relies on gold standard context, so if we go back to the MLE equation, when we're calculating MLE, this y less than t is always correct. It's always a good output and so what the model does is, it learns to over rely on good outputs.

One example of a problem that this causes is, models tend to repeat themselves over and over again. For example, when you use some generation algorithms, and the reason why this happens is, because in a gold standard output, if a word has appeared previously, that word is more likely to happen next.

So like, if you say, "I am going to Pittsburgh," you're much more likely to say "Pittsburgh" again in the future, because you're talking about Pittsburgh topically as coherent. So what you get is, you get MLE trained models saying "I am going to Pittsburgh I am going to Pittsburgh I am going to Pittsburgh." ^^;

So "exposure bias" is that the model has never been expose to mistakes in the past and so it can't deal with them. So what this does is, if you have an alternative training algorithm, you can fix this by generating a whole bunch of outputs, scoring some of them poorly and penalizing the model for generating poor outouts. That can fix these problems as well.

(human이나 model이나 시행착오로 배우는 게 중요하구나! ㅎㅎㅎ 실패를 경험 삼아 발전하기..!)

How we measure how good an output is? There's different ways of doing this. First one is Objective assessment, so for some tasks, there's objectively a correct answer.

There's also Human subjective annotations, so you can ask humans to do annotation for you.

There's Machine prediction of human preferences, and there's also use in another system in a downstream task.

The way Objective assessment works is, you have an annotated correct answer and match agaist this, so like if you're solving math problems, answering objective questions. You can pick any arbitrary example. You can pick your classification example from text classification tasks, and even clearer example is, if you have math problems, there's objectively one answer to any math problem and there's no other answer that could be correct. So this makes your life easy if you're handling this type of problem.

But of course, there's many other types of problems we want to handle that don't have objective answers like this. So let's say we're handling a generation task where we don't have an objective answer.

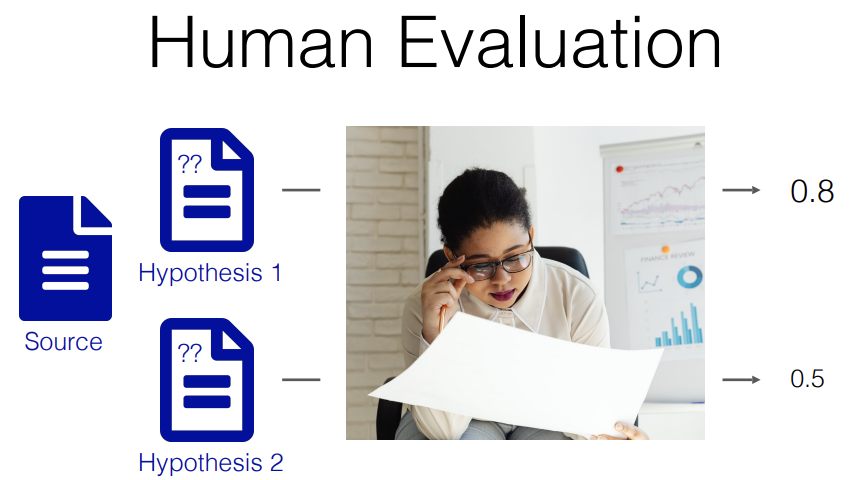

In this case, one of our gold standards is Human evaluation. So we might have a source input like a prompt or an input text for machine translation, we have one or several hypotheses, and we ask a human annotator to give a score for them or do some other annotation.

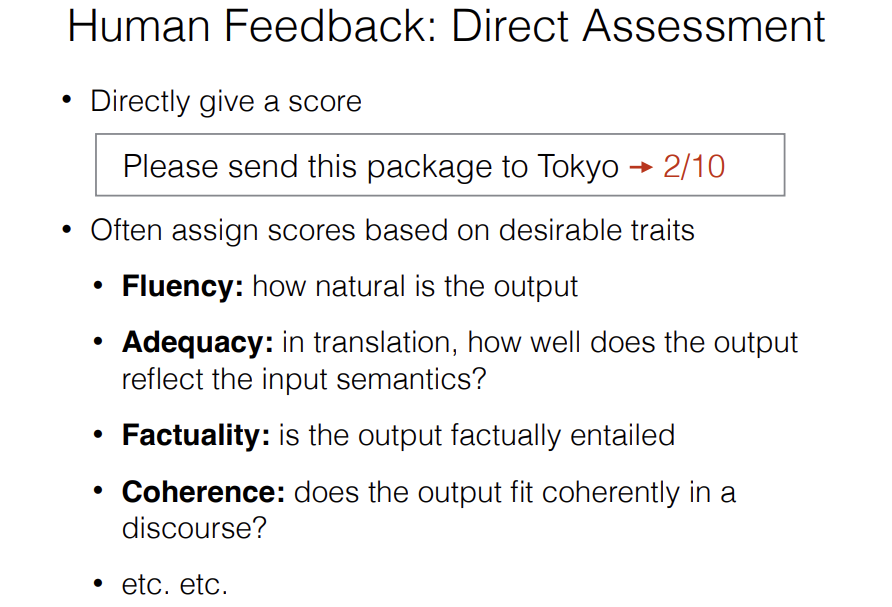

And the different varieties of annotation that we can give are something called "Direct assessment." It's give a score directly to how good the output is. It's kind of subjective. One of the difficulties of direct assessment is, giving a number like this is pretty difficult. If you don't have a very clear rubric and very skilled annotators, it's hard to get consistency between people when you do this.

So the advantage is it gives you an idea of how good things are overall, but the disadavantage is, it's more difficult to annotate and get consistency.

The next type of feedback is, Preference ratings. What you do is, you have two or more outputs from different models or different generations from an individual model, and you ask a human "which one is better?" like, "is one better than the other or are they tied?"

So this is a little bit of an easier task. It's easier to get people to annotate these things consistently. However, it has the disadvantage that, you can't really tell whether systems are really good or really bad. So let's say, you have a bunch of really bad systems that you're comparing with each other, you might find that one is better than the other, but that still doesn't mean it's ready to be deployed or if you have a bunch of really good systems, they're very similar to another but one is slightly more fluent than the other, you might still get a similar result and so that also makes it a little bit difficult to use practically in some ways.

One other problem with preference rankings is that, there's a limited number of things that humans can compare before they get really overwhelmed. If you say, I want to rate 15 or 20 systems how good they are with respect to each other, it's going to be impossible for humans to come up with a good preference rankings between them.

So the typical way around this which is also used in things like the chatbot Arena and other things like this is, to use ELO or TrueSkill rankings. And what these are is these are things that were created for the ranking of chess players or video game players or other things where they battle against each other in multiple matches, pair-wise, and then you put all of the wins and losses into these ranking algorithms and they give you a score about how good each of the players are.

Final variety of Human Feedback is Error Annotation. This can be useful for a number of reasons. The way it works is, you annotate individual errors within the outputs.

There's something for machine translation called "Multi-dimensional Quality Metrics", what they do is, they annotate spans in the output where each span in the output is given a severity ranking of the error and it's given a type of the error. There's about eight different types of errors.

So the advantage of this is, it gives you more fine-grained feedback. In that you can say "okay, this system has a lot of accuracy errors, this system has a lot of linguistic conventions errors," It also can be more consistent because, if you just say to people which output is better or what is the score of this output, people have trouble deciding about that because it's a more subjective evaluation, but if I say is this word correct, it's a little bit easier for people to do. So you can get more consistent annotations here.

The problem with this is, this can be very time-consuming.

These are three ways of collecting human feedback. And then there's an alternative which is automatic evaluation of outputs. And there's a bunch of different ways we can do this. The basic idea here is, we have a source, we have a couple hypotheses, and we have an automatic system that generates outputs like scores, and we optionally have a reference output. So the reference output is a human created gold standard output with respect to what the output should be in an ideal case.

The goal of automatic evaluation is, to predict human preferences or to predict what the human scores would be. Because still at this point, we mostly view what humans think of the output to be gold standard and this is called a variety of things depending on what field you're in. In machine translation and summarization, it's called "automatic evaluation", also a lot in dialogue. If you're talking about people from reinforcement learning or chatbots, a lot of people call it "reward model" because that specifically comes from the point of view of learning from this feedback, but essentially they're the same thing.

They're trying to predict how good an output is, and how much you should reward the model for producing that output.

There's a bunch of different methods to do this. I'm going to cover three paradigms for doing this.

The first one is Embedding-based evaluation. The way Embedding-based evaluation works is, it's unsupervised calculation based on embedding similarity between the output that the model generated and a reference output that you have created.

So we have a reference here that says "The weather is cold today" and we have a candidate that says "It is freezing today." So this is a reasonably good output and we run this through some embedding model. It was called BERTscore. Of course, you can run it through BERT, but it can be any embedding model that gives you embedding for each token in the sequence.

So there are five tokens in this sequence, four tokens in this sequence, you get five embeddings and then four embeddings. You calculate pair-wise cosine similarity between all of them and this gives you cosine similarity matrix and then you take the maximum similarity along either the rows or the columns.

Here because the rows correspond to tokens in the reference, how well you find something that is similar to each of the tokens in the reference is, like a recall based method. Because it's saying how many tokens in the reference have a good match in the output.

And then if you look at the columns, this is like a precision based metric, because it's saying how many of the things in the output have a similar match in the reference.

So you can calculate recall and precision over all of the tokens. And then feed this into something that looks like f-measure. And you can also use TF-IDF weighting to upweight low frequency words. Because low frequency words tend to be more content words.

This is one method that's pretty widely used. the BERTScore code base is also really nice and easy to use.

The way Regression-based evaluation works is, this is used in a supervised setting. What you have to do is, you have to calculate a whole bunch of actual human judgements and usually these judgements can either be direct assessment where you actually have a score or they can be pair-wise judgements.

And then if you have direct assessment, you use a regression-based loss, like minimum squared error, if you have pair-wise, you use a ranking-based loss that tries to upweight the ones that are higher scoring, downward the ones that are lower scoring.

One typical example of this is, COMET, which has been at least for a very long time the state-of-the-art machine translation evaluation, and the reason why it works so well is, because we have a bunch of evaluation for machine translation. They've been doing evaluation on machine translation systems for years and you can use that as lots of supervised training data. So you just take these evaluation data, you have human annotations, you have the output according to a model like COMET, you calculate the difference between them and you update model parameters.

The problem with this is, for a lot of tasks, we don't have lots of training data, so training these is a little bit less feasible.

Now recently what we have been moving into is, QA-based evaluation where we ask a language model how good the output is.

GEMBA is an one of the early examples of this for machine translation evaluation where they just ask GPT4 like "score the following translation from source language to target language with respect to the human preference on a continuous scale from 0 to 100 where the score of zero means no meaning preserved and the score of 100 means a perfect meaning in grammar."

You feed in the source, you feed in the human reference optionally if you have a human reference, and then you feed in the target. And you get a score.

So this works pretty well and this can give you better results especially if you have a strong language model.

The problem is, it's very unpredictable whether this is going to work well. And it's very dependent on the prompt that you're using. So right now a lot of people are using GPT4 without actually validating whether it does a good job at evaluation. And the results are all across the board. It can be anywhere from very very good to very very bad at evaluating particular tasks. So I would be at least a little bit suspicious of whether GPT4 is doing a good job evaluating for your task especially more complex tasks.

I would especially be suspicious if you're doing any of two following things. Number one, if you're comparing GPT4 or any model against itself in another model, because GPT4 really likes its own outputs and there are papers that demonstrate that GPT4 likes its own outputs more than others. ----> ????

Also if you're explicitly optimizing the outputs using RLHF, there is something called "good hearts law", which is anytime you start optimizing towards a metric, it becomes a bad metric and that also happens for GPT4 based evaluation. --------> ???? 이건 뭔가용..?

흠.. 모델이 자기자신의 output에 편향된다..?? 나만 궁금했던 게 아니구나. ㅎㅎ 질문이 나왔다. ㅎㅎ 덕분에 교수님의 설명을 들었다. ㅎㅎ 여전히 뭔가 시원하진 않지만..

I don't know if we actually have a very clear empirical evidence of why this is the case, but my hypothesis about this is, we would expect models to be more biased towards their own outputs and the reason why is because models are within their embeddings, they're encoding when they're in a high probability part of the space and when they're in a low probability part of the space. And the high probability part of the space is going to be associated with good outputs because when models are more sure of their outputs, they're more likely to be good just because that indicates that they're closer to the training data. So model probabilities are associated with good outputs.

Separately from that, I believe a model can identify when it's in a high probability segment of the space and when it's in a low probability segment of the space, and because of that, I expect that there are segments of the embedding space where it's more likely to answer yes about something being good or not. And those are going to be associated with high probability outputs as well.

And also models are more likely to generate outputs that are high probability according to their model by definition. So all three of those effects together would go into a model being biased supports its own outputs compared to outputs in another model.

Models output high probability things from their own probability space by definition, things that are high probability are associated with being good just because otherwise a model would be outputting garbage.

And the final thing which is more tenuous is, if the model is in a high probability segment of the space, it's more likely to output yes according to a question of it being good and that's probably true but I'm not 100% sure.

When I say, an evaluation metric is good or not, what do I mean by "being good or not" reward model or whatever else, the way we typically do this is, by doing something called "Meta-evaluation."

It's called meta-evaluation, because it's evaluation of evaluation. -_-;;

The way do this is, we have human scores and we have automatic scores and we usually calculate correlation between the scores. So typical ones are rank correlation like Pearson's correlation or Kendall.

So the more associated the automatic scores are with the human scores, the higher these correlations are going to be.

There's other things that you can calculate. So if you're trying to figure out whether a model matches human pairwise preferences, you can just calculate accuracy of pairwise preferences. You can also calculate the absolute error between the judgements.

So these are good things to do if you want to use an evaluation metric, but you aren't sure whether it's good or not.

All of the automatic evaluation methods that I talked about are trying to match human preferences but that's not the only thing that you necessarily want to do. The final thing that you might want to do is, use the model outputs in a downstream system, and see whether they are effective for that.

So there's two concepts of intrinsic evaluation and extrinsic evaluation. So intrinsic evaluation evaluate the quality of the output itself, so that would be like asking a human directly about how good is this output.

Extrinsic evaluation is evaluating output quality by its utility.

One example, if you can evaluate large language model summaries through question answering accuracy, so you can take the output of an LLM and feed it through a question answering model, and see whether you're able to answer questions based on this, that gives you a better idea of whether the summary incorporates requisite information.

But if you think about anything an LLM can be used for, usually it's part of a bigger system. So you can evaluate it as a part of that bigger system.

The problem with this is, it's a very indirect way of assessing things. So let's say, your QA model is just bad, how can you disentagle the effect of summary versus the QA model. That's not a trivial thing to do.

So ideally a combination of these two is practically the best way.

Now I'd like to talk about learning. The first thing I'll cover is Error and Risk. So the way we calculate Error is, we generate an output and we calculate its badness. So generating the output could be argmax, could be sampling, could be anything else. and we calculate its badness. This is defined as Error. What you want to do is, you want to minimize error because in the end, you're going to be deploying a system that just outputs, one thing and you're going to want to be as good a thing as possible.

But the problem with this is, there's no easy way to actually optimize this value in especially in a text generation. We can't easily minimize error because if you look at the surface of error at some point, you're going to have a non-differentiable part when you take the argmax or when you do sampling, you're not going to be able to do gradient based optimization. So what we do normally is, we instead calculate something called Risk.

What risk looks like is, it's expected error of the output. The expected error or the output includes a probability in the objective function and that probability is differentiable. So we can easily do gradient-based optimization through it.

The problem with this is, it's differentiable but for text generation for example, the sum is intractable, because we have a combinatorily large number of potential outputs. We can't take a sum over that many possibilities.

So minimum risk training tries to minimize risk, reinforcement learning also many of the models especially policy gradient models are trying to minimize risk as well.

When we want to optimize risk, what we do is, we sample in order to make this tractable. So a very simple way to minimize risk is, instead of summing over all of the possible outputs, we sum over a small number of possible outputs and we normalize to make this all add up to one. So this normalizer here is the sum over all of the probabilities that we have, and these samples can be created either using sampling or n best search.

The problem with that is, if you sample with temperature one, it gives you a lot of not very good outlets and so if you're sampling with temperature one, you'll be exploring a very large part of the space that actually isn't very good and so because of this, some other alternatives that you can use is, you can do endb search to find the best outputs or you can sample with a temperature that's not one and create a list of possible hypotheses and then normalize.

If you're sampling with not temperature one, you are potentially getting multiple outputs, you should try to de-duplicate or sample without replacement, because if you get multiple outputs, it messes up your equations.

Reinforcement learning is, learning where we have an environment X, ability to make actions A, and get a delayed reward R.

It might be that X is the conversational history up till this point and A could be a next token generation and then R is a reward we get in an "arbitrary time point." It might not be immediately after generating the next token, but it might be later and that's actually really important from the point of view of reinforcement learning.

X could be the compiler, it's probably the compiler and all of the surrounding code context and we would treat each token in the code to be an action A and then, R would be the reward after a long sequence of actions. It could be the reward from the compiler, it could be the reward from a code readability, it could be the reward from a speed execution. So one of the interesting things about R is, you can be really creative about how you form R which is not easy to do.

Why reinforcement learning in NLP? There's three answers. The first one is, you have a typical reinforcement learning scenario where you have a dialogue where you get lots of responses and then you get a reward at the end, so the thumbs up and thumbs down from humans is a very typical example of reinforcement learning because you get a delayed reward at some point in the dialogue when a human presses up or down.

Another actually more technical scenario where reinforcement learning has been used for a long time is call centers, so we've had dialogue systems for call centers and then if you complete a ticket purchase or you complete reserve a ticket without ever having to go to a human operator, you get a really big reward. If you have to go to the human operator, you get a smaller reward and if the person yells at you and hangs up then, you get a really negative reward. So this is kind of the typical example reinforcement learning has been used for a long time there.

Another example is, if you have latent variables, chain of thought, where you decide the latent variable and then get a reward, you get a reward based on how those latent variables affect the output, so this is another example. Because the chain of thought itself might not actually be good, you might have a bad chain of thought and still get the correct answer, so you don't actually know for sure that a chain of thought that was automatically generated is good or not, but that makes it a reinforcement learning problem.

Another thing is, you might have a sequence level evaluation metric, so that you can't optimize the evaluation metric without first generating the whole sequence.

So these are three scenarios where you can use reinforcement planning.

Supervised MLE in the context of reinforcement learning, it is also called "imitation learning." Because you're learning how to perform actions by imitating a teacher. Imitation learning is not just supervised MLE, there's also other varieties of imitation learning, but this is one variety of imitation learning.

Self-training, the idea is that, you sample or argmax according to the current model. So you have your current model and you get a sample from it. And then you use the sample or samples to maximize likelihood. So instead of doing maximum likelihood with respect to the gold standard output, you're doing it with respect to your own output.

Why this is not a good idea? If you don't have any access or any notion well it's good, this will be optimizing towards good outputs and bad outputs. So your model might be outputing bad outputs and you're just reinforcing the errors.

Nonetheless, self-training actually improves your accuracy somewhat in some cases like for example, if your model is right more often than not, optimizing towards the more often than not, due to the implicit regularization that models have and early stopping and other things like that it can actually move you in the right direction and improve accuracy.

So there are alternatives to this that further improve accuracy. For example, if you have multiple models and you only generate sentences where the models agree then this can improve your overall accuracy further. This is called co-training.

So self-training is useful, but there are better alternatives if you can get a reward function. So the simplest variety of this is something called Policy Gradient or REINFORCE. What this does is, this adds a term that scales the loss by the reward. So if you can get a reward for each output, instead of doing self-training entirely by itself, you multiply it by a reward and this allows you to increase the likelihood of things that get a high reward, decrease the likelihood of things that get a low reward.

Under what conditions is this equal to MLE? This objective is equivalent to the MLE objective when you're using a 0 or 1 loss where you're using an evaluation function that gives you a score of one when it's exact match and zero when it's not exact match. That also demonstrates that this can be more flexible because you can have other rewards that are not just one and zero for exact match. You can use things that give you partial credit.

One problem with these methods is, how do we know which action led to the reward? The best scenario is after each action you get a reward. So after each token that you generated, you get a thumbs up or thumbs down from the user about whether they like that token or not, and how much happier they are after you generated that token than they were before you generated that token.

The problem with this is that, that's completely infeasible, like every time after you use ChatGPT, you're not going to press thumbs up and thumbs down after each token. So in reality, what we get is, usually we get it at the end of roll out of many many different actions. And we're not sure which action is responsible for giving us the reward.

So there's a few typical ways of dealing with this. The most typical way of dealing with this right now is just not dealing with it, and just hoping that your optimization algorithm internally will be able to do credit assignment. So what that entails is, you give an equal reward for each token in the output, other ways that you can deal with it, you can assign decaying rewards from future events.

The very simplest thing that you can do is, you can just sample and optimize the subjective function, it's not hard to implement it all as long as you have some source of reward signal. But the problem is, reinforcement learning can be very unstable and it's hard to get it to work properly, if you don't do some additional tricks.

There's a lot of reasons why it's unstable. The first reason is you're sampling individual output and calculating based on the individual sampled outout and then there's an infinity of other outputs that you could be optimizing over. For MLE, this is not a problem because for MLE, you're always contrasting the gold standard output to all of the other outputs in the space and you're saying, I want to upweight the gold standard output and down all the other ones. But for reinforcement learning, you only have a single sampled output that output might be wrong and that's a source of instability. This is particularly a problem when using bigger output spaces like all of the words in the vocabulary.

Anothere problem is, anytime you start using negative rewards, because if you start using negative rewards, those rewards will be downweighting the probability of a particular output sequence and that might be a good idea maybe you're getting a toxic output or something like that and you want to down it but, at the same time, in addition to that toxic output, there's a combinatorial number of completely nonsense outputs that aren't even English and so you can start diverge from the natural language modeling distribution that you have before. So this is a big problem.

So a number of strategies can be used to stabilize. The first one is, pre-training with MLE. So you start with a pre-trained model and then, switch over to RL after you finished pre-training the model. So this makes a lot of sense. If you are training a language model, but it does only work in scenarios where you can run MLE and so it doesn't work if you're predicting like latent variables that aren't included in the original space. It also doesn't work in a setting where like you want to learn a chatbot for customer service for a company that has a product catalog that the language model has never seen before and so if the language model has no information about the product catalog, you don't provide it through RAG or something like that, it's going to have to explore infinitely large space. And you're never going to converge with your language modeling objectives. So you need to basically be able to create at least some supervised training data to train with MLE.

But I'm assuming that almost everybody is going to do some sort of pre-training with MLE.

The next step that people use in reinforcement learning that's really important to stabilize is, regularization to an existing model. You have an exsting model and you want to prevent it from getting too far away.

And the reeson why you want to do this is, let's say you start assigning a negative reward to toxic utterances for example, if your model stops being a language model whatsoever, that's bad idea, so you want to keep it as a language model, keep it close enough to still being a competent language model while removing the toxic utterances.

So there's a number of methods that people use to do this.

The most prominent ones are KL regularization. The way this works is, you have two terms. The first term is a term that improves your reward, so you have your old model where your old model is creating probability and then you have the probability assigned by your new model and then you have your reward signal here, so this is improving the log odds or improving the odds of getting a good reward for high reward sequences. Separately from this, you have this KL regularization term and this KL regularization term is keeping the probability distribution of your new model similar to the probability distribution of your old model, and this beta parameter, you can increase it or decrease it based on how similar you want to keep the model.

Another method that people use is something called proximal policy optimization or PPO. This is a method that is based on clipping the outputs. We define this ratio here so this ratio is equivalent to this here, so it's the amount that the new model up weights high reward sequences and so we hve the same thing that we had above. But here we have a clipped version of this, what we do is we clip this ratio to be within a certain range of the original ratio. What this is doing is, this is forcing the model not reward large jumps in the space. By taking the minimum of this, you're encouraging the models keep examining the space where you don't diverge much from the original model and if the space where the original model was in is better than the new space that your model move back towards the original model. So if you started learning a model that looked like it was optimizing your reward but then suddenly the model went off the rails and it starts generating completely nonsense outputs that get really bad reward, this will push it back towards the original policy. That's the basic idea behind PPO.

Adding a baseline. The basic idea is that, you have expectations about your reward for a particular sentence. So the basic idea is can we predict a priori how difficult this example is? and then adjust our reward based on that.

You have a baseline model that predicts this and you adjust appropriately.

An extreme example of creating a baseline is contrasting pairwise examples or contrasting different outputs for the same input. You can easily learn directly from pairwise Human preferences which can provide more stability because, one is better than the other, so you can be sure that you're upweighting a better one and down weighting a worse one.

This is the idea behind DPO which is a recently pretty popular model. The way DPO works is it calculates this ratio of the probability of the new model to the old model, but it upweights this probability for a good output and it downweights this probability for a bad output.

So here we have our better output, our worse outputs and it's learning to upweight the probability and downweight probability accordingly.

You can notice that DPO is very similar to PPO that it's using these ratios, but the disadvantage of this is, you obviously require pairwise judgments and you can't learn a model if you don't have these pairwise judgments.

So Beta term is a normalization term. It's a hyper-parameter.

'Research > NLP_CMU' 카테고리의 다른 글

Large Language Models (0) 2024.07.09 Ensembling & Mixture of Experts (0) 2024.07.08 Quantization, Pruning, Distillation (0) 2024.07.07 Long-context Transformers (0) 2024.07.07 Retrieval & RAG (0) 2024.07.06